What Google core updates are and how to recover after a drop

Every day, or almost every day, Google rolls out one or more changes aimed at improving the quality of its search results. Most of these changes are barely visible to users, but they serve precisely to make the search engine incrementally more effective: to give just one figure, in 2020 there were more than 4,500 search improvements in total. And then there are Google’s dreaded broad core updates: significant, far-reaching changes to Google’s search algorithms and systems, released periodically throughout the year and usually announced officially, so that site owners, content producers and SEOs have the information they need to understand any changes they notice in ranking and traffic.

What Google broad core updates are

A broad core update is a change to the search engine’s core algorithm, that is, the core that powers Google’s ‘main’ systems and, more generally, search ranking.

As we have said elsewhere, Google’s core algorithm is actually a collection of algorithms that interpret signals from web pages in order to rank the content that best answers a search query. We know practically nothing about Google’s secret ranking formula and have only vague information about the 200 or so ranking factors or about some more specific algorithms, but from the (little) Google has explained, we can say that broad core updates affect this system as a whole.

From a technical point of view, then, broad core updates are ‘substantial improvements to Google’s general ranking processes’; they are released several times a year and are designed to increase the overall relevance of search results and make them more useful for everyone. They are ‘broad’ because they do not target anything specific but aim to improve Google’s systems in general, and they apply to all languages and versions of Google at the same time, because their rollout is global.

What Google’s major updates are for

More precisely, broad core algorithm updates are changes made to improve Search in general and ‘keep pace with the changing nature of the Web’. They are designed to ensure that, overall, the search engine presents relevant and authoritative content to the people searching, and they may also affect Google Discover.

According to an interesting insight by Ryan Jones, with broad core updates Google changes the weight and importance of certain ranking factors, affecting SERPs to a greater or lesser extent, without (obviously) disclosing any further details, so as not to reveal the algorithm’s secrets. Returning to the search engine’s official guidance, Danny Sullivan explained that Google announces and confirms core updates precisely because they generally ‘have a noteworthy impact and, during their implementation, some sites may experience performance drops or improvements’.

Because they are not intended to improve specific areas of Search but are general and broad, core updates serve to improve search results globally and, to use an expression from the announcement of one such update, to benefit pages that were ‘previously undervalued’, an element worth bearing in mind when optimising and recovering affected sites.

In addition, several analysts have noted an (indirect) connection between core updates and the work of quality raters, or rather the guidelines for quality raters and their various revisions. Jones again hypothesises, for instance, that the release of an update could be the culmination of a complex process: it starts with running a new training set through Google’s machine learning ranking model, passes through the quality raters applying this new set (they identify the most relevant result sets according to the new criteria), and ends with changes in how results are generated. This does not imply a direct relationship between quality raters and ranking, however: the work of these external contributors is limited to evaluating how relevant results are to the query and how well the answers meet the instructions they have been given, not to evaluating one site against others.

The relationship between core update and other updates

To clarify the context further, it is important to understand that broad core updates are only a tiny fraction (usually two, or at most three, per year) of the thousands of changes Google makes to its search systems every year.

As we mentioned, in 2020 Google made 4,500 changes to Search, an average of more than 12 per day, and the number keeps growing (there were 3,200 in 2018 and fewer than 500 in 2010!). Google also said it ran more than 600,000 experiments in 2020 alone, which can also affect ranking, traffic and SERP visibility.

A few years ago, Google’s Gary Illyes explained that most of Google’s algorithms and changes do not target particular pages or topics but ‘work in unison to generate ranking scores’: Illyes called them ‘baby algorithms’, small algorithms, of which there would be millions, that look for signals in pages or content.

How the implementation of a broad core update works

Regular readers of our blog should by now be familiar with the format Google uses to inform the SEO community of the launch of a broad core update, a standard practice first adopted for the March 2018 broad core algorithm update.

The first announcement arrives on Big G’s official channels (website or social media) a few hours before the release, followed by the announcement that the update has officially started and, about 15 days later, the message that the rollout is complete; only rarely are (a few) additional details shared during the release period.

Also standard by now is the naming of core updates, which since the March 2019 Core Update has followed a simple Month + Year + Core Update scheme to ‘avoid confusion and simply and clearly say what kind of update it was and when it happened’, as Danny Sullivan explained at the time.

Google confirms broad core updates because they ‘generally produce some broadly noticeable effects’, i.e. ‘some sites may notice dips or gains’ during the rollout. By clarifying the context, Google gives those who experience such changes, and traffic drops in particular, the chance to understand what is happening and plan recovery actions, without neglecting Google’s own guidance on the matter, which we will look at shortly.

In practice, some broad core updates roll out quickly, while others take up to 14 days to complete. Their impact on SERPs is generally spread out: it is felt over several days within this window (and sometimes even afterwards), not only on the day an update is announced or confirmed. This makes data analysis more complex, which is why it is useful to check tools such as our SERP Observatory, which keep a constant eye on search engine activity.

The difference between broad core updates and other major updates

Broad core updates are therefore different from Google’s other algorithmic updates, whether baby algorithms or major updates that directly affect ranking or, more precisely, a specific aspect of the search engine’s ranking system.

Many of the famous updates we hear about, from Penguin to Panda, from Pigeon to Fred, were implemented to solve specific errors or problems in Google’s algorithms: for instance, Penguin dealt with link spam, Panda targeted thin and low-quality content, and Pigeon reworked local search results. More recent examples include the Page Experience Update, which introduced Core Web Vitals among the ranking factors, and the three product reviews updates.

The defining characteristic of these updates is clear: they have a precise purpose and address only a specific aspect of the algorithm. When communicating about them, Google explains what it is trying to achieve or prevent with each update, giving webmasters, site owners and SEOs the chance to change their strategy and correct any bad practices on their pages.

Broad core updates, by contrast, do not evaluate a single ranking factor but the entire evaluation system (or at least a large part of it), even defining new criteria for judging page quality in relation to parameters such as search intent, relevance, the expertise, authoritativeness and trustworthiness of the content offered (the famous E-A-T triad), overall user experience, mobile experience and so on.

For this very reason, it is difficult to pinpoint the cause of ranking drops found after a major Google update, and indeed, according to the search engine’s own guidance, it may even be ‘useless’ to try to correct anything.

In short, a broad core update is not a sanction or penalty: a site that loses ranking, traffic and visibility may not have done anything wrong, and there may be nothing to correct.

It is the context that has changed: Google has updated its search results based on a new set of ranking ‘rules’ which, although not designed to affect specific pages or sites, may produce noticeable changes in site performance. And all this is on top of the natural volatility of SERPs and rankings, which can also be affected by competitor activity and other variables such as seasonality, news or events affecting searches, and more.

Core Updates, how to respond to Google updates

It is clear, however, that the most natural human reaction, especially when one’s own site is losing ground, is to look for a way to turn things around, and this is why Google has also provided various pointers for sites affected by an update.

The premise is that ‘there may be nothing to fix’ on the pages of the site, but above all that one should not ‘try to fix what is not broken’ by focusing on the wrong areas.

Google’s message, therefore, is that there is ‘no miracle intervention or fix’ that can save pages, only a set of useful guidelines that any site wanting to compete in search and do SEO should keep in mind and apply, and which can help make its pages more resilient to algorithm updates and avoid sudden swings in the SERPs.

How to react to a Google update

Broad core updates are designed to ensure that, overall, the search engine achieves its goal of presenting relevant and authoritative content to users, and during their rollout they can have a considerable effect on SERPs, with some sites seeing drops and others gains.

According to Google, ‘there is nothing wrong with pages that may perform less well after a core update’: declines after an update are not due to violations of Google’s webmaster guidelines nor are they due to manual or algorithmic penalties, because a core update is not intended to affect specific pages or sites. Instead, the changes are about improving the way Google’s systems evaluate content in general and ‘may make certain pages that were previously undervalued perform better’.

A practical example, the ranking of the best films

Google’s guide also gives an example of how a core update works: imagine that ‘we made in 2015 a list of the 100 best films ever made’ and now, a few years later, we want to update it. Obviously there will be changes, because ‘some new and wonderful films that never existed before will now be candidates for inclusion, or we may even re-evaluate some films and realise that they deserved a higher place on the list than before’. So the list will change, and films that were previously at the top and have moved down have not become ‘bad’, nor have they done anything wrong: there are ‘simply other films deserving of a higher place than theirs’.

Focus on creating quality content

Having concluded the film analogy, the guide turns to a hot topic for everyone who works online, namely writing and content creation: faced with a drop, it is natural to want to ‘do something’, and the advice is first to check whether the site offers the best possible content, because ‘this is what our algorithms try to reward’.

New questions to evaluate the quality of sites

The starting point for checking whether you are on the right track is Google’s 23 questions for building high-quality sites, reinterpreted in light of the changes of recent years and divided into four categories: ‘content and quality’, ‘expertise’ (the E of the E-A-T paradigm), ‘presentation and production’ (writing well) and finally ‘comparison’, i.e. doing competitor analysis and comparing your pages with those ahead of you in the SERPs.

Content and quality questions

- Does the content provide original information, reports, research or analysis?

- Does the content provide a substantial, complete and comprehensive description of the topic?

- Does the content provide in-depth analysis or interesting information that goes beyond the obvious?

- If the content draws on other sources, does it avoid simply copying or rewriting them and instead provide substantial added value and originality?

- Does the title and/or H1 of the page provide a descriptive and useful summary of the content?

- Does the title and/or H1 of the page avoid being exaggerated or shocking?

- Is this the type of page you would like to bookmark, share with a friend or recommend?

- Would you expect to see this content in or referenced by a printed magazine, encyclopaedia or book?

Expertise questions

- Does the content present information in a way that makes you want to trust it, such as clear sourcing, evidence of the expertise involved, and background about the author or the site that publishes it (e.g. links to an author page or a site’s About page)?

- If you research the site that published the content, do you have the impression that it is well trusted or widely recognised as an authority on its subject?

- Was this content written by an expert or enthusiast who demonstrates knowledge of the topic?

- Is the content free of easily verifiable factual errors?

- Would you feel comfortable trusting this content for matters related to your money or your life?

Presentation and production questions

- Is the content free of spelling mistakes or stylistic problems?

- Is the content well written or does it look sloppy or rushed?

- Is the content mass-produced, outsourced to a large number of creators or spread over a vast network of sites, so that individual pages or sites do not receive the same attention or care?

- Does the content contain an excessive number of ads that distract or interfere with the main content?

- Is the content displayed well for mobile devices?

Comparison questions

- Does the content provide substantial value compared to other pages in search results?

- Does the content seem to serve the genuine interests of site visitors or does it seem to exist only to try to rank well in search engines?

Besides answering these questions about our own site, Google suggests asking for ‘honest evaluations from others we trust who are not affiliated with the site’. To study declines in traffic and keywords, an audit may also be needed to identify which pages and which types of searches were most affected, and then to analyse those results carefully in light of the questions above.
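The page-level part of that audit can be sketched in a few lines of Python. This is a minimal, hypothetical example: it assumes you have already exported clicks per page for two equally long windows, one before the update and one after the rollout ended (for instance from a Search Console performance report), and loaded them into the illustrative `PageTraffic` records below. The names `PageTraffic` and `rank_affected_pages` and the `min_clicks_before` threshold are our own assumptions, not part of any Google tool.

```python
from dataclasses import dataclass


@dataclass
class PageTraffic:
    # Hypothetical record for one page, built from your own analytics export.
    page: str           # URL or path of the page
    clicks_before: int  # clicks in the window before the update
    clicks_after: int   # clicks in an equally long window after the rollout


def rank_affected_pages(rows, min_clicks_before=50):
    """Return (page, click_change, pct_change) tuples, largest losses first.

    Pages with little or no pre-update traffic are skipped: small numbers make
    percentage changes noisy, and zero traffic has no meaningful percentage.
    """
    results = []
    for row in rows:
        if row.clicks_before == 0 or row.clicks_before < min_clicks_before:
            continue
        change = row.clicks_after - row.clicks_before
        pct_change = 100.0 * change / row.clicks_before
        results.append((row.page, change, round(pct_change, 1)))
    # An ascending sort on the absolute change puts the biggest drops first.
    results.sort(key=lambda r: r[1])
    return results


rows = [
    PageTraffic("/guide-a", 1200, 700),
    PageTraffic("/guide-b", 400, 420),
    PageTraffic("/news-c", 30, 5),  # below the threshold, so it is skipped
]
print(rank_affected_pages(rows))
# [('/guide-a', -500, -41.7), ('/guide-b', 20, 5.0)]
```

Comparing windows of the same length, ideally covering the same weekdays, helps limit the effect of seasonality; the same approach can be applied to queries instead of pages to see which types of searches lost the most.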

Understanding the evaluations of Google quality raters

Another source of guidance for building sites that perform well regardless of updates is Google’s guidelines for quality raters, bearing in mind (once again) that the information provided by raters is not used directly in ranking algorithms but serves as a useful indication of whether the systems are working well, just as ‘a restaurant does with customer feedback’.

That said, understanding how raters are taught to assess good content could help improve a site’s content and potentially its search engine rankings.

Time to recover from slumps after updates

Google’s latest advice concerns the recovery and optimisation work that can be done on the site, and in particular the answer to the frequently asked question “how long does it take to recover if I improve content?”.

Broad core updates tend to be released several times a year, so content hit by one update may not recover, even after the right improvements, until the next one.

That said, Google is constantly working on its search algorithms and also releases smaller core updates that are not announced because they are ‘generally not very noticeable’; when these are released, they may still bring a recovery for sites that have made valid improvements.

No guarantee of traffic recovery and ranking

It is important to stress that ‘improvements made by site owners are not a guarantee of recovery, nor do pages have a static or guaranteed position in Google’s search results’: the only rule that applies is that ‘more deserving content will continue to rank well with our systems’.

Another important clarification concerns the way the search engine works, because ‘Google does not understand content the way humans do’, and therefore looks for signals about content and tries to understand how these are related to the way humans assess relevance. “The way pages link together is a well-known signal we use,” Google admits, “but we use many others, which we don’t disclose to help protect the integrity of our results.”

Google’s update: the only hope is to improve content

Google’s guidance therefore offers a good basis for reacting to the consequences of a major update, along with an invitation not to panic. The main advice is precisely not to rush into substantial changes that overturn the site in response to declines caused by updates, because fluctuations can be continuous and there is no certainty of recovering lost traffic anyway.

Better, then, to think about the bigger picture: check the site’s content, verify that it meets Google’s quality requirements and improve it where necessary, while keeping in mind that sometimes no action at all may be needed to climb back up the rankings, if Google (with a new update) again considers the page worthy of attention and favour.

Google update, suggestions to recover rankings and traffic

Further practical advice on recovering from a collapse after an update has come over time from other Googlers, in particular the ubiquitous John Mueller. During a Hangout on YouTube, he answered a user whose site had collapsed after a Google algorithm update and who, having started “working on quality content”, asked “how many months should I wait to see an upturn and recover”.

Mueller immediately reiterates a concept we have already covered: a core update is not a penalty against any particular site or type of site, and drops after updates do not mean that Google’s algorithm ‘is saying that that site is of bad quality’. Although the consequences look identical (loss of SERP positions and thus of organic traffic), core updates and penalties are substantially different, and Google is keen to stress this.

A penalty is a sanction Google applies to a site after a violation of the Webmaster Guidelines, and it is communicated through a manual action notice in Search Console. Declines after algorithm updates, on the other hand, are part of the ‘normal’ fluctuations in SERPs, and no notes or notices officially report them.

More importantly, a penalty follows an error on the publisher’s part: a violation or behaviour that does not comply with Google’s rules (spam content or unnatural links, for instance). A drop after an update, by contrast, only means that the search engine has reworked its rankings and decided that other sites offer better answers to users.

With its updates Google perfects its results and answers

John Mueller makes it very clear: the reason why sites lose ranking is that Google’s new algorithm has decided that some sites are more relevant. Basically, with the updates Google evolves its ranking system on the basis of new criteria, which serve to determine which pages are more relevant to the queries.

So it is not a matter of finding a fault or problem in the site that dropped: ‘it is not a matter of doing something wrong’ and fixing it (and then, as the Search Advocate adds, ‘communicating it to Google, which recognises the correction and returns the site to its previous position’), but of the search engine reworking its own analysis and calculations. In practice, Google determines that those pages are not as relevant as it originally thought, and such reassessments may happen several times.

Core update, what to do when the site loses traffic and ranking

A further contribution comes from Roger Montti on Search Engine Journal, who in a guide to ‘core algorithm update recovery’ discusses the characteristics of Google’s broad core updates and says that, in his experience, losses after algorithm updates fall into at least two broad categories.

The first is a simple drop in rankings for certain queries: this happens when Google decides to reward certain sites by raising their SERP positions, which pushes down pages that previously ranked high. Then there are complex situations, in which a site loses its rankings completely; these point to broader problems that need to be addressed by optimising the whole project.

Time needed to recover

The time needed to recover obviously depends on the type of problem, but there is good news from John Mueller: Google’s algorithm is constantly being updated, so improvements made to web pages can yield results in the short to medium term, without waiting for the next broad core update.

Specifically, the Googler explains that ‘on the one hand we have the broad core updates, which are sort of big changes in our algorithm, and on the other hand we have a lot of little things that keep changing over time. The whole Internet is constantly evolving and so Google’s search results change substantially from day to day and similarly they can improve from day to day’.

Therefore, when significant improvements are made to a website that has been negatively affected by an update, it might be possible ‘to see these kinds of subtle changes over a period of time, because it’s not a matter of waiting for a specific update to see the effects of the interventions’.

A drop after updates does not mean that the site is bad

Concluding his answer, Mueller reassures: losing rankings after an update does not mean that there is something wrong (‘bad’ is the word he uses) with the site, because these changes are general and do not target specific sites.

Where to intervene to recover positions

Despite the premises and recommendations, however, let’s face it: if our site collapses after a broad core update we want to roll up our sleeves and try to turn it around as quickly as possible!

In fact, there are some more or less complex actions we can take in quick response to a ranking collapse: for example, we can start optimising page content by updating or refreshing it, or check technical aspects such as user experience, speed, and general and mobile performance.

Moreover, given John’s insistence on the term ‘relevance’, it is a good idea to check how the site measures up against this criterion by studying the new SERPs and, above all, examining competitors and the pages that have gained rankings. The goal is to understand which content best matches the search intent of the query: this is ultimately the benchmark for our work, and the key with which Google tries to ensure that users complete their search journey satisfactorily.
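A simple way to start that comparison is to put the results for a query before and after the update side by side and list the URLs that moved up. The sketch below assumes you have recorded the ordered list of result URLs for the same query on two dates (by hand or from a rank tracker export, for example); the function `serp_movers` and the sample URLs are hypothetical.

```python
from typing import Optional


def serp_movers(before: list[str], after: list[str]) -> list[tuple[str, Optional[int], int]]:
    """Compare two ordered result lists for the same query.

    Returns (url, old_position, new_position) for every URL that ranks higher
    after the update than before, including new entries, whose old_position
    is None. Positions are 1-based, as in the SERP.
    """
    old_positions = {url: pos for pos, url in enumerate(before, start=1)}
    movers = []
    for new_pos, url in enumerate(after, start=1):
        old_pos = old_positions.get(url)
        if old_pos is None or new_pos < old_pos:
            movers.append((url, old_pos, new_pos))
    return movers


before = ["a.com/x", "b.com/y", "c.com/z"]
after = ["c.com/z", "a.com/x", "d.com/w"]
print(serp_movers(before, after))
# [('c.com/z', 3, 1), ('d.com/w', None, 3)]
```

The URLs it returns are the pages worth studying closely for how they answer the query’s intent; pages that slipped or held their position are deliberately left out.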

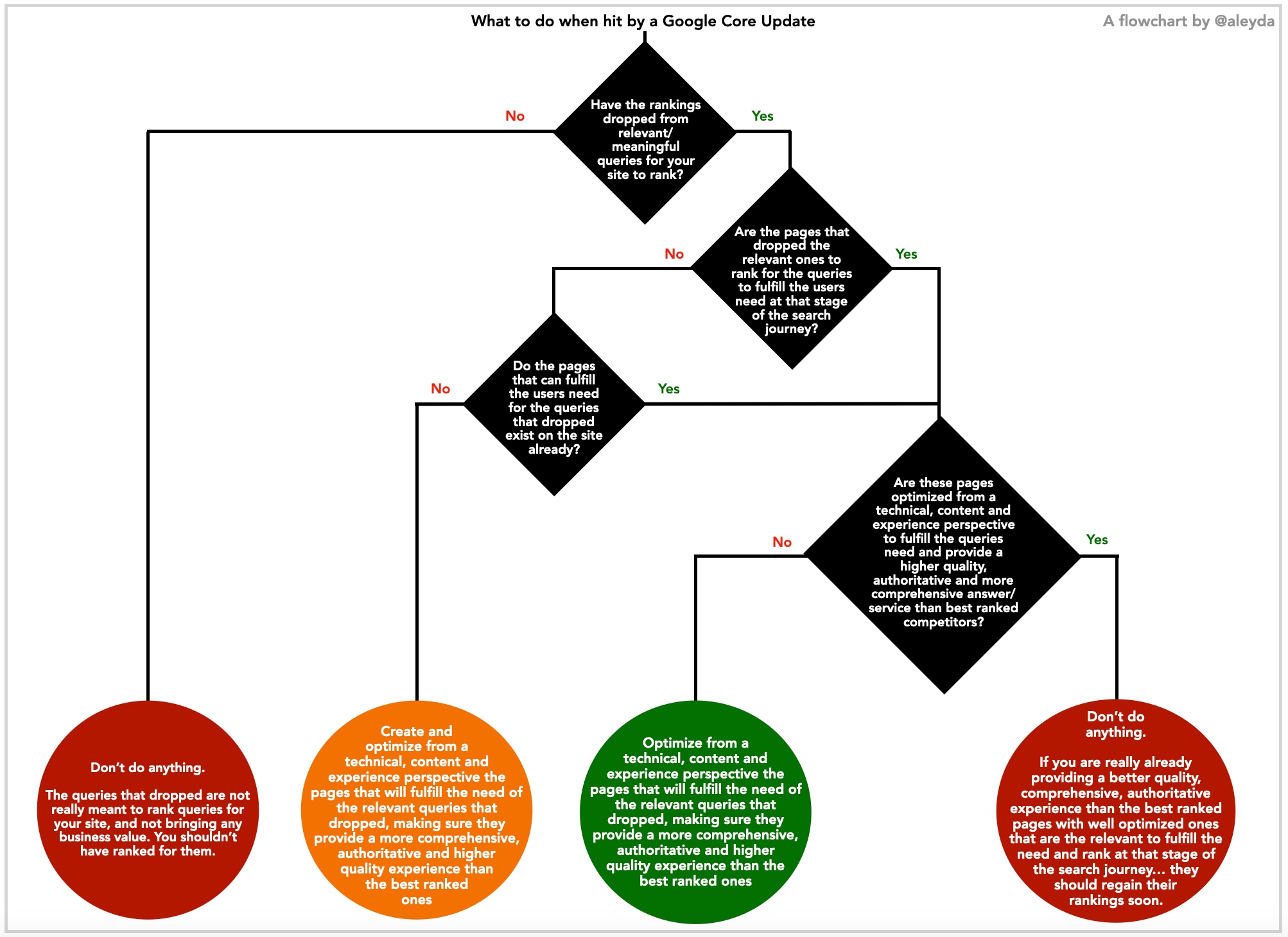

Ultimately, in order to understand what to do if our site has been hit by a Google Core Update, we can refer to this scheme devised by Aleyda Solis (who gave us permission to use it!), which takes us along the path that is closest to our experience and our specific case!