Sitemap: what it is, what it is for, how to create and manage it

We can think of it as a real map that helps search engines find their way around our website, locating (and analyzing) the URLs it lists easily and efficiently. The sitemap is a crucial element in building a positive dialogue between our site and Googlebot, above all for getting the pages that matter to us discovered. Although it is less visible than other components of the site, its role is vital. In this guide we look at what a sitemap is, how to create one, why it can be useful to communicate it to Google and other search engines, and how it can contribute to a site's long-term success by supporting correct indexing of its content.

What is a sitemap?

A sitemap is a file that lists the URLs of a site, organized according to a hierarchy set when the file is created. It can also carry precise details about each page, such as the date of the last update, how often it changes, and any versions of the page in other languages.

The definition is therefore easy to grasp: this document is literally a map of the site, useful to search engines and, in some cases, to users as well.

Originally, in fact, its purpose was to make navigation easier for users, like a real map. Its usefulness also extends to crawling and indexing by search engine crawlers, which can understand the structure of the site sooner and better thanks to the information they find about the relationships between pages, and so explore its content more easily and identify it for indexing.

More specifically, this file signals which URLs of our site we want Google to crawl, and it can provide information about newly created or modified URLs. This makes it easier for pages to be included in the Index and in the SERPs, especially for complex or large sites.

The meaning of the sitemap

So if we ask ourselves "what is a sitemap", the answer is that it is a document, generally in XML format, that tells crawlers which pages exist on the site, which should be given priority during crawling, and how often those pages are updated. Providing a sitemap to search engines helps ensure that all the relevant content on our site is visited regularly, without some pages remaining hidden.

There are various types of sitemap, but the best known and most widely used is without doubt the XML sitemap, which is meant exclusively for search engines. We can usually view this type of sitemap by visiting the site's URL followed by "/sitemap.xml". For example, to find the sitemap of a specific site we can try appending this suffix, or a common variant of it, to the root address, as in https://www.seozoom.it/sitemap_index.xml.
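As a minimal sketch of the lookup just described, the Python snippet below builds the addresses where a sitemap is conventionally published. The list of file names is an assumption based on the two suffixes mentioned in the text, not an official standard, and the function name is purely illustrative.

```python
from urllib.parse import urljoin

# File names taken from the examples in the text; an assumption, not a standard.
COMMON_SITEMAP_PATHS = ["sitemap.xml", "sitemap_index.xml"]

def candidate_sitemap_urls(site_root: str) -> list[str]:
    """Return the URLs where a sitemap is conventionally published."""
    # Make sure the root ends with a slash so urljoin appends the file name.
    root = site_root if site_root.endswith("/") else site_root + "/"
    return [urljoin(root, path) for path in COMMON_SITEMAP_PATHS]

for url in candidate_sitemap_urls("https://www.seozoom.it"):
    print(url)
```

The sketch only builds the candidate addresses; whether a file actually exists at any of them still has to be checked in a browser or with an HTTP client.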

In parallel, there are also HTML sitemaps, which are designed more for the end user. They present the pages of the site as a list, which is useful on very large sites where navigation can become confusing. Today, however, they are used less, and the XML sitemap matters more for SEO purposes.

So, beyond its technical function, the strategic function of the sitemap is to be a central tool for improving our site's digital visibility, helping ensure that all pages, or at least the most relevant ones, are "read" by search engines.

What is a sitemap for, and why does it matter for SEO?

The sitemap is a fundamental point of contact between our website and search engines. We should not think of it simplistically as a "list of pages", but rather as an active tool for optimizing the flow of information between our site and search engines, useful for consolidating our online presence.

With access to this document, a search engine crawler such as Googlebot can crawl the site more efficiently, because it has an overview of the available content, with indications of which resources exist and how to reach them.

Generally speaking, web crawlers manage to find most content if the pages of a site are linked correctly. Using a map, however, is a reliable way to let search engines understand the entire structure of the site more quickly and precisely.

The main reason the sitemap matters is that it facilitates the indexing of our pages, making it much simpler for Google and other search engines to crawl all available content. When we create a sitemap, we provide an organized guide that lets crawlers go through the site's architecture and see clearly which pages exist, which should have priority, and how often they are updated.

The most evident benefit of an SEO sitemap is precisely that it helps make sure no page remains "invisible" to search engines. This is particularly useful for large sites or those with a complex URL structure, where some pages could be hard for Google's bots to discover.

Another crucial aspect of the sitemap is that it lets us tell search engines which pages we consider most significant for users and for the business. This is possible through parameters such as priority and update frequency, which are communicated to crawlers in the XML file. These signals bear on indexing: by keeping the sitemap updated, we can let Google know that new pages or changes to existing pages are available and should be crawled again.

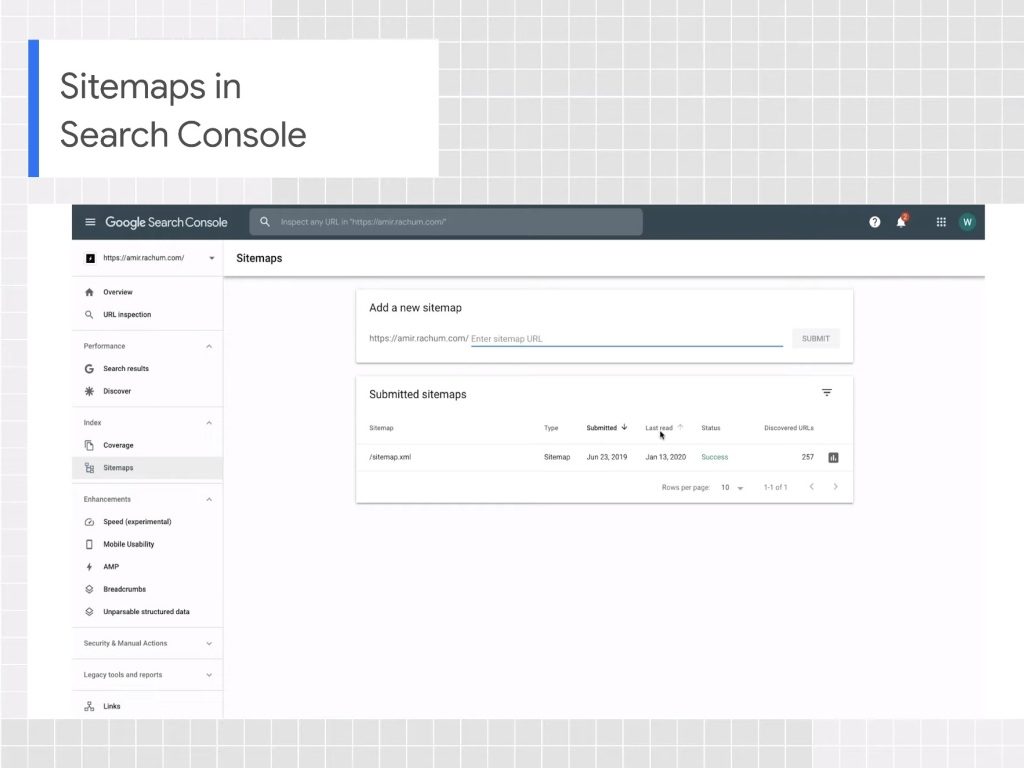

Integrating the sitemap with Google Search Console makes the whole process even more powerful. Once the sitemap has been generated, we can submit it to Google through Search Console, giving the search engine an official pointer to all the resources on our site. This step is not only a way to optimize indexing; it also lets us monitor errors or changes that crawlers have not picked up. If a page is not indexed correctly or has been rejected because of some problem, Google Search Console reports it, so we can resolve the issue promptly.

The history of the sitemap: how it was born and how it evolved

Sitemaps appeared almost at the same time as the web itself developed. In the early years, when sites were still relatively simple and had less content, webmasters relied on HTML sitemaps as the only way to organize and ease navigation. These pages were essentially lists of links to all the sections of the site, designed more for users than for search engines. HTML sitemaps often served as an "index", letting visitors quickly reach important sections or more hidden pages. As content grew in volume and complexity, however, this approach began to show its limits.

As the web evolved, the need to improve communication with search engine crawlers became more and more pressing. As the number of web pages grew, search engines such as Google had to face the challenge of quickly mapping and indexing a growing volume of sites that were ever richer in content. It was in this context that XML sitemaps were introduced in the mid-2000s.

Unlike the earlier HTML maps, XML sitemaps were not intended for users but designed exclusively for search engines. They are files structured according to a standard protocol (the sitemap protocol), which lets crawlers understand not only the hierarchy and structure of the site but also more detailed information about each page. Parameters such as update frequency, the priority of a given page relative to others on the site, and the date of last modification are communicated clearly through the XML file.
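To make the structure concrete, here is a minimal sketch in Python that builds a small XML sitemap with the parameters just mentioned. The element names (urlset, url, loc, lastmod, changefreq, priority) and the namespace come from the sitemap protocol; the page list, dates and the function name build_sitemap are hypothetical and serve only as an illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Serialize a list of page dicts into sitemap XML (returned as text)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["loc"]  # the only required child
        # lastmod, changefreq and priority are optional in the protocol.
        for tag in ("lastmod", "changefreq", "priority"):
            if tag in page:
                ET.SubElement(url, tag).text = str(page[tag])
    xml_bytes = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")

# Hypothetical pages, for illustration only.
pages = [
    {"loc": "https://www.example.com/", "lastmod": "2024-01-15",
     "changefreq": "weekly", "priority": 1.0},
    {"loc": "https://www.example.com/mypage.html", "lastmod": "2024-01-10"},
]
print(build_sitemap(pages))
```

The optional fields are written only when present, so the same function also covers the simplest case of a sitemap that lists nothing but URLs.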

This transition from HTML sitemaps to XML sitemaps was a major step forward in how search engines handle crawling and indexing. Whereas in the past site owners relied mainly on internal links to let crawlers "discover" new pages, with the arrival of XML sitemaps they could send search engines specific information about which pages to crawl and how to prioritize them. In effect, modern sitemaps began to play a crucial role in supporting complete and efficient indexing of the pages of sites large and small.

Over the years, sitemaps have evolved further to support new types of content. Besides traditional pages, today we can create specific sitemaps for images, videos and news, opening up new ways to make sure each type of content is properly indexed. This matters not only from a technical point of view but also for offering search engine users content that is up to date and relevant, improving the overall search experience.

The different types of sitemap: HTML, XML and more

As mentioned, the world of sitemaps is not limited to a single one-size-fits-all solution. Over time, different types of sitemap have been developed, each with a precise purpose tied to specific indexing needs.

The most widespread format for interfacing with search engines is the XML sitemap, but it is not the only option available. There are also HTML sitemaps, designed more to help users navigate intuitively, and more recent, specialized versions such as sitemaps for images, videos or even news.

XML sitemaps are the gold standard for interacting with search engines. Invented by Google in 2005 and initially called Google Sitemaps, this format was later adopted by other search engines as well. It is based on a specific protocol, offered under the terms of the Attribution-ShareAlike Creative Commons License, which made its spread possible.

These maps are not displayed to users; they work behind the scenes and were designed precisely to give Google and other search engines a clear outline of the site's structure. With the XML file we can communicate several key pieces of information, including update frequency (for example, whether a page is modified regularly), the relative importance of pages, and the exact structure of the content. This helps facilitate the indexing of pages in search engines and helps the most recent information get picked up and refreshed quickly. For many websites, having a correctly configured XML sitemap is one of the fundamental steps toward improving their SEO.

By contrast, the HTML sitemap is designed more for the end user and, to be precise, for improving the user experience. Although it is less widespread today thanks to the proliferation of increasingly advanced navigation tools, it has played, and in some cases still plays, an important role on large websites. It takes the form of a static web page containing a clickable list of all the sections of the site. While for Google an XML sitemap serves to facilitate the crawling of content, the HTML sitemap helps users discover content that is hidden or buried in deeper sections of the site. However, with the growing complexity of digital projects and the rising importance of search engines as intermediaries between user and site, the role of these maps has become more and more marginal. In practice, many HTML sitemaps have been completely replaced by well-structured navigation menus or by powerful internal search engines.

We cannot, however, overlook the importance of sitemaps dedicated to specific content. For example, the image sitemap helps Google crawl and index all the images on the site, a fundamental aspect for anyone aiming to get significant traffic from Google Images as well, especially in visually oriented sectors such as design, fashion or photography. An image entry in a sitemap can include the location of the images found on a page.
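As an illustration of an image entry, the Python sketch below attaches image locations to a page using the image:image and image:loc elements of Google's image sitemap extension. The page and image URLs and the function name image_sitemap are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"

# Control the prefixes used when the tree is serialized.
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("image", IMAGE_NS)

def image_sitemap(page_url, image_urls):
    """Build a sitemap with one page entry that lists its images."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
    ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
    for image_url in image_urls:
        image = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
        ET.SubElement(image, f"{{{IMAGE_NS}}}loc").text = image_url
    xml_bytes = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")

# Hypothetical page and image URLs.
print(image_sitemap(
    "https://www.example.com/gallery.html",
    ["https://www.example.com/img/photo1.jpg",
     "https://www.example.com/img/photo2.jpg"],
))
```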

Another example of a specialized XML file is the video sitemap, which tells search engines about the multimedia content on the site. A video entry in a sitemap might indicate the video's running time, its rating and its age-appropriateness classification. This can be decisive for improving placement in Google Video results, especially for platforms or blogs that rely on audiovisual content.

Finally, there are news sitemaps, useful for anyone running sites that frequently publish current-affairs articles. This variant tells bots which content should be indexed more quickly, for example so that it can appear in the highly visible news sections of Google. A news entry in a sitemap can include the article's title and publication date. It is an indispensable tool for newspapers, news blogs and particularly dynamic digital media, where the freshness of content is a key competitive factor.
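A news entry can be sketched in Python as follows, using the news:news, news:publication, news:publication_date and news:title elements of Google's news sitemap extension. The publication name, language, article URL and date are hypothetical values, and news_sitemap is just an illustrative function name.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
NEWS_NS = "http://www.google.com/schemas/sitemap-news/0.9"

ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("news", NEWS_NS)

def news_sitemap(articles, publication_name, language):
    """Build a news sitemap from dicts with 'loc', 'title' and 'date' keys."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for article in articles:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = article["loc"]
        news = ET.SubElement(url, f"{{{NEWS_NS}}}news")
        publication = ET.SubElement(news, f"{{{NEWS_NS}}}publication")
        ET.SubElement(publication, f"{{{NEWS_NS}}}name").text = publication_name
        ET.SubElement(publication, f"{{{NEWS_NS}}}language").text = language
        ET.SubElement(news, f"{{{NEWS_NS}}}publication_date").text = article["date"]
        ET.SubElement(news, f"{{{NEWS_NS}}}title").text = article["title"]
    xml_bytes = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")

# Hypothetical article and publication.
articles = [{
    "loc": "https://www.example.com/news/article-1.html",
    "title": "Example headline",
    "date": "2024-01-15",
}]
print(news_sitemap(articles, "Example Times", "en"))
```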

Which sitemap formats Google supports

Sitemaps can take different formats depending on the specific needs of the site and the web setup in use. Each format has advantages and limitations, so it is essential to choose the one that best suits our needs.

Google supports the sitemap formats defined by the Sitemap protocol, with varying degrees of extensibility and additional features.

The most versatile and widespread format is the XML sitemap. This type is extremely flexible and suits most sites because it does not merely list the site's pages but can also include additional information. For example, XML sitemaps can provide details about images, videos or news content. The format is particularly useful for multilingual sites, since it can flag the localized versions of pages. Most CMSs, such as WordPress or Joomla, can generate an XML sitemap automatically thanks to dedicated plugins, which puts the process within reach of many users. Managing XML sitemaps on large sites or on sites whose URLs change often, however, can become complicated and requires more attention, especially with frequent updates or continuous structural changes.
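For the multilingual case, one common way to flag localized versions is the xhtml:link element with rel="alternate" and an hreflang attribute inside each url entry. The Python sketch below assumes a hypothetical page available in English and Italian; the URLs and the function name are illustrative.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XHTML_NS = "http://www.w3.org/1999/xhtml"

ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("xhtml", XHTML_NS)

def multilingual_sitemap(versions):
    """versions maps a language code to the URL of that localized page."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url in versions.values():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
        # Each entry lists every language version, itself included.
        for lang, href in versions.items():
            ET.SubElement(url, f"{{{XHTML_NS}}}link",
                          rel="alternate", hreflang=lang, href=href)
    xml_bytes = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")

# Hypothetical localized versions of the same page.
versions = {
    "en": "https://www.example.com/en/page.html",
    "it": "https://www.example.com/it/page.html",
}
print(multilingual_sitemap(versions))
```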

An option that is easier to use, but limited in extensibility, is the RSS, mRSS or Atom 1.0 sitemap. These formats are fairly similar to XML sitemaps but are often simpler to generate automatically, since most CMSs create RSS and Atom feeds without any extra work from the user. RSS or Atom sitemaps are particularly useful for giving Google information about the videos published on the site, which covers the need for a video sitemap. The main drawback of this format is its limited ability to provide additional information: while XML sitemaps can include data about images and news, these simpler formats cannot. So if the site has diversified multimedia content with images and news, it may not be the best choice.

Finally, for those looking for a simple and immediate solution, there is the text sitemap. This is the most basic format: it simply lists the URLs of HTML pages or other indexable pages, one per line. It is well suited to large sites, since it is very easy to implement and maintain and requires no complex structure. The text format has significant limitations, though: it can only handle HTML and other indexable text content, with no extensions for images, videos or news. As a result, although easy to maintain, it is less versatile than the other options.
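A text sitemap is simple enough to sketch in a few lines of Python: one absolute URL per line and nothing else. The URLs and the function name text_sitemap below are hypothetical.

```python
def text_sitemap(urls):
    """Return the body of a text sitemap: one URL per line, no other markup."""
    # Drop empty entries and duplicates while keeping the original order.
    unique = list(dict.fromkeys(u.strip() for u in urls if u.strip()))
    return "\n".join(unique) + "\n"

# Hypothetical URLs, including a duplicate that is filtered out.
urls = [
    "https://www.example.com/",
    "https://www.example.com/mypage.html",
    "https://www.example.com/",
]
print(text_sitemap(urls), end="")
```

When the result is saved to disk, the file should be written with UTF-8 encoding, in line with the encoding requirement that applies to every sitemap format.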

The limits of sitemaps and other useful information

The best practices for sitemaps, set out by the Sitemap protocol, are often ignored or applied carelessly, which can compromise how effectively search engines index the site. Several of these precautions concern size limits, the correct encoding and location of sitemaps, and the format of the URLs contained in the files.

One of the most critical aspects is the size limit. A single sitemap must not exceed 50 MB (uncompressed) or contain more than 50,000 URLs. Beyond these limits we have to split the file into several sitemaps, for example one per section or content type. In this case, which is particularly common on large and complex sites, it can be useful to create a sitemap index file: a document that groups several sitemaps under a single structure, so that they can be submitted to Google through one file. Google allows several sitemaps or several sitemap index files to be submitted, which is useful when we want to monitor the performance of specific content separately in Search Console.
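The splitting step can be sketched in Python as follows: the URL list is cut into chunks of at most 50,000 entries and a sitemap index file points to the resulting files. The sitemapindex and sitemap elements come from the sitemap protocol; the file names sitemap-1.xml, sitemap-2.xml and so on are hypothetical, and the sketch checks only the URL count, not the 50 MB size limit.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file URL limit stated in the text

def chunk_urls(urls, size=MAX_URLS):
    """Split a list of URLs into consecutive chunks of at most `size` items."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]

def build_sitemap_index(sitemap_urls):
    """Build a sitemap index that references each child sitemap file."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for sitemap_url in sitemap_urls:
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = sitemap_url
    xml_bytes = ET.tostring(index, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")

# Hypothetical site with 120,000 URLs.
all_urls = [f"https://www.example.com/page-{i}.html" for i in range(120_000)]
chunks = chunk_urls(all_urls)
print([len(chunk) for chunk in chunks])  # [50000, 50000, 20000]

# Hypothetical file names for the child sitemaps.
child_files = [f"https://www.example.com/sitemap-{n}.xml"
               for n in range(1, len(chunks) + 1)]
print(build_sitemap_index(child_files))
```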

Encoding and location of the sitemap file are also fundamental. Sitemaps must be encoded in UTF-8, a commonly accepted standard, to ensure that all special characters and accents in URLs are interpreted correctly by search engine crawlers.

As for location, we can technically host the sitemap file in any directory of the site, but it is preferable to publish it at the root of the domain (that is, in the site's main folder). This lets the sitemap cover all the files on the site. If the sitemap is published in a subdirectory instead, it only affects the files in that section. If we submit the file through Google Search Console we can still host the sitemap wherever we prefer, but publishing it at root level remains the recommended choice for complete coverage, since it lets crawlers reach all the site's content without directory restrictions.

Another very important factor is how URLs are handled inside the sitemap. We must always use absolute URLs, complete and well formed, never relative ones. If, for example, our site is at "https://www.example.com/", the URL in the sitemap must not be written as "/mypage.html" but as "https://www.example.com/mypage.html". This way we make sure Google crawls each page exactly as intended, with no ambiguity about how to reach it. It is also essential that the sitemap contain only the URLs of pages we intend to show in search results, that is, those we want indexed correctly and treated as canonical.
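The absolute-URL rule is easy to enforce before a sitemap is written. A minimal Python sketch, using the standard urllib.parse module and the example addresses from the text (the function names are illustrative):

```python
from urllib.parse import urljoin, urlparse

def is_absolute(url: str) -> bool:
    """True when the URL carries both an http(s) scheme and a host name."""
    parts = urlparse(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)

def to_absolute(site_root: str, path_or_url: str) -> str:
    """Resolve a relative path against the site root; absolute URLs pass through."""
    return urljoin(site_root, path_or_url)

print(is_absolute("/mypage.html"))                              # False
print(to_absolute("https://www.example.com/", "/mypage.html"))  # https://www.example.com/mypage.html
```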

If our site has separate desktop and mobile versions, it is generally recommended to list only the canonical version in the sitemap. If the site's needs require both versions (desktop and mobile) to be indexed, we can do that, but we must remember to annotate clearly within the sitemap which URL corresponds to each version, so that Google can correctly understand the relationship between the two.

On the subject of information, Google's guide clarifies another point: "using the Sitemap protocol does not guarantee that web pages are included in search engines". In other words, there is no certainty that all the pages in the map file will actually be indexed, because Googlebot acts according to its own criteria and follows its own complex algorithms, which are not determined by the sitemap.

Moreover, the sitemap does not affect the ranking of a site's pages in search results. The priority assigned to a page through the sitemap should not be read as a ranking factor either: Google's documentation states that it ignores the priority and changefreq values.

When and why to use sitemaps

After these premises, what is the concrete point of using a sitemap?

Broadly speaking, Google reassures us: if the pages of the site are linked correctly, and if the pages we consider important can actually be reached through some form of navigation, whether the site menu or links placed in the pages, it usually manages to find most of the content.

In any case, every site can benefit from better crawling, but there are particular cases in which Google itself strongly recommends using these maps, such as larger or more complex sites or more specialized files.

Which sites need a sitemap

In particular, very large sites should use the sitemap to tell crawlers about new or recently updated pages, which they might otherwise fail to crawl. The map is even more useful if the site has many orphan or poorly linked pages, which risk not being considered by Google.

The sitemap is also recommended for new sites with few incoming backlinks: in this case Google and its crawler could have trouble finding the site, because few paths lead to it through the links of the web.

Finally, Google suggests using the map if the site "uses rich media content, is shown in Google News, or uses other sitemaps-compatible annotations", adding that "where appropriate, Google can take into account other information contained in sitemaps for use in search".

To recap, then, a sitemap is useful if:

- The site is large, because on large sites it is generally harder to make sure every page is linked from at least one other page of the site, so Googlebot is more likely to miss some of the new pages.

- The site is new and has few backlinks. Googlebot and other web crawlers crawl the web by following links from one page to another, so without such links Googlebot might not discover the pages.

- The site has a lot of multimedia content (video, images) or is shown in Google News, because we can give crawlers additional information through the dedicated sitemaps.

Which sites may not need a sitemap

If those are the cases in which Google strongly recommends submitting a sitemap, there are also situations in which providing the file is not so necessary. In particular, we may not need a sitemap if:

- The site is "small" and hosts about 500 pages or fewer (counting only the pages we think should be included in search results).

- The site is comprehensively linked internally, so Google can find all the important pages by following links starting from the home page.

- We do not publish many media files (video, images) or news pages that we want to appear in search results. Sitemaps can help Google find and understand video and image files or news articles on the site, but if we do not need these results to appear in Search, we may skip submitting the file.

How to create a sitemap

Creating a sitemap is a fundamental step for making it easier for search engines to index the site, and therefore for potentially improving SEO. As mentioned, it serves to communicate the site's pages to crawlers in an organized and complete way and, above all, to make sure they are analyzed effectively.

There are several ways to create a sitemap, depending on the structure of the site and the tools in use, so let's look at the most common options. The first step is to decide which pages of the site we want to list and have Google crawl, establishing the canonical version of each page. In this way we tell search engines clearly which URLs we prefer to show in search results, the canonical URLs, especially if and when the same content is reachable from different URLs (we avoid listing every URL that leads to the same content and include only the canonical URL in the sitemap).

The second step is choosing the sitemap format to use. We can then get to work with a text editor or dedicated software, or rely on an easier and faster alternative that is within everyone's reach.

Creating a map with a sitemap generator

While the more experienced can create the file manually, there are many resources online that generate sitemaps automatically. Google even hosts an (old) page listing web sitemap generators, divided into "server-side programs", "CMS and other plugins", "Downloadable Tools", "Online Generators/Services" and "CMS with integrated Sitemap generators". It also lists tools for generating sitemaps for Google News and "Code Snippets/Libraries". As the official guide points out, however, this collection is dated and no longer maintained.

Whatever the choice, it is essential to make the newly created sitemap available to Google right away, by adding it to the robots.txt file or submitting it directly in Search Console.

Online sitemap generators are useful for those who run small or medium-sized sites and want to generate a sitemap quickly without installing software or add-ons. Among the best-known tools is XML-sitemaps.com, popular for its simple and intuitive interface. We just enter the URL of our site and the tool crawls its pages, generating an XML sitemap file that can then be downloaded and uploaded to the site's server. This method is particularly suitable for those who do not use a CMS or prefer not to alter the structure of their site with plugins or third-party tools.

If our site is based on a CMS such as WordPress, creating a sitemap is simplified further by plugins that handle the whole process for us. One of the best-known and most used is Yoast SEO, which generates the file and updates it automatically every time we add or edit content on the site.

For those who want more control or are in a particular situation (such as managing a static or custom-built site), it is possible to create a sitemap manually. This means writing an XML file that lists the site's URLs, together with optional information such as update frequency and crawl priority. Technically, all we need is a text editor such as Windows Notepad or Nano (Linux, macOS) and the basic syntax; we can give the file any name, as long as its characters are allowed in a URL.

Creating a sitemap manually can be useful for those who want to include only certain pages or have specific customization needs. It is important, however, to follow the guidelines of the sitemap protocol, the standard originally defined by Google that governs how the information should be organized and passed to search engines. A badly structured XML file could cause indexing problems, so it is advisable to check that the file is correct before submitting it.
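A basic pre-submission check can be sketched in Python with the standard library. The function below, whose name and messages are hypothetical, verifies only three things: that the file is well-formed XML, that its root is the urlset element of the sitemap namespace, and that every loc value is an absolute URL. It is not a full validation against the protocol's schema.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def check_sitemap(xml_bytes: bytes) -> list[str]:
    """Return a list of problems found; an empty list means no issue detected."""
    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]
    problems = []
    if root.tag != f"{{{SITEMAP_NS}}}urlset":
        problems.append("root element is not <urlset> in the sitemap namespace")
    for loc in root.iter(f"{{{SITEMAP_NS}}}loc"):
        url = (loc.text or "").strip()
        parts = urlparse(url)
        if not (parts.scheme and parts.netloc):
            problems.append(f"not an absolute URL: {url!r}")
    return problems

# Hypothetical hand-written sitemap containing one relative URL.
sample = (
    b'<?xml version="1.0" encoding="UTF-8"?>'
    b'<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    b'<url><loc>https://www.example.com/</loc></url>'
    b'<url><loc>/mypage.html</loc></url>'
    b'</urlset>'
)
for problem in check_sitemap(sample):
    print(problem)
```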

Google's own summary is worth recalling here: depending on the architecture and size of the site, we can:

- Let the CMS generate a sitemap.

- Create the sitemap manually, if it contains fewer than a few dozen URLs.

- Generate the sitemap automatically, if it contains more than a few dozen URLs. For example, we can extract the site's URLs from its database and export them to the screen or to the actual file on the web server.
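The database export mentioned in the last option can be sketched in Python as follows. Everything about the storage is an assumption made for illustration: an in-memory SQLite database, a table called pages, and columns named url, last_modified and indexable. A real site would query its own schema instead.

```python
import sqlite3
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def sitemap_from_db(conn):
    """Export the indexable URLs stored in the (hypothetical) pages table."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    query = ("SELECT url, last_modified FROM pages "
             "WHERE indexable = 1 ORDER BY url")
    for loc, lastmod in conn.execute(query):
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:  # lastmod is optional, skip it when the column is NULL
            ET.SubElement(url, "lastmod").text = lastmod
    xml_bytes = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")

# In-memory database with hypothetical rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE pages (url TEXT, last_modified TEXT, indexable INTEGER)")
conn.executemany("INSERT INTO pages VALUES (?, ?, ?)", [
    ("https://www.example.com/", "2024-01-15", 1),
    ("https://www.example.com/mypage.html", None, 1),
    ("https://www.example.com/cart", "2024-01-12", 0),
])
print(sitemap_from_db(conn))
```

Filtering on a flag such as indexable is one way to keep the sitemap limited to the pages we actually want in search results.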

In any case, whichever creation method we choose, it is essential to submit the sitemap to Google, a step that can be carried out in Google Search Console, in the section dedicated to managing sitemaps. Keeping the submitted sitemap current lets us monitor indexing problems and keeps Google informed of changes or newly added content.

How to submit the sitemap to Google

Bearing in mind that submitting a sitemap is only a hint and does not guarantee that Google will download it or use it to crawl the site's URLs, we follow the steps in the official guide to see the different ways of making the sitemap available to Google. We can:

- Submit a sitemap in Search Console using the Sitemaps report, which lets us see when Googlebot accessed the sitemap and any processing errors.

- Use the Search Console API to submit a sitemap programmatically.

- Insert a "Sitemap:" line followed by the full URL of the sitemap anywhere in the robots.txt file; Google will find it the next time it crawls robots.txt.

- Use WebSub to broadcast changes to search engines, including Google (but only if we use Atom or RSS).
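For the robots.txt option in the list above, the Python sketch below adds a Sitemap line to the content of a robots.txt file unless the same line is already there. The robots.txt content, the sitemap URL and the function name are hypothetical examples.

```python
def add_sitemap_line(robots_txt: str, sitemap_url: str) -> str:
    """Append a 'Sitemap:' line to robots.txt content unless already present."""
    line = f"Sitemap: {sitemap_url}"
    existing = [entry.strip() for entry in robots_txt.splitlines()]
    if line in existing:
        return robots_txt
    # Keep the file newline-terminated before appending the new line.
    if robots_txt and not robots_txt.endswith("\n"):
        robots_txt += "\n"
    return robots_txt + line + "\n"

# Hypothetical robots.txt content and sitemap URL.
robots = "User-agent: *\nAllow: /\n"
print(add_sitemap_line(robots, "https://www.example.com/sitemap.xml"), end="")
```

The line can sit anywhere in the file; appending it at the end is simply the least intrusive choice for a file that already exists.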

Methods for cross-submitting sitemaps for multiple sites

If we manage several websites, we can simplify submission by creating one or more sitemaps that include the URLs of all the verified sites and saving them in a single location. The methods for this multiple, cross-site submission are:

- A single sitemap that includes URLs for multiple websites, including sites on different domains. For example, the sitemap located at https://host1.example.com/sitemap.xml can include the following URLs:

  - https://host1.example.com

  - https://host2.example.com

  - https://host3.example.com

  - https://host1.example1.com

  - https://host1.example.ch

- Individual sitemaps (one per site) that all reside in a single location:

  - https://host1.example.com/host1-example-sitemap.xml

  - https://host1.example.com/host2-example-sitemap.xml

  - https://host1.example.com/host3-example-sitemap.xml

  - https://host1.example.com/host1-example1-sitemap.xml

  - https://host1.example.com/host1-example-ch-sitemap.xml

To submit cross-site sitemaps hosted in a single location, we can use either Search Console or robots.txt.

With Search Console, we must first have verified ownership of every site we are going to add to the sitemap, and then create one or more sitemaps that include the URLs of all the sites we want to cover. If we prefer, the guide says, we can list the sitemaps in a sitemap index file and work with that index. We then submit the sitemaps, or the index file, through Google Search Console.

If we prefer cross-submission with robots.txt, we must create one or more sitemaps for each individual site and, in each file, include only the URLs of that particular site. We then upload all the sitemaps to a single site that we control, for example https://sitemaps.example.com.

For each individual site, we must check that its robots.txt file references the sitemap for that site. For example, if we created a sitemap for https://example.com/ and we host it at https://sitemaps.example.com/sitemap-example-com.xml, we reference that sitemap in the robots.txt file at https://example.com/robots.txt.
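
As a minimal sketch of the robots.txt approach, the following Python snippet prints the line that each site would add to its own robots.txt. The `hosted_sitemaps` mapping and the function name are hypothetical; the URLs simply reuse the example hosts above.

```python
def robots_sitemap_line(sitemap_url: str) -> str:
    """Return the robots.txt line that points crawlers at a sitemap."""
    return f"Sitemap: {sitemap_url}"

# Hypothetical mapping: site root -> sitemap stored on the shared host.
hosted_sitemaps = {
    "https://example.com/": "https://sitemaps.example.com/sitemap-example-com.xml",
}

for site, sitemap_url in hosted_sitemaps.items():
    # Each line belongs in the robots.txt of the site it describes,
    # not in the robots.txt of the host that stores the sitemap.
    print(f"# add to {site}robots.txt")
    print(robots_sitemap_line(sitemap_url))
```

The point of the design is that the sitemap file lives on one host while each site still declares it from its own robots.txt.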

Sitemap best practices

Google's documentation also offers some practical advice on the subject, echoing what is stated in the sitemaps protocol mentioned earlier, and focuses in particular on size limits, file location and the URLs included in the sitemap.

- As for limits, what we wrote earlier still applies: every format limits a single sitemap to 50 MB (uncompressed) or 50,000 URLs. If we have a larger file or more URLs, we must split the sitemap into several smaller sitemaps and, optionally, create a sitemap index file and submit only that index to Google. Alternatively, we can submit several sitemaps as well as the sitemap index file, particularly if we want to monitor the search performance of each individual sitemap in Search Console.

- Google does not care about the order of the URLs in the sitemap; the only limit is on size.

- Encoding and location of the sitemap file: the file must be encoded in UTF-8. We can host sitemaps anywhere on our site, bearing in mind that a sitemap only affects the descendants of the directory it sits in: a sitemap published in the site root can therefore affect every file on the site, which is why this is the recommended location.

- Properties of the referenced URLs: we must use complete, absolute URLs in sitemaps. Google will crawl the URLs exactly as listed. For example, if the site is at https://www.example.com/, we must not specify a URL such as /mypage.html (a relative URL), but the complete, absolute URL: https://www.example.com/mypage.html.

- In the sitemap we include the URLs we want to see in Google's search results. The search engine usually shows canonical URLs in its results, and we can influence that decision through sitemaps. If we have different URLs for the mobile and desktop versions of a page, the guide recommends pointing to only one version in a sitemap or, alternatively, pointing to both URLs while annotating them to indicate the desktop and mobile versions.

- When we create a sitemap, we tell search engines which URLs we prefer to show in search results, and therefore which URLs are canonical: if the same content is reachable through different URLs, we must choose the preferred URL, include it in the sitemap and leave out all the other URLs that lead to the same content.
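
The size limits above can be handled with a small script. This is a sketch rather than a reference implementation: it splits a URL list into groups of at most 50,000 entries and builds a sitemap index that lists the resulting files. The file names (`sitemap-1.xml` and so on) are assumptions, and the 50 MB byte limit is not checked here.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file URL limit mentioned in the text

def chunk_urls(urls, size=MAX_URLS):
    """Split a list of URLs into consecutive groups of at most `size`."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]

def build_sitemap_index(sitemap_urls):
    """Return a sitemap index document (as a string) listing child sitemaps."""
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_urls:
        entry = ET.SubElement(root, "sitemap")
        ET.SubElement(entry, "loc").text = loc
    return ET.tostring(root, encoding="unicode")

# Illustrative data: 120,000 made-up URLs need three child sitemaps.
urls = [f"https://www.example.com/page-{n}.html" for n in range(120_000)]
chunks = chunk_urls(urls)
child_names = [
    f"https://www.example.com/sitemap-{i + 1}.xml" for i in range(len(chunks))
]
index_xml = build_sitemap_index(child_names)
```

Each chunk would then be written to its own sitemap file, and only the index (or the index plus the individual files) submitted.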

Which pages to exclude from sitemaps

Still on the subject of recommended practices, we should not think of the file as a container for every URL on the site. On the contrary, some types of page should be excluded by default because they add little value.

As a general rule, the sitemap should include only relevant URLs, those that offer added value to users and that we want to be visible in Search. All the others should be left out of the file, although this alone does not make them "invisible" unless we also add a noindex tag. This applies in particular to:

- Non-canonical pages

- Duplicate pages

- Pagination pages

- URLs with parameters

- Internal site search results pages

- URLs created by filter options

- Archive pages

- Any redirects (3xx), missing pages (4xx) or server error pages (5xx)

- Pages blocked by robots.txt

- Pages set to noindex

- Pages reachable only through a lead generation form (PDFs, etc.)

- Utility pages (login page, wishlist or cart pages, etc.)

How to generate an XML sitemap: step by step

Generating an XML sitemap may look like a complex operation, but in practice it is within everyone's reach thanks to the many tools available. Here is a practical, step-by-step guide to creating and managing a sitemap in XML format.

- Choose a sitemap generator

The first step is to identify and choose an XML sitemap generator. Many tools exist today, a good number of them free, designed to make the operation quick and painless by generating a sitemap starting simply from the site's URL.

- Enter the site URL

Once we have selected the generator, the next step is to enter the URL of the website we want indexed. In most cases the main address of the site is enough. The generator then crawls the pages and internal links, automatically identifying the content to include in the XML sitemap.

- Configure the crawl options

Many sitemap generators let us configure some crawl options. For example, we can specify:

- Which parts of the site should be included or excluded (using URL filters);

- The priority of the pages (that is, which pages we consider more important relative to the others on the site);

- The update frequency of the pages, which tells search engines how often a given page changes.

These options help us steer crawlers toward the most important pages of the site over time, keeping in mind that Google states it ignores the priority and change frequency values, so they mainly matter for other search engines.

- Generate the sitemap and download it

Once the options are configured, we can generate the XML sitemap. The generator creates an XML file containing all the pages of the site (or the selected ones), ready to be submitted to search engines.

We should pay attention to a few key syntax requirements: in particular, Google stresses that all tag values must be entity-escaped, as is standard in XML files. In addition, the `<priority>` and `<changefreq>` values are ignored, while the `<lastmod>` value is used only if it is consistently and verifiably accurate (for example, when compared with the last modification of the page). Specifically, `<lastmod>` must reflect the date and time of the last significant update to the page: an update to the main content, the structured data or the links on the page is generally considered significant, while a simple change to the copyright date is not.
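
To make the syntax requirements concrete, here is a hedged sketch that builds a minimal sitemap with Python's standard `xml.etree.ElementTree`, which escapes reserved characters in text values automatically. The function name, URL and date are illustrative assumptions; `<priority>` and `<changefreq>` are left out on purpose, since the text notes that Google ignores them.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_urlset(pages):
    """Build a sitemap from (absolute_url, lastmod) pairs and return it as text."""
    root = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(root, "url")
        # ElementTree entity-escapes &, < and > when serializing text.
        ET.SubElement(url, "loc").text = loc
        # lastmod should reflect the last significant change to the page.
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(root, encoding="unicode")

xml_text = build_urlset(
    [("https://www.example.com/view?id=1&lang=it", "2019-04-28")]
)
print(xml_text)
```

When writing the result to disk, the file should be saved as UTF-8, in line with the encoding requirement discussed in this guide.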

When generation is complete, we download the sitemap.xml file and save it to our device.

- Upload the file to the server

After creating and downloading the sitemap, we need to upload it to the site's server. The root folder of the site is usually the most common and recommended place for the file (e.g. www.tuosito.com/sitemap.xml). This lets crawlers reach the file easily, even automatically.

- Notify Google through Search Console

Once the XML sitemap is on the server, the final step is to notify Google so that crawling can begin. To do so, we open Google Search Console, select the site we are managing and go to the "Sitemaps" section. Here we can enter the URL of the sitemap.xml file and submit it. Google will crawl the site based on the information provided in the file and will record any access problems or indexing errors.

Submitting the sitemap through Google Search Console and checking the report regularly is an essential practice for monitoring the site's indexing and fixing any crawl problems: the report also tells us when Googlebot last accessed the submitted sitemap and reveals potential processing errors.

There are also other ways to make the sitemap available to Google: for example, we can use the Search Console API to submit a sitemap programmatically, or insert the line "Sitemap: https://example.com/my_sitemap.xml" anywhere in the robots.txt file, specifying the path to the sitemap. Google will pick it up the next time it crawls the robots.txt file. In addition, if we use the Atom or RSS format, we can use WebSub to broadcast changes to search engines, including Google.
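
To check that a robots.txt file really declares the sitemap, Python's standard `urllib.robotparser` can list the `Sitemap:` lines it finds (the `site_maps()` method requires Python 3.8 or later). The robots.txt content below is an invented example parsed from a string, so no network access is involved.

```python
from urllib.robotparser import RobotFileParser

# Invented robots.txt content for illustration.
robots_txt = """\
User-agent: *
Disallow: /cart/
Sitemap: https://example.com/my_sitemap.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# site_maps() returns a list of declared sitemap URLs,
# or None when the file contains no Sitemap line.
declared = parser.site_maps()
print(declared)
```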

Creating an error-free sitemap: what to watch out for

Creating an effective sitemap does not just mean listing the pages of the site; it also requires careful management that follows best practices, to avoid errors that could hurt SEO.

The Search Console guide helps by listing the problems we may run into while configuring the file, along with the errors that, if not identified and fixed promptly, risk compromising the site's indexing because of faulty communication with search engines.

Google offers a set of tools and reports that make it easier to find and fix errors, but it is essential to know how to read them correctly. Let's look at the most common errors and how to handle them effectively.

- Sitemap fetch errors

One of the most frequent errors is that Google cannot fetch the sitemap. This can happen for several reasons. In some cases the sitemap is blocked by rules in the robots.txt file: if so, Google cannot read the file and discover the URLs listed in it, and we need to change that configuration to let crawlers through.

Another frequent cause is a wrong sitemap URL, which can lead to a 404 error. In that case we must verify that the URL provided is correct and that the sitemap.xml file actually exists on the server and is reachable at the stated address. A quick check is to open the sitemap directly in the browser: if it does not load, either the address is wrong or the file was not uploaded correctly.

If the site is subject to a manual action by Google, all sitemaps will be blocked until the problem is resolved. Manual actions point to serious problems with the site, such as practices that violate Google's guidelines, and we need to consult the detailed report in Google Search Console and fix those problems before resubmitting the sitemap.

Sometimes fetch errors are caused by a temporary problem on the server hosting our site. A momentary outage or an excessive load of requests can prevent Google from reaching the sitemap. In these cases, the errors can often be resolved simply by resubmitting the sitemap after a few minutes.

Finally, Google may report an error because of low crawl demand for our site. If a site performs poorly and contains low-quality content, Google may become less willing to crawl it frequently. This underlines once again how important it is to produce high-quality content that engages users and is consistent with, and relevant to, the site's audience.

- Sitemap parsing errors

Even when Google manages to fetch a sitemap, it can still run into errors while processing its contents. These errors often point to problems with the accessibility or correctness of the URLs in the sitemap. Google may flag inaccessible URLs, which are listed in the sitemap but are not actually available for crawling. To fix this, it helps to use the URL Inspection tool in Google Search Console, which lets us find out why a URL cannot be reached.

A common error that results in URLs not being followed occurs when Google encounters too many redirects. If a URL in the sitemap leads to an excessive chain of redirects, the crawler may not be able to follow them all, compromising the indexing of the final page. In such cases it is always better to remove the chained redirects and update the sitemap with the final, correct URLs.

Another frequent error occurs when the sitemap contains relative rather than absolute URLs. Google requires complete URLs that include the full domain, such as "https://www.example.com/mypage.html", rather than partial URLs such as "/mypage.html". This makes every URL clear and unambiguous.
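
A quick way to catch relative URLs before submitting is to test each entry with `urllib.parse.urlsplit`. This is a minimal sketch; the function name is an assumption and the check only looks at the scheme and host, not at whether the page exists.

```python
from urllib.parse import urlsplit

def is_absolute_http_url(url: str) -> bool:
    """True when the URL has an http(s) scheme and a host name."""
    parts = urlsplit(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)

candidates = [
    "https://www.example.com/mypage.html",  # absolute: fine
    "/mypage.html",                         # relative path: rejected
    "www.example.com/mypage.html",          # missing protocol: rejected
]
relative = [u for u in candidates if not is_absolute_http_url(u)]
print(relative)
```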

- Structure and formatting errors

Among the more technical errors is the presence of invalid URLs in the sitemap. Every URL must be correctly encoded, with no spaces, unsupported characters or malformed syntax. Characters such as "&", "<" and ">" must be escaped, which can be done with XML validation tools or by careful manual editing. A badly formatted URL can prevent Google from interpreting the file correctly, compromising the whole crawl.

Related to invalid URLs is the case of improper URL levels. Every sitemap must respect a hierarchy, so if sitemap.xml sits in a subfolder of the site, all the URLs it lists must be in the same folder or in folders below it. URLs that belong to sibling folders or to higher levels cannot be included.
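
The hierarchy rule can be approximated with a path-prefix check. The sketch below assumes the simple case of a sitemap and pages on the same host; it deliberately ignores the cross-site submission exceptions described earlier in this guide, and the function name is hypothetical.

```python
import posixpath
from urllib.parse import urlsplit

def within_sitemap_scope(sitemap_url: str, page_url: str) -> bool:
    """True when page_url is in the sitemap's folder or below it (same host)."""
    s, p = urlsplit(sitemap_url), urlsplit(page_url)
    base_dir = posixpath.dirname(s.path)
    if not base_dir.endswith("/"):
        base_dir += "/"
    same_origin = (s.scheme, s.netloc) == (p.scheme, p.netloc)
    return same_origin and p.path.startswith(base_dir)

sitemap = "https://www.example.com/blog/sitemap.xml"
print(within_sitemap_scope(sitemap, "https://www.example.com/blog/post.html"))
print(within_sitemap_scope(sitemap, "https://www.example.com/shop/item.html"))
```

A sitemap placed in the site root passes this check for every URL on the host, which matches the recommendation to publish it there.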

Another frequent structural error concerns compressed sitemaps: Google supports file compression, but if the compressed file is corrupted and cannot be decompressed, the sitemap will not be readable. In that case the fix is to compress the file again with the correct tool (usually gzip) and resubmit it.
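
One way to avoid decompression errors is to verify the archive right after creating it. The following sketch uses Python's standard `gzip` module to compress a sitemap and confirm that it decompresses back to the original bytes; the function names and sample content are illustrative.

```python
import gzip
import zlib

def gzip_sitemap(xml_bytes: bytes) -> bytes:
    """Compress sitemap bytes with gzip."""
    return gzip.compress(xml_bytes)

def verify_gzip(compressed: bytes, original: bytes) -> bool:
    """True when the archive decompresses cleanly to the original content."""
    try:
        return gzip.decompress(compressed) == original
    except (OSError, EOFError, zlib.error):
        # Raised for non-gzip data, truncated files or corrupted streams.
        return False

original = b"<urlset></urlset>"
archive = gzip_sitemap(original)
print(verify_gzip(archive, original))
```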

Excessive sitemap size is another of the most frequent errors: if the sitemap.xml file exceeds the 50 MB limit or contains more than 50,000 URLs, Google will not be able to process it. In these situations we need to split the sitemap into several smaller files and link them through a sitemap index, which makes it possible to manage large URL sets as a group of smaller files.

- Errors related to XML tags

Errors in the sitemap's XML tags are often the result of typos or incorrect formatting. Every tag must follow strict formatting rules and use only the values supported by the sitemap protocol. Sometimes, for example, duplicate tags are used within the same URL entry, which confuses the search engine. Each error is reported together with the line of code where it occurred, which makes it easier to locate and fix.

One of the most common XML tag errors is the use of invalid dates or dates in the wrong format. Dates in the sitemap must follow the W3C Datetime format, which allows both the date and the time to be specified. Specifying the time is optional, but when it is used it must be accompanied by the correct time zone.

- Errors related to video and news sitemaps

Sitemaps dedicated to video content can show specific errors, such as a missing video thumbnail or a missing descriptive title. Every video included in the sitemap should have a title and also a thumbnail image of an appropriate size. If the thumbnail is too small or too large, Google will reject it. The handling of URLs in video entries matters too: the video URL cannot be the same as the URL of the "player" page. If both are given, they must be different, otherwise Google will not be able to interpret the relationship between the two correctly.

Sitemaps dedicated to Google News content, on the other hand, limit the maximum number of URLs (up to 1,000), and every URL must carry a `<news:publication>` tag, which provides details about the news or publishing entity that produced the content.

- Fixing common sitemap errors

To resolve all these errors, Google provides the URL Inspection tool in Google Search Console, which allows a detailed analysis of each URL in the sitemap, checking its accessibility and offering suggestions for fixing the problems found. In addition, by expanding the Page availability section, we can see whether Google can access the URLs correctly or runs into problems.

Using sitemap validation tools, correcting XML formatting errors and making sure every URL is correctly configured are fundamental steps for keeping a site accessible and crawlable, and therefore for making the whole indexing process more effective.

Common errors to avoid when creating a sitemap

Widening the picture of the most common mistakes made when compiling a sitemap, we have to mention URL duplication: the same page can end up in the sitemap several times under different variants of its URL (for example with or without "www", or with different query strings). This duplication not only complicates indexing, it can also cause duplicate content problems, which hurt rankings in search engines.

Another widespread error is including pages that should not be indexed, such as test pages or temporary pages. These pages should be kept out of the index with a noindex tag, or kept from being crawled with robots.txt. Listing irrelevant pages in the sitemap draws crawlers toward less important content instead of priority content, slowing down indexing.

A critical element to keep in mind is also excessive sitemap size. As mentioned, an XML sitemap must respect certain limits: the file should not exceed 50,000 URLs or 50 MB. Otherwise there is a risk that Google will not process it correctly. For particularly large sites, the pages can be split across several sitemaps, with a sitemap index that lists them.

Creating a sitemap is not something we do once and then forget: the most common and most damaging error is failing to update it regularly. If a sitemap is not updated periodically to reflect new pages, or if we forget to remove URLs that no longer exist, search engines may fail to find the latest content or, worse, try to crawl URLs that return 404 errors, with the negative effects this has on indexing performance and on SEO overall.

Subtler errors concern the mishandling of the priority and update frequency parameters. For search engines that read them, badly configured values send the wrong signals and can lead to incomplete crawling of the most important pages.

Sitemaps: 10 errors to avoid on a site

Those are some of the general, high-priority checks for verifying that the sitemap we created and submitted to Google is effective and valid. It can also be useful to have a quick summary of the 10 sitemap errors that can undermine a site's performance and hurt its organic search results, so that we can more easily tell whether we have run into one of them while creating the file.

- Using the wrong sitemap format

The most common sitemap error concerns the format: Google supports several file types for submission in Search Console, expects the standard Sitemap protocol in all formats and does not (currently) use the `<priority>` attribute in sitemaps. The accepted formats are XML, the most widespread, then .txt (a text file, valid only if the sitemap contains URLs of web pages and not other resources), RSS 2.0, mRSS and Atom 1.0 feeds; Google also supports the extended sitemap syntax for certain media content.

- Using unsupported encodings and characters

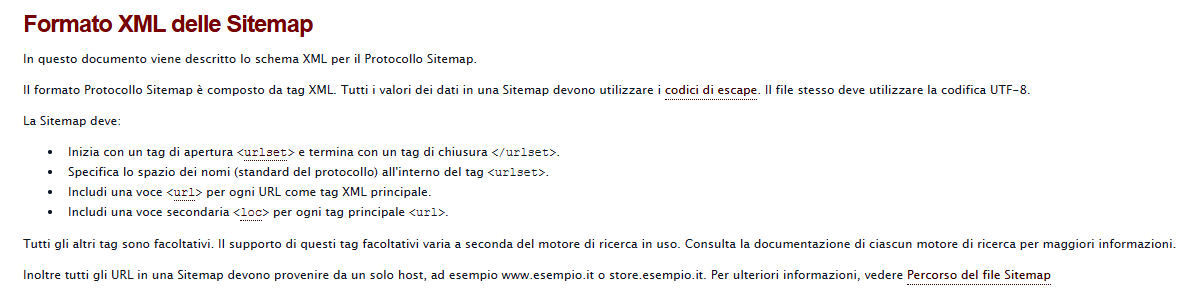

A similar but more specific mistake concerns the character encoding used to generate sitemap files: the required standard is UTF-8, which can usually be applied when saving the file, and Google's guide explains that "all data values (including URLs) must use entity escape codes" for certain critical characters (as shown in the image on this page).

In detail, a sitemap can contain only ASCII characters; upper ASCII (extended, non-ASCII) characters are not supported, nor are certain control codes and special characters (for example, the asterisk * and curly braces {}). Using these characters in a sitemap URL produces an error when the sitemap is added.
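
As an illustration of both requirements, the sketch below percent-encodes non-ASCII and unsupported characters with `urllib.parse.quote` and then applies XML entity escaping with `xml.sax.saxutils.escape`. The function name and the exact set of characters kept unencoded are assumptions made for this example.

```python
from urllib.parse import quote
from xml.sax.saxutils import escape

# Reserved URL characters left as-is; "%" is kept so existing escapes survive.
# "*", "{" and "}" are not listed, so they get percent-encoded.
SAFE_CHARS = ":/?#[]@!$&'()+,;=%"

def sitemap_safe_url(url: str) -> str:
    """Percent-encode unsupported characters, then entity-escape for XML."""
    encoded = quote(url, safe=SAFE_CHARS)        # e.g. "ü" becomes %C3%BC
    return escape(encoded, {"'": "&apos;"})      # & -> &amp;, ' -> &apos;

print(sitemap_safe_url("https://www.example.com/ümlat.html&q=name"))
```

The entity-escaping step is only needed when the XML is written by hand or with string templates; an XML library that escapes text on its own would otherwise escape the ampersand twice.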

- Exceeding the maximum file size

The third problem is frequent on very large sites, with hundreds of thousands of pages and huge amounts of content: the maximum size allowed for a sitemap file is 50,000 URLs and 50 MB uncompressed. When our file exceeds these limits, we must split it into smaller sitemaps, then create a sitemap index file that lists them and submit only that file to Google. This step helps prevent "your server from being overloaded if Google requests your sitemap frequently".

- Including multiple versions of the same URL

This problem is found mostly on sites that have not fully completed the migration from http to https and therefore have pages available in both versions: if both URLs are included in the sitemap, the crawler may perform an incomplete and inaccurate crawl of the site.

- Listing incomplete, relative or inconsistent URLs

It is also essential to tell search engine spiders the exact path of the link to follow, because Googlebot and the other crawlers crawl URLs exactly as they are written. Listing relative, inconsistent or incomplete URLs in the sitemap file is therefore a serious error, because it produces invalid links. In practical terms, we must include the protocol and also (if the web server requires it) the trailing slash: https://www.esempio.it/ is a valid URL for a sitemap, whereas www.esempio.it (no protocol), https://example.com/ (without the www, when the site is actually served at https://www.example.com/) and ./mypage.html (a relative path) are not.

- Including session IDs in the sitemap

The sixth point is rather specific: the FAQ page of the sitemaps project makes it clear that "including session IDs in URLs may result in incomplete and redundant crawling of the site". Including URLs with session IDs in our sitemap can therefore increase duplicate crawling of those links.

- Entering dates incorrectly

We know that search engines, and Google in particular, pay increasing attention to dates on web pages, but handling the "time factor" can sometimes be problematic in a sitemap.

The valid way to enter dates and times under the Sitemaps protocol is the W3C Datetime encoding, which covers every level of precision, from a bare date down to the exact time. Accepted formats include, for example, year-month-day (2019-04-28) or, when specifying the time, 2019-04-28T18:00:15+00:00, which also indicates the reference time zone.

These values can help crawlers avoid re-crawling URLs that have not changed, and therefore reduce bandwidth and CPU requirements for web servers.
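
The two date forms shown above can be produced with Python's standard `datetime` module, as in this small sketch. The helper names are hypothetical; the second function refuses naive datetimes because a time without a time zone would not satisfy the format.

```python
from datetime import date, datetime, timezone

def w3c_date(d: date) -> str:
    """Date-only form, e.g. 2019-04-28."""
    return d.isoformat()

def w3c_datetime(dt: datetime) -> str:
    """Full form with time zone offset, e.g. 2019-04-28T18:00:15+00:00."""
    if dt.tzinfo is None:
        raise ValueError("a timezone-aware datetime is required")
    return dt.replace(microsecond=0).isoformat()

print(w3c_date(date(2019, 4, 28)))
print(w3c_datetime(datetime(2019, 4, 28, 18, 0, 15, tzinfo=timezone.utc)))
```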

- Omitting required XML attributes or tags

This is one of the errors most often flagged by the Sitemaps report in Search Console: some files may lack required XML attributes or required XML tags in one or more entries. Conversely, it is equally wrong to include duplicate tags in the sitemap: in every case we need to correct the problem and the attribute values, then resubmit the sitemap.

- Creating an empty sitemap

We close with two types of error that are as serious as they are glaring, and that in practice undo everything positive and useful we have said about sitemaps. The first is a real beginner's mistake: saving an empty file that contains no URLs and submitting it to Search Console. There is nothing more to add.

- Making a sitemap inaccessible to Google

The last problem concerns a fundamental concept for anyone who works online and tries to compete on search engines: to do its job, the sitemap "must be accessible to Googlebot and must not be blocked by any access requirement", Google writes, so every block or restriction it encounters is an obstacle to the process.

One example is listing URLs that are blocked by robots.txt, which prevents Googlebot from accessing that content, so it cannot be crawled normally. The crawler may also have trouble following certain URLs, especially if they involve too many redirects: the suggestion in this case is to "replace the redirect in your sitemaps with the URLs that should actually be crawled" or, if the redirect is meant to be permanent, to use a proper permanent redirect, as explained in Google's guidelines.

A guide to managing sitemaps with Google Search Console

The tools in Google Search Console, and the Sitemaps report in particular, make it easier to communicate with the search engine's crawlers, as also explained in an episode of the Google Search Console Training web series in which Daniel Waisberg walks us through the topic.

In particular, the Developer Advocate reminds us that Google supports four kinds of extended syntax through which we can provide additional information, useful for describing files and content that are hard to parse in order to improve their indexing: we can describe a URL that includes images or a video, signal alternate languages or geo-targeted versions with hreflang annotations, or (for news sites) use a special variant that indicates the most recent updates.

Google and sitemaps

"If I don't have a sitemap, can Google still find all the pages on my site?" The Search Advocate also answers this frequent question, explaining that a sitemap may not be necessary if we have a relatively small site with appropriate internal linking between pages, because Googlebot should be able to discover the content without problems. We may also not need this file if we have few media files (videos and images) or news pages that we want shown in the relevant search results.

In certain cases, by contrast, a sitemap is useful and necessary to help Google decide what to crawl on our site and when:

- If we have a very large site, the file lets us point out which URLs we most want crawled.

- If pages are isolated or not well linked.

- If we have a new site (and therefore few links from external sites) or content that changes quickly.

- If the site includes a lot of rich media content (videos, images) or appears in Google News.

In any case, the Googler reminds us, using a sitemap does not guarantee that every page will be crawled and indexed, even though in most cases providing this file to the search engine's bots brings benefits (and certainly no disadvantages). Sitemaps also do not replace normal crawling, and URLs left out of the file are not excluded from crawling.

How to create a sitemap according to Google

Ideally, the CMS that runs the site can generate sitemap files automatically through plugins or extensions (and we recall the project to integrate sitemaps by default in WordPress), and Google itself suggests finding a way to create sitemaps automatically rather than manually.

Sitemaps have two limits: they cannot exceed a maximum number of URLs (50,000 per file) or a maximum size (50 MB uncompressed), but if we need more room we can create several sitemaps. We can also submit all of them together in the form of a sitemap index file.

The Sitemaps report in Search Console

To keep track of these resources we can use the Sitemaps report in Search Console, one of the most useful tools for webmasters, which lets us submit a new sitemap for the property, view the submission history, see any errors found during parsing and remove files that are no longer relevant. Removing a file here deletes the sitemap only from Search Console, not from Google's memory: to delete a sitemap we must remove it from our site and serve a 404; after several attempts, Googlebot will stop requesting that URL and will no longer update the sitemap.

The tool lets us manage all the sitemaps of the site, provided they were submitted through Search Console; it does not show files discovered through robots.txt or other methods (which can still be submitted in GSC even if already discovered).

The Sitemaps report contains information on every submitted file, in particular the URL of the file relative to the root of the property, the type or format (such as XML, text, RSS or Atom), the submission date, the date Google last read it, the crawl status (of the submission or of the crawl) and the number of URLs discovered.

How to read sitemap statuses

The report shows three possible statuses for the submission or crawl of the sitemap.

- Success is the ideal situation, because it means the file was loaded and processed correctly, without errors, and that all its URLs will be queued for crawling.

- Has errors means the sitemap could be parsed but contains one or more errors; any URLs that could be parsed will be queued for crawling. Clicking through from the report table reveals more details about the problems and guidance on how to fix them.

- Couldn't fetch means something prevented the file from being retrieved. To find the cause we need to run a live test on the sitemap with the URL Inspection tool.

XML sitemaps: 3 steps to improve SEO

Despite all the care we can take, there are still situations in which the sitemap has issues that can become an obstacle to organic performance. To avoid trouble, there are three fundamental steps to consider, which can also help improve SEO, as suggested by an article published by Search Engine Land. It offers a quick checklist for the sitemaps we provide to search engine crawlers, useful for avoiding errors such as missing important URLs (which might then not be indexed) or including the wrong ones.

Check that priority and relevant URLs are included

The first step is to verify that the sitemap includes all of the site's key URLs, the ones that form the backbone of our online strategy.

An XML sitemap can be static, meaning a snapshot of the website at the moment it was created that is never updated afterwards, or, more effectively, dynamic. A dynamic sitemap is preferable because it updates automatically, but its settings must be checked to make sure that no sections or URLs central to the site are excluded.
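To make the idea of a dynamic sitemap concrete, here is a minimal Python sketch that rebuilds the XML from the current list of pages each time it runs. The `build_sitemap` function, the `pages` list and the example.com URLs are illustrative assumptions; a real site would pull the list from its CMS or database.

```python
from datetime import date
from xml.etree import ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML as a string for (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        # lastmod uses the ISO 8601 date format (YYYY-MM-DD).
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical page list, standing in for a query to the CMS or database.
pages = [
    ("https://www.example.com/", date(2024, 1, 15)),
    ("https://www.example.com/blog/sitemap-guide", date(2024, 2, 3)),
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Because the file is regenerated from live data, a newly published page appears in the sitemap without manual edits; the trade-off is that whatever query produces `pages` must be reviewed so that it does not silently drop key sections.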

To check that all the relevant pages are covered, we can start with a simple search using Google's site: operator, which quickly shows whether our key URLs have been indexed. A more direct method is to use crawling tools to compare the pages that are actually indexed with those listed in the sitemap submitted to the search engine.
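The comparison between sitemap URLs and indexed URLs boils down to two set differences. The sketch below assumes both lists have already been exported (for example from a crawling tool); the `compare_url_sets` function and the sample URLs are hypothetical.

```python
def compare_url_sets(sitemap_urls, indexed_urls):
    """Return the URLs that appear in only one of the two lists."""
    sitemap_set = set(sitemap_urls)
    indexed_set = set(indexed_urls)
    return {
        # Listed in the sitemap but not found in the index.
        "in_sitemap_not_indexed": sorted(sitemap_set - indexed_set),
        # Indexed but missing from the sitemap.
        "indexed_not_in_sitemap": sorted(indexed_set - sitemap_set),
    }


# Hypothetical exports: one from the sitemap, one from an indexing check.
sitemap_urls = [
    "https://www.example.com/",
    "https://www.example.com/services",
    "https://www.example.com/blog/sitemap-guide",
]
indexed_urls = [
    "https://www.example.com/",
    "https://www.example.com/blog/sitemap-guide",
    "https://www.example.com/old-landing-page",
]

report = compare_url_sets(sitemap_urls, indexed_urls)
for label, urls in report.items():
    print(label, urls)
```

URLs in the first group are candidates for a quality or crawlability check; URLs in the second group are either key pages missing from the sitemap or old pages that should no longer be indexed.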

Check for URLs that should be removed

The second check goes in the opposite direction: not every URL belongs in the XML sitemap, and it is better to leave out addresses with certain characteristics, such as:

- URLs that return a 3xx, 4xx or 5xx HTTP status code.

- Canonicalized URLs.

- URLs blocked by robots.txt.

- URLs with a noindex directive.

- Pagination URLs.

- Orphan URLs.

An XML sitemap should normally contain only indexable URLs that return a 200 status code and are linked from within the website. Including other kinds of pages, like those listed above, can waste crawl budget and potentially cause problems, such as orphan URLs being indexed.
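The exclusion criteria above can be expressed as a simple filter over crawl data. This is a sketch under stated assumptions: the `PageRecord` fields are hypothetical and stand in for whatever a crawling tool exports, and the reason labels are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PageRecord:
    """Hypothetical per-URL crawl data; field names are assumptions."""
    url: str
    status_code: int
    canonical_url: Optional[str] = None
    blocked_by_robots: bool = False
    noindex: bool = False
    is_pagination: bool = False
    inbound_internal_links: int = 1


def exclusion_reasons(page):
    """Return the reasons a URL should stay out of the sitemap (empty if none)."""
    reasons = []
    if page.status_code != 200:
        reasons.append(f"status code {page.status_code}")
    if page.canonical_url is not None and page.canonical_url != page.url:
        reasons.append("canonicalized to another URL")
    if page.blocked_by_robots:
        reasons.append("blocked by robots.txt")
    if page.noindex:
        reasons.append("noindex")
    if page.is_pagination:
        reasons.append("pagination URL")
    if page.inbound_internal_links == 0:
        reasons.append("orphan (no internal links)")
    return reasons


pages = [
    PageRecord("https://www.example.com/", 200),
    PageRecord("https://www.example.com/old", 301),
    PageRecord(
        "https://www.example.com/shoes?color=red",
        200,
        canonical_url="https://www.example.com/shoes",
    ),
    PageRecord("https://www.example.com/landing", 200, inbound_internal_links=0),
]

# Keep only the URLs with no reason for exclusion.
sitemap_candidates = [p.url for p in pages if not exclusion_reasons(p)]

for p in pages:
    print(p.url, exclusion_reasons(p) or "OK")
```

Returning the list of reasons, rather than a plain yes/no, makes it easier to decide whether the fix belongs in the sitemap (remove the URL) or on the page itself (for example, adding internal links to an orphan page that deserves to rank).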

Crawling the sitemap with a crawling tool highlights any resources that were included by mistake, so that we can remove them.
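As a rough illustration of what such a crawl does, the following sketch reads the `<loc>` entries from a sitemap and cross-references them with status codes collected by a crawl. The inline `SAMPLE_SITEMAP` and the `crawl_status` dictionary are made-up data; in practice the XML would be downloaded from the site and the status codes would come from the crawling tool.

```python
from xml.etree import ElementTree as ET

# Namespace prefix mapping for the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Made-up sitemap content used in place of a downloaded file.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/old-page</loc></url>
  <url><loc>https://www.example.com/missing</loc></url>
</urlset>"""


def extract_sitemap_urls(sitemap_xml):
    """Return every <loc> value found in a sitemap XML string."""
    root = ET.fromstring(sitemap_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def urls_to_remove(sitemap_xml, crawl_status):
    """Return (url, status) pairs for sitemap URLs that did not answer 200."""
    return [
        (url, crawl_status.get(url))
        for url in extract_sitemap_urls(sitemap_xml)
        if crawl_status.get(url) != 200
    ]


# Hypothetical status codes, as a crawling tool might report them.
crawl_status = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-page": 301,
    "https://www.example.com/missing": 404,
}

flagged = urls_to_remove(SAMPLE_SITEMAP, crawl_status)
for url, status in flagged:
    print(status, url)
```

A URL that the crawl never reached has no entry in `crawl_status`, so it is flagged with a status of `None`, which is a useful prompt to check it by hand.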

Make sure Google has indexed all the URLs in the XML sitemap

The last step concerns how Google has processed our sitemap: to get a better idea of which URLs have actually been indexed, we need to submit the sitemap in Search Console and use the Sitemaps report mentioned earlier together with the Index Coverage report, which show how much of the site the search engine has covered.

In particular, the Index Coverage report lets us check the Errors section (which highlights sitemap problems such as URLs returning a 404 error) and the Excluded URLs section (pages that have not been indexed and do not appear on Google), which also states the reasons for the exclusion.

If these are useful pages, neither duplicated nor blocked, there may be a quality problem, such as the well-known thin content, or an incorrect status code. This applies in particular to pages reported as "Crawled - currently not indexed" (Google has chosen not to add the page to the index for now) and "Discovered - currently not indexed" (Google found the page but has not crawled it yet, typically because crawling was expected to overload the site). In these cases it is worth stepping in with appropriate on-site optimizations.