Google SafeSearch: what it is and how the filter works

The name says it all: SafeSearch is the means by which Google tries to give its users a risk-free search experience, and over the years it has become an essential tool for anyone who wants to keep their online environment safe and clean. The filter protects users by keeping explicit or inappropriate content out of view, but it can also be a real concern for site owners, because being filtered by SafeSearch can limit the visibility and traffic of their pages. That is why it is important to understand what SafeSearch is, how it works, how to turn it on and off, and what its implications are, so that we can approach every situation knowingly and proactively.

What is Google SafeSearch

Google SafeSearch is a system of filters that prevents inappropriate content from being shown to users in search results. The feature uses complex algorithms and machine learning techniques to analyze and classify billions of web pages, so that the results displayed meet predefined safety criteria. In this way it aims to make online searches safer, creating a web environment better suited to minors and to anyone who wants to avoid stumbling upon unpleasant content.

First introduced in 2009, Google's SafeSearch filters let users change their settings to filter explicit content out of search results. The feature matters most for particularly sensitive groups of users (children above all, but also students or adults within an organization), who should not be exposed to explicit material when they use Google's search engine.

In practice, these automatic filters block explicit content, including pornographic images and videos, scenes of violence, and other inappropriate or potentially offensive material. Since an update in August 2023, Google Search flags and blurs such content automatically, as the default setting for users worldwide.

The tool is especially useful for parents who want to protect their children from content unsuitable for their age, but it is just as useful for anyone who wants to avoid unwanted content while browsing, whether at work or in personal use. It can also be turned on or off at any time, which gives users full control over their search experience.

From an SEO perspective, SafeSearch can affect traffic from Google organic search, because results judged explicit are hidden from part of the audience. Beyond standard organic results, the filter also applies to Google Images results, and to videos, ads, and even entire websites. It is therefore a factor to weigh in any strategy, especially for sites whose content may be borderline.

What the Google SafeSearch filter is for

The meaning of SafeSearch is closely tied to online safety. Google introduced the filter to give all users a form of protection against explicit content, and its ability to filter inappropriate content automatically through advanced algorithms and machine learning makes it a powerful ally for parents, educators, network administrators, and careful users.

The system is part of the search engine's broader effort to create a safer browsing environment. Since 2009, SafeSearch has become a key tool for millions of users around the world and an important step in limiting the uncontrolled spread of inappropriate content on the Internet. Google has shown that it takes user protection seriously, especially for younger users, and that it is willing to invest significant resources in a safe search experience.

Although it can serve several purposes, the main goal of SafeSearch is to protect users. This protection is crucial for parents and educators who want to create a safe environment for young people. In the workplace, enabling SafeSearch on company devices can help maintain professional standards by keeping employees from reaching inappropriate content during working hours. More generally, SafeSearch is useful for anyone who wants a browsing experience free of unwelcome surprises.

Who is affected by SafeSearch?

Many users prefer not to see explicit content in their search results, says the official Mountain View documentation, which serves as a guide to how SafeSearch works and how to troubleshoot the most common problems.

In general, it is important to understand that SafeSearch does not concern end users only: it also has implications for website owners.

For users, SafeSearch is a valuable ally for safe browsing; for those who run a website it can be a challenge, which is why it is essential to know how Google classifies content. If a site contains material that could be filtered, or publishes resources that Google's algorithms may read as explicit, the owner could see a significant impact on organic traffic, especially if the target audience includes groups that commonly use SafeSearch, such as families and schools.

How SafeSearch works: the explicit content filter in the SERPs

In practical terms, Google's SafeSearch filters let users change a setting to help filter explicit content and keep it out of their search results.

Specifically, SafeSearch is designed to filter results that lead to visual depictions of:

- Sexually explicit content of any kind, including pornography

- Nudity

- Photorealistic sex toys

- Escort services or sexual dating

- Violence or gore

- Links to pages with explicit content

The guide stresses that SafeSearch is designed specifically to filter pages that publish images or videos containing bare breasts or genitalia, and that it also blocks pages with links, popups, or ads that show or point to explicit content.

SafeSearch relies on Google's automated systems, which use machine learning and a variety of signals to identify explicit content, including the words on the hosting web page and inside links. It may go without saying, but SafeSearch works only on Google search results: it does not block explicit content found through other search engines or on websites visited directly.

So it is not a simple blacklist of banned websites, but an AI-powered system that works in the background to keep examining new and old web pages and determine how appropriate they are. Through machine learning, Google can continually improve the filter and detect new explicit or inappropriate content. This dynamic approach lets SafeSearch keep pace with the evolution of the web and catch content that might escape manual review.

SafeSearch and adult content

When SafeSearch is on, Google automatically excludes adult content from search results, focusing on explicit images, videos, and links. The goal is to minimize the chance of this content being seen by accident. This matters not only for children and teenagers, but also for anyone who wants to avoid potentially shocking or disturbing content. SafeSearch is supported by user reports and ongoing reviews by Google's team, which help keep the filter effective and current.

How to turn on SafeSearch on Google: instructions for users

Let's focus first on the implications for users.

Turning on SafeSearch is a simple process that can be done from any device with a web browser. On desktop, just go to Google's search settings and enable the SafeSearch option. On mobile devices the process is equally simple: open the search engine settings and select the dedicated option. The tool also offers the option of locking SafeSearch with a password, so that the feature stays on and cannot be turned off by children or by anyone using the device without authorization.

SafeSearch settings can therefore be managed in the browser or in your Google Account. There are three possible states (Filter, Blur, or Off), whose names indicate intuitively whether and how the feature is active.

Specifically, Filter blocks any explicit content that is detected. This is the default setting when Google's systems indicate that the user may be under 18, or when a minor signs in with their Google Account.

Blur blurs explicit images, but may still show explicit text and links if they are relevant to the search. As mentioned earlier, it is the default setting worldwide and the new standard for the Google Images experience. In practice, it obscures explicit images that appear in search results even when the SafeSearch filter is not fully on.

When SafeSearch is set to Off, the user sees the results relevant to the search, including explicit ones.

So when a user turns SafeSearch on, Google filters some or all of the pages on sites that contain explicit content (images, videos, and text), based on its algorithmic assessments.

In most cases, turning on the filter is a free, manual choice that can later be changed in the search preferences. In other situations, however, the setting may be locked "upstream", as happens in institutions, schools, IT departments, and other contexts where an administrator can impose the filter on the users below them, or, as mentioned, when minors are browsing.

How SafeSearch works on Google Chrome

Google Chrome offers some specific settings for managing SafeSearch directly from the browser. Through Chrome's Parental Controls, network administrators or parents can configure SafeSearch so that it stays on regardless of changes made by the browser's users. This is particularly useful for schools and businesses, where compliance with safety regulations is crucial. For home users, too, this feature makes it easier to keep SafeSearch on in multi-user setups.

Turning off Google SafeSearch: the steps

Although SafeSearch offers protection, there are situations in which a user may want to turn it off. For example, someone researching for educational or journalistic purposes may need access to information that SafeSearch could filter by mistake. To turn SafeSearch off, simply go back to Google's search settings and deselect the option.

It is important to be aware of the consequences: turning the feature off removes the filter and may expose the user to inappropriate material.

SafeSearch turns on by itself: why it happens and how to fix it

Sometimes SafeSearch can turn on automatically on a device. This happens mainly in two cases: when the device is connected to a network controlled by a school or company that enforces filter settings, or when the filter was configured while installing a parental control app. If we find that SafeSearch has turned itself on and we want to turn it off, we need to check the network settings or the configuration of the parental control application.

Changing these settings may require administrator permissions, so it is important to check that we have full access to the device.
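One way to tell whether the filter is being imposed by the network rather than by the device is to look at DNS. The following is only a sketch, and it rests on an assumption that goes beyond this article: that the network enforces SafeSearch in the way Google documents for administrators, by pointing www.google.com at forcesafesearch.google.com. Networks that use other mechanisms will not be detected by this check.

```python
import socket
from typing import Callable, Set


def resolve_ipv4(hostname: str) -> Set[str]:
    """Return the IPv4 addresses the local resolver reports for hostname."""
    _, _, addresses = socket.gethostbyname_ex(hostname)
    return set(addresses)


def safesearch_forced_by_dns(
    resolve: Callable[[str], Set[str]] = resolve_ipv4,
) -> bool:
    """Guess whether the current network enforces SafeSearch through DNS.

    Assumption: enforcement is done by mapping www.google.com to the
    addresses of forcesafesearch.google.com. If every address returned for
    www.google.com also belongs to forcesafesearch.google.com, the network
    is probably applying that mapping.
    """
    google = resolve("www.google.com")
    forced = resolve("forcesafesearch.google.com")
    return bool(google) and google <= forced
```

Calling safesearch_forced_by_dns() with no arguments queries the resolver of the network we are connected to. The resolve parameter is there only so that the logic can be exercised without network access.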

Google SafeSearch and website management: possible problems and solutions

Google SafeSearch is therefore a key tool for filtering explicit content, but from a different perspective it can be a considerable challenge for those who run a site and want to make sure it is not inadvertently excluded from search results.

It is important to stress that, however sophisticated, the SafeSearch filter is not infallible. Despite Google's efforts, which now include the latest machine learning systems for detecting explicit content that should be filtered, some inappropriate content may slip through. And, more problematic for site owners, sometimes the opposite happens: filters are applied to "innocent" sites.

This second case can be particularly thorny for site owners. The possibility, however remote, that a site is wrongly labeled as inappropriate by SafeSearch should always be kept in mind when assessing fluctuations in traffic and performance, because it clearly has a negative impact on the site's visibility in search results.

When the SafeSearch filter is on and a site's content is considered explicit, its pages stop appearing in the SERPs for certain queries that lead to the site, and Google sends no notice explaining the situation. The Removals tool in Search Console does have a SafeSearch filtering section, but it "only" shows a history of the site's pages that Google users have reported as adult content, so the list may be partial.

The first problem SafeSearch causes for sites may therefore be this: organic visits suddenly shrink in an almost inexplicable way.

Especially if we cover "sensitive" topics (with keywords or images that the algorithm associates with inappropriate content), the fact that users have SafeSearch turned on is a possibility not to be dismissed when analyzing traffic. Sometimes, though, there are borderline situations in which the filter hits pages wrongly judged to be sensitive, causing a loss of visibility for that content in the SERPs and, as a result, a drop in traffic to the site. This happened more often in past years, when SafeSearch could misinterpret certain terms or images and block neutral pages, or hide an entire site even though explicit content affected only a small share of its articles.

It is therefore important to determine whether our content is identified as explicit by the system's algorithms, and we can run two quick checks, at page level and at site level.

To check whether a single page is blocked by SafeSearch, run a search that makes the page appear in Google Search and then turn SafeSearch on: if the page "disappears" from the results, it is probably affected by the SafeSearch filter for that query.

To find out whether the entire site is considered explicit, we can turn SafeSearch on and use the site: search operator (which is also useful for checking that ordinary pages are indexed correctly). If no results appear, Google is effectively filtering the entire site through SafeSearch.

If instead we have seen a drop in traffic on specific URLs and suspect that a wrongly applied filter is the cause, we can use the site: operator on those URLs to check which pages of the domain, if any, are seen as explicit.
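The checks above can be prepared with a small helper that builds the two search URLs to compare by hand. This is a minimal sketch: the function name is made up for illustration, and the use of Google's safe=active URL parameter to request filtered results is an assumption that does not come from the guide. The results still have to be inspected manually in the browser.

```python
from urllib.parse import urlencode


def safesearch_check_url(target: str, safe: bool = True) -> str:
    """Build a Google search URL for a site: query on target.

    target may be a domain ("example.com") or a full URL prefix.
    With safe=True the URL carries safe=active (assumed to request
    filtered results); with safe=False the parameter is left out and
    the SafeSearch setting of the browser or account applies.
    """
    params = {"q": f"site:{target}"}
    if safe:
        params["safe"] = "active"
    return "https://www.google.com/search?" + urlencode(params)


if __name__ == "__main__":
    # Open both URLs and compare which pages are listed.
    print(safesearch_check_url("example.com"))
    print(safesearch_check_url("example.com", safe=False))
```

If pages listed in the second search are missing from the first, they are likely being treated as explicit for that query.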

How to optimize a site for SafeSearch

Fortunately, as owners or managers of a website, we have some ways to help Google understand the nature of the site and its content. In particular, we can follow the steps described in the official guide, which improve how SafeSearch filters are applied to our project.

Specifically, the guide presents four methods for protecting a site that publishes adult content, or any content that could be considered explicit, and for allowing Google to understand the nature of the site and to tell these topics apart from any "safe" sections more accurately: using the rating meta tag, grouping explicit content in a separate location, allowing Google to fetch video content files, and allowing Googlebot to crawl without age verification.

These steps serve to identify explicit pages and, essentially, to have SafeSearch filters applied correctly to the site, which is the surest way to avoid unexpected and unpleasant situations. As Google also explains, they ensure that users see the results they want or expect to find, without being surprised when they visit the sites shown in search results. At the same time, they help Google's systems recognize that the site is not explicit as a whole, but also publishes non-explicit content.

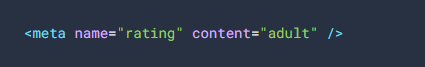

- Add metadata to pages with explicit content

Google's first recommendation is to add metadata to pages with explicit content. One of the strongest signals the search engine's systems use to identify such pages is publishers marking them manually with the rating meta tag or with an HTTP response header.

Besides content="adult", Google also recognizes and accepts content="RTA-5042-1996-1400-1577-RTA", which is an equivalent and equally valid way of providing the same information (there is no need to add both tags).

The tag should be added to every page with explicit content. According to Google, this is the only thing to do "if the site has only a relatively small amount of explicit content". For example, if a site of several hundred pages has a few pages with explicit content, it is generally enough to mark those pages, and no other steps are needed, such as grouping the content on a subdomain.

In some cases, if we use a CMS such as Wix, WordPress, or Blogger, we may not be able to edit the HTML directly, or we may choose not to. The CMS may instead have a search engine settings page or some other mechanism for telling search engines about meta tags.
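To confirm that a page really carries the marking, for instance after setting it through a CMS, the HTML can be checked with a few lines of code. The sketch below uses only Python's standard library; the class and function names are illustrative, and it looks only at the meta tag, not at the HTTP response header.

```python
from html.parser import HTMLParser

# The two values the guide says Google accepts for the rating meta tag.
ADULT_RATINGS = {"adult", "rta-5042-1996-1400-1577-rta"}


class RatingMetaParser(HTMLParser):
    """Records whether the page declares an adult rating via a meta tag."""

    def __init__(self) -> None:
        super().__init__()
        self.is_marked_adult = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        name = attributes.get("name", "").strip().lower()
        content = attributes.get("content", "").strip().lower()
        if name == "rating" and content in ADULT_RATINGS:
            self.is_marked_adult = True


def page_is_marked_adult(html: str) -> bool:
    """Return True if html contains <meta name="rating" content="adult">
    or the equivalent RTA label."""
    parser = RatingMetaParser()
    parser.feed(html)
    return parser.is_marked_adult


if __name__ == "__main__":
    sample = '<html><head><meta name="rating" content="adult"></head></html>'
    print(page_is_marked_adult(sample))
```

Running the function over the pages we know to be explicit is a quick way to spot any that were left unmarked.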

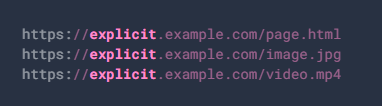

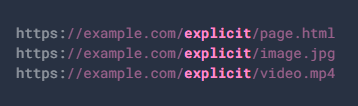

- Group explicit pages in a separate location

The second way to help Google focus its SafeSearch filter concerns site structure, and it suits sites that publish significant amounts of both explicit and non-explicit content. In practice, it means separating the two kinds of content and making the distinction evident at the structural level as well, using a different subdomain or a separate directory.

For example, the guide explains, all explicit content can be placed on a separate domain or subdomain.

Alternatively, all explicit content can be grouped in a separate directory.

The documentation clarifies that there is no need to use the word "explicit" in a folder or domain name: all that matters is that the content is grouped and kept apart from non-explicit content. Without this separation, Google's systems might "determine that the entire site seems explicit in nature" and therefore might "filter the whole site when SafeSearch is on, even if some content may not be explicit".
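As an illustration of this grouping, the sketch below audits a list of pages against a chosen layout. Everything in it is hypothetical: the adult.example.com subdomain, the /adult/ directory, and the idea that we already know which pages are explicit (for example from a CMS export). The guide prescribes no particular names.

```python
from typing import Dict, List
from urllib.parse import urlsplit

# Hypothetical layout: explicit content lives on one subdomain
# and/or under one directory. Any other names would work as well.
EXPLICIT_HOSTS = {"adult.example.com"}
EXPLICIT_PATH_PREFIXES = ("/adult/",)


def in_explicit_section(url: str) -> bool:
    """Return True if url falls inside the area reserved for explicit content."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    return host in EXPLICIT_HOSTS or parts.path.startswith(EXPLICIT_PATH_PREFIXES)


def misplaced_pages(pages: Dict[str, bool]) -> List[str]:
    """Given a mapping of URL -> is the page explicit, list the URLs whose
    location does not match: explicit pages outside the reserved area, or
    non-explicit pages inside it."""
    return [url for url, explicit in pages.items()
            if explicit != in_explicit_section(url)]
```

An empty result from misplaced_pages means the explicit and non-explicit parts of the site are cleanly separated under the chosen layout.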

- Allow Google to fetch video content files

Another useful step in optimizing a site for SafeSearch is to allow Googlebot to fetch video files, so that Google can understand the video content and offer a better experience to users who do not want or do not expect to see explicit results.

This information is also used to better identify potential violations of the policies on child sexual abuse and exploitation.

If we do not allow the embedded video file to be fetched, and if SafeSearch's automated systems indicate that the page may contain child sexual abuse material or other prohibited media, Google may limit or prevent the discoverability of explicit pages.

- Allow Googlebot to crawl without age verification

The last option concerns content protected by a mandatory age gate: in these cases, Google expressly recommends allowing Googlebot to crawl without triggering age verification. To do this, we can verify that a request really comes from Googlebot and then serve the content without the age gate.
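Verifying a Googlebot request is usually done with a reverse DNS lookup followed by a forward lookup. The sketch below follows that approach, with some assumptions: the list of accepted domains covers only googlebot.com and google.com, the function names are illustrative, and results are not cached, which a production server would want to do.

```python
import socket
from typing import Callable, List

# Assumption: genuine Googlebot hosts resolve under one of these domains.
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def _reverse_dns(ip: str) -> str:
    """Return the host name that the IP address points back to."""
    return socket.gethostbyaddr(ip)[0]


def _forward_dns(hostname: str) -> List[str]:
    """Return the IPv4 addresses that the host name resolves to."""
    return socket.gethostbyname_ex(hostname)[2]


def is_verified_googlebot(
    ip: str,
    reverse: Callable[[str], str] = _reverse_dns,
    forward: Callable[[str], List[str]] = _forward_dns,
) -> bool:
    """Check a client IP with a reverse lookup plus a confirming forward lookup.

    The IP must map to a Google crawler host name, and that host name must
    resolve back to the same IP. The user-agent string alone proves nothing,
    because anyone can send it.
    """
    try:
        host = reverse(ip).rstrip(".").lower()
        if not host.endswith(GOOGLE_CRAWLER_DOMAINS):
            return False
        return ip in forward(host)
    except OSError:
        # Covers failed lookups (socket.herror and socket.gaierror).
        return False
```

A server would call is_verified_googlebot(client_ip) only for requests whose user agent claims to be Googlebot, and skip the age gate when it returns True; every other visitor still goes through verification.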

Identifying and fixing problems with SafeSearch

As mentioned, Google's systems are not (yet) infallible, and the algorithms may wrongly flag neutral content as explicit even after we have made the suggested changes.

Before sending a "request for help" that calls for manual intervention by the company's staff, we need to go through a series of preliminary points, in particular:

- Wait up to 2-3 months after making a change, because Google's classifiers may need that long to process it.

- Publishing blurred explicit images on a page can still lead to the resource being considered explicit, if the blur effect can be undone or if the image links to an unblurred version.

- The presence of nudity for any reason, even to illustrate a medical procedure, can trigger the filter, because the intent "doesn't negate the explicit nature of that content".

- The site may be considered explicit if it publishes user-generated content that is explicit, or if it contains explicit content injected by hackers using hidden keywords, cloaking, or other illicit techniques.

- Explicit pages are not eligible for some search result features, such as [rich snippets](https://www.seozoom.it/rich-results-snippet-google-seo/), featured snippets, or video previews.

- With the live URL test in Search Console's URL Inspection tool, we can check that Googlebot is able to crawl without triggering any age verification.

The guide then clarifies one last point: SafeSearch relies on automated systems, and the automated decisions can be overturned only when the site has clearly been misclassified by SafeSearch, which is wrongly filtering the published content.

If we believe this is our case, and if at least 2-3 months have passed since we followed the instructions for optimizing the site, we can request a review by filling out a dedicated form published online.