Robots Meta Tags and Robots.txt: best practices for optimization

For anyone without a background in technical SEO, the terms robots.txt and robots meta tag can be confusing and may seem to refer to the same thing. In reality the two are quite different, although they share one trait: both are instructions addressed to search engine robots. Let's look at why they matter and at the best practices that help avoid mistakes.

Robots.txt and robots meta tags: differences and uses

There is a fundamental difference between the robots.txt file and robots meta tags, as we explained in our dedicated article on the topic. The crawler instructions in robots.txt apply to the entire site, whereas robots meta tags apply only to the individual page that contains them.

As a Search Engine Journal article explains, neither tool is inherently better than the other from an SEO perspective; experience and skills determine which method you prefer in a given case. For example, author Anna Crowe admits to using robots meta tags in many areas, while “other SEO professionals might simply prefer the simplicity of the robots.txt file”.

What a robots file does

A robots.txt file is part of the Robots Exclusion Protocol (REP) and tells crawlers (such as Googlebot) which parts of a site they may crawl.

Google uses Googlebot to crawl websites and record information about them in order to decide how to rank them in search results. You can find the robots.txt file of any site by appending /robots.txt to the site's root address, like this:

www.mywebsite.com/robots.txt
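As a small illustration of that rule, the sketch below derives the robots.txt address from any page URL on the same host. The function name is our own and the example URL simply reuses the placeholder domain above.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address for the site that hosts page_url."""
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError("expected an absolute URL such as https://www.mywebsite.com/")
    # robots.txt lives at the root of the host, whatever the page path is.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.mywebsite.com/blog/post?id=3"))
# prints https://www.mywebsite.com/robots.txt
```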

The file’s instructions

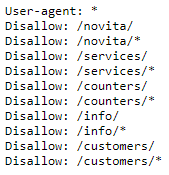

The first field is the user-agent. An asterisk (*) means that the instructions apply to every bot that visits the site, without exception; alternatively, you can name a single crawler and give it its own instructions.

The path after “Disallow” tells the robot which categories or sections of the site it must stay away from, while the “Allow” field lists paths that may be crawled, typically exceptions inside a disallowed section.
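To see how user-agent groups and the Allow and Disallow fields interact, here is a minimal sketch based on Python's standard urllib.robotparser module. The sample rules, bot names and URLs are invented for illustration. Keep in mind that this parser applies the first matching rule in a group, which is why Allow is listed before Disallow here; individual search engines may resolve overlapping rules differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for every bot, one specific to Googlebot.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Allow: /private/press/
Disallow: /private/
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that is already in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(SAMPLE_ROBOTS_TXT)
for agent, url in [
    ("Googlebot", "https://www.mywebsite.com/private/press/kit.html"),
    ("Googlebot", "https://www.mywebsite.com/private/data.html"),
    ("OtherBot", "https://www.mywebsite.com/private/press/kit.html"),
    ("OtherBot", "https://www.mywebsite.com/index.html"),
]:
    print(agent, url, rules.can_fetch(agent, url))
```

With these sample rules, Googlebot may fetch the press kit but not the rest of /private/, while any other bot is kept out of /private/ entirely.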

The value of the robots.txt file

In the author's SEO consulting experience, clients often complain, after a site migration or the launch of a new project, that they still see no ranking improvements after six months; 60 percent of the time, the problem lies in an outdated robots.txt file.

In practical terms, in almost six out of ten of these cases the robots.txt file looks like this:

User-agent: *

Disallow: /

This instruction blocks all web crawlers from every page of the site.
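A leftover rule like this is easy to detect automatically. The following sketch, again using the standard urllib.robotparser module, reports whether a given robots.txt text keeps a crawler away from the site root. The function name and the default user-agent are our own choices, and checking only "/" is a quick symptom test for a blanket block, not a full audit.

```python
from urllib.robotparser import RobotFileParser


def blocks_homepage(robots_txt: str, user_agent: str = "Googlebot") -> bool:
    """Return True if robots_txt forbids user_agent from fetching "/"."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


leftover = "User-agent: *\nDisallow: /\n"
open_site = "User-agent: *\nDisallow:\n"
print(blocks_homepage(leftover), blocks_homepage(open_site))
```

A check of this kind could run after every migration or launch, before anyone starts wondering why rankings are not moving.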

Another reason why robots.txt is important is Google’s crawl budget: especially on a large site with low-quality pages that we don’t want Google to crawl, we can block those pages with a disallow in the robots.txt file. This frees up part of Googlebot's crawl budget, so it can spend its time on the high-quality pages we want to rank in the SERPs.

In July 2019 Google announced its intention to work on an official standard for robots.txt. The Robots Exclusion Protocol has since been published as RFC 9309 (2022), but much of the day-to-day implementation still relies on the classic best practices.

Suggestions for the management of the robots.txt file

The file's instructions are therefore crucial for SEO, but they can also cause headaches, especially for those less comfortable with the technical side. As mentioned, search engines crawl and index the site based on what they find in the robots.txt file, which is written using directives and pattern expressions.

These are some of the most common robots.txt directives:

- User-agent: * – This is the first line of a robots.txt file and opens the set of rules that tell crawlers what you want crawled on the site. The asterisk, as mentioned, addresses all spiders.

- User-agent: Googlebot – The instructions that follow apply only to Google's spider.

- Disallow: / – This tells crawlers not to crawl any part of the site.

- Disallow: – This tells crawlers that they may crawl the entire site.

- Disallow: /staging/ – This instructs crawlers to ignore the staging site.

- Disallow: /ebooks/*.pdf – This instructs crawlers to ignore all PDF files under /ebooks/, which may cause duplicate content problems.

- User-agent: Googlebot followed by Disallow: /images/ – This tells only the Googlebot crawler to ignore everything in the /images/ directory.

- * – This is a wildcard character that represents any sequence of characters.

- $ – This matches the end of the URL.
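The two pattern characters are easier to grasp with a small experiment. The sketch below translates a rule path into a regular expression and tests it against a few URL paths. It is our own simplified model of the wildcard behavior described above, not any search engine's actual matcher, and the example paths are hypothetical.

```python
import re


def rule_to_regex(rule_path: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape the literal pieces, then let each "*" match any run of characters.
    body = ".*".join(re.escape(piece) for piece in rule_path.split("*"))
    # "$" pins the pattern to the end of the URL; otherwise it is a prefix match.
    return re.compile(body + (r"\Z" if anchored else ""))


def rule_matches(rule_path: str, url_path: str) -> bool:
    """Return True if url_path falls under rule_path (matched from the start)."""
    return rule_to_regex(rule_path).match(url_path) is not None


for rule, path in [
    ("/ebooks/*.pdf", "/ebooks/2020/guide.pdf"),
    ("/ebooks/*.pdf", "/ebooks/guide.pdf?download=1"),
    ("/ebooks/*.pdf$", "/ebooks/guide.pdf?download=1"),
    ("/staging/", "/blog/staging/"),
]:
    print(rule, path, rule_matches(rule, path))
```

Only the third and fourth checks fail to match: the $ rejects a URL that continues after .pdf, and a rule is always compared from the beginning of the path.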

Before creating the robots.txt file, there are a few other points to remember:

- Format the robots.txt correctly. The structure follows this scheme:

User-agent → Disallow → Allow → Host → Sitemap

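This lets search engine spiders read the rules for categories and web pages in the right order. Note that Host is a non-standard directive that not all crawlers support; Google, for example, ignores it.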

- Make sure that each path indicated with “Allow:” or “Disallow:” is placed on a separate line; do not list several paths on one line separated by spaces.

- Always name the file in lowercase letters: robots.txt.

- Do not use special characters except * and $; other characters are not recognized.

- Create separate robots.txt files for the various subdomains.

- Use # to leave comments in your robots.txt file. Crawlers ignore everything that follows a # on a line.

- If a page is disallowed in robots.txt, link equity will not pass through it.

- Never use robots.txt to protect or block sensitive data: the file is publicly readable and does not restrict access.
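Several of these points can be checked mechanically. The sketch below is a deliberately small linter of our own design: it strips comments, accepts only the fields named in the scheme above, and flags rules that put several paths on one line. The messages and the sample file are illustrative assumptions, and a clean result does not guarantee that a real crawler will read the file the way you intend.

```python
# Fields taken from the scheme above; anything else is reported as unknown.
KNOWN_FIELDS = {"user-agent", "disallow", "allow", "host", "sitemap"}


def lint_robots_txt(text: str) -> list[tuple[int, str]]:
    """Return (line number, message) pairs for suspicious robots.txt lines."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        field, separator, value = line.partition(":")
        field, value = field.strip().lower(), value.strip()
        if not separator:
            problems.append((number, "missing ':' separator"))
        elif field not in KNOWN_FIELDS:
            problems.append((number, f"unknown field '{field}'"))
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append((number, "rule appears before any User-agent line"))
            if " " in value:
                problems.append((number, "several paths on one line; use one per line"))
            if value and not value.startswith(("/", "*")):
                problems.append((number, "path should start with '/'"))
    return problems


sample = """\
# staging is private
User-agent: *
Disallow: /staging/ /drafts/
Dissalow: /tmp/
Allow: /public/  # fine
"""
for line_number, message in lint_robots_txt(sample):
    print(line_number, message)
```

On this sample the linter reports line 3 (two paths on one line) and line 4 (a misspelled field), and accepts the rest.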

What to hide with the file

Robots.txt files are often used to keep directories, categories or specific pages out of the SERPs, simply by using the “disallow” directive. According to Crowe, the pages most commonly hidden include:

- Pages with duplicate content (often printer-friendly versions).

- Pagination pages.

- Dynamic pages of products and services.

- Account pages.

- Admin pages.

- Shopping cart.

- Chat.

- Thank you page.
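If the sections to hide are kept in a list, the corresponding rules can be generated rather than typed by hand. This is a minimal sketch; the paths and the sitemap URL are hypothetical placeholders, since real directory names depend on the site.

```python
def build_robots_txt(disallowed_paths, user_agent="*", sitemap_url=None):
    """Build robots.txt text with one Disallow line per path."""
    lines = [f"User-agent: {user_agent}"]
    for path in disallowed_paths:
        if not path.startswith("/"):
            raise ValueError(f"path must start with '/': {path!r}")
        lines.append(f"Disallow: {path}")
    if sitemap_url:
        # A blank line separates the rule group from the sitemap reference.
        lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


# Hypothetical paths for some of the page types listed above.
print(build_robots_txt(["/account/", "/admin/", "/cart/", "/thank-you/"]))
```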

How robots meta tags work

Also called meta robots directives, robots meta tags are snippets of HTML code added to the <head> section of a web page that tell search engine crawlers how to crawl and index that specific page.

These elements consist of two parts: the first is name="", which identifies the user-agent; the second is content="", which tells robots how to behave.
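To make the two parts concrete, the sketch below reads a page's HTML with Python's standard html.parser module and collects the content values for each user-agent name. The class name, the default list of names to look for and the sample page are our own assumptions.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in robots-style <meta> tags."""

    def __init__(self, agents=("robots", "googlebot")):
        super().__init__()
        self.agents = {agent.lower() for agent in agents}
        self.directives = {}  # user-agent name -> list of directive values

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {key: (value or "") for key, value in attrs}
        name = attributes.get("name", "").strip().lower()
        if name not in self.agents:
            return
        # The content attribute holds a comma-separated list of directives.
        values = [v.strip().lower() for v in attributes.get("content", "").split(",")]
        self.directives.setdefault(name, []).extend(v for v in values if v)


def robots_directives(html: str) -> dict:
    """Return the robots directives declared in an HTML document."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


page = (
    "<html><head><title>Thanks</title>"
    '<meta name="description" content="Order confirmation">'
    '<meta name="googlebot" content="NOINDEX, nofollow">'
    "</head><body></body></html>"
)
print(robots_directives(page))
```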

There are two types of elements:

- Robots meta tags.

They are commonly used by SEOs and let you tell user-agents how to treat a specific page.

For example,

<meta name="googlebot" content="noindex, nofollow">

tells Googlebot not to index the page and not to follow any of the links on it.

This page will therefore not appear in the SERPs; such commands are useful, for example, for a thank you page.

If you use different directives for different user-agents, you will need to use separate tags for each bot.

It is also essential not to place the robots meta tags outside the <head> section.

- X-Robots-Tag

The X-Robots-Tag lets you do the same thing as the meta tag, but within the headers of an HTTP response.

It offers more functionality than robots meta tags, but you will need access to the .php, .htaccess or server configuration files.

For instance, Crowe explains, if you want to block an image or video but not the entire page, use the X-Robots-Tag.
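The article mentions .php and .htaccess; purely as an illustration in Python, the sketch below shows the same idea as a small WSGI wrapper that adds an X-Robots-Tag header to responses for selected file types. The function names, the extension list and the demo application are all hypothetical, and a real site would more likely set the header in its server configuration.

```python
def add_x_robots_tag(app, extensions=(".pdf", ".png", ".jpg"), value="noindex"):
    """Wrap a WSGI app so responses for matching paths carry X-Robots-Tag."""
    extensions = tuple(ext.lower() for ext in extensions)

    def wrapped(environ, start_response):
        path = environ.get("PATH_INFO", "").lower()

        def tagging_start_response(status, headers, exc_info=None):
            if path.endswith(extensions):
                # Only the file itself is affected, not the pages that embed it.
                headers = list(headers) + [("X-Robots-Tag", value)]
            if exc_info is not None:
                return start_response(status, headers, exc_info)
            return start_response(status, headers)

        return app(environ, tagging_start_response)

    return wrapped


def demo_app(environ, start_response):
    """Stand-in application that returns the same body for every path."""
    start_response("200 OK", [("Content-Type", "application/octet-stream")])
    return [b"payload"]


application = add_x_robots_tag(demo_app)
```

Because the header travels with the HTTP response, it works for files such as images or PDFs that have no <head> section in which to place a meta tag.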

Tips on using robots meta tags

Regardless of how we implement them on the site, there are some best practices to follow in this case too.

- Be consistent with letter case. Search engines recognize attributes, values and parameters in both upper and lower case; the author recommends sticking to lower case to improve code readability, a suggestion SEOs should keep in mind.

- Avoid multiple <meta> tags. Using several robots meta tags can cause conflicts in the code. To set multiple values in the same tag, separate them with commas, as in

<meta name="robots" content="noindex, nofollow">

- Do not use conflicting meta tags, to avoid indexing errors. For example, if the page contains both this tag

<meta name="robots" content="follow">

and this one

<meta name="robots" content="nofollow">

only “nofollow” will be taken into consideration, because robots give priority to the most restrictive value.
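The "most restrictive value wins" rule can be modeled in a few lines. The sketch below merges the content values of several robots meta tags and reports the resulting index and follow behavior. It is a simplified model of the rule as described here, limited to a handful of directives, and actual crawlers may handle edge cases differently.

```python
def effective_robots(contents):
    """Combine several content="..." values; restrictive directives win."""
    index, follow = True, True
    for content in contents:
        for token in content.split(","):
            token = token.strip().lower()
            if token in ("noindex", "none"):
                index = False
            if token in ("nofollow", "none"):
                follow = False
            # Permissive tokens such as "index" or "follow" never undo a restriction.
    return {"index": index, "follow": follow}


print(effective_robots(["follow", "nofollow"]))
# prints {'index': True, 'follow': False}
```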

Making the Robots.txt and Meta Robots work together

One of the biggest mistakes Anna Crowe sees when working on her clients’ websites is that the robots.txt file does not match what is stated in the robots meta tags.

For example, the robots.txt file hides a page from indexing, but the robots meta tags on that page say the opposite.

Based on her experience, the author says that Google gives priority to what is forbidden by the robots.txt file; a crawler that is blocked from a page never fetches it, so it cannot read the meta tags on it. You can, however, eliminate the mismatch between robots meta tags and robots.txt by clearly indicating to search engines which pages must be hidden.
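A mismatch of this kind can be spotted by comparing the two sources side by side. The sketch below takes the robots.txt text and a mapping of page paths to their robots meta content, and lists the pages where a disallow rule prevents the meta tag from ever being read. The function name, the messages and the sample data are illustrative assumptions, and the standard-library parser used here does not support the * and $ wildcards.

```python
from urllib.robotparser import RobotFileParser


def find_mismatches(robots_txt, page_meta, user_agent="Googlebot"):
    """Return {path: message} for pages blocked in robots.txt that also carry meta directives."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    mismatches = {}
    for path, content in page_meta.items():
        if parser.can_fetch(user_agent, path):
            continue  # the crawler can read the page, so its meta tag is honored
        tokens = {token.strip().lower() for token in content.split(",")}
        if tokens & {"noindex", "none"}:
            mismatches[path] = "blocked in robots.txt, so the noindex tag cannot be read"
        else:
            mismatches[path] = "blocked in robots.txt, but the meta tag allows indexing"
    return mismatches


robots_txt = "User-agent: *\nDisallow: /thank-you/\nDisallow: /cart/\n"
page_meta = {
    "/thank-you/": "index, follow",
    "/cart/": "noindex",
    "/blog/": "index, follow",
    "/account/": "noindex",
}
for path, message in find_mismatches(robots_txt, page_meta).items():
    print(path, "->", message)
```

In this sample only /thank-you/ and /cart/ are reported; /account/ is fine because the crawler can reach the page and read its noindex tag.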