Guide to LCP: Largest Contentful Paint, the loading metric

Largest Contentful Paint, or LCP (rendered in Italian as “visualizzazione elemento di maggiori dimensioni”), is a crucial metric for user experience because it measures the time a page takes to render the largest content element in the visible area after a user requests it. Not by chance, Google included LCP among the first Core Web Vitals, the metrics that became a ranking factor with the Page Experience update and that require us to focus on the technical aspects involved in calculating and evaluating the experience our pages provide.

What is LCP, the Largest Contentful Paint metric

LCP is an important, user-centric indicator for measuring the loading speed that users perceive, because it “marks the point in the page load timeline when the page's main content has likely loaded”, says Philip Walton in the valuable guide on web.dev.

In concrete terms, Largest Contentful Paint indicates the time a page needs to render the largest piece of content within the viewport of the device in use; usually, the largest content is an image, a video, or a large block-level text element.

LCP therefore measures how long the user waits for the largest visible element to render, and a fast value “helps reassure the user that the page is useful”, because it signals that the URL is loading.

An indicator of real perceived loading speed

Measuring how quickly the main content of a web page loads and becomes visible to users is one of the toughest challenges for developers, who have tried several approaches: as Walton recounts, older metrics “like load or DOMContentLoaded are not good because they don't necessarily correspond to what the user sees on their screen”, and First Contentful Paint (FCP) itself, although user-centric, “only captures the very beginning of the loading experience” and is therefore too generic, because “if a page shows a splash screen or displays a loading indicator, this moment is not very relevant to the user”.

This is why LCP has prevailed: by timing the rendering of the largest element on the page, it is a more accurate way to measure when the main content of a page has actually loaded as the user perceives it.

LCP and Core Web Vitals

It is no surprise, as noted, that Google chose to include Largest Contentful Paint among the Core Web Vitals that make it possible to measure concretely the experience pages provide: alongside the indicator of visual stability (Cumulative Layout Shift) and the one for interactivity (First Input Delay), Google adds a loading measure to distinguish pages that offer positive experiences from those that fall short.

How to measure LCP: scores and meaning

The Largest Contentful Paint metric therefore reports the render time of the largest image or text block visible within the viewport.

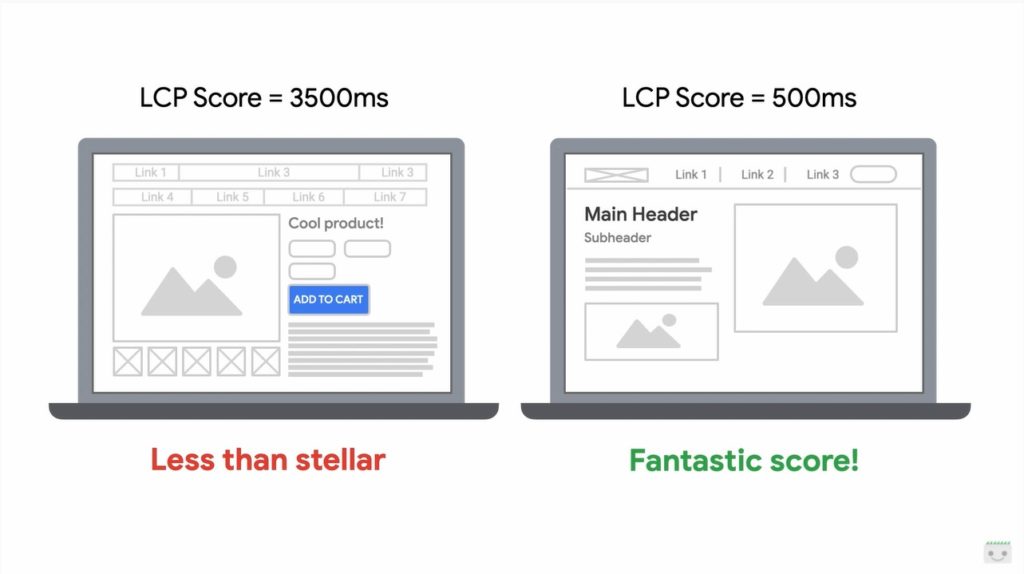

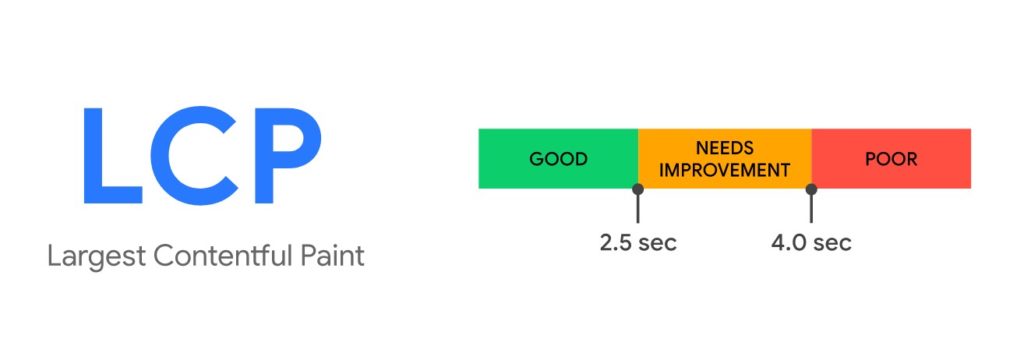

An LCP score is good when it is 2.5 seconds or less, while a value above 4 seconds is poor, and “everything in between needs improvement”, according to the expert.
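The thresholds above can be sketched as a small classifier. This is only an illustration: the function name `rateLcp` and the rating labels are assumptions, and values are taken in milliseconds.

```typescript
// Hypothetical helper: maps an LCP value in milliseconds to a rating.
type LcpRating = "good" | "needs-improvement" | "poor";

function rateLcp(lcpMs: number): LcpRating {
  if (lcpMs <= 2500) return "good"; // 2.5 s or less
  if (lcpMs <= 4000) return "needs-improvement"; // between 2.5 s and 4 s
  return "poor"; // above 4 s
}

console.log(rateLcp(1800), rateLcp(3200), rateLcp(4100));
```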

To provide a good user experience, sites should strive to have LCP occur within the first 2.5 seconds of the page starting to load; to make sure this target is met for most users, a good threshold to measure is the 75th percentile of page loads over a given period (aggregate LCP), segmented across mobile and desktop devices.

In practical terms, to provide this value Google Search Console monitors load times and sorts them in ascending order, from smallest to largest, and takes as its measure the time that corresponds to the 75th percentile, that is, the value within which the fastest 75% of measured times fall. This choice reflects the need to cover a broad majority of the recorded times, so as to have a reliable indication that the loading experience was positive for most users.
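As a rough illustration of the procedure just described, the sketch below sorts a set of load times and picks the 75th percentile. The nearest-rank method and the sample values are assumptions made for the example; the exact aggregation Google applies is not specified here.

```typescript
// Hypothetical helper: nearest-rank percentile over LCP samples (in seconds).
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  // Sort a copy in ascending order, smallest to largest.
  const sorted = samples.slice().sort((a, b) => a - b);
  // Nearest-rank: the smallest value that covers p% of the samples.
  const rank = Math.max(Math.ceil((p / 100) * sorted.length), 1);
  return sorted[rank - 1];
}

// Invented sample data, for illustration only.
const lcpSeconds = [1.2, 1.8, 2.1, 2.4, 3.0, 5.5, 1.0, 2.0];
console.log(percentile(lcpSeconds, 75));
```

With these invented samples, six of the eight loads fall at or below the returned value, which is then compared against the 2.5-second target.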

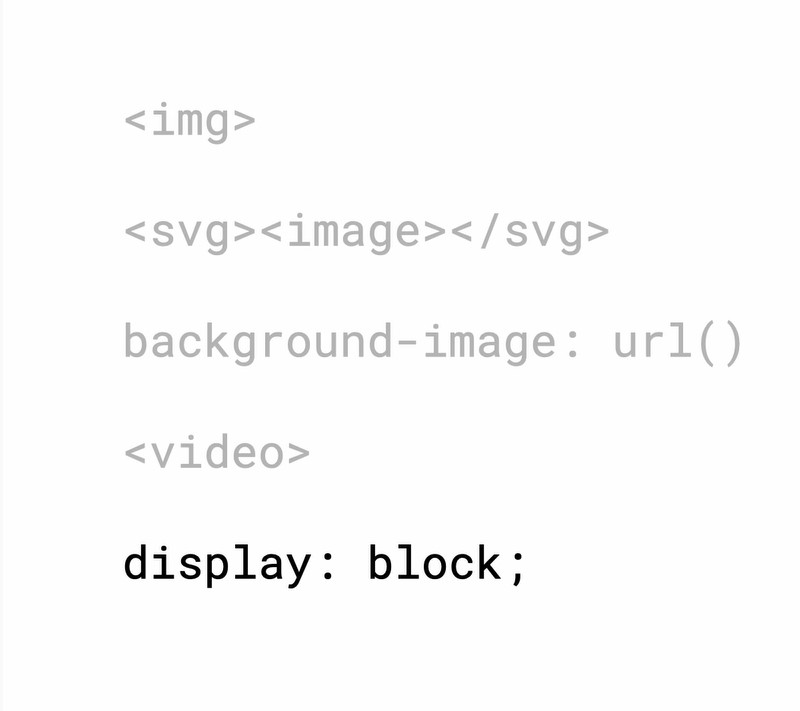

Which elements are considered

As currently specified in the Largest Contentful Paint API, the types of elements considered for Largest Contentful Paint are deliberately few (to keep things simple at the start, with the option of adding more elements in the future), namely:

- <img> elements

- <image> elements inside an <svg> element

- <video> elements (the poster image is used)

- An element with a background image loaded via the url() function (as opposed to a CSS gradient)

- Block-level elements containing text nodes or other inline-level text child elements.

How the size of an element is calculated

The size of the element reported for Largest Contentful Paint is typically “the size that's visible to the user within the viewport”. If the element extends outside the viewport, or if any part of the element is clipped or has non-visible overflow, those portions do not count toward the element's size.
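The clipping rule can be sketched as a rectangle intersection. This is a simplified model, not the browser's actual algorithm: the `Rect` shape and the `visibleArea` name are assumptions, and only clipping by the viewport is modelled (not clipping by ancestors or overflow).

```typescript
// Hypothetical model: element position and size in CSS pixels,
// relative to the top-left corner of the viewport.
interface Rect {
  left: number;
  top: number;
  width: number;
  height: number;
}

// Area of the part of the element that falls inside the viewport.
function visibleArea(el: Rect, viewport: { width: number; height: number }): number {
  const w = Math.min(el.left + el.width, viewport.width) - Math.max(el.left, 0);
  const h = Math.min(el.top + el.height, viewport.height) - Math.max(el.top, 0);
  // Negative overlap means the element is entirely off screen.
  return Math.max(w, 0) * Math.max(h, 0);
}

// A 400x400 element whose lower half extends below a 400x700 viewport.
console.log(visibleArea({ left: 0, top: 500, width: 400, height: 400 }, { width: 400, height: 700 }));
```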

For image elements that have been resized from their intrinsic size (that is, the original size, in Google's terminology), the size reported is either the visible size or the intrinsic size, “whichever is smaller”. For example, images “that are shrunk down to a much smaller size than their intrinsic size will only report the size they're displayed at, whereas images that are stretched or expanded to a larger size will only report their intrinsic sizes”.

To simplify with an example: if we load an image 2048 pixels wide and 1152 pixels high, 2048 × 1152 is its “intrinsic” size. If we resize the image to 640 × 360 pixels, 640 × 360 is its visible size; to calculate the image size, Google uses the smaller of the intrinsic and visible sizes. It is considered a best practice to make the intrinsic size of the image match the visible size, because the resource will then download faster and the LCP score will improve.
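The “whichever is smaller” rule can be illustrated with the numbers from the example. This is a simplified sketch that compares areas; the `Size` type and the `lcpImageArea` name are assumptions.

```typescript
interface Size {
  width: number;
  height: number;
}

// Hypothetical helper: the area counted for an image is the smaller of
// its intrinsic area and the area it is displayed at.
function lcpImageArea(intrinsic: Size, displayed: Size): number {
  const intrinsicArea = intrinsic.width * intrinsic.height;
  const displayedArea = displayed.width * displayed.height;
  return Math.min(intrinsicArea, displayedArea);
}

// A 2048x1152 image shown at 640x360 counts at its displayed size.
console.log(lcpImageArea({ width: 2048, height: 1152 }, { width: 640, height: 360 }));
// A 640x360 image stretched to 2048x1152 counts at its intrinsic size.
console.log(lcpImageArea({ width: 640, height: 360 }, { width: 2048, height: 1152 }));
```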

For text elements, only the size of their text nodes is considered (the smallest rectangle that encloses all the text nodes).

For all elements, any margin, padding, or border applied via CSS is not considered.

Tools for measuring Largest Contentful Paint

LCP is an easy metric to understand: it is enough to look at the web page, determine which text block or image is the largest, and then optimize it, making it smaller or removing anything that may prevent a fast download.

LCP can be measured in the lab or in the field, and it is available in several tools:

- Field tools (actual measurements of a site):

  - Chrome User Experience Report

  - PageSpeed Insights

  - Search Console (Core Web Vitals report)

  - web-vitals JavaScript library

- Lab tools (they provide a virtual score based on a simulated crawl, using algorithms that approximate the network conditions a typical user might encounter on a smartphone):

  - Chrome DevTools

  - Lighthouse

  - WebPageTest

Optimizing Largest Contentful Paint

Having clarified the main descriptive aspects of this metric, let's look at what we can do in practice to improve LCP performance, and so speed up the on-screen rendering of the page's main content to provide a better user experience.

According to Google, the most common causes of a poor LCP are:

- Slow server response times

- Render-blocking JavaScript and CSS

- Slow resource load times

- Client-side rendering

For each of these areas, Houssein Djirdeh (again on web.dev) offers a detailed guide to solving the problem and optimizing Largest Contentful Paint.

Fixing slow server problems

The longer it takes a browser to receive content from the server, the longer it takes to render anything on the screen, so a faster server response time “directly improves every single page-load metric, including LCP”.

The expert recommends focusing first on improving “how and where your server handles your content”, using the Time to First Byte (TTFB) metric to measure server response times and improving the figure in one of the following ways:

- Optimize the server, analyzing and improving the efficiency of server-side code to directly influence the time the browser needs to receive the data (and resolving any server overload problems).

- Route users to a nearby CDN (so that users do not have to wait for network requests to faraway servers).

- Cache assets: caching suits sites with static HTML that does not need to change on every request; it prevents the HTML from being regenerated needlessly and minimizes resource usage.

- Serve HTML pages cache-first: a service worker running in the browser background can intercept requests to the server, caching part or all of the HTML page content and updating the cache only when the content has changed.

- Establish third-party connections early: server requests to third-party origins can also affect LCP, especially if they are needed to display critical content on the page.

Addressing render-blocking JavaScript and CSS

Scripts and stylesheets are both render-blocking resources that delay FCP and, consequently, LCP; Google recommends that we “defer any non-critical JavaScript and CSS to speed up loading of the main content of your web page”.

More precisely, the clear advice is to “remove any unused CSS entirely or move it to another stylesheet if used on a separate page of your site”. One way to do this is to use loadCSS to load files asynchronously for any CSS not needed for the initial render, leveraging rel="preload" and onload.
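The rel="preload" plus onload pattern mentioned above can be sketched as a small markup generator. This is a minimal sketch: the helper names and the stylesheet path are hypothetical, and the `<noscript>` fallback is included for browsers with JavaScript disabled.

```typescript
// Escape characters that would break out of a double-quoted HTML attribute.
function escapeAttr(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;");
}

// Hypothetical helper: markup that downloads a stylesheet without blocking
// rendering, then applies it once the download has finished.
function asyncStylesheet(href: string): string {
  const safe = escapeAttr(href);
  return [
    `<link rel="preload" href="${safe}" as="style" onload="this.onload=null;this.rel='stylesheet'">`,
    `<noscript><link rel="stylesheet" href="${safe}"></noscript>`,
  ].join("\n");
}

// "/css/below-the-fold.css" is an invented path for illustration.
console.log(asyncStylesheet("/css/below-the-fold.css"));
```

This should be reserved for CSS that is not needed for the initial render; styles required by the initial viewport should stay render-blocking or be inlined.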

The work of optimizing JavaScript should be similar, starting with “downloading and serving the minimal amount of necessary JavaScript to users”, because “reducing the amount of blocking JavaScript results in a faster render, and consequently a better LCP”.

Speeding up slow-loading resources

There are a few ways to ensure that critical files load as fast as possible, such as:

- Optimize images.

- Preload important resources (such as fonts, above-the-fold images or videos, and critical-path CSS or JavaScript).

- Compress text files.

- Deliver different assets based on the network connection (adaptive serving).

- Cache assets using a service worker.

Optimizing client-side rendering

Many sites use client-side JavaScript logic to render pages directly in the browser, but this choice can have a negative effect on LCP when a large JavaScript bundle is used.

In particular, if no optimizations are in place to prevent it, users may not see or interact with any content on the page until all the critical JavaScript has finished downloading and executing.

When building a client-side rendered site, Djirdeh suggests three types of optimization:

- Minimize critical JavaScript.

- Use server-side rendering.

- Use pre-rendering.

How to optimize LCP, Google's guide

Measuring the real load time of a page is not easy, both because there are several aspects to consider and because many metrics offer only a partial view; Largest Contentful Paint can help settle the question and let webmasters, developers, and SEOs know precisely how long a page takes to load from the user's point of view. This change of perspective is fundamental, according to Patrick Kettner of Google, who guides us through ways to measure and improve the LCP of our site's pages, in more depth than the guidance given above and than the general guide to optimizing Core Web Vitals.

The LCP metric, for measuring the waiting time users perceive

Earlier systems for measuring page load had the flaw of being partial and focused only on technical aspects: in the new episode of the “Getting started with Page Experience” series on YouTube, the Developer Advocate points out, for example, that “the people who use our site don't care when a DOM event fires”; a document `load` event does not help us, as it remains a “fairly meaningless milestone for a user”, and neither does “first contentful paint, because the first thing that gets painted isn't necessarily always that useful”.

This is where the LCP metric helps, allowing us to “take a step back from the problems and provide an answer to the questions that matter most to our users on today's web”, and in particular to meet the fundamental need to deliver the best possible user experience. With Largest Contentful Paint we know “when a page is usable, or rather when users think it is usable”, which gives us a way to know “the precise point at which most users perceive that a web page has loaded”.

What is LCP, the definition and explanation of the term

Kettner then tries to define what Largest Contentful Paint is: the metric that measures how quickly the main content of a page loads and renders (or paints) most of its visual elements on the screen.

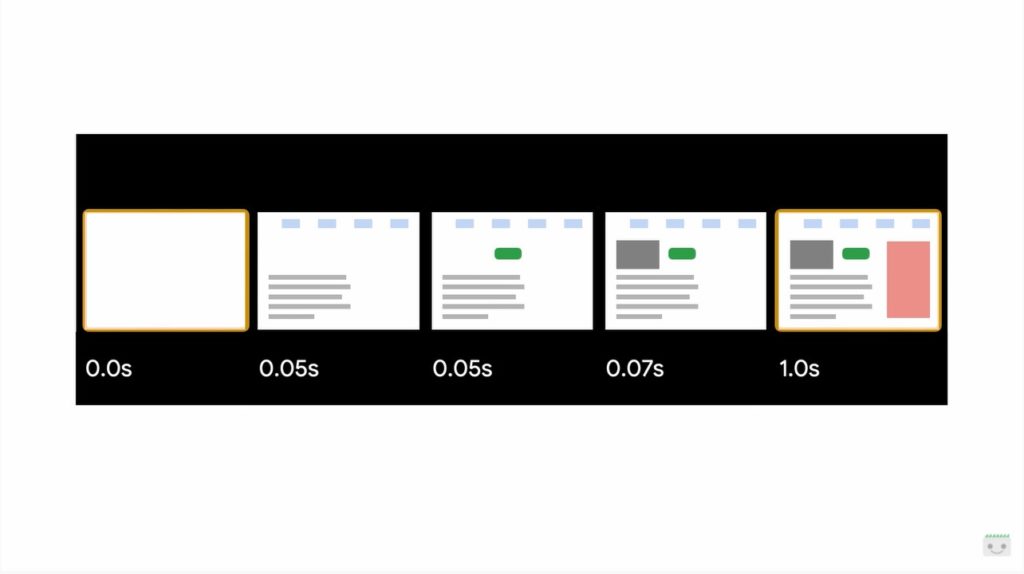

The term paint refers to “a paint event” (browser terminology): a paint event occurs “when pixels on the screen are rendered or painted, any time a pixel changes color on the screen”, so in short, “when a pixel changes, that's a paint”.

As with the other web vital events discussed in this series, paint events “are exposed as performance entries that we track and analyze through the browser's PerformanceObserver API”: every time the browser paints, “we know and understand that every single element of the page is loaded”.

Contentful paints, more precisely, are the paint events that draw the pixels of a handful of DOM elements: image elements, image elements used inside an svg, elements with a background image, video elements, and block-level elements (as in display: block) when they contain text.

In short, a contentful paint is a paint event that paints content, while the largest contentful paint “is when the element that uses the largest amount of pixels of all the elements on the user's screen is painted”.

How Largest Contentful Paint is measured

The LCP value is measured in seconds and indicates, to be precise, the interval between “the first byte that is loaded by the page and the final largest contentful paint event”; as soon as our users touch, tap, or interact with our page, that time window closes and LCP stops being measured.

Whichever element took the highest number of seconds between the first byte and the moment it was painted is the one reported as the LCP for that URL; like every other part of Page Experience, each page of the website has its own LCP score.

What are good LCP values

As with FID, here too the score of one page does not affect that of another, so “the home page may have a poor LCP, but the product pages or the articles could have fantastic results”.

It is important to remember that all these results “are generated and collected from the people who use the site, so if we see that the page has an LCP of one second, that is what real-world users see when they visit the site”. Moreover, this metric is only “one part of page experience, which in turn is only one of the many inputs that Search has in its secret sauce”, says the Googler with some irony.

In any case, from a practical point of view, the target is to ensure that LCP occurs in less than 2.5 seconds for at least 75% of the sessions on the page. This is what separates pages with a good LCP value from those with a bad one, although the number serves only as a reference for “comparing similar sites to understand on which one users can have a better experience”, because there is no real threshold that marks a pass or a fail.

To explain this concept, Kettner gives an example: faced with two pages identical in every respect, one of which takes twice as long to render and paint its content, we would undoubtedly prefer to use the faster one.

How to calculate Largest Contentful Paint

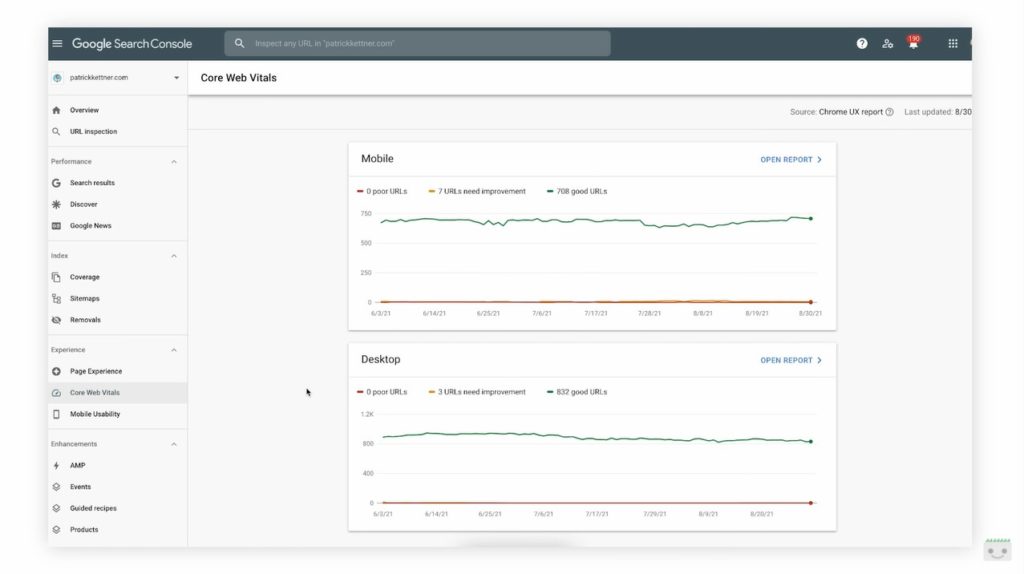

To simplify calculating and tracking LCP we can use the Page Experience report in Search Console, which provides data on all the Core Web Vitals and on Page Experience.

The report shows at a glance whether the property's URLs are under the 2.5-second loading target through green graphs; red lines indicate that pages load more slowly, while yellow traces mean that some URLs need improvement.

One way to see the scores and the reasons behind them right away is the browser's developer tools, by enabling the Core Web Vitals overlay and loading the URL. In doing so, Kettner admits, we also see the “first potential problem with LCP results”, namely that the figures shown here are much faster than those in Search Console.

However, it is worth knowing and remembering that the only LCP score that really matters is the one in the Page Experience report, because the search algorithm will use that same information for its evaluations. This is the value that comes from our real users: so, if we see a discrepancy between the figure in our GSC report and the one we get when viewing the site, we may need to adjust our development setup a little to align it better with the recorded score; we may be working on a powerful development machine, while our users reach the site on five-year-old phones.

For this reason, ideally, when we work on page experience, and especially on the Core Web Vitals parameters, we should test values by simulating devices that are the same as, or at least similar to, those of most of our users (information that can be obtained from the site's analytics). We do not need to know the exact device model, only to understand the most common experience for the people interested in that page of our site (for example, whether they use a high-end or low-end device, an older or newer browser, what their screen size is, and so on). If we have no other tools available, Chrome DevTools lets us emulate mid-range or low-end mobile devices, to get closer to those of real users.

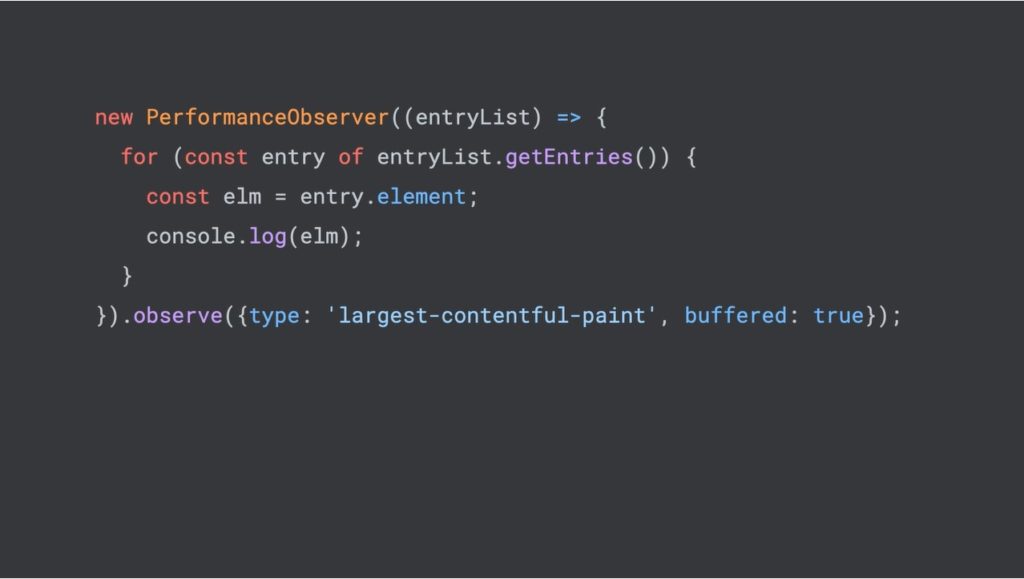

Using the PerformanceObserver API

When our measured LCP gets closer to what is actually in the report, the Googler continues, we can use the PerformanceObserver API to obtain more detailed information about the LCP event, such as which precise element is taking that long to be painted.

By observing the largest-contentful-paint entry type we can iterate over each LCP entry and check its element attribute directly: this gives us the actual live DOM node that triggers the LCP event, which could be any of the DOM types, such as images, videos, or even just text. “Fonts or typefaces can also actually inflate LCP, and that is definitely something to watch out for”, Kettner suggests.
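A minimal sketch of this approach follows. It assumes a browser that exposes the `largest-contentful-paint` entry type through `PerformanceObserver`; the names `LcpEntryLike`, `finalLcp`, and `observeLcp` are hypothetical, and the global is accessed through `globalThis` so the code does nothing in environments without support.

```typescript
// Only the fields used below; real entries carry more information.
interface LcpEntryLike {
  startTime: number; // milliseconds since navigation start
  size: number; // area counted for the element, in pixels
  element?: unknown; // the live DOM node, when available
}

// The browser may report several candidates as the page loads;
// the last one in the list is the most recent LCP candidate.
function finalLcp(entries: LcpEntryLike[]): LcpEntryLike | undefined {
  return entries.length > 0 ? entries[entries.length - 1] : undefined;
}

// Returns false when the entry type is not supported in this environment.
function observeLcp(onCandidate: (entry: LcpEntryLike) => void): boolean {
  const PO = (globalThis as any).PerformanceObserver;
  const supported: string[] = (PO && PO.supportedEntryTypes) || [];
  if (supported.indexOf("largest-contentful-paint") === -1) return false;
  const observer = new PO((list: { getEntries(): LcpEntryLike[] }) => {
    const last = finalLcp(list.getEntries());
    if (last) onCandidate(last);
  });
  // buffered: true also delivers entries recorded before observe() was called.
  observer.observe({ type: "largest-contentful-paint", buffered: true });
  return true;
}

observeLcp((entry) => console.log("LCP candidate", entry.startTime, entry.element));
```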

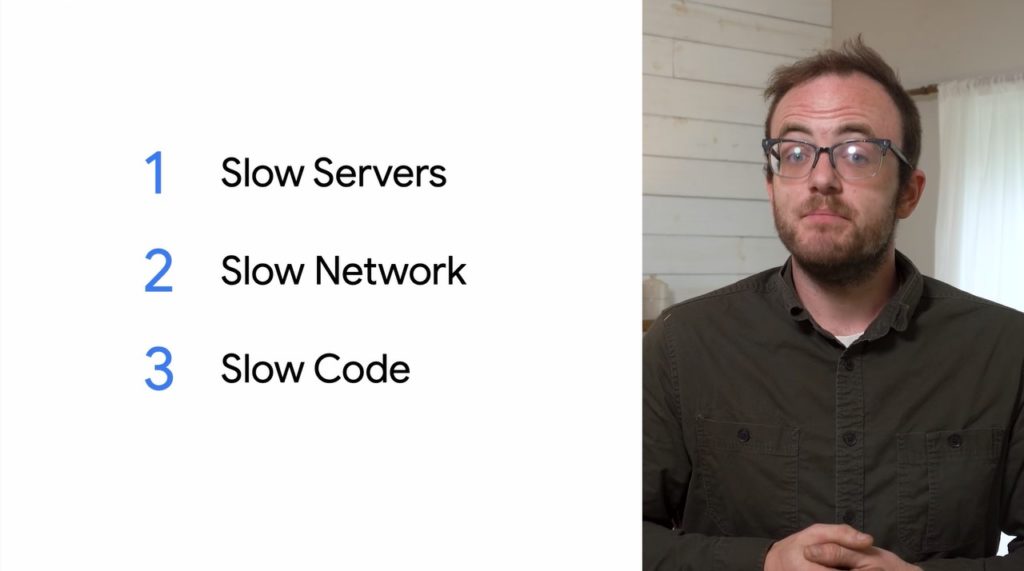

The three main problems with LCP

All the problems that slow down Largest Contentful Paint can be reduced to three categories:

- Slow servers

- Slow networks

- Slow code

How to tackle and fix slow server problems

LCP measures “from the first byte that the browser receives until our users interact with the page”: so, if our server is slow or simply not fully optimized, we are inflating our LCP before we even have a chance to start loading our browser code.

Server optimization is a very broad topic, but to start we can simply reduce server logic and operations to what is truly essential; make sure the CMS, or whatever the back end is, caches pages rather than rebuilding them on every request; and check that static files (such as images, stylesheets, and scripts) are served with long-lived caching headers, to reduce the number of files the server has to send over and over.
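To illustrate the long-lived caching headers mentioned above, here is a small sketch that picks a `Cache-Control` value by file type. The function name, the extension list, and the specific values (one year with `immutable` for static files, `no-cache` for everything else) are assumptions for the example; the long lifetime is only safe if file names change whenever the content changes.

```typescript
// Hypothetical helper: choose a Cache-Control header value for a request path.
function cacheControlFor(path: string): string {
  // Static files: images, stylesheets, scripts, and fonts.
  const isStatic = /\.(?:png|jpe?g|gif|webp|avif|svg|css|js|woff2?)$/i.test(path);
  return isStatic
    ? "public, max-age=31536000, immutable" // cache for one year
    : "no-cache"; // may be stored, but revalidated before each reuse
}

console.log(cacheControlFor("/assets/app.3f9a1c.js"));
console.log(cacheControlFor("/products/shoes"));
```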

How to tackle and fix network problems

Once we have verified that the servers are working in optimal conditions, we can move on to optimizing the next aspect, the network.

“Even if our server is turbocharged and our front end delivers peak performance, if our network is slow it will undermine all that work”, explains Kettner, who considers it essential to use a CDN, or content delivery network, a service that serves copies of our server's content from its own servers.

To give a practical example, if our server is in San Francisco but the user is in Lagos, instead of sending every file across oceans and continents for every single request, it is more convenient and better-performing to use a CDN, which copies those files and stores them on a server located geographically much closer to the end user.

Less distance “means less time spent on the wire, so files load faster, lowering our LCP”, the Google Developer Advocate sums up.

Naturally, the fastest way to satisfy a network request is “to never leave the user's device, so using a service worker is a great choice for making our sites load instantly”. In brief, service workers are special JavaScript files that make it possible to intercept and respond to network requests directly inside the browser, and they appear to give excellent results (also) in lowering the LCP value.

How to tackle and fix front-end code problems

We have “learned that LCP only measures what is on the user's screen and stops being reported after there is interaction with the page”: this means that the element that triggers our Largest Contentful Paint “will probably be in that initial area shown at the top of our page when the URL is first loaded”, which we will call the initial viewport.

Our job is to make sure that whatever is rendered in that initial viewport can be rendered as quickly as possible, through various measures.

The first thing we can do is “remove stuff”: eliminate any scripts and stylesheets in the head of our document that are not used on this page and that can block or slow down the browser while it renders what is actually used; they are “eating up our crucial LCP budget”.

Going further, instead of linking separate files we can add the CSS and JavaScript essential to the initial viewport directly inside the head: this way, the browser does not have to “find our CSS, download it, parse it, and then lay out the page, but can simply jump straight to layout the millisecond it arrives”, squeezing a little more loading performance out of every single page.

Using the async and defer attributes

If we still use scripts on the page, we can use defer and async, two attributes that can be added to any script tag and that act as signals to the browser about the different ways it can speed up its rendering. Kettner explains that “a browser can only do one thing at a time, and by default it will go from the beginning to the end of our code, downloading and parsing everything more or less one line at a time”.

By adding the async attribute to our script tag, we tell the browser that “we won't be relying on any other resource on the page, so it can download the other stuff in the background and run it as soon as it has finished downloading”: if the script is important for the site and must run as soon as possible, the async attribute is an excellent option, second only to inlining the script in the head.

If instead the script “needs to be on the page, but can wait a little bit”, we can use the defer attribute. Scripts that use async can interrupt the browser while it renders other parts of the DOM, because they run the moment they finish downloading; defer “is more polite”, because it tells the browser that it can download and process other things on the page, and the script will not interrupt the browser in order to run, since it waits until the page has been completely parsed.

The defer attribute is a good solution “for everything that is not critical to our initial viewport, such as libraries, video players, or widgets used further down the page”; removing extra network downloads is fine, “but it is not always practical, because some remote resources, such as images or web fonts, cannot be inlined without really bloating the size of our file”, Kettner clarifies.
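The three loading modes described above differ only by one attribute on the script tag, as the sketch below shows. The helper name `scriptTag` and the file paths are invented for illustration, and `src` is assumed to be a trusted, already-escaped URL.

```typescript
type ScriptLoading = "blocking" | "async" | "defer";

// Hypothetical helper: build a script tag for the chosen loading mode.
function scriptTag(src: string, loading: ScriptLoading): string {
  // "blocking" is the default behavior: no extra attribute.
  const attr = loading === "blocking" ? "" : ` ${loading}`;
  return `<script src="${src}"${attr}></script>`;
}

// Needed as soon as possible, independent of other resources: async.
console.log(scriptTag("/js/hero-carousel.js", "async"));
// Needed on the page, but can wait until parsing is done: defer.
console.log(scriptTag("/js/video-player.js", "defer"));
```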

Using dns-prefetch, preconnect, and preload metadata

If some of our resources are hosted on other domains, such as a CDN, we can speed up the work the browser has to do by adding dns-prefetch or preload metadata to our page, two more hints we can share with the browser to “make it do more work at the same time”.

DNS prefetch is a signal “that says I will need to download content from this domain in the future: it may seem a bit silly, but it can actually help quite a lot depending on how our site is structured”.

It is worth remembering that browsers do not know “how to get to every single website in the world, and when they look for something new they use a system called DNS”: normally, when the browser processes our code and discovers the URL of something we want to download, it uses DNS to find the IP address of that site's server and so work out how to reach it on the Internet. Although this process is normally quite fast, it still takes time and “maybe even dozens of trips through space or around the world”: with dns-prefetch we tell the browser to do all that work in the background while it processes the page, so that by the time we reach that URL in the code the DNS lookup has already been resolved, making our site even faster.

This is not the only work to be done: if we are following best practices and loading content only over HTTPS, the browser has to start what is called a handshake with the server before any bytes can be downloaded from it. This handshake happens when a few messages are passed back and forth between the browser and the server to secure any communication between them. Just like DNS, it happens incredibly quickly, but we can make it even faster simply by adding a preconnect statement to our page.

Just like dns-prefetch, this lets the browser know that “it is fine to do that work in the background” and that, as a result, “we can kind of skip it once we get to it in the code”.

Parallelizing these network tasks is particularly useful on slow networks or less powerful devices, two situations that can easily make our LCP take longer than the 2.5-second target. When we have remote content in the initial viewport, dns-prefetch and preconnect can shave even more milliseconds off our value.

A third method is preload, “another piece of metadata that tells the browser that we can actually download and parse the content, and so it will be much more demanding in terms of resources”. We can use preload on scripts, stylesheets, images, videos, and web fonts, and so on practically everything that can trigger an LCP event; however, preloading too many things can bog down the browser, making it perform worse than it would without the attribute, so we must limit it to content that is extremely important, such as “the things in our initial viewport”.
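The three hints discussed in this section are all `<link>` elements placed in the head. The sketch below generates them; the `ResourceHint` type, the `hintTag` name, and the example URLs are assumptions, and `href` values are assumed to be trusted, already-escaped URLs.

```typescript
type ResourceHint =
  | { rel: "dns-prefetch"; href: string }
  | { rel: "preconnect"; href: string }
  | { rel: "preload"; href: string; as: "script" | "style" | "image" | "font" };

// Hypothetical helper: render one resource hint as a <link> tag.
function hintTag(hint: ResourceHint): string {
  if (hint.rel === "preload") {
    // Fonts are fetched in CORS mode, so a font preload needs crossorigin.
    const cors = hint.as === "font" ? " crossorigin" : "";
    return `<link rel="preload" href="${hint.href}" as="${hint.as}"${cors}>`;
  }
  return `<link rel="${hint.rel}" href="${hint.href}">`;
}

// cdn.example.com and the file names are invented for illustration.
console.log(hintTag({ rel: "dns-prefetch", href: "https://cdn.example.com" }));
console.log(hintTag({ rel: "preconnect", href: "https://cdn.example.com" }));
console.log(hintTag({ rel: "preload", href: "https://cdn.example.com/hero.avif", as: "image" }));
```

In keeping with the warning above, preload is best kept to the one or two resources that the initial viewport actually depends on.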

LCP e immagini, i consigli di Google per evitare di sovraccaricare il caricamento

Secondo Kettner, “la causa più comune di un cattivo LCP sono le immagini“, che in genere costituiscono quasi la metà dei byte di una pagina media desktop o mobile”.

Senza entrare troppo nel dettaglio dei consigli di ottimizzazione delle immagini, il Googler comunque ci ricorda alcune best practices di base da seguire:

- Usare immagini efficienti, ovvero non spedire immagini che siano molto più grandi di quanto effettivamente necessitino per essere visualizzate e comprimere le immagini il più possibile. Alcuni strumenti (il video cita espressamente Squoosh) possono effettivamente comprimere automaticamente le immagini e controllare il degrado visivo, consentendoci di ottenere la versione più piccola possibile dell’immagine. Ogni byte risparmiato sul nostro viewport iniziale permette al nostro LCP di avvenire molto più velocemente.

- Aggiungere l’attributo loading=lazy alle immagini che non sono nel viewport iniziale, per segnalare al browser che possiamo ritardare il caricamento di queste immagini perché hanno meno probabilità di essere viste subito. Questo ci permette di liberare altre risorse per contenuti più critici, ma appunto dobbiamo essere certi di non usare l’attributo su contenuti che siano nel viewport iniziale, che altrimenti perderebbero la priorità generando un impatto negativo sul nostro LCP.

- Use modern image formats. “Browsers and servers do content negotiation: basically, every time a browser sends a request to a server it also tells the server what types of content it supports, and this is really useful because we can use it to know whether our users’ browsers can support things like webp or avif”, modern image formats that can reduce image weight by up to 90% compared with traditional jpeg. So, when the user’s browser is parsing our code and finds an image, it sends a request to our CDN or server to download that image and also announces its support for file formats such as webp or avif. On the server, we can check this information and, instead of responding with the jpeg or png files served to other browsers, offer a smaller, modern file format, which can have a huge impact on our LCP. According to Kettner, this can cut double-digit percentages from the bytes that have to be downloaded from our site.
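The content negotiation described in the last tip can be sketched as a small server-side helper that reads the request's `Accept` header and picks the lightest format the browser declares. This is a minimal sketch, not the setup shown in the video: the names `pickImageFormat` and `negotiatedImage`, the preference order (avif, then webp, then jpeg), and the file naming scheme are all assumptions made for the example.

```typescript
type ImageFormat = "avif" | "webp" | "jpeg";

// Extract the media types a client accepts from an Accept header.
// Entries with q=0 mean "not acceptable" and are dropped.
function acceptedTypes(acceptHeader: string): string[] {
  return acceptHeader
    .split(",")
    .map((part) => part.trim().toLowerCase())
    .filter((part) => part.length > 0)
    .filter((part) => !/;\s*q=0(\.0{0,3})?\s*(;|$)/.test(part))
    .map((part) => part.split(";")[0].trim());
}

// Pick the smallest format the browser explicitly says it supports.
// The preference order is an assumption of this sketch.
function pickImageFormat(acceptHeader: string): ImageFormat {
  const types = acceptedTypes(acceptHeader);
  if (types.indexOf("image/avif") !== -1) return "avif";
  if (types.indexOf("image/webp") !== -1) return "webp";
  return "jpeg"; // safe fallback for every other browser
}

interface NegotiatedImage {
  path: string;        // file to serve (hypothetical naming scheme)
  contentType: string; // value for the Content-Type response header
  vary: string;        // value for the Vary response header
}

// Map a base path such as "/img/hero" to the variant to serve.
function negotiatedImage(basePath: string, acceptHeader: string): NegotiatedImage {
  const format = pickImageFormat(acceptHeader);
  const extension = format === "jpeg" ? "jpg" : format;
  return {
    path: `${basePath}.${extension}`,
    contentType: `image/${format}`,
    // Caches must key on Accept, or one browser's variant is served to all.
    vary: "Accept",
  };
}
```

For a request whose header lists `image/avif`, `negotiatedImage("/img/hero", header)` resolves to `/img/hero.avif`; a browser that sends only `*/*` falls back to the jpeg file. Many CDNs can do this negotiation on our behalf, in which case no application code is needed.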

Quick takeaways for understanding LCP

The last part of the video lists some final clarifications that emerge from exploring Largest Contentful Paint.

First, Kettner stresses that LCP does not consider the largest element on the user’s screen, but the element with the most visible pixels. So if, for example, we have a gigantic element that is clipped or whose opacity has been set to zero, those invisible pixels are not counted in its size.
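To make the "visible pixels" idea concrete, here is a deliberately simplified TypeScript model. It only clips an element's rectangle to the viewport and treats zero opacity as invisible; the browser's actual sizing rules are more detailed, and the `Rect` type and `visibleArea` function are assumptions introduced for this illustration.

```typescript
// Axis-aligned rectangle in CSS pixels.
interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Area of `element` that falls inside `viewport`, in square pixels.
// Simplification: only viewport clipping and opacity are modelled here.
function visibleArea(element: Rect, viewport: Rect, opacity = 1): number {
  // A fully transparent element contributes no visible pixels.
  if (opacity === 0) return 0;

  // Intersect the two rectangles edge by edge.
  const left = Math.max(element.x, viewport.x);
  const top = Math.max(element.y, viewport.y);
  const right = Math.min(element.x + element.width, viewport.x + viewport.width);
  const bottom = Math.min(element.y + element.height, viewport.y + viewport.height);

  // Negative extents mean the rectangles do not overlap at all.
  const width = Math.max(0, right - left);
  const height = Math.max(0, bottom - top);
  return width * height;
}
```

In this model, a 3000×400 banner that starts 1000 pixels to the left of a 1000×800 viewport exposes only 1000×400 pixels, so an 800×600 text block that is fully on screen would be the larger candidate even though its layout box is smaller.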

Second, to avoid hurting browser performance while we are measuring it, LCP only looks at an element’s initial position and size: an element can still be counted if it is rendered on screen and then moved away, and, similarly, an element that is rendered off screen and then animated onto the screen will not be counted by LCP.

Third, since Largest Contentful Paint “is what our users are actually seeing”, we might get some unusually bad LCP scores if a page is loaded in a background window or tab. Those pages load much more slowly and with lower priority than foreground tabs, so when in doubt it is best to look in our analytics for patterns that might explain odd results.

The fourth edge case flagged by Kettner concerns the weight that iframes (still) carry: because of the web’s security model, and at least by default, we cannot get LCP information from an iframe on the page, but as far as the browser is concerned that content still affects the LCP value of our URL. So, when monitoring LCP through PerformanceObserver, we might actually miss LCP events that the browser itself reports. We can avoid this by adding code to the iframes so that they report their values to the parent frame. Or, as a simpler and cleaner solution, we can limit iframes and keep them out of the initial viewport, because “they only cause headaches”.

The fifth case clarifies that PerformanceObserver does not emit events when the user reaches the page through the browser’s back or forward buttons, because those pages are cached in the browser and so the same events in the pipeline are not fired. Even so, the browser still reports LCP information in those situations, because for the user it is still a page view.

The last and very important tip is a trick for previewing our LCP scores: we know that the canonical data is updated and made official only every 28 days (like all Page Experience data), but we can track the day-to-day trend using our analytics and the PerformanceObserver code. For example, with Google Analytics we can create a custom event and attach the LCP information to it every time a visitor lands on our page. That way, we will have “up-to-the-minute LCP results to monitor any improvements we are working on, rather than waiting a whole month to find out whether we have even moved the needle”.
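The tracking idea above can be sketched in TypeScript as follows. To keep the logic independent of any specific analytics tool, the observer constructor and the send function are passed in as parameters; `trackLcp`, the event name `"lcp"`, and the small interfaces are assumptions of this sketch, which describe only the parts of the browser API it relies on.

```typescript
// Minimal shapes of the browser APIs this sketch depends on.
interface LcpEntry {
  startTime: number; // milliseconds since navigation start
}
interface EntryList {
  getEntries(): LcpEntry[];
}
interface ObserverLike {
  observe(options: { type: string; buffered?: boolean }): void;
  disconnect(): void;
}
type ObserverCtor = new (callback: (list: EntryList) => void) => ObserverLike;
type SendEvent = (name: string, params: { value: number }) => void;

// Start observing LCP candidates and return a `report` function that sends
// the latest candidate exactly once (for example when the page is hidden).
function trackLcp(Observer: ObserverCtor, send: SendEvent): () => void {
  let latest: number | undefined;
  let reported = false;

  const observer = new Observer((list) => {
    const entries = list.getEntries();
    // The browser can emit several candidates; the last one is the current LCP.
    if (entries.length > 0) {
      latest = entries[entries.length - 1].startTime;
    }
  });
  // `buffered: true` also delivers candidates recorded before we subscribed.
  observer.observe({ type: "largest-contentful-paint", buffered: true });

  return function report(): void {
    const value = latest;
    if (reported || value === undefined) return;
    reported = true;
    observer.disconnect();
    send("lcp", { value: Math.round(value) });
  };
}
```

In a browser, one plausible wiring (assuming the Google Analytics `gtag` snippet is installed on the page) would be `const report = trackLcp(PerformanceObserver, (name, params) => gtag("event", name, params))`, with `report()` called from a `visibilitychange` listener once `document.visibilityState` becomes `"hidden"`. Reporting late matters because the browser may keep replacing the LCP candidate while the page is still loading.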