How to verify if your site is indexed on Google

It seems a trivial question, but the answer matters a great deal for the performance and actual visibility of our online project: “Does my site appear in Google’s search results?” And, consequently, how can we check whether a site’s pages are properly indexed by the search engine? Let’s look at these concepts within the broader topic of indexing, which, as we know, is a key step for any page that hopes to climb Google’s SERPs.

How to check if your site is listed on Google

How do I know that my site is searchable on Google? In other words, are we sure that we are on Google, that we actually appear in its SERPs, and that we can therefore take part in the race for the mythical first position? This question opens the fifth installment of Search for Beginners, the series of short videos curated by Google Search Central on YouTube for people who (still) have little experience with the web, in particular those who own or manage a small online project. Such people, who “have websites, whether for online activities or as a hobby,” may not know how to check their online presence and “sometimes find the site among Google Search results, but sometimes not.”

The importance of indexing on Google

Ranking, or to put it bluntly, placing the pages of our site in the top positions on Google, is in a nutshell the ultimate goal of SEO. Before ranking, however, there is a decisive step that we often take for granted: indexing, which is technically the second phase of how Google Search works, sitting between crawling and ranking.

It all starts with crawling, the process in which automated programs called crawlers, such as Googlebot, scan and download text, images and videos from pages found on the Internet in order to discover new or updated pages to add to Google’s Index. During the next step, indexing, Googlebot processes each page it has crawled to compile this index: a huge, constantly expanding database that includes all the words, content, and media files it sees and their location within each page, storing the information so that it can be retrieved quickly for later queries.

When a user launches a query on Google, the engine’s automated system searches the index for matching pages and returns the results it deems most relevant to the user. This is what we call ranking or positioning: the final process, in which results are sorted hierarchically based on specific criteria, with the goal of returning information relevant to the user’s query.

To clarify the importance of indexing, we can say that having a site that is not in the Index is equivalent to not being present on Google: it is practically like owning a telephone line whose number no one knows. The reason is simple: by entering the Index, our site enters the race for visible positions in SERPs, and it is in the next stage, ranking, that the game is actually played. With indexing we earn the chance to win a place in the sun; with ranking, Google shows that it deems our pages worthy, reliable and relevant for certain queries, and we actually start to receive traffic.

Not all pages make it into the Index, however. The Google Search Essentials guidelines make clear that the factors that can hinder indexing include settings that prevent Googlebot from finding and accessing the page, a page that does not work, and, last but not least, non-indexable content on the page. Other common indexing problems include low-quality content and a site structure that makes crawling complicated (e.g., due to heavy use of JavaScript).
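
One of the settings that keeps a page out of the Index, the noindex directive, can be spotted directly in the page's markup. The following is a minimal sketch in Python, using only the standard library, that looks for a robots meta tag containing noindex. The names RobotsMetaParser and has_noindex and the sample markup are illustrative assumptions, and the check does not cover directives sent through HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in robots/googlebot meta tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so normalize them.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the markup carries a noindex robots directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical sample page, used only to show the call.
sample_page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(sample_page))
```

A page for which this returns True is asking search engines not to index it, whatever the quality of its content.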

Using site: to check whether pages are indexed

So it is not a given that our site can actually be found on Google, regardless of where it might appear in the relevant SERPs, which leads to two more beginner questions: “Why is this happening? And how do I get my site to show up in Search?”

Fortunately, there is a quick and practical way to check whether Google knows about the site and whether its pages are being indexed correctly: the site: command, one of the most useful advanced search operators on Google.

To do this, simply go to the Google home page from any browser and type in the search bar site: followed by the website address: this command specifies that the search is limited only to the indexed pages of that particular site. So, if we enter our website and get a list of several pages, it means that Google knows that this site exists and has already indexed several pages of it.

Check if it is indexed with www and without www

With the site: command, we can also find out if our site is indexed correctly or if a frequent error is present, namely the simultaneous presence on Google of two versions of our domain, with www and without the www (bare address).

Until a few years ago, Google Search Console provided a preferred domain setting; since its removal, the search engine itself automatically selects the canonical domain URL, the one used to index the pages of the site. Webmasters can suggest their preference through some tools (canonical, sitemap or 301 redirect, for example); nevertheless, two versions of the same page, with and without www for example, may still end up in the Index, and then we have to solve the problem.

The problems of the double version of the site

Previously, it was unthinkable to omit the www (which, as we know, stands for World Wide Web) when accessing non-local websites. Today this technical reason, related to domain structure and associated services, no longer applies, and we can even drop the www if we have configured the domain’s DNS settings correctly and if we effectively signal the preferred version to Google via rel canonical or the other methods.

What we need to check is that our site is indexed correctly with only one of the two versions, the one that is the most effective and preferred (with or without www), while the simultaneous presence of both versions can be a problem for SEO.

In fact, not specifying a preferred domain could lead Google to treat the www and non-www versions of the domain as separate references to separate pages, i.e., as two different sites presenting the same content, with potential risks in terms of duplicate content and keyword cannibalization.
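
To illustrate what consolidating on one version means in practice, here is a minimal sketch that maps any www or non-www URL of a site onto a single preferred address, the kind of target a 301 redirect or a canonical tag would point to. The preferred host www.sito.it, the function name canonical_url and the decision to force https are assumptions made for the example, not a recommendation from Google.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumption for the example: the www version is the one we prefer.
PREFERRED_HOST = "www.sito.it"


def strip_www(host: str) -> str:
    """Return the host without a leading 'www.' label."""
    return host[4:] if host.startswith("www.") else host


def canonical_url(url: str, preferred_host: str = PREFERRED_HOST) -> str:
    """Map the www and bare variants of our domain onto the preferred host."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if strip_www(host) != strip_www(preferred_host):
        return url  # a different site: leave it untouched
    # Force https and the preferred host; the fragment is dropped on purpose.
    return urlunsplit(("https", preferred_host, parts.path or "/", parts.query, ""))


print(canonical_url("http://sito.it/blog?x=1"))
```

Every internal link, sitemap entry and redirect rule can then be checked against the value this function returns for it.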

Separate page versions, how to use the site: command

In practical terms, simply go to Google and enter the site: command in the search box, followed by the site’s home address, once with www and once without. For example, site:www.sito.it and site:sito.it.
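
The two searches can also be prepared as ready-to-open links. This is only a sketch: the helper names site_query_url and both_variants are hypothetical, the URLs are meant to be opened manually in a browser, and the code does not query Google or read its results.

```python
from urllib.parse import urlencode


def site_query_url(host: str) -> str:
    """Build a Google search URL for the query site:<host>."""
    return "https://www.google.com/search?" + urlencode({"q": f"site:{host}"})


def both_variants(domain: str) -> dict:
    """Return the search URLs for the bare and the www version of a domain."""
    bare = domain[4:] if domain.startswith("www.") else domain
    return {
        "bare": site_query_url(bare),
        "www": site_query_url("www." + bare),
    }


for label, url in both_variants("sito.it").items():
    print(label, url)
```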

If the site is indexed correctly, we will see snippets from only one of the two versions: the one we set as canonical or the one the search engine itself recognized as such.

If, on the other hand, mixed results appear, it is a red flag: either the site has recently been moved (and Google has not yet digested the change), or we need to check all the settings more carefully to correct the error.

As an additional tip, it is important to remain consistent with the chosen version when, for example, we do link building or communicate through our social channels, sharing only the version on which the site actually loads, whether it is https://sito.it or https://www.sito.it.

Check the way the results are displayed

Another element to pay attention to is how the site appears on Google Search: is the description accurate and representative of what the site offers? Or are there aspects that need improvement? If the latter, you need to work on optimizing what users see when they perform searches for the keywords with which the site is ranked.

Check indexing on Google with Search Console

If we have access to Search Console data, we can use another method to find accurate information about the pages that Google found on the site, those that were actually indexed, and any indexing problems encountered: the Index Coverage Report, which is crucial especially when our online project is of significant size. In particular, Google recommends its use for sites that have more than 500 pages.

In a nutshell, this tool lists all the pages in the property that Google has tried to crawl and index, indicating a status property for each URL (valid, excluded, valid with warnings, error) and then providing details for fixing any problems.

How to use the Index Coverage Status Report in the GSC

A special episode of Google Search Console Training walks us through how this tool works: in it, Daniel Waisberg presents a guide to the Index Coverage Status Report, which lets us find out whether the pages of our site have been crawled and indexed by Google and whether any problems were encountered in the process.

The Index Coverage Status Report was created to give us an overview of all the pages of our site that Google has indexed or tried to index. It also alerts us by email when an indexing problem appears, although (and this is important to know) we do not receive notifications when an existing error gets worse, so the Googler’s first advice is to check the report periodically to make sure everything is in order.

This tool is especially useful for large sites, and the GSC guidelines themselves describe its use as “unnecessary” if our online project has fewer than 500 pages: in that case, it is easier to check whether the site appears on Google through the site: command described earlier.

Theoretically, when the site grows we should notice a gradual increase in the number of valid indexed pages: drops or spikes could result from problems. We should not expect all URLs on our site to be indexed, because our goal should be to have the canonical version of each page indexed; also, it may take Google a few days to index new content added to the site, but we can reduce the delay by manually requesting the process.
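
The idea of watching for drops or spikes can be made concrete with a small check on the number of valid pages noted down at each visit to the report. This is a rough sketch under stated assumptions: the counts are invented for illustration, the 20% threshold is arbitrary, and flag_anomalies is a hypothetical helper, not a Search Console feature.

```python
def flag_anomalies(counts, threshold=0.2):
    """Return (position, relative change) for every jump above the threshold.

    counts is a list of valid indexed page totals, oldest first.
    """
    flagged = []
    for position in range(1, len(counts)):
        previous, current = counts[position - 1], counts[position]
        if previous == 0:
            continue  # a relative change from zero is undefined
        change = (current - previous) / previous
        if abs(change) > threshold:
            flagged.append((position, change))
    return flagged


# Invented weekly totals: steady growth, then a sudden drop in the fourth reading.
weekly_valid_pages = [100, 105, 110, 60, 62]
for position, change in flag_anomalies(weekly_valid_pages):
    print(f"reading {position}: {change:+.0%}")
```

A flagged reading is not proof of a problem, only a prompt to open the report and look at the reasons listed for the affected pages.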

What the reported statuses mean

The default screen of the tool summarizes the indexing errors on the site, but we can also focus directly on any of the four reported statuses (error, valid with warning, excluded, or valid). Issues are grouped and sorted by “status and reason,” so that we can work first on the problems that have the greatest impact on our project.

Thus, there are four possible status values for a page, and each has a specific reason:

- Error. There is a problem that prevents the page from being indexed, so it cannot appear among Google Search results, and thus results in loss of traffic to the site.

This is the case, for example, with a submitted URL that contains a “noindex” tag, or with pages that return a 404 status code or a server error. Problems with pages submitted via sitemap are explicitly indicated to make them easier to correct.

- Valid with warning. The page may or may not appear in Google Search depending on a problem we need to be aware of.

For example, pages blocked in the robots.txt file are shown as a warning because Google is not sure whether the blocking is intentional (in fact, we know that robots.txt directives are not the right way to keep pages out of the index, and we must use other methods instead).

- Valid. The page has been indexed and can appear among the search results: we don’t have to do anything except work on SEO optimization for better ranking!

- Excluded. The page has not been indexed and does not appear on Google, which believes this is an intentional or appropriate choice.

For example, the page contains a noindex directive (intentional choice), it is a duplicate of another page already indexed (appropriate choice), or it was not found because the bot encountered a 404 error.
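
For the robots.txt case mentioned under “Valid with warning,” a quick local check can show whether a given URL is blocked for Googlebot. The sketch below uses Python's standard urllib.robotparser on a made-up robots.txt; the file content, the URLs and the helper name is_crawlable are assumptions. It tells us only whether crawling is allowed, not whether the page is indexed.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content, used only for the example.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the rules in robots_txt let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_crawlable(SAMPLE_ROBOTS_TXT, "https://www.sito.it/private/page.html"))
print(is_crawlable(SAMPLE_ROBOTS_TXT, "https://www.sito.it/blog/"))
```

If a page we want in the Index turns out to be blocked here, the rule is probably unintentional; if we want the page out of the Index, a noindex directive is the appropriate tool instead.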

Understanding and fixing the errors

Pages with errors are the ones on which we should focus first. The report’s table is sorted by the severity of the problem and the number of pages affected, and by clicking on a row we can see how the problem has evolved over time, along with a list of example URLs to investigate further.

Once we have made the corrections (personally or with the help of a developer, to whom we can give limited access to Search Console by sharing the link), we have to validate the changes by clicking on the appropriate button and wait for Google to process our work.

A useful tool for a successful strategy

Ultimately, the Index Coverage Status Report is a useful and fundamental tool because it gives us clearer information about crawling and indexing decisions and about how Google handles the content of our site, but also because it allows us to discover technical problems in time, even on a large scale, and to intervene before they lead to drops in traffic.

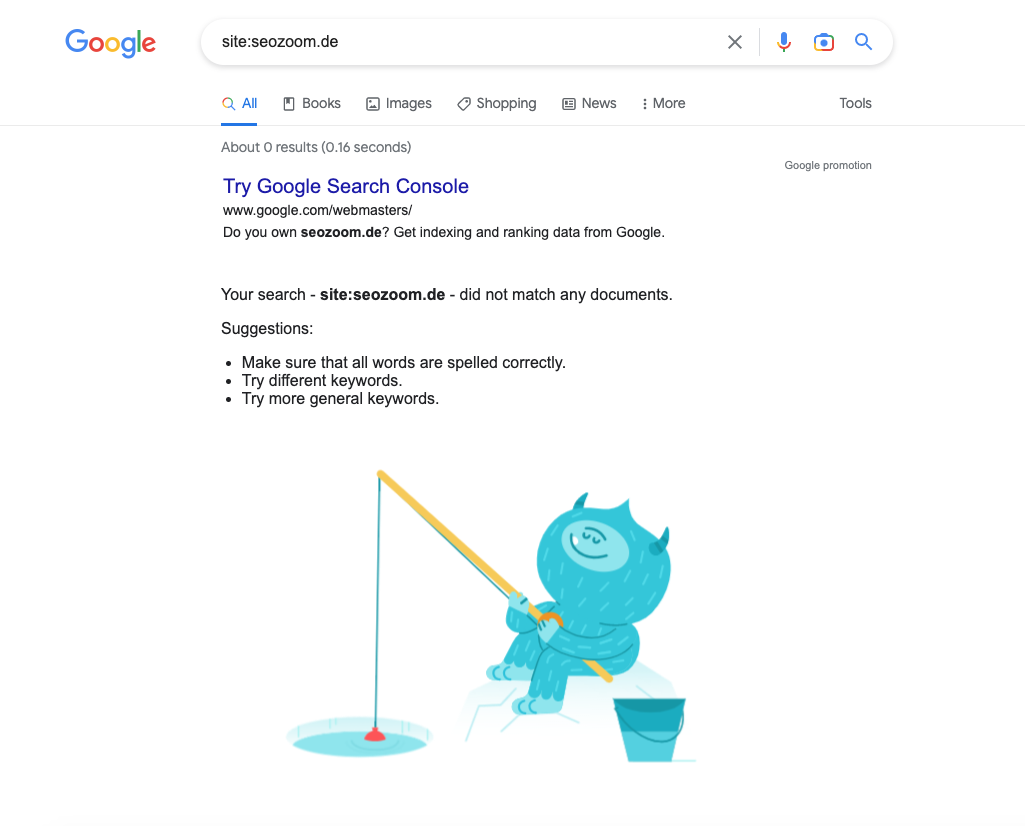

What to do if the site does not appear in Google

But what exactly should we do if the pages of the site do not appear in the results of a site: search, or if there are errors in the Report? In this situation there may be crawling and indexing problems, and we need to take action to fix them.

The first remedy is to submit the sitemap and site URLs through Google Search Console, which as we know is the tool for managing online presence on Google Search.
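
As a reference for this first remedy, a sitemap is simply an XML file listing the URLs we want Google to discover. The sketch below builds a minimal one following the sitemaps.org protocol. The function build_sitemap and the example addresses are hypothetical, and the resulting file still has to be uploaded to the site and submitted in Search Console by hand.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls) -> str:
    """Return a minimal XML sitemap listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Example addresses, assumed for illustration.
sitemap_xml = build_sitemap([
    "https://www.sito.it/",
    "https://www.sito.it/blog/",
])
print(sitemap_xml)
```

Listing only the canonical version of each page keeps the sitemap consistent with the preferred domain discussed earlier.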

It is also possible to test individual URLs using the URL Inspection tool, which provides information about a specific page, clarifies why Google’s indexing of the page is successful or not, and also allows us to check whether a URL is potentially indexable.

If we succeed in correcting the reported problems, Google will know about our website and include our pages within its Index, and then we have solved the technical steps that precede the work to improve its visibility, that is, the interventions with SEO techniques to achieve better results in SERPs. The first steps are quite simple: we can start by checking that the site appears in related searches, “like nice t-shirt, t-shirt store nearby or buy t-shirt online,” and verify that the page shown is actually the best and most relevant one among those on our site. Everything else is SEO.