How and why developers should use Google Search Console

Search Console Training on YouTube, the guide to Google Search Console created by Daniel Waisberg, Search Advocate at Google, returns with a different approach: while the first season focused on some of the most useful tools in the suite for webmasters and SEOs, the new episodes take a more specific angle. The first one covers a precise theme: the GSC tools that can help developers simplify and improve their work.

Google Search Console, advantages for developers too

Because Search Console provides so much information about SEO, developers might think it is not useful for them, Waisberg says, but the episode aims to refute this idea.

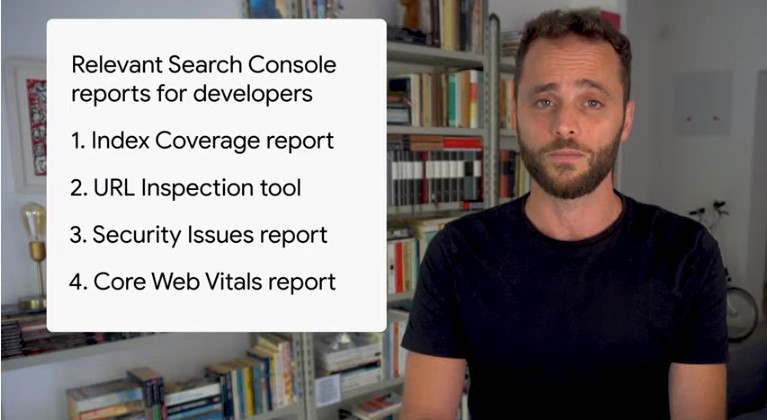

In the video he explains which reports are most useful for helping developers build websites that are “healthy, discoverable and optimized for Google search”, focusing on four tools in particular:

- The Index Coverage report, to find indexing problems across the whole site.

- The URL Inspection tool to debug page-level indexing problems.

- The Security Issues report to find and fix issues affecting the site.

- The new Core Web Vitals Report, which analyzes Core Web Vitals to ensure that the site offers an excellent page experience to users.

Building a findable and indexable site

The first report mentioned in this episode is Index Coverage, because for anyone developing a site it is crucial to know whether Google can find and crawl its pages.

“Small glitches can have a massive effect when it comes to allowing Googlebot to read websites,” says Waisberg. For example, “sometimes we see companies accidentally add a noindex tag to entire websites, or prevent crawling of content with errors in their robots.txt files”.
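Both glitches can be caught before deployment with a small offline check. The following is a minimal sketch using only the Python standard library; the function names `has_noindex` and `is_crawlable` are hypothetical helpers, and Python's `urllib.robotparser` does not reproduce every detail of how Googlebot interprets robots.txt (for example wildcard handling), so treat the result as a first approximation.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the HTML carries a noindex robots meta directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules.can_fetch(user_agent, url)
```

Run against the rendered HTML of a few key templates and the robots.txt file about to be deployed, a check like this can flag a site-wide noindex or a stray `Disallow: /` before Googlebot ever sees it.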

These problems are easy to spot with the Index Coverage report, which Waisberg briefly describes again, giving practical examples of each status it displays.

- Errors prevent the indexing of pages, which therefore will not appear in Google, leading to a potential loss of traffic for the site.

Examples of errors are a 404 or 500 response status code, or a noindex directive on a page submitted in the sitemap.

- Valid with warnings are pages that, depending on the type of problem, may or may not be shown on Google and therefore should be examined.

For example, Google may index pages even though they are blocked by robots.txt: this is flagged as a warning because it is not clear whether you intended to block the page from search results.

- Valid pages are those properly indexed, which can be displayed on Google Search.

- Excluded pages have not been indexed and will not appear in Search, but Google thinks “that this is your intention or that it is the right thing to do”.

For example, the page has a deliberately set noindex directive, or Google considers it a duplicate of another page.
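To make the four statuses concrete, the examples above can be expressed as a toy decision function. This is only an illustrative sketch: `PageSignals` and `classify` are hypothetical names, the rules cover just the cases named in this article, and Google's real classification takes many more signals into account.

```python
from dataclasses import dataclass
from enum import Enum


class CoverageStatus(Enum):
    ERROR = "Error"
    VALID_WITH_WARNINGS = "Valid with warnings"
    VALID = "Valid"
    EXCLUDED = "Excluded"


@dataclass
class PageSignals:
    """Hypothetical per-page facts; these are not fields of any Google API."""
    status_code: int = 200
    indexed: bool = False
    noindex: bool = False
    in_sitemap: bool = False
    blocked_by_robots: bool = False
    duplicate: bool = False


def classify(page: PageSignals) -> CoverageStatus:
    # Error: a 404 or 500 response, or noindex on a page submitted in the sitemap.
    if page.status_code in (404, 500) or (page.noindex and page.in_sitemap):
        return CoverageStatus.ERROR
    # Warning: the page is indexed even though robots.txt blocks it.
    if page.indexed and page.blocked_by_robots:
        return CoverageStatus.VALID_WITH_WARNINGS
    # Excluded: a deliberate noindex, or a duplicate of another page.
    if page.noindex or page.duplicate:
        return CoverageStatus.EXCLUDED
    if page.indexed:
        return CoverageStatus.VALID
    # Simplification: anything else that is not indexed counts as excluded here.
    return CoverageStatus.EXCLUDED
```

The ordering of the checks matters: a noindex directive is an error only when the page was also submitted in the sitemap, otherwise it is read as an intentional exclusion.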

Checking the issues of single pages

To debug a problem with a specific page you can use the URL Inspection tool in Google Search Console, which shows the current index status of the page, lets you test a live URL or ask Google to crawl a specific page, and displays detailed information about the resources loaded by the page.

According to Waisberg, several of its options are especially important for developers, notably Request Indexing, which asks Google to index the page again (useful after the page has changed), and View Crawled Page, which lets you check the HTTP response and the returned HTML code.
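The video covers the tool in the Search Console interface, but the same inspection data can also be requested programmatically through the Search Console URL Inspection API. The sketch below only builds the HTTP request and does not send it; the endpoint and the `inspectionUrl`, `siteUrl` and `languageCode` fields reflect the API as we understand it and should be checked against the current reference, while `build_inspection_request` and the access token are hypothetical placeholders. To our knowledge the API returns index status only and does not offer an equivalent of Request Indexing.

```python
import json
import urllib.request

# Assumed endpoint of the URL Inspection API; verify against the official reference.
INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def build_inspection_request(page_url: str, property_url: str, access_token: str,
                             language_code: str = "en-US") -> urllib.request.Request:
    """Build (but do not send) a request asking for the index status of page_url.

    property_url is the Search Console property that contains the page;
    access_token is an OAuth 2.0 token obtained separately (not shown here).
    """
    body = {
        "inspectionUrl": page_url,
        "siteUrl": property_url,
        "languageCode": language_code,
    }
    return urllib.request.Request(
        INSPECT_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would perform the call; the response is JSON and can be decoded with `json.load`.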

Improving the site’s health

If having a site that Google can find and index is the first step, the second is optimizing the site’s health. Here too GSC offers developers various tools, with key data and guidance for their work.

This is the case with the Security Issues Report, which shows warnings when Google finds that the site may have been hacked or used in ways that could potentially harm a visitor or their device.

Examples of hacking include injecting malicious code into pages to redirect users to another site, or automatically creating pages full of meaningless, keyword-stuffed sentences. An attack may also use social engineering techniques, which trick users into doing something dangerous, such as revealing confidential information or downloading malicious software.

The new Core Web Vitals Report

The last tool presented is the Core Web Vitals Report, which shows how your site’s pages perform based on actual usage data (sometimes called field data).

The report is based on the three metrics Google initially identified for page experience, namely:

- LCP, or Largest Contentful Paint, is the time it takes to render the largest content element visible in the viewport, measured from the moment the user requests the URL. This value is important because it tells the user that the URL is actually loading.

- FID, or First Input Delay, is the time from when a user first interacts with the page (for example by clicking a link or tapping a button) to when the browser responds to that interaction. This is important on pages where the user has to do something, because it measures when the page has become interactive.

- CLS, or Cumulative Layout Shift, is the amount the page layout shifts during the loading phase; the score is rated from 0 to 1, where 0 means no shifting and 1 means the most shifting. It is an important metric because it is very annoying when elements move while a user is trying to interact with the page.
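As a rough illustration of how these three metrics turn into a rating, the sketch below applies the thresholds Google has published for them (they are not stated in the video summary above, so treat them as an assumption to verify): LCP up to 2.5 s, FID up to 100 ms and CLS up to 0.1 count as good, while values above 4 s, 300 ms and 0.25 respectively count as poor. The function names `rate` and `overall` are hypothetical.

```python
# (good_limit, needs_improvement_limit) per metric; values above the second limit are poor.
# Assumed from Google's published Core Web Vitals thresholds.
THRESHOLDS = {
    "LCP": (2.5, 4.0),    # seconds
    "FID": (100, 300),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout shift score
}

SEVERITY = ["good", "needs improvement", "poor"]


def rate(metric: str, value: float) -> str:
    """Rate a single metric value as good, needs improvement or poor."""
    good_limit, improvement_limit = THRESHOLDS[metric]
    if value <= good_limit:
        return "good"
    if value <= improvement_limit:
        return "needs improvement"
    return "poor"


def overall(metrics: dict) -> str:
    """Rate a page by its worst metric, e.g. {"LCP": 2.0, "FID": 250, "CLS": 0.05}."""
    ratings = [rate(name, value) for name, value in metrics.items()]
    return max(ratings, key=SEVERITY.index)
```

Rating a page by its worst metric means a page that loads quickly can still be flagged if, for instance, its layout shifts too much.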

The report is split between mobile and desktop, and each view shows aggregated data with further details. It is important to know that a page is included in the report only if it has a minimum amount of reporting data for any of these metrics.