Guide to the bulk data export from Google Search Console

The bulk data export is a fairly recent feature introduced in Google Search Console, which allows, as you might guess, data to be exported from the platform into Google BigQuery on an ongoing basis. Most likely to benefit from the feature are large websites with tens of thousands of pages, or those receiving traffic from tens of thousands of queries per day, but it is still useful for everyone to learn how to handle this opportunity, and a video from the Search Console Training series walks us through all the necessary steps.

Search Console and data export, a lesson from Google

Leading us through this in-depth study is, as usual, Daniel Waisberg, who in the latest episode of the YouTube lessons is devoted precisely to data exports from Search Console, analyzing the various opportunities available and making a specific focus on collective extraction, called “a powerful solution for archiving and analyzing Search Console data.”

How to export data from Search Console: the ways available to you

The first and easiest way to export data is through the user interface, the Search Advocate confirms us: most reports have an export button that allows us to export up to 1,000 rows.

Second, we can leverage Looker Studio and use the official Search Console connector to create dashboards with performance data. In this case, we can get up to 50,000 rows.

Third, the API allows performance pull, URL checking, sitemaps and site data; again, like Looker Studio, the limit is 50,000 rows.

Finally, the last and most powerful way to export performance data from Search Console is precisely the collective data export, which allows for the largest amount of data via GoogleBigQuery. Aside from anonymous queries, which are always filtered, this function has no row limits: we will get all the queries and pages we have on Search Console.

How to set up and take advantage of collective data export in GSC

After this brief comparison of current data export solutions in GSC, Waisberg gets to the heart of the lesson and shows us how to set up, manage, and leverage bulk data exports.

A bulk data export is a daily scheduled operation to derive Search Console performance data, which is available only to those who own a property in GSC, and includes all the data used by Search Console to generate performance reports. The data is exported to Google BigQuery, where you can run SQL queries for advanced data analysis or even export it to another system.

As we said, this feature is especially useful for large Web sites with tens of thousands of pages, or those receiving traffic from tens of thousands of queries per day, or both.

To use this feature, the Googler anticipates, we need to be familiar with Google Cloud Platform, BigQuery and, of course, Search Console.

Set up a new bulk data export

Before starting a new export, we need to be aware that it may incur costs for us or your organization, Waisberg warns: Google BigQuery has a free tier, but charges fees for some other operations (so need to refer to the service’s official pages).

On the practical side, bulk data export requires performing tasks in both Google Cloud and Search Console; the user interface shown in the video may change over time, but the overall process should remain similar.

The first step is then to prepare the BigQuery account to receive the data and configure the details in Search Console settings. Then we will move on to selecting the project we intend to work on within the Google Cloud console, searching and copying the project ID on the dashboard page; we also take care to verify that the BigQuery API is enabled.

Next, we grant BigQuery Job User and Data Editor permissions to the Search Console service account.

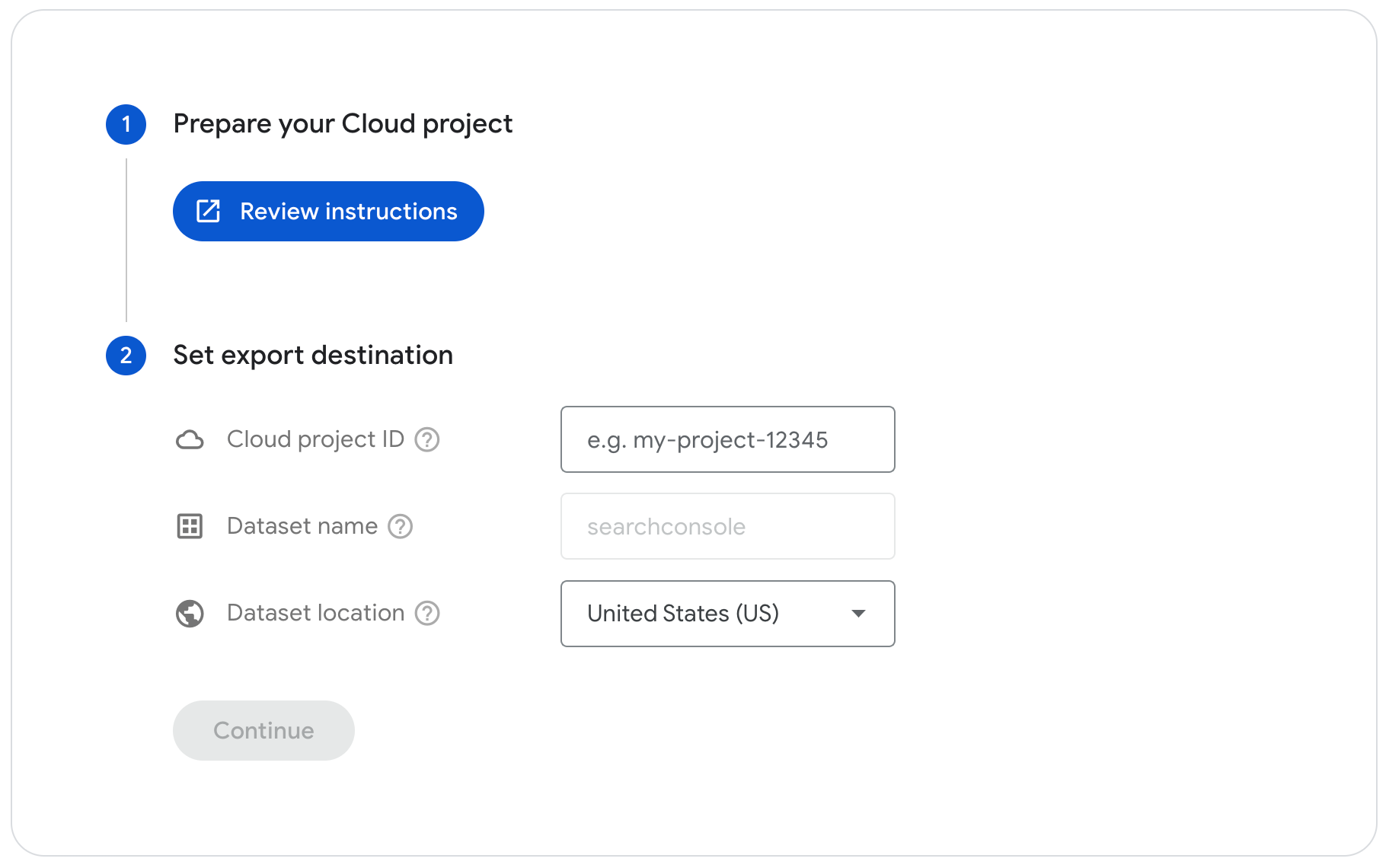

Now we go to the Search Console Settings page and, specifically, to the Collective Data Export setup page; we paste the Google Cloud project ID, select a dataset location from the list (which you will not be able to change later), and confirm the choices to launch a simulation of the export. This test is used to check for any errors in the process: if the simulation fails, in fact, we will receive an immediate warning regarding the problem detected – for example, a problem with access; if, on the other hand, everything works smoothly and the simulation is successful, Search Console should begin the export process within the next 48 hours.

Google considers bulk data export to be a new setup in the site and not a one-time operation, which is why it will send an email notification to all property owners when the setup is complete.

Managing bulk data export

After successful completion of configuration, our exports should arrive at the BigQuery project every day, and Waisberg urges us to pay attention to one element: the data will be accumulated forever per project unless we set an expiration date, and the export will continue until an owner deactivates it on the settings page or Search Console is unable to export the data due to errors-such as no permissions or exceeded quota in the Cloud project, which prevent successful export.

In the event of an error, however, all property owners will be notified both by email and the message box in Search Console. Google will continue to try to export every day for a few days, after which it will stop. We can also choose to monitor exports using BigQuery functionality.

The usefulness of bulk export from Search Console: tables and hints

Bulk data exports include performance data, showing important metrics about the site’s performance in Google Search, including search queries that show the site, page click-through rate, and in which countries we are getting the best results, all on a time basis. This opens Waisberg’s second video on this topic, which goes into detail about using the collective export feature, which allows for similar data to that available with the Search Analytics API, but potentially much larger, depending on the size of the site.

Another premise, basic knowledge of BigQuery and SQL is needed to use these functions, but still the operation is not too complex.

Guidelines for tables

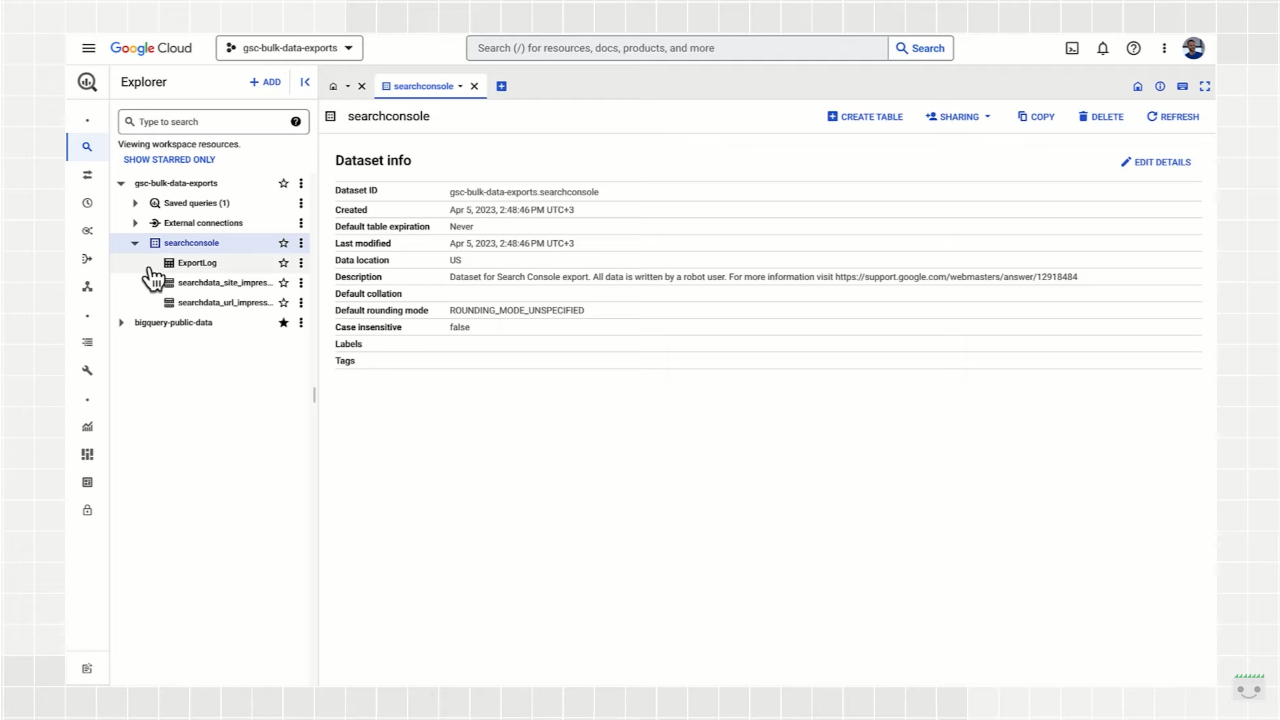

Search Console’s collective data exports create tables in the BigQuery project: each partition refers to a date, and each day we will receive a table update, which is useful for handling optimization work for queries more efficiently, rather than querying the entire table every day.

The first table we see is ExportLog, which is a record of what data has been saved for that day; obviously, unsuccessful export attempts are not recorded here, so they do not allow updating.

The second table is searchdata_site_impression, which contains the performance data for the property aggregated by property; therefore, if a query contains two URLs from the same site, only one impression is counted. In this table we will see fields such as date, search query, a boolean value showing whether the query is anonymous, country, search type, and device type, and for all these variables we can read the number of impressions, clicks, and the value of sum_top_position, which is the sum of the site’s highest position in the search results, where “0” is the first position on the results page.

The third table is searchdata_url_impression and contains all data on site performance aggregated by unique URL, and not at the domain level: this means, therefore, that if a query contains two URLs of the same site, two separate impressions are counted. This table is the largest of the three and, in addition to the fields already in the aggregate table by domain, includes the URL, a Boolean value that shows whether the URL is anonymized for Discover to protect user privacy, and several Boolean fields that indicate whether the URL was displayed with a specific search aspect.

The challenge of handling large data sets is that this activity can become very slow and time-consuming if you’re not careful, which is why Waisberg shares some SQL tips and best practices to apply when querying data.

Best practices for querying and understanding large data sets

Specifically, the Search Advocate explains that there are three very important best practices to follow when querying Search Console data on BigQuery.

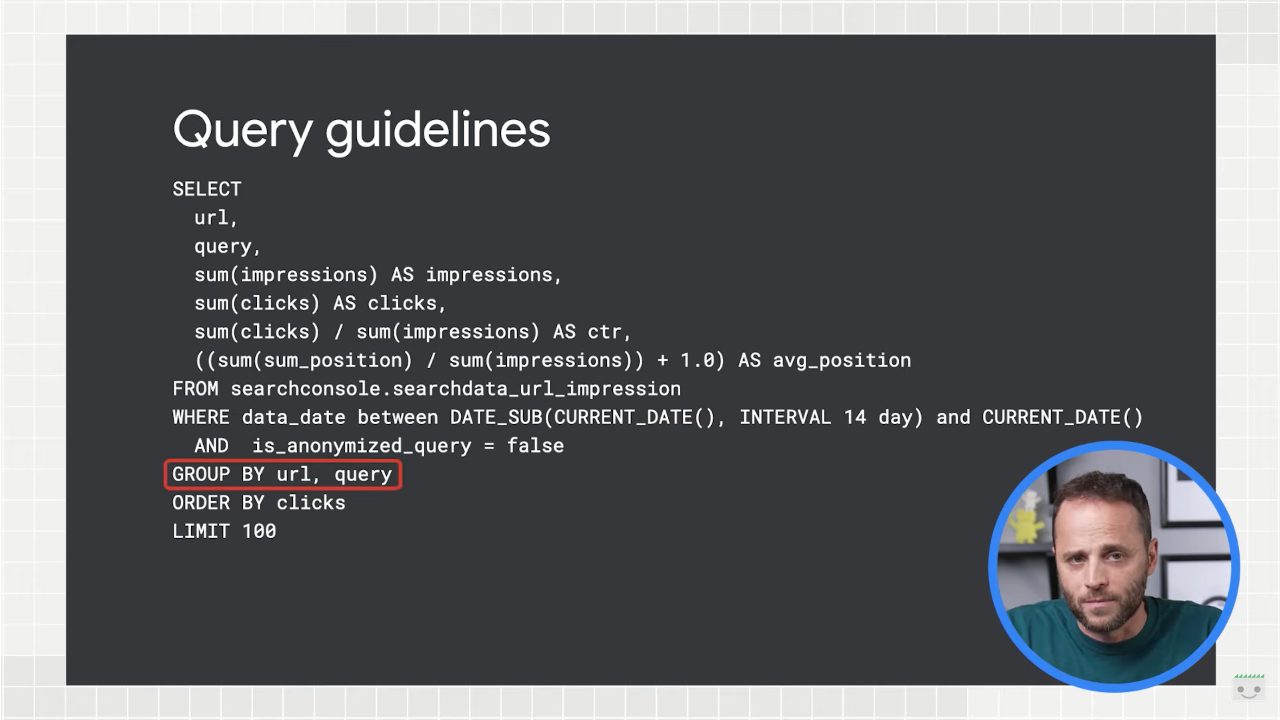

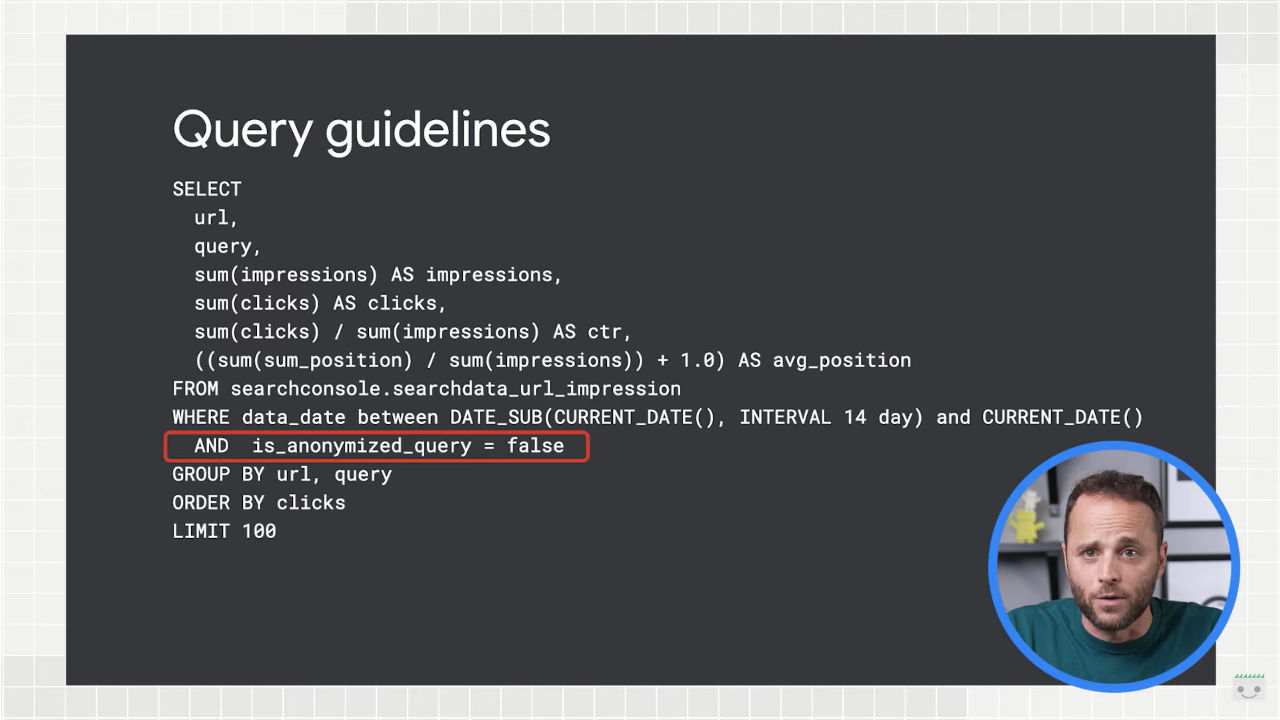

- Always use aggregation functions. There is no guarantee that table rows will be consolidated by date, URL, site, or any combination of keys-for example, we may have a query that appears more than once for the same day, so we should group by query to ensure that all clicks and impressions are actually counted. In the case in the image, the grouping affects both URLs and queries.

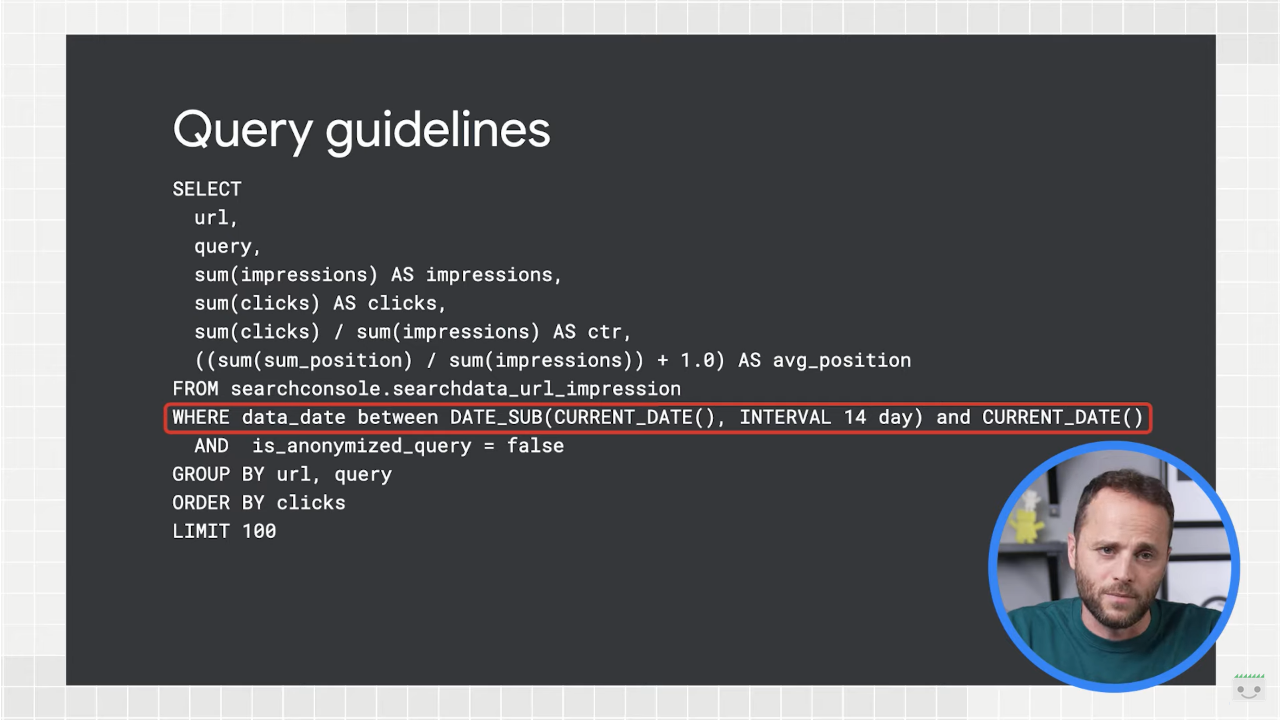

- Limit queries by date whenever possible to speed up queries and save on processing costs. We can do this by using a WHERE clause to limit the date range in the date partition table. In the example, the limitation is set to the last two weeks.

- Filter noquery strings to help reduce the size of the data query. We can do this by extracting data only for rows where the is_anonymized_query field is false.

Having access to so much data makes it all the more useful to “learn or upgrade skills with SQL,” Waisberg says in conclusion: we can do some “beautiful analysis” using this data, such as cluster analysis, machine learning, and other interesting things learned in statistics courses that can help us achieve the “up and to right” goal of exponential growth in returns on a graph.