Google SafeSearch, the filter for explicit content in Search

This topic can confuse site owners and SEOs. Above all, it can hurt the organic traffic of sites that walk a fine line with explicit content, or that at least appear to in the eyes of Google’s algorithms. Let’s talk about SafeSearch, the well-known filter Google introduced to limit the appearance of explicit results and content in its SERPs, and try to understand what it is and how it works.

What is Google SafeSearch

Introduced for the first time in 2009, Google SafeSearch filters are the system through which users can change their browser settings to keep explicit content out of search results. In practice, they are automatic filters that block the display of pornographic or otherwise potentially offensive and inappropriate content. In particular, since the August 2023 update, Google Search automatically detects and blurs such content by default for users worldwide.

This tool is particularly useful for parents who want to protect their children from age-inappropriate content, but it is equally useful for anyone who wants to avoid running into unwanted content while browsing, whether during work hours or personal use. Moreover, it can be turned on or off at any time, giving users complete control over their browsing experience.

From an SEO perspective, this filter can affect traffic from Google organic search, because it can exclude results deemed explicit, which are then hidden from some users. Beyond standard organic results, the SafeSearch filter also applies to images, videos and websites. It is therefore something to evaluate within an SEO strategy, especially for sites that may appear borderline.

What Google’s SafeSearch filter is for

The implementation of this system is part of the search engine’s broader efforts to create a safer browsing environment, and since 2009 the SafeSearch filter has become an essential tool for millions of users worldwide, representing an important step in the fight against the uncontrolled spread of inappropriate content on the Internet. Google has shown that it takes the protection of users, especially younger users, seriously and is willing to invest significant resources to ensure a safe browsing experience.

Many users prefer not to see explicit content in their search results, notes the official Mountain View document. It is a guide page for understanding how SafeSearch works and getting to the bottom of common problems.

How the SERP filter for explicit content works

From a practical standpoint, Google’s SafeSearch filters allow users to change their browser settings to help filter out explicit content and prevent it from being displayed in search results.

Specifically, SafeSearch is designed to filter out results that lead to visual representations of:

- Sexually explicit content of any kind, including pornographic content

- Nudity

- Photorealistic sex toys

- Escort services or sexual encounters

- Violence or bloodshed

- Links to pages with explicit content

The guide points out that SafeSearch is specifically designed to filter pages that show images or videos containing breasts or naked genitalia. It also filters pages with links, pop-ups or ads that show or point to explicit content.

SafeSearch relies on Google’s automated systems, which use machine learning and a variety of signals to identify explicit content, including words on the hosting web page and within links. It may go without saying, but SafeSearch works only on Google search results. It does not block explicit content found on other search engines or on websites visited directly.

How to enable SafeSearch and what the effects of the filter are

SafeSearch settings can be managed within your browser or in your Google Account. There are three possible settings, Filter, Blur and Off. They correspond to different levels of filtering, from the strictest to none at all.

Specifically, Filter blocks any explicit content that is detected. This is the default setting when Google’s systems indicate that the user may be under 18, for example when a minor is signed in to their Google Account.

With Blur, explicit images are blurred, but explicit text and links may still appear if they are relevant to the search. As mentioned above, this is the default setting worldwide and the new standard for the Google Images experience. In short, it obscures explicit images that appear in search results even when the Filter setting is not active.

When SafeSearch is set to Off, the user will see relevant search results, even explicit ones.
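The differences between the three settings are easier to see in a toy Python model that shows how each one treats a single result. It is a simplified sketch, not Google’s actual logic. `SafeSearchSetting` and `render_result` are hypothetical names. The `is_explicit` flag stands in for the verdict of Google’s classifiers, which are not public.

```python
from enum import Enum


class SafeSearchSetting(Enum):
    """The three SafeSearch settings described above."""

    FILTER = "filter"
    BLUR = "blur"
    OFF = "off"


def render_result(setting: SafeSearchSetting, is_explicit: bool, is_image: bool) -> str:
    """Return how one search result is treated under a given setting.

    Toy model only: `is_explicit` stands in for Google's (non-public)
    classifier verdict.
    """
    if not is_explicit or setting is SafeSearchSetting.OFF:
        return "shown"
    if setting is SafeSearchSetting.FILTER:
        return "hidden"
    # Blur: explicit images are blurred, explicit text and links may still appear
    return "blurred" if is_image else "shown"


if __name__ == "__main__":
    for setting in SafeSearchSetting:
        print(setting.name, render_result(setting, is_explicit=True, is_image=True))
```

Under this model, only Off lets explicit images through unchanged. Blur differs from Filter because explicit text results may still appear.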

Therefore, when SafeSearch is active, Google filters some (or all) pages of sites that its algorithms judge to contain explicit content, including images, videos and text.

In most cases, enabling the filter is a free, manual choice that can later be changed in the search settings. In other situations, however, the setting may be locked “upstream”. This happens in institutions, schools, IT departments and other contexts where an administrator enforces it for all users on the network, or, as mentioned, for minors.

Google SafeSearch and website management: possible problems and solutions

It is important to emphasize that the SafeSearch filter, however sophisticated, is not foolproof. Google now applies the latest machine learning systems to detect explicit content, yet some inappropriate content may still slip through the filter. More problematically for site managers, the opposite sometimes happens: filters are applied to “blameless” sites.

This second case can be particularly thorny for site managers. The possibility, however remote, that SafeSearch may wrongly label a site as inappropriate should always be kept in mind when evaluating fluctuations in traffic and returns. Such a mistake can hurt the site’s visibility in search results.

When the SafeSearch filter is active and the site’s content is considered explicit, pages stop appearing in SERPs for certain queries that would lead to the site, and Google sends no communication explaining the situation. Search Console’s Removals tool does have a SafeSearch filtering section. However, it reports “only” a history of site pages that Google users have flagged as adult content, so the list may be incomplete.

The first symptom of a SafeSearch problem, then, may be this: organic visits suddenly drop, almost inexplicably.

Users’ SafeSearch settings should not be ruled out in traffic analysis, especially for “sensitive” topics with keywords or images that the algorithm associates with inappropriate content. There can also be borderline situations where the filter’s trapdoor catches pages misjudged as sensitive. Those pages then lose visibility in the SERPs, and the site loses the traffic they would have brought. This happened more often in the past, when SafeSearch could misinterpret certain terms or images and block neutral pages. It could also hide an entire site even if explicit content affected only a small portion of its articles.

It is therefore important to determine whether our content is identified as explicit by the system’s complex algorithms, and we can perform two quick checks at the page and site level.

To check whether SafeSearch is blocking an individual page, run a search for which the page appears in Google Search and then enable SafeSearch. If the page “disappears” from the results, it is likely affected by the SafeSearch filter for that query.

To find out whether the entire site is considered explicit, we can enable SafeSearch and use the site: search operator, which is also useful for checking that normal pages are indexed correctly. If no results appear with SafeSearch on, but they do appear with it off, Google is likely filtering the entire site through SafeSearch.
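These checks are easier to repeat when both searches open from URLs that differ only in the SafeSearch setting. The minimal Python sketch below assumes Google Search’s `safe` URL parameter, with `safe=active` to filter and `safe=off` to disable. google.com has accepted this parameter for a long time, but it is not a documented, stable API. A locked setting, for example on a school network or a supervised account, may override it. The helper name `safesearch_check_urls` is hypothetical.

```python
from urllib.parse import urlencode

# Assumption: Google Search honors the "safe" query parameter
# ("active" = filter explicit results, "off" = no filtering).
GOOGLE_SEARCH = "https://www.google.com/search"


def safesearch_check_urls(query: str) -> dict[str, str]:
    """Build two search URLs for the same query, one per SafeSearch state."""
    return {
        state: f"{GOOGLE_SEARCH}?{urlencode({'q': query, 'safe': state})}"
        for state in ("active", "off")
    }


if __name__ == "__main__":
    for state, url in safesearch_check_urls("site:example.com").items():
        print(state, url)
```

If the `off` URL returns pages from the domain and the `active` URL returns none, the result matches the whole-site filtering scenario described above.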

If, on the other hand, we have seen a drop in traffic on certain URLs and hypothesize that the cause may be precisely the misapplication of the filter, we can use the site: command on the offending URLs and ascertain the situation, checking whether and which pages in the domain are seen as explicit.
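Comparing the two result sets for a site: query is more reliable when the diff is systematic rather than done by eye. The sketch below assumes you copied or exported the URLs by hand from the SafeSearch-off and SafeSearch-on results. `normalize` and `likely_filtered` are hypothetical helpers. Treating scheme and host as case-insensitive and ignoring trailing slashes and fragments are simplifying assumptions.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize(url: str) -> str:
    """Lowercase scheme and host, drop fragments and trailing slashes."""
    parts = urlsplit(url.strip())
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


def likely_filtered(urls_safesearch_off: list[str], urls_safesearch_on: list[str]) -> list[str]:
    """URLs visible with SafeSearch off that vanish once it is switched on."""
    visible_when_on = {normalize(url) for url in urls_safesearch_on}
    return sorted({normalize(url) for url in urls_safesearch_off} - visible_when_on)


if __name__ == "__main__":
    off = ["https://www.example.com/", "https://www.example.com/guide/", "https://WWW.example.com/gallery"]
    on = ["https://www.example.com", "https://www.example.com/guide"]
    print(likely_filtered(off, on))  # ['https://www.example.com/gallery']
```

A URL in the output is only a candidate. site: results are not exhaustive, so it is worth confirming each one with the page-level check.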

Tips for optimizing the site for SafeSearch

Fortunately, website owners and managers have some ways to help Google understand the nature of the site and its content. The main one is to follow the steps described in the official guide, which help ensure that SafeSearch filters are applied correctly to the project.

Specifically, the guide presents two methods for sites that publish adult content, or any type of content that could be considered explicit. Both help Google understand the nature of the site and distinguish such content more accurately from any “safe” sections. The first is using the rating meta tag. The second is grouping explicit content in a separate location.

These steps help Google apply SafeSearch filters to the site correctly, which is the surest way to avoid unexpected and unpleasant situations. As the guide says, they help ensure that users see the results they want or expect to find and are not surprised when they visit the sites shown in search results. At the same time, they help Google’s systems recognize that the site is not explicit in its entirety but also publishes non-explicit content.

How to use the rating meta tag

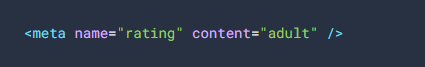

Google’s first piece of advice is to add metadata to pages with explicit content. One of the strongest signals the search engine’s systems use to identify such pages is publishers’ own marking of pages (or their headers) with the rating meta tag, that is, `<meta name="rating" content="adult" />` in the page’s `<head>`.

In addition to `content="adult"`, Google also recognizes and accepts `content="RTA-5042-1996-1400-1577-RTA"`, an equivalent way of providing the same information. There is no need to add both.

The tag should be added to any page with explicit content: according to Google, this is the only thing to do “if the site has only a relatively small amount of explicit content”. For example, if a site of several hundred pages has some pages with explicit content, it is usually enough to mark those pages and no other interventions are needed, such as grouping the contents in a subdomain.
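A few lines of Python are enough to audit which pages actually carry the tag. This minimal sketch uses the standard library’s `html.parser` and accepts either of the two values mentioned above. `is_marked_adult` is a hypothetical helper. Matching the `name` and `content` values case-insensitively is a lenient assumption of this sketch, not a rule stated by Google.

```python
from html.parser import HTMLParser

# The two equivalent values Google accepts for the rating meta tag (lowercased)
ADULT_RATINGS = {"adult", "rta-5042-1996-1400-1577-rta"}


class RatingMetaParser(HTMLParser):
    """Collects the content of every <meta name="rating"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.ratings: list[str] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names, not attribute values;
        # self-closing <meta ... /> tags are routed here too by default
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").strip().lower() == "rating":
            self.ratings.append(attributes.get("content", "").strip().lower())


def is_marked_adult(html: str) -> bool:
    """True if the page declares an adult rating (case-insensitive match)."""
    parser = RatingMetaParser()
    parser.feed(html)
    parser.close()
    return any(rating in ADULT_RATINGS for rating in parser.ratings)
```

The check can run over pages fetched from your own site or over rendered CMS templates, to confirm the tag ends up only where intended.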

If we use a CMS such as Wix, WordPress or Blogger, we may not be able to edit the HTML code directly, or we may prefer not to. In that case, the CMS may offer a search engine settings page or another mechanism for telling search engines about meta tags.

Group explicit pages in a separate location

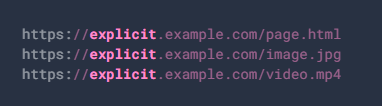

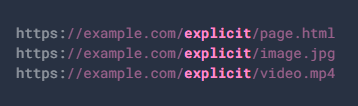

The second system to help Google focus its SafeSearch filter relates to the structure of the site and is suitable for sites that publish significant amounts of explicit and nonexplicit content: in practice, it is a matter of separating and making clear the distinction between these contents also at a structural level, using a different subdomain or a separate directory.

For example, explains the guide, all explicit content can be placed in a separate domain or subdomain as in this case:

Or, all explicit content can alternatively be grouped into a separate directory, as in this example:

The document makes it clear that there is no need to use the word “explicit” in the folder or domain name. What matters is that explicit content is grouped and kept separate from non-explicit content. Without this distinction, Google’s systems could “determine that the entire site seems explicit in nature” and therefore “filter the entire site when SafeSearch is active, even if some content might not be explicit”.
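To verify that the separation actually holds across the site, you can audit a content inventory against the chosen structure. In this minimal Python sketch, the subdomain `explicit.example.com` and the directory `/explicit/` are placeholder examples. As the guide notes, the name itself does not matter. The explicit/non-explicit flags are assumed to come from your own editorial review. `in_explicit_section` and `audit_grouping` are hypothetical helpers.

```python
from urllib.parse import urlsplit


def in_explicit_section(url: str, explicit_hosts: set[str], explicit_dirs: list[str]) -> bool:
    """True if the URL lives on an explicit host or under an explicit directory."""
    parts = urlsplit(url)
    if (parts.hostname or "") in {host.lower() for host in explicit_hosts}:
        return True
    path = (parts.path or "/") + "/"  # so "/explicit" also matches "/explicit/"
    return any(path.startswith(d if d.endswith("/") else d + "/") for d in explicit_dirs)


def audit_grouping(
    pages: dict[str, bool], explicit_hosts: set[str], explicit_dirs: list[str]
) -> tuple[list[str], list[str]]:
    """Return (explicit pages outside the section, non-explicit pages inside it)."""
    outside = sorted(
        url for url, explicit in pages.items()
        if explicit and not in_explicit_section(url, explicit_hosts, explicit_dirs)
    )
    inside = sorted(
        url for url, explicit in pages.items()
        if not explicit and in_explicit_section(url, explicit_hosts, explicit_dirs)
    )
    return outside, inside
```

Both returned lists should be empty. Any URL in either one blurs the boundary that the guide asks us to keep clear.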

Additional guidance to improve site understanding

With the 2023 updates, the guide also delves into two other tasks that may be useful to perform to optimize the site for SafeSearch.

First, we can allow Googlebot to fetch video files, so that Google can understand video content and provide a better experience for users who do not want or expect to see explicit results. This information is also used to better identify potential violations of regulations related to child sexual abuse and exploitation.

Suppose we do not allow the embedded video file to be fetched, and SafeSearch’s automated systems indicate that the page may contain child pornography or other prohibited media content. In that case, Google may limit or prevent the discoverability of explicit pages.
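A robots.txt rule is one common reason Googlebot cannot fetch video files. This minimal sketch uses Python’s standard `urllib.robotparser` to flag the video URLs that a given robots.txt would block for Googlebot. `blocked_video_urls` and the example URLs are hypothetical. Python’s parser applies the first matching rule using plain path prefixes. Google instead uses the most specific match and supports `*` and `$` wildcards. Treat the result as an approximation and confirm it with Search Console’s tools.

```python
from urllib.robotparser import RobotFileParser


def blocked_video_urls(robots_txt: str, video_urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the video URLs that robots.txt would block for the given crawler.

    Approximation: urllib.robotparser uses first-match prefix rules, not
    Google's longest-match rules with * and $ wildcards.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in video_urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /media/\n"
    videos = ["https://www.example.com/media/clip.mp4", "https://www.example.com/videos/clip.mp4"]
    print(blocked_video_urls(robots, videos))  # ['https://www.example.com/media/clip.mp4']
```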

The second recommendation concerns content protected by mandatory age verification. In these cases, Google expressly recommends letting Googlebot crawl the content without passing through the age verification.
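On the server side, this usually means exempting verified Googlebot requests from the age gate. The user-agent string is easy to spoof, so Google documents verifying its crawlers with a reverse DNS lookup followed by a forward DNS lookup. The Python sketch below follows that approach under several assumptions. The function names are hypothetical. The accepted domains (googlebot.com and google.com) should be checked against Google’s current crawler verification documentation. `socket.gethostbyname_ex` covers IPv4 only. The resolvers can be swapped out, so the logic can be tested without network access.

```python
import socket
from typing import Callable

# Assumption: domains accepted for Googlebot reverse DNS names; confirm
# against Google's current crawler verification documentation.
GOOGLEBOT_DOMAINS = (".googlebot.com", ".google.com")


def is_verified_googlebot(
    ip: str,
    reverse_lookup: Callable = socket.gethostbyaddr,
    forward_lookup: Callable = socket.gethostbyname_ex,
) -> bool:
    """Reverse-resolve the IP, check the domain, then forward-resolve to confirm."""
    try:
        hostname, _aliases, _addresses = reverse_lookup(ip)
    except OSError:  # socket.herror and socket.gaierror subclass OSError
        return False
    hostname = hostname.rstrip(".").lower()
    if not hostname.endswith(GOOGLEBOT_DOMAINS):
        return False
    try:
        _name, _aliases, addresses = forward_lookup(hostname)  # IPv4 only
    except OSError:
        return False
    # The forward lookup must point back to the original IP
    return ip in addresses


def requires_age_gate(
    ip: str,
    age_verified: bool,
    is_googlebot: Callable[[str], bool] = is_verified_googlebot,
) -> bool:
    """Show the age gate unless the visitor is age-verified or is real Googlebot."""
    return not age_verified and not is_googlebot(ip)
```

In production, the verification result would typically be cached per IP, since running two DNS lookups on every request is slow.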

Detecting and troubleshooting SafeSearch issues

As mentioned, Google’s systems are not (yet) infallible. The algorithms could mistakenly mark neutral content as explicit even after we have made the suggested changes.

However, the guide invites you to check and keep in mind other conditions before proceeding to the “request for help”, and in particular:

- Wait up to 2-3 months after making a change because Google’s classifiers may need more time to process such interventions.

- Even posting explicit blurry images on a page can still lead to the resource being considered explicit if the blur effect can be undone or if it links to a non-blurry image.

- The presence of nudity for any reason, even to illustrate a medical procedure, can trigger the filter because the intent “does not negate the explicit nature of that content.”

- The site could be considered explicit if it publishes user-generated content that is explicit or if it features explicit content injected by hackers using hidden keywords with cloaking or other illicit techniques.

- Explicit pages are not eligible for some search result features, such as [rich snippets](https://www.seozoom.it/rich-results-snippet-google-seo/), featured snippets or video previews.

- With the published URL test of the URL Inspection tool in Search Console, we can verify that Googlebot succeeds in crawling without enabling any age verification.

The guide then clarifies one final point: SafeSearch relies on automated systems. Google can override their decisions only when a site has clearly been misclassified and SafeSearch is incorrectly filtering its content.

If we feel that we are in this condition, and if at least 2-3 months have passed since we followed the directions for optimizing the site, we can request a review by filling out an appropriate form posted online.
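The guide asks for a wait of 2-3 months after the changes, and a tiny helper can compute when a review request becomes reasonable. This is a minimal Python sketch. The 90-day default is an assumption taken from the upper end of that range. `earliest_review_date` and `can_request_review` are hypothetical names.

```python
from datetime import date, timedelta

# Assumption: "2-3 months" approximated by its upper end, 90 days
DEFAULT_WAIT = timedelta(days=90)


def earliest_review_date(last_change: date, wait: timedelta = DEFAULT_WAIT) -> date:
    """Date from which a SafeSearch review request is reasonable."""
    return last_change + wait


def can_request_review(last_change: date, today: date, wait: timedelta = DEFAULT_WAIT) -> bool:
    """True once the waiting period since the last site change has elapsed."""
    return today >= earliest_review_date(last_change, wait)


if __name__ == "__main__":
    print(earliest_review_date(date(2024, 1, 15)))  # 2024-04-14
```

Using the date of the most recent relevant change, not the first one, keeps the estimate conservative.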