Google EEAT: what it means and how to improve signals on pages

Experience, Expertise, Authoritativeness, Trustworthiness: this is what Google EEAT stands for, the framework through which the search engine asks its quality raters to evaluate content on the Web. It was made official with the December 2022 update of the guidelines, which introduced a second E and led to the new conceptualization. These four letters tie in with Google’s goal of providing quality answers to users’ queries, because they represent the paradigm by which algorithms and quality raters assess the levels of experience, expertise, authority and reliability behind content published online. Despite Google’s continued attention, EEAT remains a thorny topic for the SEO community and generates much confusion and misunderstanding about how it works and how Google uses it. It is therefore worth clarifying what the acronym means, why it affects all sites, how to strengthen the perception of these parameters and, finally, which clichés need to be debunked to avoid mistakes.

Google EEAT: what the acronym is, what it means and what it is for

At a general level, Google’s mission is to keep improving its users’ search experience and to meet their expectations by providing “quality results” for their queries. The introduction (and formalization) of the EEAT paradigm provides a benchmark for this: it helps ensure that search results are indeed of high quality and respond as well as possible to people’s search intent.

The most direct and explicit reference to this concept is in the guidelines for quality raters. The aforementioned December 2022 update revised the previous expression EAT by adding a second E, for Experience, which became one of the new cornerstones for determining the quality of a page. It is worth mentioning that the work of quality raters does not directly influence ranking; nevertheless, the guidelines provide useful suggestions for content creators and site owners who want to improve their pages for both Google and readers.

What Google says about EEAT

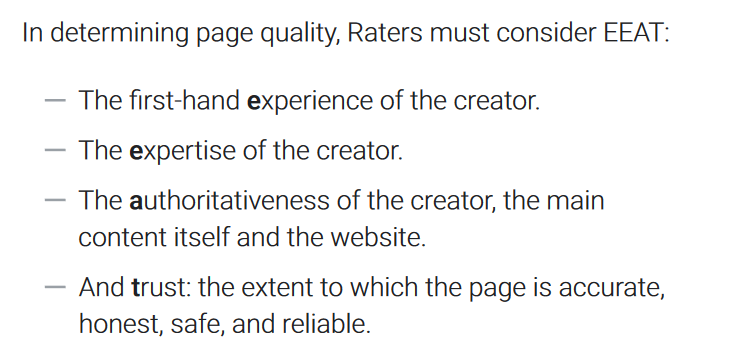

And so, in the new version of the document we read that raters must consider Experience, Expertise, Authoritativeness, and Trustworthiness to determine the quality of the page they are reviewing, and more specifically they must try to analyze and understand:

- The first-hand Experience of the creator.

- The Expertise of the creator.

- The Authoritativeness of the creator, of the main content itself and of the whole site.

- The Trustworthiness of the page: how accurate, honest, safe and reliable it is.

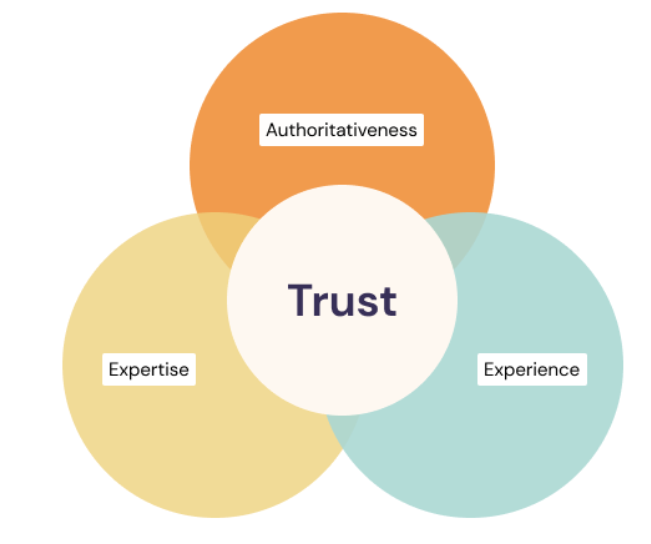

More specifically, trustworthiness is the most important element of this quartet: Google says that Trust is at the core of the E-E-A-T family. The type and amount of Trust needed to achieve high levels of quality depend on the type of page. For example:

- Online stores must have secure online payment systems and reliable customer service.

- Product reviews must be honest and written to help others make informed purchasing decisions (rather than to exclusively sell the product).

- Informational pages on clearly YMYL topics must be accurate to avoid harm to individuals and society.

- Social media posts on non-YMYL topics may not need a high level of trust, such as when the purpose of the post is to entertain the audience and the content of the post is not likely to cause harm.

The other elements of the EEAT family, and thus Experience, Expertise, and Authoritativeness, are defined as “important concepts that can support trust assessment,” and they represent:

- Experience: the extent to which the content creator has the necessary first-hand or life experience for the topic. Many types of pages are trustworthy and achieve their purpose when they are created by people with personal experience, says Google, which urges people to think about what inspires more trust between “a product review by someone who has personally tried the product or a review by someone who has not used it.”

- Expertise: the extent to which the content creator possesses the knowledge or skills needed for the topic. Different topics require different levels and types of expertise to be trustworthy, and in this case Google asks who inspires more confidence between “the advice of an experienced electrician or that of an old house enthusiast who has no knowledge of electrical wiring.”

- Authoritativeness: the extent to which the content creator or website is known as a reference source for the topic. Sometimes it is not possible to identify an official and authoritative website or content creator, but in some cases a specific website or content creator is among the most reliable and trustworthy sources. Examples cited by Google include the profile page of a local business on social media, which may be the authoritative and trustworthy source for what is for sale right now, or the official page of the government agency for obtaining a passport, which is the single, official, and authoritative source for document renewal.

Experience, expertise, and authority may overlap for some types of pages and topics: for example, a person might develop expertise in a topic through first-hand experience accumulated over time, and different combinations of E-E-A may be relevant for different topics. Basically, Google invites its raters to consider the purpose, type, and topic of the page, and then to ask what would make the content creator a reliable source in that context.

The updated document also explains to raters what kind of information to look for in order to understand the EEAT of the content they are analyzing, and the referenced sources are:

- “About Us” page of the Web site or profile page of the content creator. The starting point is what the website or content creators say about themselves, which can be used to begin to understand whether what they are proposing is information coming from a reliable source.

- Independent reviews, references, news stories, and other credible pages about the website or content creators. The second step is to search, if any, for independent, reliable evidence about the content creator’s level of experience, expertise, authority, and trustworthiness to outside eyes, and then understand what others are saying about the website or content creators.

- Main page content, reviews and comments. Analyzing what is visible on the page is useful for certain types of content: for example, one can tell that a person is an expert in hairstyling by watching a video of him or her in action perfectly arranging another person’s hair or by reading user comments (commenters often highlight expertise or, conversely, point out shortcomings).

The history of the EEAT paradigm, formerly EAT

It was in 2014 that people began to talk about a new method developed by Google for assessing the quality of pages. It was then progressively introduced into the guidelines for quality raters, the document that guides the work of the evaluators who, on Google’s behalf, manually review a set of Web pages and send feedback on their quality level to the search engine.

Google uses the quality raters’ manual evaluations as training data for its self-learning ranking algorithms (what is called supervised machine learning) to identify patterns for high-quality content and sources.

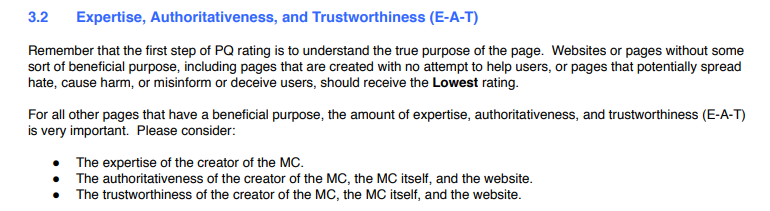

To be precise, it is in the 2014 edition of the Search Quality Guidelines that the phrase EAT, an acronym for Expertise, Authoritativeness and Trustworthiness, first appears, and then these concepts become central to raters’ evaluations and Google’s algorithm improvement work. In practice, it is from that point that officially E-A-T becomes a criterion that Google asks raters to use to measure how well a Web site offers content that can be trusted.

Specifically, those guidelines explained that “for all other pages that have a beneficial purpose, the amount of expertise, authority and trustworthiness (E-A-T) is very important,” and Google recommended that its raters consider:

- The E-A-T of the main content of the web page they are analyzing.

- The site itself.

- The creators of that website’s content.

“Remember that the first step in assessing page quality is to understand the true purpose of the page” and “sites or pages with no useful purpose whatsoever, including pages created without the intention of helping users, or that potentially spread hate, cause harm, deceive or misinform people should receive the lowest rating,” it read further.

Turning specifically to the old EAT acronym, Google added that “there are high E-A-T values in pages and websites of all kinds, even gossip sites, fashion sites, humor sites, but also in forums and Q&A pages,” because indeed “some types of information are found almost exclusively on forums and within discussions, where a community of experts can provide valuable perspectives on specific topics.”

What the E-A-T acronym meant to Google: the definitions of Expertise, Authoritativeness, Trustworthiness

Let’s take a closer look at the three letters that made up the old EAT acronym coined by Google to search for quality in web pages.

Expertise referred to the competence a writer must demonstrate with respect to that specific subject matter, derived from having dealt with similar cases in his or her professional career.

The word Authoritativeness is perhaps more ambiguous, because many people immediately think of authority in the sense of power. It actually refers to authoritativeness, understood as the ability to be recognized by the community as an expert in a field or subject.

Finally, Trustworthiness translates to reliability and relates to the purpose for which the site publishes certain content: the aim is to avoid false or inaccurate information, or pages created merely in pursuit of visibility or profit, without any useful purpose for the user.

Ultimately, then, Google’s goal in introducing EAT was to establish a system that protects users from low-quality results in SERPs that might offer harmful information, and to ensure instead that the pages ranked in its results have correct and verifiable content, especially on sensitive topics.

These issues were explained in an in-depth article by Dave Davies on Search Engine Journal, which comprehensively described the paradigm’s three criteria and offers insights that are still valid today:

- Experience / Expertise

This is the parameter that refers to the experience and expertise of the content creator, with specific reference to the individual page content to be judged and not to the site as a whole.

The evaluation criteria are not set in stone, but rather are based on common sense, context, and users’ intentions.

When searching for information on lung cancer, an in-depth study on causes, impact, statistics, and so on produced by a major medical foundation, institution, or government agency will likely score high.

If the user is looking for information about what it is like to live with this disease, a personal account from someone who has lived with an affected partner or cared for sick relatives might meet the criteria for competence exceptionally well. Whether it is posted on a major site or on a forum, its weight for this parameter would still be high.

Thus, the assessment of expertise looks at the context and the ability to address a topic competently so as to meet the needs and requirements of the users.

- Authoritativeness

Authoritativeness of content is judged by the value of the content itself and by the domain.

In general, this could be based on external signals such as links and their quality, citations and brand mentions, etc., either for the content of the particular page or for the entire domain. However, one must remember a 2015 Google patent that ranks search results based on entity metrics, specifically identifying some key metrics:

- Correlation, answering the question “how related are two entities?”

- Notability: “how notable is an entity in its domain?”

- Contribution: “how is an entity viewed by the world?” Are there critical reviews, fame rankings, or other objective references to consider?

- Awards: “has the entity received awards?”
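As a purely illustrative sketch of how four metrics like these could be combined into a single score, the Python snippet below computes a weighted average. The class and function names, the 0-to-1 scale and the equal weights are all hypothetical assumptions made for this example; they are not taken from the patent text or from any Google system.

```python
from dataclasses import dataclass


@dataclass
class EntityMetrics:
    """Hypothetical container: each metric is assumed to be normalized to 0..1."""
    correlation: float   # how related are two entities?
    notability: float    # how notable is the entity in its domain?
    contribution: float  # how is the entity viewed by the world?
    awards: float        # has the entity received awards?


# Illustrative weights only (an assumption for this sketch).
WEIGHTS = {
    "correlation": 0.25,
    "notability": 0.25,
    "contribution": 0.25,
    "awards": 0.25,
}


def entity_score(metrics: EntityMetrics, weights: dict[str, float] = WEIGHTS) -> float:
    """Return the weighted average of the four metrics."""
    total_weight = sum(weights.values())
    weighted_sum = sum(getattr(metrics, name) * w for name, w in weights.items())
    return weighted_sum / total_weight


if __name__ == "__main__":
    # Made-up values for a well-known entity in its field.
    known_expert = EntityMetrics(correlation=0.9, notability=0.8, contribution=0.7, awards=0.6)
    print(round(entity_score(known_expert), 2))
```

The point of the sketch is only that several independent signals about an entity can feed one ranking input; how any real system weighs them is not public.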

- Reliability / Trustworthiness

The trustworthiness of the content is again judged by both the trust placed in the particular content and the trust enjoyed by the site as a whole.

It is similar to authority, but with some more specific aspects: rather than focusing primarily on the volume of quality references, it looks more at specific signals and sites.

One example used in the guidelines is the BBB, Better Business Bureau, a U.S.-based organization whose mission is to offer advice to consumers and collect information on companies to assess (and report on) their level of reputation. While the document does not openly talk about using this rating as a positive signal, it states that “a negative rating based on a significant volume of users could be used as a negative signal” for site trustworthiness.

Google EAT and sites, the guidelines to follow

The Google document also offered very useful guidance for improving EAT levels on some specific types of sites:

- In medical advice, content should be written or produced by people or organizations with appropriate medical expertise or accreditation. Medical advice should be produced in a professional style and should be edited, revised, and updated regularly.

- In the news sector, articles should be written with journalistic professionalism and include factual content presented in a way that helps users better understand events. Generally, news sources with high EAT have published established editorial policies and robust review processes.

- News pages on scientific topics should be produced by people or organizations with appropriate scientific expertise and represent an established scientific consensus on issues where such a consensus exists.

- For pages on financial, legal, tax, etc. advice, content should come from reliable sources and be checked and updated regularly.

- Content such as home renovation and advice on family issues should also be written by experts or refer to experienced sources that users can trust.

- Finally, pages about hobbies should also show competence (expertise): this does not mean you have to formally prove that you are capable in that particular field, but that your competence and skills should come through in the content you publish.

The importance of E-A-T for Google and sensitive topics

Experience, expertise, authoritativeness and trustworthiness are thus criteria used by raters to assess the quality and credibility of content displayed in SERPs, providing useful signals for Google’s algorithm as well, which for its part uses various systems to determine whether a Web site is credible and whether the content it publishes is worthy of ranking high in Search results or even appearing in the Google Discover feed.

The weight of E-E-A-T is particularly felt in assessing the quality of YMYL (“Your Money or Your Life”) content, for which Google and its public voices have repeatedly emphasized the need to rely on expert authors: for certain types of searches, there is a huge possibility of sharing information that can have a crucial impact (even in a negative sense) on private aspects of people’s lives such as “happiness, health or wealth.”

Put another way, Google is concerned that its SERPs may show low-quality results with incorrect information or potentially fraudulent or misleading advice and opinions, on topics such as finance and investment, health and medicine, legal information, and so on, which can cause serious harm to users performing the search.

It is therefore primarily to protect people (and the quality of its results) that Google introduced the concept of E-A-T, later to become E-E-A-T. The concept serves to increase the chances that the sites that rank offer a high level of experience, expertise, authority and reliability, especially in sensitive subjects such as medical, health and financial matters. On the money side, quality raters are asked to take special care in verifying the reliability, credibility and authority of sites whose pages allow users to make purchases or transfer money (including home banking) or offer informative content on finance (such as investment advice, home buying, and so on).

On the broader life front, however, medical and health information pages on various aspects (drugs, nutrition, diseases) are under observation; legal information pages (legal advice or information content on forensic topics such as divorce, wills, guardianship of minors and so on); and informed citizenship content (public/official information, updates on public laws and government programs, news on important topics in the areas of business, international events, politics and so on).

Another element to focus on is the distinction between Expertise and Experience: for the document, people who share stories based on their direct experience can be considered reliable sources in certain situations, but in others a more objective and qualified reference is always needed, namely expertise (which is also measured in relation to accuracy of information and alignment with expert consensus).

What EEAT means for web pages and SEO

It is therefore easy to assume that high levels of EEAT are needed to achieve good rankings on Google: the search engine wants to protect users and keep them safe when browsing pages, especially for such sensitive content, by answering these queries with sites that really offer useful advice or a concrete solution to a problem in a transparent and safe way.

However, some aspects of the relationship between the EEAT paradigm, SEO and ranking still need to be understood thoroughly. First, EEAT influences rankings without being a direct ranking factor: it is rather a quality indicator, a framework that encompasses the many signals Google uses to evaluate and rank quality content.

In addition, Google does not use specific metrics or scores to calculate these characteristics, but its algorithms look for and identify signals on pages that may match the E-E-A-T perceived by humans.

It follows that in order to improve the EEAT of the site and creators, we cannot refer to precise and measurable technical interventions, but rather must try to conceptually align our content with the various signals used by Google’s automated systems that rank pages.

In essence, with the EEAT criteria Google and its quality raters determine the value of a site: evaluators from outside the search engine look at pages with the guidelines in mind, to see whether the site offers a good user experience and whether the content meets the required standards, is readable and is in some way shareable and recommendable (even in a social sense). These criteria relate only to how Google directs quality raters in their work, which supports the general improvement of the search engine’s algorithms; they are not intended for examining individual websites.

Google EEAT, the official clarifications

John Mueller has addressed the issue several times. For example, in a 2020 hangout reported by Search Engine Roundtable, he specified that the search engine’s algorithm does not explicitly use structured data to strengthen the EAT of a site’s pages, although he did not rule out that this result could be achieved indirectly.

Specifically, Mueller was answering a user who asked for suggestions on how to make sure that readers and Google understand the validity of the authors of his site’s health pages. In his response, the Googler makes it clear that this issue cannot be solved by simply “putting an element like specific structured data or meta tags” on a page and saying “well, my page is correct or my information is correct”; it needs more extensive and time-consuming work, first and foremost to make users understand that the content is trustworthy or expertly produced.

In an earlier session in 2019, Mueller himself had already said that the then EAT paradigm “is not something you have to optimize on your site for access by quality raters” because it is not a technical requirement.

Rather, for Mueller, the Expertise, Authoritativeness and Trustworthiness criteria relate to quality and user experience, so you can also test directly with your users to see whether they perceive content differently. For example, Google’s Senior Webmaster Trends Analyst suggested that site owners “make it a point to analyze user behaviors and reactions to various on-page setups, and try to understand how you can best show that the people creating content for the website know what they are talking about, have credentials or other factors relevant and pertinent to the topic.”

EEAT impacts many areas in Google’s ranking algorithms

More recently, Hyung-Jin Kim, VP of Search at Google, announced that Google “has been implementing EAT principles for ranking for over 10 years.” In his keynote at SMX 2022, Kim noted that (the then) “EAT is a template for how we evaluate a single site: we do it for every single query and every single result; it’s pervasive in every single thing we do.”

It is clear from this statement that EEAT is important not only for YMYL pages, but for all topics and keywords and seems to impact many different areas in Google’s ranking algorithms, because Google wants to optimize its search system to provide excellent content for respective search queries depending on the user’s context, making the most reliable sources stand out first (or only, ideally).

For EEAT recognition, quality raters must “do reputational research on sources”, assessing experience, expertise, authoritativeness, and reliability to determine whether the pages they are reviewing “meet the information needs based on their understanding of what that query was looking for,” considering elements “such as how authoritative and reliable that source seems to be on the topic in the query.”

Still too much confusion about the weight of criteria for SEO and ranking

Despite these clarifications, the E-E-A-T of a site and of the authors of its content remains a thorny concept for the community. It generates a lot of confusion and misunderstanding about how it works and how Google uses it, to the point that there are at least 10 clichés worth debunking in order not to get it wrong.

These misconceptions and clichés about Experience, Expertise, Authoritativeness and Trustworthiness were debunked by Lily Ray, who actually refers to the earlier version of the document. Her analysis is still relevant, however, partly because she had already guessed that Expertise also encapsulated part of Experience, whereas Google has since decided to separate the two concepts more clearly.

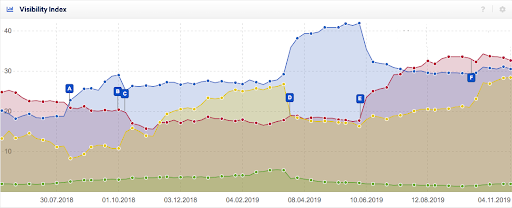

In the current version of the Quality Guidelines, updated as mentioned in December 2022, the expression E-A-T is mentioned 126 times in 176 pages, and more generally the paradigm has become an important topic of discussion in the SEO industry, especially in analyzing changes in organic traffic performance after Google’s broad core updates that followed the now famous one of August 1, 2018.

As Ray explains, SEO practitioners began to speculate (and Google later partially confirmed) that E-A-T (and now E-E-A-T) played a major role in the algorithmic updates, which seemed to noticeably impact YMYL (Your Money or Your Life) sites that showed significant problems in the parameters of competence, authority, and reliability as perceived by the search engine.

As is often the case with the exchange of ideas within the SEO community, however, the discussion about E-A-T quickly led to confusion, misunderstandings, and misinterpretation of facts: for the expert, many of these misconceptions stem from a disconnect between what is theory and what is currently in Google’s algorithm.

Surfacing results with good E-A-T is a goal of Google and is what algorithms are supposed to do, but “E-A-T itself is not an explanation of how algorithms currently work”, she summarizes.

Top 10 clichés about Google EEAT

Through her experience, Lily Ray then identified 10 myths and misconceptions surrounding the topic, debunking them to clarify how E-E-A-T actually works and how Google is using it.

- E-E-A-T is not a single algorithm

Many people think that E-E-A-T is a single, stand-alone algorithm, but it is not. Back in 2019, in a famous Q&A at Pubcon, Gary Illyes said that “Google has a collection of millions of tiny algorithms working in unison to generate a ranking score,” and that many of these baby algorithms look for signals in pages or content “that can be conceptualized as E-A-T.”

Thus, E-A-T and today E-E-A-T is not a specific algorithm, although Google’s algorithms look for both offsite and onsite signals related to good or bad levels of such concepts, such as PageRank “which uses links on the Web to understand authority.”

- There is no such thing as an E-E-A-T score

Another commonplace concerns the presence of an E-E-A-T score, but on the same occasion mentioned earlier Illyes assured that Google has “no internal E-A-T score or YMYL score.”

In addition to there being no E-E-A-T score assigned by Google’s algorithms, we must remember that quality raters’ ratings do not directly influence the ranking of any individual website, as explicitly reiterated by Google on multiple occasions.

- E-E-A-T is not a direct ranking factor, and Experience, Expertise, Authoritativeness and Trustworthiness are not individual ranking factors

We have said that E-E-A-T is a relevant concept for content ranking, but still we cannot technically call it a direct ranking factor, one of the 200+ signals Google uses to determine a specific page’s position in its SERPs.

E-E-A-T cannot be measured directly and does not function in the same way as “page speed, HTTPS or keyword usage in title tags”, so its role in rankings is more indirect, even if significant.

- Not all sites need to worry equally about E-E-A-T

In the guidelines for quality raters, Google explicitly clarifies that the level of E-E-A-T expected from a given site depends on the topics presented on that site, on the extent to which its content is YMYL in nature and on its potential to harm people.

For example, Ray said, “high E-E-A-T medical advice should be written or produced by people or organizations with appropriate medical expertise or accreditation,” while a site focused on a hobby, such as sewing, photography or learning to play guitar, requires less formal expertise and will be held to a lower standard in terms of E-E-A-T analysis.

For businesses that deal with YMYL topics, which may have a direct impact on readers’ happiness, health, financial success or well-being, E-E-A-T is of utmost importance; e-Commerce sites are also considered YMYL by definition because they accept credit card information.

The old E-A-T Meter in the image helps illustrate the extent to which Expertise, Authoritativeness and Trustworthiness are important for Web sites in different categories.

- Improving E-E-A-T is not a substitute for other SEO optimizations

Focusing on Google’s perceived levels of E-E-A-T is not a substitute for routine SEO optimization work and alone does not improve the performance and visibility of site pages.

As we often say, to be successful, SEO must be based on a holistic strategy in which all interventions – onpage, such as technical SEO or content optimizations, and offpage such as high-quality link acquisition – must also be performed in order for E-E-A-T efforts to bring results.

Even for sites that have been negatively impacted by algorithm updates E-E-A-T is only one area to consider, and recovery from core updates requires improvements in many different areas of the site, starting with overall page and content quality through resolving user experience issues, reducing technical problems, and improving website architecture.

In addition, if a site contains serious technical problems such as slow page load times or difficulties with crawling or content rendering, Google may not even be able to index the pages properly.

Therefore, Ray advises, one should prioritize E-E-A-T among other SEO efforts only according to the severity of other problems that might affect our site’s performance.

- Adding author biographies is not a ranking factor per se

One of the most common and immediate strategies for improving E-E-A-T is to add an author reference in each piece of content and create a biography or dedicated page that explains who the authors are and why they can be trusted to make high-quality contributions.

This advice comes from what is written in the Quality Guidelines, where Google repeatedly recommends that quality raters review individual author biographies as a way to determine the extent to which the authors themselves are knowledgeable about the topics they write about.

Again, however, one point needs to be made clear: just adding author biographies is not in itself a ranking factor, because Google cannot recognize or retrieve information about every author; as we know, it reasons in terms of entities.

John Mueller also suggested that author biographies are not a technical requirement, nor do they require a specific type of Schema markup to be effective, recommending rather that we try to understand what users’ expectations are of the site’s content and, in particular, how best to show that “the people who create content for the site are really great, know what they’re talking about, have credentials or whatever is relevant in the field.”

As mentioned, however, work needs to be done not so much on the person/author, but on the entity: although Google can recognize established authors in the Knowledge Graph, it may not have the same capabilities to recognize all authors, but it has been pursuing a number of initiatives related to authorship in recent years and so there may be evolutions for this functionality.
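For sites that still want to expose author information in machine-readable form, the following is a minimal sketch of how schema.org Article markup with a Person author could be generated in Python. As noted above, this markup is optional and is not a ranking factor in itself; the function name is hypothetical, and the headline, author name, URL and job title are placeholder values invented for the example.

```python
import json


def build_article_jsonld(headline: str, author_name: str, author_url: str, job_title: str) -> str:
    """Return a JSON-LD string describing an article and its author.

    Uses the schema.org Article and Person types; every value is supplied
    by the caller and is a placeholder in this example.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {
            "@type": "Person",
            "name": author_name,
            "url": author_url,      # link to the author's biography page
            "jobTitle": job_title,  # credential relevant to the topic
        },
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    markup = build_article_jsonld(
        headline="How to read a blood test report",
        author_name="Jane Doe",  # hypothetical author
        author_url="https://example.com/authors/jane-doe",
        job_title="Registered nurse",
    )
    # The resulting string would go inside a <script type="application/ld+json"> tag.
    print(markup)
```

Markup like this only restates what the visible biography already says, so it is worth adding only once the biography page itself explains who the author is and why readers can trust them.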

- Core updates do not affect only YMYL sites nor do they rely only on E-E-A-T

This is another useful clarification: although some broad core updates have indeed overwhelmingly affected YMYL sites, particularly those in the health or medical sector, other categories have also felt the impact. Recipe sites, for example, have experienced huge fluctuations after every major update since August 2018, despite “having similar levels of EAT and usually being run by cooking enthusiasts who are all equally qualified to post recipes online.”

Lily Ray studied the performance of four competing recipe sites that saw major impacts during recent core algorithm updates, despite having similar levels of E-A-T. She found that many sites in the niche face a unique set of SEO challenges that extend beyond E-A-T, such as site architecture issues, overwhelming ads, and poor page load times, and it is these other issues that may most likely be responsible for the drop in performance during the core updates.

- E-A-T and E-E-A-T are not new elements

Even in the international SEO community, some people mistakenly think that the talk about E-A-T, and now E-E-A-T, is recent news, at best dating the start of this focus to around 2018 and the now infamous medic update.

In fact, E-A-T was first introduced in the 2014 version of Google’s guidelines for quality raters, and since 2015 it has also become commonly used in SEO; moreover, Google’s efforts to reduce misinformation and bring out reliable, high-quality content predate the 2018 update, with several initiatives aimed at improving the reliability and transparency of its search results and reducing fake news.

Indeed, Lily Ray reports that, in one of her studies, 51 percent of the websites that experienced a drop in performance during Google’s 2018-2019 broad core updates had already been negatively affected by the March 2017 Fred update.

- The August 1, 2018 core update is not called medic update

It was in all likelihood Barry Schwartz who christened the 2018 core update the “medic update”, noting a trend in the early stages of release, but this is an informal name: Google no longer gives core algorithm updates official names other than ones that identify when they were released.

As for the 2018 update, other digital marketers have called it “The E-A-T Update”, a name that is not only incorrect but also misleading, since E-A-T was not the only issue causing a drop in performance during that update.

- Addressing E-E-A-T takes time

SEO times are long in general, and even E-E-A-T is not something we can apply to the site and expect immediate positive results.

While with some SEO tactics, such as metadata optimization or technical troubleshooting, it is possible to see immediate increases in performance after Google crawls again and indexes updated content, E-E-A-T does not exactly work that way, as it is not a direct ranking factor.

Improving the perceived trustworthiness of our site is a resource-intensive activity that requires a significant investment of time and effort to complete, reiterates Lily Ray.

It takes time to improve trust with users, and search engines can take even longer to process such changes, especially for sites that have been affected by algorithm updates due to E-E-A-T issues.

Typically, Google makes its reevaluations of overall site quality only with the launch of the next major update, so any work done to improve E-E-A-T may take at least several months to begin bearing fruit.

However, what we need to remember is that the benefits of improving E-E-A-T go beyond SEO: these aspects also strengthen the brand and the user experience, because people feel more confident that they can trust our website, our authors, and our brand.

How to improve the E-E-A-T of our pages

While not technically a ranking factor, E-E-A-T is an important indicator of quality for a site’s pages, and there are still some strategies that can help improve Google’s perception of the level of experience, expertise, authority, and trustworthiness of our content and of us as authors.

However, we should not think about technical optimization interventions, we reiterate, because Google does not use specific metrics or scores to calculate these characteristics, but rather some systems and signals that can help the search engine’s algorithms understand (and evaluate well) our E-E-A-T.

The main tips and practical interventions on E-E-A-T

The first aspect to pay attention to is the creation of the content itself, which, as Google’s guidelines also point out, must be updated consistently, especially if additional or different information has arisen that the user should know about. No less important is defining a clear purpose for each individual page, with each article covering a single topic in depth.

It follows that, as we often repeat, content should be high-quality, unique, complete, original, useful, and not duplicated from other sites or internal pages; it is best to avoid “thin content”, meaning articles with scant information and a low word count, while still following the criterion of “trying to give the right information in the right words.”

How to increase the authoritativeness of articles

To increase the page’s level of authority, links to relevant articles from authoritative sources can be included, signaling to Google the relevance of the content. Many then recommend including a biography of the author for each piece of editorial content published, which can provide a kind of guarantee of the expertise with respect to the subject matter, although it is not a criterion evaluated by Google from a ranking perspective.

Optimize structured data and take care of brand awareness

Investing in personal branding and brand awareness can help improve how our EEAT is perceived, just as it is useful to highlight on the site any certifications and official accreditations in fields such as health, law, and so on. In addition, the right schema markup and structured data can help crawlers better interpret on-page information and possibly lead Google to favor the site over those of competitors.

The last point that SEO analysts seem to agree on is limiting the presence of user-generated content in order to keep the page more relevant: while not always a negative factor, hosting content freely entered by users exposes the site to the risk of unreliable or unverifiable information, which works against EEAT criteria.

Leverage structured data to strengthen the perceived EAT of the site

A reliable and underestimated method that “we can use to improve not only EEAT, but also the overall organic performance” of sites is, again according to Lily Ray who is one of the most authoritative voices on the subject, to leverage schema.org structured data “to their maximum capacity.”

In this second insight, the SEO expert argues that there are several reasons why the proper use of structured data can also help improve EEAT, first and foremost because it “helps establish and solidify the relationship between entities, particularly between the various places and sites where they are mentioned online.”

It is Google itself that explains that providing these markups means helping the search engine, which can base its analyses on “explicit clues about the meaning of a page” that enable it to better understand its content and, more generally, to gather information about the web and the world.

In this way, in fact, we facilitate Google’s ability to evaluate the EEAT of a given page, Web site, or entity, since structured data and the relationship it creates can:

- Reduce ambiguity between entities.

- Create new connections that Google would not otherwise have made in its Knowledge Graph.

- Provide additional information about an entity that Google would not have obtained without it.

Bill Slawski’s thoughts

The article also reported some thoughts on the topic by the late Bill Slawski, who said that “structured data adds a level of precision that a search engine needs and might not otherwise understand because it lacks the common sense of a human being”.

Without certainty about which entities are included on a page, it can be a real challenge for search engines to accurately assess the experience, expertise, authority and reliability of those elements. In addition, structured data also help clarify ambiguities of entities with the same name, which is undoubtedly important when it comes to evaluating EEAT.

It is always Slawski, the best-known Google patent expert, who provided some practical examples, “When you have a person who is the subject of a page, and he shares a name with someone, you can use a SameAs property and point to a page about him in a knowledge base like Wikipedia.”

In this way, we can “clarify that when you refer to Michael Jackson you mean the King of Pop, and not the former director of U.S. national security,” clearly very different people. In addition, even “companies or brands sometimes have names they might share with others,” as in the case of the music group Boston “which shares a name with a city.”
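As a rough illustration of the disambiguation Slawski describes, the sketch below builds a Person object whose sameAs property points to a knowledge-base page. The description text is a placeholder added for illustration, and the Wikipedia URL is assumed to be the page about the singer; neither comes from the original article.

```python
import json

# Hypothetical markup: the description is a placeholder, and the Wikipedia URL
# is assumed to identify the singer rather than a namesake.
person = {
    "@context": "https://schema.org",
    "@type": "Person",
    "name": "Michael Jackson",
    "description": "American singer, songwriter and dancer",
    # sameAs ties this name to one specific entity in a public knowledge base.
    "sameAs": ["https://en.wikipedia.org/wiki/Michael_Jackson"],
}

# Serialize to the JSON-LD text that would be placed on the page.
print(json.dumps(person, indent=2))
```

The same pattern would apply to a brand or a band that shares its name with something else: the name stays ambiguous, while the sameAs target does not.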

The value of structured data to the search engine

It follows that structured data essentially serves to feed Google with crucial information about the topics of our site, as well as the people who contribute to it.

This is a crucial first step for the search engine to be able to accurately assess the reliability and credibility of our site and the creators of its content.

Implementing structured data for EEAT

There are several methods for implementing structured data on the site: in addition to JSON-LD (the format Google recommends), there are Microdata, RDFa, and, more recently, even dynamic injection through JavaScript and Google Tag Manager.

For websites hosted on WordPress, in addition, the popular Yoast plug-in integrates many Schema features and continues to extend its support.

In any case, for the goal of improving EEAT, the method of implementing structured data is less important than the schema types marked on the website.

Indeed, the priority is to provide search engines with as much information about the credibility, reputation, and trustworthiness of the authors and experts who contribute to the content published on the site and who are part of our project. But EEAT is also about brand reputation and the experience users have on the site and when using our products or services.
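To make the JSON-LD method mentioned above more concrete, here is a minimal sketch of how a structured data object could be wrapped in the script tag that goes into the page's HTML. The function name `to_jsonld_script` and the sample organization are hypothetical, chosen only for illustration.

```python
import json

def to_jsonld_script(data: dict) -> str:
    """Wrap a structured data object in a JSON-LD script tag (illustrative helper)."""
    payload = json.dumps(data, ensure_ascii=False, indent=2)
    # Escape "</" so that a value containing "</script>" cannot close the tag early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'

# Hypothetical organization used only to show the output shape.
snippet = to_jsonld_script({
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Company",
    "url": "https://www.example.com",
})
print(snippet)
```

Whether the tag is written into the template, produced by a plug-in, or injected through a tag manager, the object inside it is the same, which is why the choice of types and properties matters more than the delivery method.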

What is the correct insertion process

The correct use of Schema markup – for example, with nesting, as Ray suggests – makes it easy to visualize the resulting schema and analyze it in Google’s testing tool, but also to understand (and have understood) the main entities on the page and their relationships to each other.

Nesting also eliminates the frequent problem of having multiple redundant or conflicting Schema types on the same page, which can depend on multiple plug-ins performing the operation simultaneously.

On a product page, for example, it is important to clearly describe and differentiate the relationships between the organization that publishes the Web site and the organization that produces the product: by placing data correctly in a nested structure, we can clearly describe the difference in their roles, rather than simply pointing out that they are both “on the page.”
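A nested structure of this kind might look like the following sketch, where the publisher of the page and the manufacturer of the product are two separate Organization objects attached at different levels. All names and URLs are hypothetical placeholders.

```python
import json

# Hypothetical entities: the shop that publishes the page and the company
# that makes the product are deliberately kept as two distinct objects.
publisher = {"@type": "Organization", "name": "Example Shop", "url": "https://shop.example.com"}
manufacturer = {"@type": "Organization", "name": "Example Manufacturing Ltd"}

page = {
    "@context": "https://schema.org",
    "@type": "WebPage",
    "url": "https://shop.example.com/products/widget",
    # Role 1: who publishes the page.
    "publisher": publisher,
    # The product is nested as the main entity of the page...
    "mainEntity": {
        "@type": "Product",
        "name": "Example Widget",
        # Role 2: ...and the manufacturer is nested inside the product.
        "manufacturer": manufacturer,
    },
}

print(json.dumps(page, indent=2))
```

Because each organization sits under the property that names its role, a parser does not have to guess which of the two companies publishes the page and which one makes the product.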

What markup to use to increase site EEAT

There are various Schema types and properties that it is crucial to include on the site “to send the right signals to search engines about your organizations’ EAT,” says Lily Ray, who then lists five priority cases where using Schema helps to strengthen EAT. As before, these tips also apply to the new conceptualization of EEAT.

- Person

The first mention of EEAT in Google’s Quality Rater Guidelines is the requirement for quality raters to consider the “experience,” “expertise,” and “authority of the lead content creator”.

This information can be communicated to search engines through the use of Person markup (designed for living, dead, undead or fictional individuals), which includes dozens of optional properties that are useful for providing more context about the person.

Many of these strongly support EEAT, such as in particular (but not limited to):

- affiliation

- alumniOf

- award

- brand

- hasCredential

- hasOccupation

- honorificPrefix

- honorificSuffix

- jobTitle

- sameAs

The recommendation is to include the Person schema with the above properties at least once wherever the founder, content creators and/or expert contributors are listed on the site, remembering that this information must also be visible on the page itself: this is a prerequisite for compliance and helps avoid a manual action for structured data spam.
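The sketch below shows what Person markup using several of the properties listed above could look like, together with a small check that the marked-up values also appear in the visible page text. The person, the credentials, the URL and the helper `values_missing_from_page` are all hypothetical; the check is a simple substring comparison, not a reproduction of how Google verifies visibility.

```python
import json

# Every name, credential and URL below is a hypothetical placeholder.
author = {
    "@context": "https://schema.org",
    "@type": "Person",
    "name": "Jane Doe",
    "honorificPrefix": "Dr.",
    "jobTitle": "Registered Dietitian",
    "affiliation": {"@type": "Organization", "name": "Example Health Media"},
    "alumniOf": {"@type": "CollegeOrUniversity", "name": "Example University"},
    "award": "Example Nutrition Writing Award 2021",
    "hasCredential": {
        "@type": "EducationalOccupationalCredential",
        "credentialCategory": "degree",
        "name": "MSc in Human Nutrition",
    },
    "hasOccupation": {"@type": "Occupation", "name": "Dietitian"},
    "sameAs": ["https://www.example.org/profiles/jane-doe"],
}

def values_missing_from_page(markup, visible_text, keys=("name", "jobTitle", "award")):
    """Return marked-up string values that do not appear in the visible page text."""
    return [
        markup[key]
        for key in keys
        if isinstance(markup.get(key), str) and markup[key] not in visible_text
    ]

page_text = "Dr. Jane Doe, Registered Dietitian"
print(json.dumps(author, indent=2))
print(values_missing_from_page(author, page_text))
```

In this example the award is marked up but not shown in the page text, so it is the kind of value that should either be added to the visible biography or removed from the markup.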

How to identify a person for Google

An author biography page is a good way to present this type of schema, which can also serve to disambiguate that person’s name from other identical names in Google’s Knowledge Graph. In this case, it pays to link to the person’s Knowledge Graph URL using the sameAs property.

This effort can give Google the minimum amount of confidence it needs to be sure it is showing the right knowledge panel for a specific individual in the event of queries related to him or her.

For some time now, Google has deprecated the sameAs markup for social profiles, but it is still possible to use it for other purposes, with links to:

- The individual’s knowledge graph URL.

- His or her Wikipedia page, a Freebase or Crunchbase profile.

- Other reliable sources where the individual is mentioned online.

Also, it is worth remembering that there are more search engines that use Schema than just Google, so listing social profiles using sameAs is probably still a good approach.

- Organization

The organization schema is arguably one of the best for supporting EEAT efforts and offers a variety of properties that can provide additional context about our company or brand, such as:

- address

- duns

- founder

- foundingDate

- hasCredential

- knowsAbout

- memberOf

- parentOrganization

Many companies implement the organization schema without taking advantage of these fields or the many other available properties. On the practical side, it would be useful to incorporate all this information into the most relevant page of our organization (usually an “About Us” or “Contact Us” page), marking it accordingly.
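As an illustration, Organization markup that fills in the properties listed above might be sketched as follows. Every value (company names, address, D-U-N-S number, dates) is a made-up placeholder.

```python
import json

# All values are hypothetical placeholders for an "About Us" page.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Health Media",
    "url": "https://www.example.com",
    "address": {
        "@type": "PostalAddress",
        "streetAddress": "1 Example Street",
        "addressLocality": "Exampletown",
        "addressCountry": "US",
    },
    "duns": "123456789",
    "founder": {"@type": "Person", "name": "Jane Doe"},
    "foundingDate": "2010-05-01",
    "knowsAbout": ["Nutrition", "Dietetics"],
    "memberOf": {"@type": "Organization", "name": "Example Publishers Association"},
    "parentOrganization": {"@type": "Organization", "name": "Example Holding Group"},
}

print(json.dumps(organization, indent=2))
```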

- Author

Author is a Schema property that can be used for any type that falls under the CreativeWork or Review classification, such as Article or NewsArticle.

This property is to be used as markup for the author’s signature on a piece of content. The types provided for author are Person or Organization, so if our site publishes content on behalf of the company it is important to indicate the author as an organization and not as a person.
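The choice between the two author types can be sketched with a small helper. The function `article_markup` and the sample headlines and names are hypothetical and only show how the author object changes.

```python
import json

def article_markup(headline, author_name, author_is_organization=False):
    """Build minimal Article markup with a Person or Organization author (illustrative)."""
    author_type = "Organization" if author_is_organization else "Person"
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": author_type, "name": author_name},
    }

# Content signed by an individual writer.
signed = article_markup("How to read a nutrition label", "Jane Doe")
# Content published on behalf of the company.
corporate = article_markup("Our editorial policy", "Example Health Media", author_is_organization=True)

print(json.dumps(signed, indent=2))
print(json.dumps(corporate, indent=2))
```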

- reviewedBy

The reviewedBy property is a great opportunity to show good EEAT of a site: if we use expert reviewers on our content (e.g., medical or legal consultants), it might be useful to put their name on the page to give a signal of accuracy, using precisely this property to indicate the name of the person or organization.

This is a great approach to use when regular site authors may lack EEAT or a strong online presence, while instead the reviewers are real experts with a known online presence, and can help, for example, sites or pages dealing with YMYL topics, where the weight of expertise is strongest according to Google.
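A minimal sketch of this setup, assuming a page written by a staff author and checked by an outside expert, could look like this. The names, job title, profile URL and review date are hypothetical.

```python
import json

# Hypothetical page: a staff writer as author, a medical expert as reviewer.
reviewed_page = {
    "@context": "https://schema.org",
    "@type": "WebPage",
    "name": "Understanding blood pressure readings",
    "author": {"@type": "Person", "name": "Sam Writer"},
    # The expert who checked the content for accuracy.
    "reviewedBy": {
        "@type": "Person",
        "name": "Dr. John Roe",
        "jobTitle": "Cardiologist",
        "sameAs": ["https://www.example.org/doctors/john-roe"],
    },
    # Date of the most recent expert review, in ISO 8601 format.
    "lastReviewed": "2023-03-15",
}

print(json.dumps(reviewed_page, indent=2))
```

Keeping the author and the reviewer as separate objects lets the reviewer's credentials be described in full without attributing the writing to them.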

- Citations

Using the citation property (singular in the Schema.org vocabulary) we can list the other publications, articles or creative works cited within the content or linked on the page. This is a good way to show search engines that we refer to authoritative and reliable sources to support our work, which is a great strategy for EEAT.

In addition, listing citations in Schema markup can help position the brand in relation to others with whom we are associating, and thus potentially provide Google with qualitative information about our trustworthiness.
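One way to attach cited sources to an article's markup is sketched below. The helper `with_citations` and the source titles and URLs are hypothetical; the property used is Schema.org's `citation`, which accepts a list of creative works.

```python
import json

def with_citations(article, sources):
    """Return a copy of the article markup with a citation list added (illustrative)."""
    cited = dict(article)
    cited["citation"] = [
        {"@type": "CreativeWork", "name": name, "url": url} for name, url in sources
    ]
    return cited

article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "How much sleep do adults need?",
}
# Hypothetical sources referenced in the article body.
sources = [
    ("Example Sleep Guidelines", "https://www.example.org/sleep-guidelines"),
    ("Example Study on Sleep Duration", "https://journal.example.com/sleep-duration"),
]

print(json.dumps(with_citations(article, sources), indent=2))
```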

Structured data to improve the EEAT of pages

The Schema.org library is continually being updated and expanded, so it is always useful to check for new features. And although they are not a direct ranking factor (as clarified several times publicly), Google consistently recommends using structured data as much as possible to help its search engine make sense of our site.

Indeed, it can be assumed that by better understanding the content and entities included in the site through structured data, Google will be able to optimize and improve its work to evaluate the overall quality of the site and its EEAT.

EEAT signals for Google: 8 elements to add to site pages

Structured data can therefore help increase perceived E-E-A-T, and Andrei Prakharevich’s contribution allows us to identify 8 more signals to add to pages to improve E-E-A-T for Google.

These simple interventions obviously only serve as support for our pages, to try to convince Google of the level of experience, expertise, authority, and reliability we have achieved, but certainly it is not enough just to create a few more pages or add additional information.

The starting point always remains the creation of quality content, possibly created by real experts: the next step is to use these strategies to communicate to Google the value of this content and try to benefit from it (also) in terms of ranking.

- Add (and curate) the author’s biography

One of the most common ways to lend authority to our content is to have it written by a proven expert or, at least, a person who can appear to be an expert. This is why it might be preferable to openly name the authors of the content instead of marking the text with the brand name or staff/editorial.

At present, best practices for making the author known include indicating the author’s name on the article page and then linking that name to a bio page, also posted on the same website.

This profile page, which serves to give importance to the name, helps us to display various information and – following the latest SEO trends – we can consider it almost like a microsite, adding a variety of media and links. Typically, the author’s bio page includes the following data:

- Photo

- Name

- Job Title

- Description

- Links to social media profiles

- Experience (years in the field, published work, projects, etc.)

- Other published articles on the site

- Linktree

In addition, we can take it a step further and strengthen the author information with structured data, as mentioned above.

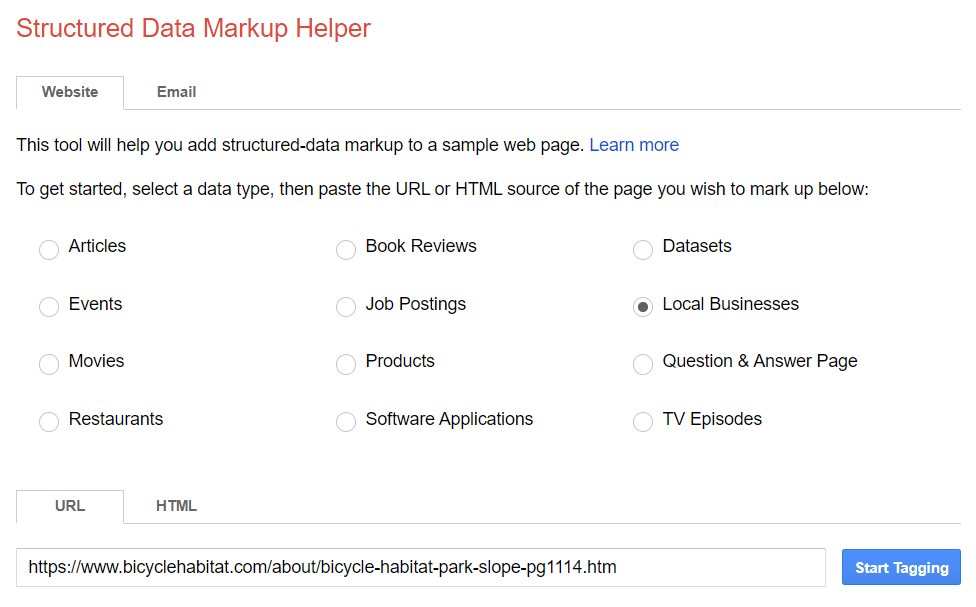

The Article schema is used on the article page to highlight the author’s name and other details about the content itself: it can be added manually, through a plug-in or with Google’s Structured Data Markup Helper.

On the author’s biography page, on the other hand, we can use the Person schema, which allows tagging all kinds of personal details (basically, those listed above, such as photos, descriptions, links, and so on), taking special care to properly implement the sameAs property, which allows tagging links to other profiles of the same author and helps Google recognize the person and establish them as an entity.

Currently, it is not possible to use the Google tool to implement the Person markup, which can therefore only be added manually or with a dedicated plug-in.

- Adding a date to the article

Google has stated many times that the date of an article, and its freshness in general, are not ranking factors. Nevertheless, the SEO community has carried out various experiments to verify or disprove these claims (e.g., changing the dates of all articles on a website or removing dates altogether), without identifying a single strategy that works best for everyone.

In any case, what we do know is that the date of the article is considered important for accessing Google News and is listed in the transparency section of Google’s news service rules; although there is no certainty as to whether this relevance extends (or not) to non-news articles, it is nonetheless a sign that for Google, dates contribute to the credibility of content in general.

Therefore, also in the interest of full transparency, the advice is to include both the date of publication and the date the article was last updated, using the article schema to highlight it.
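The two dates can be expressed in Article markup as ISO 8601 strings, as in this minimal sketch. The helper `article_dates` and the sample dates are hypothetical; the sanity check on the order of the dates is an added assumption, not a Google requirement described in the article.

```python
import json
from datetime import date

def article_dates(published: date, modified: date) -> dict:
    """Return datePublished/dateModified fields in ISO 8601 format (illustrative)."""
    # Assumption: an update cannot precede the original publication.
    if modified < published:
        raise ValueError("dateModified cannot be earlier than datePublished")
    return {
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }

article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "Example headline",
    **article_dates(date(2022, 12, 20), date(2023, 4, 3)),
}

print(json.dumps(article, indent=2))
```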

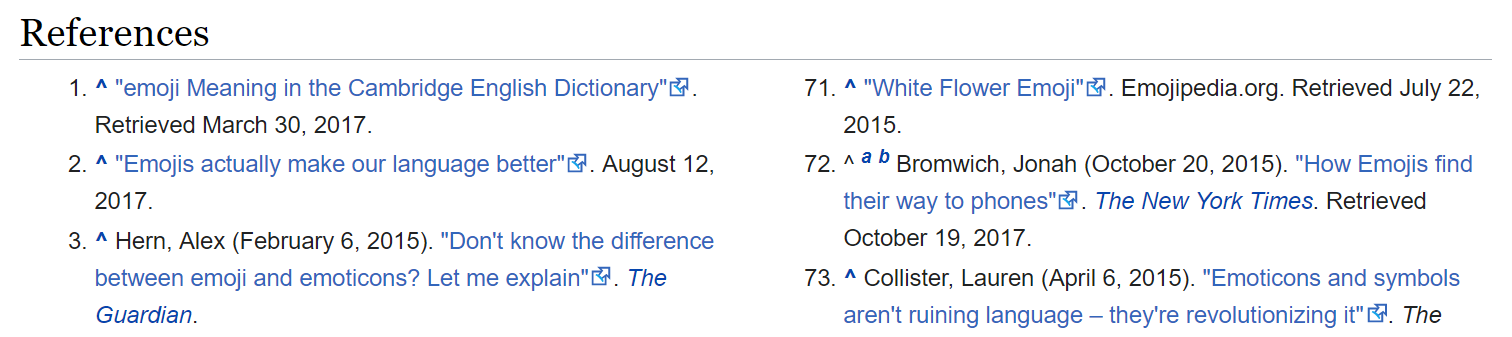

- Use of sources and citations

For Prakharevich, “the SEO community is fixated on gaining authority through backlinks, getting other pages to link to your page, but you can actually gain some authority by linking to other credible sources of information in your field.”

In his experience, Web site owners are often reluctant to link to other Web sites, “because they’re afraid of losing traffic and wasting link juice,” but this should not be a big concern “as long as you link to non-competing sites” and somehow “diminish the importance of those links by setting them up in a boring way that doesn’t encourage clicks”. For new sites in particular, the potential benefits of being associated with other authoritative sites “far outweigh the risks of pouring in a little link juice.”

There are some ways we can incorporate references to sources into our pages, such as including:

- Image credits.

- Citations from experts in the field.

- Properly anchored links within the text.

- Dedicated blocks of references at the end of the page.

For the expert, precisely the blocks of references are somewhat undervalued in “regular articles,” but it is “a waste, because they meet all the criteria for a perfect SEO device: the section is clearly titled for the benefit of Google, each source is correctly described, and the whole thing looks too boring for real readers to click on anything,” as in the example from Wikipedia.

- Insert policy pages

There are multiple Google documents that emphasize that having easily accessible policies and customer service pages is very important for the credibility and ease of use of a website, and quality raters are specifically tasked with looking for these pages in order to determine the trustworthiness of the site.

It follows, then, that it can be useful to create pages dedicated to all types of policies relevant to our site and to link to them in the footer, especially if we are running multiple promotions and customer loyalty programs.

- Provide company details

Somewhat similar to the previous point, another sign of transparency is the disclosure of information about the company itself: whether we provide goods, services, advice, or even just information, users have an interest in seeing who is behind the site. Being transparent about site ownership is a strong EEAT signal and, in the most practical sense, it also matters because there is someone to hold accountable if something goes wrong.

Some of the pages that can help us create a sense of transparency are:

- About (vision, mission, history, competitive advantages)

- Team

- Our office

- Contact details

These four pages tell the story of the company from different angles (“this is what we do, who we are, where we work, and this is how you can get in touch with us”), making it tangible, so to speak, and offering a sense of security to people browsing the site.

Again, structured data helps us so that Google recognizes our attempt at transparency, and we can tag the business details disclosed by our pages using LocalBusiness markup.
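A minimal sketch of such markup, using Schema.org's LocalBusiness type, is shown below. The business name, address, phone number and opening hours are hypothetical placeholders and should mirror what the contact and office pages actually display.

```python
import json

# Hypothetical business details; they should match the visible contact page.
business = {
    "@context": "https://schema.org",
    "@type": "LocalBusiness",
    "name": "Example Studio",
    "url": "https://www.example.com",
    "telephone": "+1-555-0100",
    "address": {
        "@type": "PostalAddress",
        "streetAddress": "1 Example Street",
        "addressLocality": "Exampletown",
        "postalCode": "00000",
        "addressCountry": "US",
    },
    # Opening hours in the compact day-range format used by Schema.org.
    "openingHours": "Mo-Fr 09:00-18:00",
}

print(json.dumps(business, indent=2))
```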

- The approach to UGC, user-generated content

Having user-generated content on the site can be a way to “demonstrate that your website attracts real users and engages them enough to leave comments and reviews,” and so it can be worthwhile in terms of EEAT to allow visitors to leave comments, reviews, questions, and other types of UGC.

As we know, however, this is also one of the most common spam fronts, and Google may actually penalize the site because of an inadequately maintained comments section, but with some countermeasures, problems can be avoided – for example, by preventing automatic account creation, activating moderation features, and reviewing user content to look for any automatically generated posts, spam links, or malicious comments that might spread sensitive information or offend other users.

- Serving HTTPS pages

There is little doubt about the importance of HTTPS as a signal of a site’s trustworthiness, reaffirmed by both the Quality Rater Guidelines and Page Experience (of which it is one of the technical factors, along with Core Web Vitals, mobile-friendliness, and the absence of intrusive interstitials).

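Therefore, if we have not yet enabled HTTPS on the site, it is a good idea to check with the hosting provider (many offer free SSL certificates) or get a free certificate from Let’s Encrypt, keeping in mind that certificates expire and must be renewed periodically (Let’s Encrypt certificates are valid for 90 days, and renewal can be automated).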
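Since certificates expire, it can help to monitor how many days remain before renewal is due. The sketch below uses only Python's standard library; the function names are hypothetical, and the host name in the usage comment is a placeholder.

```python
import socket
import ssl
from datetime import datetime, timezone

def days_until_expiry(not_after: str, now: datetime) -> int:
    """Whole days left before a certificate's notAfter date (illustrative helper)."""
    expires = datetime.fromtimestamp(ssl.cert_time_to_seconds(not_after), tz=timezone.utc)
    return (expires - now).days

def fetch_not_after(hostname: str, port: int = 443) -> str:
    """Open a TLS connection and return the certificate's notAfter string."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]

# Example usage (requires network access; "www.example.com" is a placeholder):
# remaining = days_until_expiry(fetch_not_after("www.example.com"), datetime.now(timezone.utc))
# print(f"Certificate expires in {remaining} days")
```

A check like this could run on a schedule and raise a warning when the remaining days drop below a chosen threshold, so that an expired certificate never reaches visitors.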

- Taking advantage of external signals

Prakharevich’s last piece of advice “is a bit of a trick,” because external signals are not added to the website per se, but help strengthen the overall EEAT profile and can also be “controlled,” as in the case of the backlink profile and the Google Business Profile listing (formerly Google My Business).

The quality and size of the site’s backlink profile “are the only EEAT signals that Google can measure algorithmically,” so of course we must avoid sending the opposite signal, i.e., an unnatural profile with backlinks from spam pages or sites.

If we have a physical business location, then, a Business Profile is critical, because this tool is now the ultimate business directory and the key place users go to learn about a local business.

The quality of the profile (that is, the completeness of the information, the number of reviews, and the average rating) is taken into account for its placement on Google Maps, as well as on other Google platforms. In addition, the Business Profile is also one of the places that quality raters check when evaluating a brand’s EEAT, which is why it is crucial to curate the information carefully. A further step would be to encourage user feedback by responding to positive reviews, trying to solve problems reported in negative ones, and encouraging our customer base to go to Google and actually leave a review.