Complete guide to robots.txt file, to control crawlers on the site

It is a small but crucial component that plays a key role in the optimization and security of websites: we are talking about the robots.txt file, the text document that has the main function of communicating with search engine robots, telling them which parts of the site can be examined and indexed and which should be ignored. Officially created on June 30, 1994 as the result of the work of a group of webmasters to establish a common standard for regulating crawler access to their sites, over time the file has evolved into the robots exclusion protocol we know today, becoming a site management tool that can be used by anyone with an online project who wants to communicate specific directives to search engine crawlers.

What is the robots.txt file

The robots.txt file is a text file that resides in the root directory of a website and is used to manage search engine crawler traffic to the site and, in particular, can communicate which parts of a website should not be explored or indexed.

Its purpose is to optimize crawling activity, preventing search engines from wasting time and resources on content that is not essential or not intended for indexing, such as sections of the site under development, confidential information, or pages that do not offer added value to search engines.

Take care of your site

It therefore provides specific instructions that can be general, i.e., applicable to all crawlers, or targeted to specific bots, outlining which URLs or sections of the site are off-limits: in this sense, it acts as a filter that directs bot traffic, ensuring that only relevant areas of the site are explored and indexed, so as to avoid an overload of requests from the crawlers themselves.

Put in more immediate words: the robots.txt file is a simple, small text file, located in the root directory of a site (otherwise the bots will not find it) and written in ASCII or UTF-8, that contains instructions for blocking all or some search engine spiders from crawling some or all pages or files on the site.

However, it is important to note that the robots.txt file is not a tool for hiding pages from search results, but rather serves to manage which resources are crawled by crawlers. In fact, it is a public file, which is accessed not only by the bots themselves, but also by human users: to view the robots.txt file of any site, we generally only need to add “/robots.txt” to the URL of the domain we wish to examine in the browser address bar.

Why it is called robots.txt: the history of the file and protocol

The robots.txt file is the practical implementation of the Robots Exclusion Standard (or REP) protocol, a standard used by websites to communicate with search engine crawlers since the early days of the World Wide Web, when website operators began to feel the need to provide precise instructions to robots, specifically asking them to apply parsing restrictions on site pages.

We can trace the “spark” of genesis back to a meeting that took place in November 1993 in Boston, during a conference of the fledgling World Wide Web: Martijn Koster, a Dutch researcher working at Nexor, a Web software company, proposed the idea of a standard protocol for regulating crawler access to Web sites. Koster already had experience with crawler issues, having created a web archive called ALIWEB, which is considered one of the first search engines. His proposal was motivated by the need to prevent server overload caused by crawlers and to protect sensitive web resources from being indexed.

Koster’s proposal was well received by the web community and members of the robots mailing list (robots-request@nexor.co.uk): as early as June 1994, a document was published describing the “Robots Exclusion Standard” and sanctioning the birth of the robots.txt file. This standard was not a formal protocol endorsed by a standards body, but rather a community agreement, which nonetheless was quickly adopted by the major search engines of the time, such as WebCrawler, Lycos, and later by Google. And even today, after 30 years, there is no real standard for the robots protocol.

Returning to the more practical aspect, the name “robots.txt” explains exactly the nature and functions of this document: it is a plain text file, in txt format precisely, dedicated to “robots,” i.e., the automated software agents of search engines that browse the Web for indexing purposes.

What the robots.txt file is for

But what is the usefulness of the robots file for SEO and, more generally, for the optimal management of a site?

As mentioned before, this document allows us to manage crawler requests from Google or other search engines, but more specifically it helps us optimize the crawl budget because it prevents bots from crawling similar or unimportant pages on the site. Search engine robots may spend limited time crawling the pages of a site, and if they waste time on parts of the site that do not bring value, they may fail to index the most important pages. With the robots.txt file, it is possible to effectively direct the attention of crawlers to content that really matters, ensuring better visibility in search results.

Therefore, this tool serves SEO professionals and site managers to apply parsing restrictions on the pages that are open to crawlers from various search engines for their regular activity; when set correctly, the rules prevent crawlers from taking up bandwidth and CPU time by parsing content not intended for publication in search results, allowing resources to be allocated to browsing pages that are more useful to the business.

The importance of the robots.txt file also goes beyond simply managing crawler accessibility: it represents a critical first step in search engine optimization, directly influencing what content is shown in search results while simultaneously keeping useless or even potentially harmful pages out of the index for site ranking. Proper implementation can significantly improve site crawling by search engines, ensuring that the most relevant pages are easily found by users.

Communication between site and bot is done through a very simple protocol, which explicitly states a list of pages and directories that spiders cannot pick up and, consequently, index in the common way.

It is important to note, however, that although most search engines respect the directions of the robots.txt file, adherence to this protocol is not mandatory, and some less scrupulous bots may ignore it.

The importance of this file for SEO

It is good to specify that each site can produce only a single robots.txt file, and as a general rule, it is always recommended to create a robots.txt file, even if we intend to give a green light to all spiders on all pages: the simple presence of the document in the site’s root directory will avoid the appearance of a 404 error.

In SEO and onpage optimization strategy, the robots file plays a central role because it allows us to manage crawler traffic to our site and, as explained by Google, “sometimes to exclude a page from Google, depending on the file type.”

Submitting a well-structured and well-set up robots.txt file saves the spider a lot of time, which can be spent better reading other parts of the site.

Warning: robots.txt does not always exclude pages from Google

There are other relevant caveats to approaching the creation and use of the file correctly: above all, we need to be aware that the robots.txt file can only prevent it from being crawled-and not always, as we shall see-and it is not meant to prevent a page from showing up in search results. Robots “is not a mechanism to exclude a web page from Google,” because to achieve this goal one must “use noindex instructions or tags or password-protect pages.”

Also, we said, following the guidelines in robots.txt files is not legally binding on crawlers: reputable search engines such as Google follow these guidelines to maintain good relations with website operators, but there is no absolute guarantee that every bot will follow the instructions. Therefore, to protect truly sensitive or private information, we need to take more robust security measures.

From a technical point of view, then, asking the spider to disregard a particular file does not mean hiding or preventing absolute access to that resource either, which can be found by users who know and type in the precise URL. If there are other pages that link back to a site page with descriptive text, that site resource might even still be indexed even if it has not been visited, generating a search result without a description.

The on-page image, taken from Google’s guides, highlights a Google search result that is missing information because the site prohibited Googlebot from retrieving the meta description but did not actually hide the page and prevent its indexing in Search.

Pages indexed despite robots exclusion

Thus, as we always read in Google’s official sources, “a page blocked by the robots.txt file can still be indexed if other sites have links pointing back to the page”: this means that potentially even blocked resources can appear in Google Search SERPs if the referring URL address and other related information such as anchor text is publicly available elsewhere.

Another case of “non-exclusion” could result from bots interpreting the instructions in the file differently, which is why it is important to “know the most appropriate syntax to apply to different web crawlers” to avoid these misunderstandings.

Ultimately, according to Google the only method “to ensure the total security of confidential information” on the site is to use “password protection of private files on your server” or other more specific tools as a blocking system.

How the robots.txt file works

The robots.txt file is a document in text format, a normal text file that conforms to the robots exclusion protocol.

One of the most important aspects is its simplicity: it consists of one or more rules, and each rule blocks or allows access by all or a specific crawler to a file path specified in the domain or subdomain where the file is hosted.

Unless otherwise specified in the document, crawling is implicitly allowed for all files and pages on the site. Instead, through rules, instructions known as “directives,” we can tell search engine robots which pages or sections of the site should be excluded from indexing.

Thus, the main readers of the robots.txt file are search engine crawlers, such as Googlebot from Google, Bingbot from Bing, and other smaller search engine bots, which search the robots.txt file before exploring a site, to figure out which areas are off-limits and which are open to indexing. As mentioned, however, it is important to note that the guidelines contained in the robots.txt file are based on a voluntary agreement between crawlers and websites, and not all bots respect these guidelines. In addition, by its characteristics the file does not provide effective protection against unwanted access-sensitive or private information should be protected through more secure methods such as authentication or encryption.

In general, Google says that small sites that do not require detailed crawling management may not need to implement a robots file; in fact, given also the simplicity of its creation and management, it is still advisable to implement this document for any type of site, including even small personal blogs. In fact, any project that wishes to optimize its online visibility and manage the indexing of its content can benefit from the use of a well-configured robots.txt file.

Even more so, sites hosting sensitive content or with areas that require registration have an additional reason to create the robots.txt file, which proves to be particularly useful in preventing these pages from being indexed and shown in search results. In addition, it is critical for sites that have limited server resources and want to make sure that crawlers do not overload their system, focusing only on the most relevant pages.

The rules and commands for blocking crawlers and pages

The instructions contained in the robots.txt file are guidelines for accessing sites and, in the case of GoogleBot and other reputable web crawlers, ensure that blocked content is not crawled or indexed (with the distinctions mentioned above), while there is no opposite rule for telling a spider to “fetch this page” all the time.

In practical terms, each file record includes two fields: “User-agent” to identify the specific crawler and “Disallow” to indicate the resources not to be explored, which serve to define the exclusion list. The user-agent field empowers you to create specific rules for different bots and exclude the activity of specific search engine crawlers: for example, you can allow Googlebot freedom to crawl but apply the exception rule only to the Bing bot.

In the disallow field, on the other hand, you can enter specific URLs or a pattern of site URLs that should be excluded from indexing by the robot/spider indicated above: in this way, for example, you can decide to exclude a folder containing confidential information from being crawled or to restrict access to certain crawlers to prevent server overloads.

The robots.txt file, as defined by the standard, was simple in its structure and easy to implement. Webmasters simply had to create a text file, name it “robots.txt,” and place it in the root directory of their Web site. Within the file, they could specify directives for crawlers, using “User-agent” and “Disallow” syntax.

The rules then inform about the bot to which they apply (user-agent), the directories or files that the agent cannot access, and those that it can access (allow command), assuming by default that a crawler can crawl all pages or directories that are not explicitly blocked by a disallow rule.

Differences between robots.txt file and robots meta tag

The robots file represents, so to speak, an evolution of the robots meta tag, a tool designed to communicate with all search engine spiders and invite them to follow specific directives regarding the use of the specific web page.

Also referred to as meta robots directives, meta robots tags are snippets of HTML code, added to the <head> section of a Web page, that tell search engine crawlers how to crawl and index that specific page.

There are many differences between these elements, but two are substantial: the meta tag in the HTML code is dedicated to the individual Web page, and therefore not referable to crawling over entire groups of pages or entire directories, and also does not allow for differentiating directions for individual spiders.

How to create a robots.txt file

Although some site hosting uses in-house systems to manage the information for the crawlers, it is still good to know the form and structure of the robots.txt file, which, as mentioned, is a plain text file that conforms to the Robot Exclusion Protocol.

In light of the definitions provided, we can then outline a guide on how to create a proper robots.txt file that can significantly improve the efficiency of site crawling by search engines and thus help optimize online visibility.

Be careful, however: creating a robots.txt file may seem straightforward, but it is important to remember that an incorrect setting can prevent search engines from accessing important parts of our website, negatively affecting our organic traffic.

- Understanding the syntax

The first step in creating a robots.txt file is to understand its syntax. The file is based on two key concepts: “User-agent” and “Disallow.” “User-agent” refers to the search engine robot to which the instructions are directed, while “Disallow” indicates the areas of the site that should not be scanned. Multiple “Disallow” directives can be specified for each “User-agent,” and if we do not wish to restrict access to any part of the site, we can simply use “Disallow:” without specifying a path.

In summary:

- User-agent identifies the search engine to which the rules refer. For example, “User-agent: Googlebot” refers specifically to the Google crawler.

- Disallow specifies which URLs or folders should not be crawled by the specified crawler. For example, “Disallow: /private-folder/” prevents access to the specified folder.

- Allow is used to grant access to specific parts of a site that would otherwise be blocked by a Disallow rule-for example, to explicitly allow access to a specific subfolder of an overriding blocked folder.

The syntax also has strict rules, beginning with the exclusive adoption of ASCII or UTF-8 characters and case-sensitive distinction; each file consists of one or more rules, which in turn are composed of several instructions to be individually reported on the rows.

- File creation

To create a robots.txt file, simply open a simple text editor that uses standard ASCII or UTF-8 characters such as Notepad on Windows or TextEdit on Macs-while it is inadvisable to use a word processor, which typically saves files in a proprietary format and may add unexpected characters or non-ASCII characters that can cause problems for crawlers.

We start typing the rules we wish to apply, following the syntax explained above. For example:

User-agent: * Disallow: /directory-segreta/

This example tells all search engine robots (indicated by the asterisk) not to scan or index the contents of the “directory-secret” directory.

One suggestion: the more specific the directives, the less chance of errors or misinterpretation by search engines.

Also, care should be taken not to indicate in the sitemap URLs blocked in robots, to avoid penalizing short-circuits for the site.

- Saving and uploading the file

To set up an effective robots.txt file, there are a few format and location rules to be followed. First, the file name must be precisely robots.txt, only one can exist per site, and comments are any line.

Then, after entering all the necessary rules, we save the document with the name “robots.txt.”

We upload the file to the web server via FTP or using the hosting provider’s control panel, and verify that it is located in the root of the website-this means that it should be accessible via the URL http://www.nostrosito.com/robots.txt. More precisely, the document must necessarily reside in the root directory of the website host to which it applies and cannot be placed in a subdirectory; if the root directory of the website cannot be accessed, blocks through meta tags can be used alternatively. The file can be applied to subdomains or non-standard ports.

- Verification

Once the robots.txt file has been uploaded, it is important to verify that it is properly configured and that search engines can access it. Tools such as Google Search Console offer features to test and verify the robots.txt file, allowing you to identify and possibly correct errors or accessibility issues.

What the robots.txt file does: tips for perfect SEO management

The robots.txt file thus plays a central role in the management and optimization of a website, and its proper configuration is essential for SEO because it can help ensure that search engines explore the site efficiently, respecting the directions provided by the project owners or managers.

To explore these practical aspects in more detail, we refer to an article by Anna Crowe on searchenginejournal, which analyzes and describes the main features of the robots file and offers really interesting insights for effective management.

The author first goes over the basics: a robots.txt file is part of the robots exclusion protocol (REP) and tells crawlers what should be crawled.

Google engages Googlebot to crawl Web sites and record information about that site to figure out how to rank it in search results. You can find the robots.txt file of any site by adding /robots.txt after the Web address, like this:

www.mywebsite.com/robots.txt

The first field that is displayed is that of the user-agent: if there is an asterisk *, it means that the instructions contained apply to all bots landing on the site, without exceptions; alternatively, it is also possible to give specific indications to a single crawler.

The slash after “disallow” signals to the robot the categories/sections of the site from which it must stay away, while in the allow field you can give directions on the scan.

The value of the robots.txt file

In the SEO consulting experience of the author, it often happens that customers – after the migration of a site or the launch of a new project – complain because they do not see positive results in ranking after six months; 60 percent of the time the problem lies in an outdated robots.txt file.

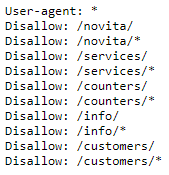

In practical terms, almost six out of ten sites have a robots.txt file that looks like this:

User-agent: *

Disallow: /

This instruction blocks all web crawlers and every page of the site.

Another reason why robots.txt is important is Google’s crawl budget: especially if we have a large site with low quality pages that we don’t want to scan from Google, we can block them with a disallow in the robots.txt file. This allows us to free up part of the Googlebot scan budget, which could use its time to only index high-quality pages, the ones we want to place in the SERPs.

In July 2019 Google announced its intention to work on an official standard for the robots.txt, but to date there are no fixed and strict rules and to orient you have to refer to the classic best practices implementation.

Suggestions for the management of the robots.txt file

And so, file instructions are crucial for SEO but can also create some headaches, especially for those who do not chew technical knowledge. As we said, the search engines scan and index the site based on what they find in the robots.txt file using directives and expressions.

These are some of the most common robots.txt directives:

- User-agent: * – This is the first line in the robots.txt file to provide crawlers with the rules of what you want scanned on the site. The asterisk, as we said, informs all the spiders.

- User-agent: Googlebot – These instructions are only valid for the Google spider.

- Disallow: / – This tells all crawlers not to scan the entire site.

- Disallow: – This tells all crawlers to scan the entire site.

- Disallow: / staging / : instructs all crawlers to ignore the staging site.

- Disallow: / ebooks / * .pdf : instructs crawlers to ignore all PDF formats that may cause duplicate content problems.

- User-agent: Googlebot

Disallow: / images / – This only tells the Googlebot crawler to ignore all the images on the site. - * – This is seen as a wildcard character representing any sequence of characters.

- $ : It is used to match the end of the URL.

Before starting to create the robots.txt file there are other elements to remember:

- Format the robots.txt correctly. The structure follows this scheme:

User-agent → Disallow → Allow → Host → Sitemap

This allows search engine spiders to access the categories and Web pages in the right order.

- Make sure that each URL indicated with “Allow:” or “Disallow:” is placed on a separate line and do not use spacing for separation.

- Always use lowercase letters to name the robots.txt.

- Do not use special characters except * and $; other characters are not recognized.

- Create separate robots.txt files for the various subdomains.

- Use # to leave comments in your robots.txt file. Crawlers do not respect lines with #.

- If a page is not allowed in robots.txt files, the fairness of the link will not pass.

- Never use robots.txt to protect or block sensitive data.

What to hide with the file

Robots.txt files are often used to exclude directories, categories or specific pages from Serps, simply using the “disallow” directive. Among the most common pages you can hide, according to Crowe, are:

- Pages with duplicated contents (often printer-friendly contents)

- Pagination pages.

- Dynamic pages of products and services.

- Account pages.

- Admin pages.

- Shopping cart

- Chat

- Thank you page.

Robots.txt and special files, the rules not to make mistakes

Still on the subject of managing the robots.txt file comes to our support one of the classic pills from #askGooglebot, the YouTube series in which John Mueller answers a question posed by the SEO community. In one episode, in particular, we talk about the robots.txt file and, to be precise, best practices on certain file types and extensions, such as .css, php.ini and .htacess, with the Googler explaining what is the right way to go in these cases. That is, whether it is better to let Googlebot have access or prevent it from crawling those pages.

It all starts, as usual, from a user’s question, asking the Search Advocate how to behave with respect to the robots.txt file and “whether to disallow files such as /*.css, /php.ini and even /.htaccess,” and then, more generally, how to handle these special files.

John Mueller first responds with his usual irony, saying that he “can’t prevent from preventing” access to such files (literally, “I can’t disallow you from disallowing those files”), and then goes into a bit more detail and offers his actual opinion, because that approach seems “to be a bad idea.”

The negative effects of unwanted blocking

In some cases, disallowing special files is simply redundant and therefore unnecessary, but in other circumstances it could seriously impair Googlebot’s ability to crawl a site, with all the negative effects that come with it.

Indeed, the procedure the user has in mind is likely to cause damage to the crawling ability of the Google bot, and thus impair page comprehension, proper indexing, and, not least, ranking.

What does disallow on special files mean

Mueller quickly explains what it means to proceed with that disallow and what the consequences can be for Googlebot and the site.

- disallow: /*.css$

would deny access to all CSS files: Instead, Google must have the ability to access the CSS files so that it can properly render the pages of the site. This is crucial, for example, to be able to recognize when a page is optimized for mobile devices. The Googler adds that “generally the don’t get indexed, but we need to be able to crawl them.”

So if the concern of site owners and webmasters is to disallow CSS files to prevent them from being indexed, Mueller reassures them by saying that this usually does not happen. On the contrary, blocking them complicates Google’s life, which needs the file regardless, and in any case even if a CSS file ends up being indexed it will not harm the site (or at any rate less than the opposite case).

- disallow: /php.ini

php.ini is a configuration file for PHP. In general, this file should be locked or locked in a special location so that no one can access it: this means that even Googlebot does not have access to this resource. Therefore, banning the scanning of /php.ini in the robots.txt file is simply redundant and unnecessary.

- disallow: /.htaccess

as in the previous case, .htaccess is also a file a special control file, locked by default that, therefore, offers no possibility of external access, not even to Googlebot. Consequently, there is no need to use disallow explicitly because the bot cannot access it or scan it.

Do not use a Robots.txt file copied from another site

Before concluding the video, John Mueller offers one last precise tip for proper management of the robots.txt file.

The message is clear: do not un-critically copy and reuse a robots.txt file from another site simply assuming it will work for your own. The best way to go about avoiding errors is to think carefully about “what parts of your site you want to avoid crawling” and then use the disallow accordingly to prevent Googlebot access.

The 10 mistakes to avoid with the robots.txt file

Simplify and improve your work

Before concluding, it is perhaps appropriate to devote a few more steps to this critical element of SEO and site management by going over what are the most common and worst mistakes with the robots file that can sink a project on the Web.

- Creating an empty robots file

In the guidelines for this tool, Google explains that the robots file is only necessary if we want to block permission for crawlers to crawl, and sites “without robots.txt files, robots meta tags or HTTP X-Robots-Tag headers” can be crawled and indexed normally. Therefore, if there are no sections or Urls that you want to keep away from crawling, there is no need to create a robots.txt file, and most importantly, you should not create an empty resource.

- Creating files that are too heavy

Excesses are never good: while an empty file is obviously nonsense, you should also steer clear of the diametrically opposite situation of making robots.txt too complex and heavy, which can pave the way for webmaster and Google problems.

The standard limits the file size to 500 kb, and Google makes it clear that it will ignore excess text. In any case, it should be remembered that Robots.txt files should always be short, precise and very clear.

- Blocking useful resources

From these premises we also understand the worst consequence of wrong use of the robots.txt file, which is to block the crawling of useful resources and pages of the site, which instead should be fully accessible to Googlebot and other crawlers for the business of the project. It sounds like a trivial mistake, but in reality it happens frequently to find potentially competitive URLs blocked, either unintentionally or due to a misunderstanding of the tool.

As we said, the file can be a powerful tool in any SEO arsenal because it is a valuable way to control and possibly limit how crawlers and search engine robots access certain areas of the site or content that does not offer value when found by users in searches.

However, one must be sure to understand how the robots.txt file works, just to avoid accidentally preventing Googlebot or any other bot from crawling the entire site and, as a result, not finding it in search results.

- Wanting to use the file to hide confidential information

The robots.txt file is public and can be viewed by average users: thinking of using it to hide confidential pages or pages containing user data is an error of concept, as well as a glaring mistake. Instead, other systems must be used to achieve this goal, starting with credential protection methods.

- Trying to prevent pages from being indexed

People often misinterpret the usefulness and function of the robots.txt file and believe that putting a URL in disallow will prevent the resource from appearing in search results. On the contrary, blocking a page in this way does not prevent Google from indexing it nor does it serve to remove the resource from the Index or search results, especially if these URLs are linked from “open” pages.

Such a misunderstanding causes the URL in Disallow to appear in SERPs, but lacking the right title and meta description (a field in which an error message appears).

- Using disallow on pages with noindex tags

A similar misunderstanding, again related to resources that you intend to block, concerns the use of the disallow command in Robots.txt on pages that already have a noindex meta tag setting: in this case, the result is exactly the opposite of the desired one!

In fact, the bot cannot correctly read the command that blocks the indexing of the page, and thus a paradoxical case could be generated: the URL with noindex meta tag could be indexed and rank in SERPs, because the disallow in the robots.txt file has rendered the other indications ineffective. The correct way to prevent a page from appearing in search results is to set the noindex meta tag and allow regular bot access on the resource.

- Blocking pages with other tags

Staying still in this type of error, we also mention restricting access to pages with rel=canonical or nofollow meta tags: as mentioned, blocking a URL prevents crawlers from reading the content of the pages and also the commands set, including the important ones just mentioned.

Therefore, in order to allow Googlebots and the like to accurately read and consider status codes or meta tags of URLs, one must avoid blocking such resources in the Robots.txt.

- Not checking status codes

Turning to some technical aspects related to this tool, there is one element to which you need to pay attention, that of page status codes. As reported in Google’s guides for developers, there are several HTTP result codes that can be generated by the scan, and to be precise:

- Code 2xx, positive result

In this case, a conditional allow scan statement is received.

- 3xx (redirect)

Usually, Google follows redirects until a valid result is found or a loop is detected. However, according to reports, there is a maximum number of redirect attempts (e.g., RFC document 1945 for HTTP/1.0 allows up to 5 redirects), after which the process stops and returns a 404 error. Handling of redirects from the robots.txt file to disallowed URLs “is neither defined nor recommended,” and handling of logical redirects for the robots.txt file “based on HTML content that returns a 2xx type error” is neither defined nor recommended, Mountain View says.

- 4xx (client errors)

All 4xx errors (including 401 “Authorization Denied” and 403 “Access Denied” codes) are treated the same way, i.e., assuming there are no valid robots.txt files and no restrictions: this is a scan instruction interpreted as “full allow.”

- 5xx (server error)

Google interprets server errors as “temporary errors that generate a full disallow scan instruction”: again, the scan request is repeated, until a different result code is obtained than the server error. Specifically, if Google “can determine that a site is misconfigured and returns a 5xx type error instead of 404 for missing pages, the 5xx error returned by that site is treated as a 404 error.”

To these types of issues must be added failed requests or incomplete data, which result from handling a robots.txt file “that cannot be retrieved due to network or DNS issues such as timeouts, invalid responses, restored/broken connections, HTTP split errors, and so on.”

- Not taking care of the file syntax

It is important to know and remember that the robots.txt file is case sensitive, meaning it is sensitive to differences between upper and lower case letters. This implies first naming the file correctly (so “robots.txt” with lower case and no other variations), and then making sure that all data (directories, subdirectories, and file names) are written without inappropriate mixing of upper and lower case.

- Not adding the location of a sitemap

We know (or should know) the importance of sitemaps, which are “a valid method of indicating content that Google should crawl and content that Google may or may not crawl,” as the search engine’s guides always remind us. Therefore, it is important to include the location of a sitemap within the robots.txt file to properly manage these activities according to your needs and requirements.