Crawl budget: what it is, how it impacts SEO and how to optimize it

It is the parameter that identifies the time and resources Google intends to devote to a website through Googlebot scans. The crawl budget is actually not an unambiguous and numerically definable value, as clarified repeatedly by the company’s official sources, but we can still take action to optimize it and to direct crawlers to the pages of our site that deserve to be crawled and indexed more frequently, so as to improve the overall effectiveness of our SEO strategy and search engine presence. Today we discuss a topic that is becoming increasingly central to improving site performance, but more importantly, the various strategies for optimizing the crawl budget and fine-tuning the attention the crawler can devote to content and pages that are priorities for us.

What the crawl budget is

The crawl budget refers to the amount of resources – time and processing capacity – that Google devotes to crawling the pages of a website.

Each time the Google Googlebot crawler passes over a site, it decides how many pages to scan and how often. This process has clear limitations, as Google has to balance the load on the site’s server resources and the crawl priority, which varies according to the characteristics of the entire domain.

What is the crawl budget for Google

Changing approach, the crawl budget or crawl budget is the number of URLs that Googlebot can (based on site speed) and will (based on user demand) crawl.

Conceptually, then, it is the frequency balanced between Googlebot’s attempts not to overload the server and Google’s general desire to crawl the domain.

From the perspective of those who own or maintain a site, effectively managing the crawl budget is therefore about controlling what pages are crawled and when they are crawled. A site that wastes resources of its budget on low-quality or marginally relevant pages may not get the best pages visited by the crawler at the right time, negatively affecting search engine rankings.

Put another way, taking care of this aspect could allow you to increase the speed at which search engine robots visit pages on your site; the greater the frequency of these passes, the faster the index detects page updates. Thus, a higher value of crawl budget optimization can potentially help keep popular content up-to-date and prevent older content from becoming obsolete.

As the official guide to this issue says , the crawl budget was created in response to a problem: the Web is a virtually infinite space, and crawling and indexing every available URL is far beyond Google’s capabilities, which is why the amount of time Googlebot can devote to crawling a single site is limited-that is, the crawl budget parameter. Also, not all crawled elements on the site will necessarily be indexed: each page must be evaluated, merged, and verified to determine whether it will be indexed after crawling.

Fundamentally, however, we must keep in mind the distinction between crawling or crawling and indexing, since not necessarily everything that is crawled will become part of Google’s index. This point is essential to understand that even if Google crawls our site efficiently, it does not mean that all detected pages will automatically be indexed and placed in the search results.

The definition of crawl budget

Defining and explaining the crawl budget is therefore particularly important for anyone who wants to understand how the process by which Google indexes a website really works. If we do not have in mind that Google works selectively, we are unlikely to improve our results. Delving into how we balance crawling allows us not only to avoid waste, but also to improve the priority we give to certain sections of our site.

From a theoretical perspective, the crawl budget is often misunderstood or misinterpreted. There is no single fixed number that we can adjust, but it is a combination of factors that can be optimized.

Understanding the meaning of crawl budget

Does Google like my website? This is the question everyone who has a website they want to rank on search engines should ask themselves. There are several methods to understand if indeed a site is liked by Big G, for example through the data within Google Search Console and the Crawl Statistics report, the tool that allows us to know precisely the crawl statistics of the last 90 days and to find out how much time the search engine spends on our site.

Put even more simply, in this way we can find out what is the crawl budget that Big G has dedicated to us, the amount of time and resources that Google devotes to crawling a site.

It follows that, intuitively, the higher this value, the more importance we have for the search engine itself. In practice, if Google crawls and downloads so many pages every day, it means that it wants our content because it considers it to be of quality and value for the composition of its SERPs.

Understanding the true meaning of the crawl budget then becomes an essential step in building a numbers-based optimization strategy. Without tracking and monitoring its data, even with tools such as Google Search Console or SEOZoom, it is not at all easy to understand whether resources are actually being wasted or whether we are encouraging truly targeted crawling on pages that generate quality traffic.

How to measure crawl budget

To recap, then, crawl budget is a parameter, or rather a value, that Google assigns to our site.

We can think of it just as a budget – of time and patience – that Googlebot has to crawl the pages of our site, and so we can also define it as the number of URLs that Googlebot can and wants to crawl, and it determines the number of pages that search engines scan during a search session for indexing.

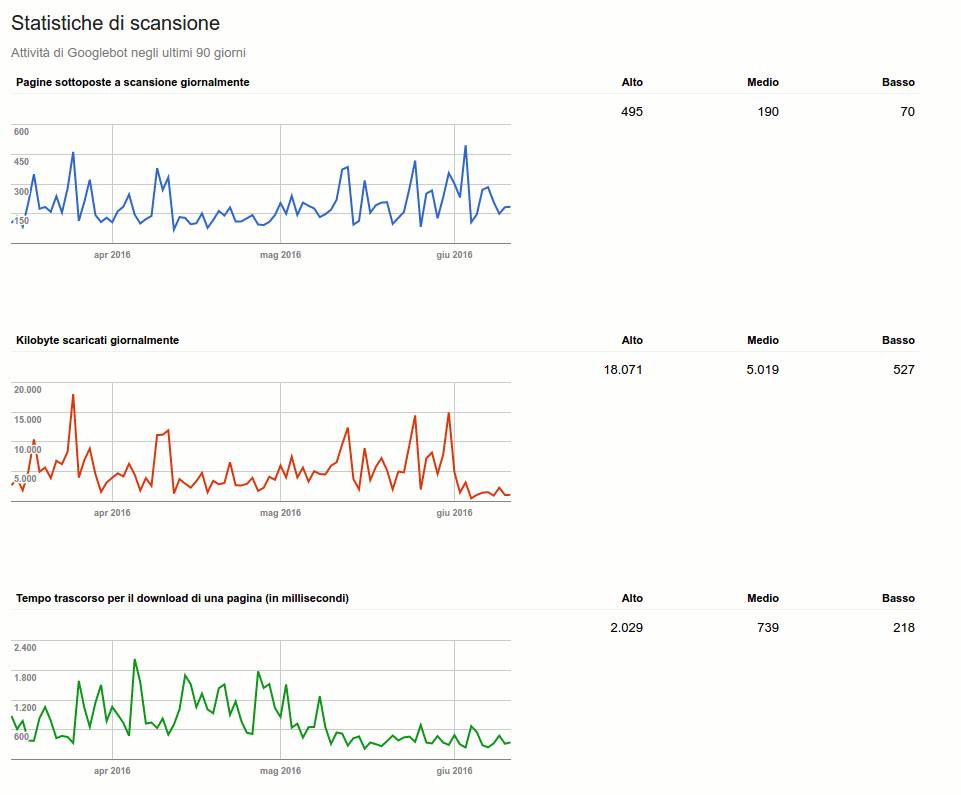

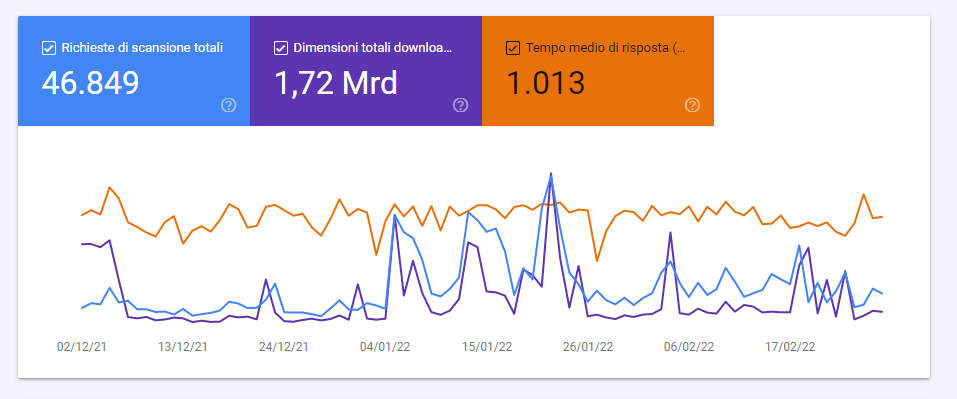

Through Search Console, it is possible to understand how many files, pages, and images are downloaded and scanned by the search engine each day. With this in mind, there are definitely two main values to consider, namely the number of pages Google scans each day and the time it takes to do so. Let’s go over this in detail:

- Pages crawled daily: the ideal value is to have a total number of pages crawled higher than the number of pages on the website, but even a tie (pages crawled equal to the number of pages on the website) is more than fine.

- Elapsed time for downloading: this mainly indicates the time it takes Googlebot to scan our pages, we should keep this value low by going to increase the speed of our website. This will also affect the number of kb downloaded by the search engine during scans, i.e. how easily (and quickly) Google can “download” the pages of a website.

The two images posted here also show the evolution of this domain: values that in 2016 (top screen) signaled average activity, in 2022 have instead become indicative of low demand.

Turning to the behind-the-scenes aspects, Google manages the crawl budget with the aim of balancing the crawl between the needs of its users and the efficiency of the servers on which the sites are hosted. In practical terms, Google considers two main elements: quantity and quality. On the one hand, it makes sure to send its Googlebot frequently enough to ensure that a site’s relevant pages are updated in its indexes (crawl demand); on the other hand, it monitors server load to avoid unnecessarily slowing down sites (crawl rate).

What are crawl rate and crawl demand

Google’s crawl budget is thus a finite resource, which is distributed over the various pages of a domain in an intelligent manner, the value of which depends on the crawl capacity limit (crawlrate) and crawl demand.

When we talk about Google crawl rate we refer to the speed at which Googlebot crawls the site. It is a parameter that Google tries to keep balanced at all times so as not to overload the server, thus interfering with the user experience on a site. However, the opposite factor also occurs: under certain circumstances, Google may decide to reduce its crawl rate if it detects performance problems or if the site is not providing up-to-date content. Hence the importance of monitoring not only the speed of one’s site, but also the regularity of updates.

To complete the picture of Google crawl budget management we find the concept of crawl demand. Although our site may be technically ready to receive many crawls, not all pages are equal in terms of interest to Google. Pages that are frequently updated or that are attracting a lot of traffic from relevant queries will be prioritized over stable pages where no significant changes occur.

For webmasters who wish to maintain complete control over site crawling, tools such as Google Search Console become indispensable. The platform makes it easy to visualize crawl data and understand which pages are using the crawl budget ineffectively, potentially slowing down site loading. In addition, when necessary, you can request Google to crawl the updated pages specifically again by taking advantage of the “request crawl” option in Search Console.

Fully understand Google’s crawl rate and crawl demand

Without going into too much technical detail, however, we can say a few more useful things about these issues.

By crawl rate we refer to the number of requests per second that a spider makes to a site and the time that passes between fetches, while crawl demand is the frequency with which such bots crawl. Therefore, according to Google’s official advice, the sites that need to be most careful about these aspects are those that are larger and have more pages or those that have auto-generated pages based on URL parameters.

Going deeper with the explanations, Googlebot’s job is to crawl each site without overloading its servers, and then it calculates this crawl capacity limit represented by the maximum number of simultaneous connections it can use to crawl a site and the delay between fetches, so that it still provides coverage of all important content without overloading its servers.

The limit on crawl rate or crawling capacity is first and foremost out of respect for the site: Googlebot will try never to worsen the user experience due to fetching overload. So, there is for each site a maximum number of simultaneous parallel connections that Googlebot can use to crawl, and the crawl rate can increase or decrease based:

- The health of the site and the server. In summary, if a site responds quickly, the limit goes up and Google uses more connections for crawling; if crawling is slow or server errors occur, the limit goes down.

- To the limit imposed by the owner in Search Console, remembering that setting higher limits does not automatically increase crawl frequency

- To the crawl limits of Google, which as mentioned has considerable, but not unlimited, resources and therefore must prioritize them to optimize their use.

Crawl demand, on the other hand, is related to popularity: URLs that are most popular on the Web tend to be crawled more frequently to keep them “fresher” in Google’s Index. In addition, the search engine’s systems try to prevent staleness, or URLs becoming obsolete in the index.

Generally, the aforementioned guide specifies, Google spends as much time as necessary crawling a site based on site size, update frequency, page quality, and relevance, commensurate with other sites. Therefore, the factors that play a significant role in determining crawl demand are as follows:

- Perceived inventory: In the absence of any indication from the site, Googlebot will try to crawl all or most of the known URLs on the site itself. If it finds a large number of duplicate URLs or pages that do not need to be crawled because they are removed, unimportant, or otherwise, Google may take longer than it should to crawl them. This is the factor we can keep most under control.

- Popularity: the most popular URLs on the Internet tend to be crawled more often to keep them constantly updated in the Index.

- Failure to keep up to date: systems scan documents frequently enough to detect changes.

- Site-level events, such as site migration, can generate increased demand for scanning to re-index content based on new URLs.

In summary, then, Google determines the amount of crawling resources to allocate to each site based on factors such as popularity, user value, uniqueness, and publishing capacity; any URLs that Googlebot crawls are counted for budget purposes, including alternative URLs, such as (the now obsolete) AMP or hreflang, embedded content, such as CSS and JavaScript, and XHR retrievals, which can then consume a site’s crawling budget. Moreover, even if the crawl capacity limit is not reached, Googlebot’s crawl rate may be lower if the site’s crawl demand is low.

The only two ways to increase the crawl budget are to increase the publication capacity for crawls and, most importantly, to increase the value of the site content to searchers.

Why crawl budget is important for SEO

At first glance, the concept of crawl budget may seem like a minor component of SEO. However, when we dive into the technical processes that really determine search engine rankings, we find that managing the crawl budget is more than marginal, because it can have an impact on the organic visibility and indexing of our pages.

When optimized, the crawl budget allows webmasters to prioritize which pages should be crawled and indexed first, in case the crawlers can analyze each path. Conversely, wasting server resources on pages that do not generate results and do not actually produce value produces a negative effect and risks missing out on a site’s quality content.

Simply put, the crawl budget represents the amount of resources Googlebot allocates to crawling a site over time. Each site has its own specific budget, which varies based on criteria such as the size of the site , the quality of the structure , and the popularity of its pages. Having a well-managed budget means making sure that the most important pages, those that generate traffic or conversions, are crawled frequently, while less relevant or outdated pages do not steal space.

The impact of crawl budget on SEO

From the SEO perspective, the crawl budget primarily affects how efficiently Googlebot is able to crawl the site and place pages in its index. It is not a parameter that directly affects the ranking algorithm, but the way we manage the crawl budget affects how much and which of our pages are crawled by Google. Inefficient use of this budget can compromise the identification of content that is important to our audience and, as a result, reduce our search engine visibility.

However, the crawl budget has a direct impact on how Googlebot scans and indexes our site: the number of pages it can see in a given time period is limited, and if we devote too many resources to pages of little value, we risk leaving out those pages crucial to our SEO strategy. For example, new pages on our site may remain unindexed for too long if Google continues to focus its resources on already indexed content or irrelevant URLs.

Or, if the crawler’s resources are wasted on duplicate pages, unoptimized sections, or low-importance URLs, we may see scenarios where key pages for our ranking are not indexed properly, hampering our site and making our efforts in terms of content creation and optimization in vain.

For large websites, such as e-commerce portals or content platforms, managing the SEO crawl budget becomes crucial. A portal with thousands of pages needs a clear priority in terms of crawling, as not all pages have the same weight to achieve strategic goals. Focusing only on quantitative page growth can lead to wasted resources, if the site is not structured to direct crawls to the URLs that really matter.

A strategic lever to make the site more efficient

The crawl budget serves precisely to avoid this: it allows us to make sure that Google devotes attention to the right pages, the ones that bring qualified traffic and generate results.

Another aspect related to the importance of the crawl budget from an SEO perspective is the overall efficiency of the site. Having a lean, properly structured site with good crawling impact ensures that Google can quickly understand which pages to visit and prioritize.

Cluttered structures, orphan or hard-to-access pages not only slow down crawling, but also complicate the discovery of that content that interests us most. An optimized site, on the other hand, facilitates the work of crawlers, favoring pages with greater value to users and those that are commercially strategic.

Using the crawl budget as a strategic lever for SEO is a key step in optimizing potential organic traffic. Our focus should be on maximizing the value of each resource, making sure that Google focuses its crawls on pages that can bring concrete results.

The goal is not just to get Googlebot to visit our site frequently, but to get it to spend its resources on the most useful pages. This can be reflected in rapid indexing of critical content, improving the user experience and, consequently, the organic ranking of our site.

Which sites should worry about crawl budget?

Not all websites need to worry about the crawl budget, and it is Google’s guidance that specifically emphasizes this point.

There are three categories of sites in particular that should be concerned about the crawl budget (the figures given are only a rough estimate and are not meant to be exact thresholds):

- Large sites (over one million unique pages) with content that changes with some frequency (once a week).

- Medium to large sites (over 10,000 unique pages) with content that changes very frequently (every day).

- Sites with a substantial portion of total URLs classified by Search Console as Detected, but not currently indexed.

It should be easy to see why for those who manage very large digital projects, such as large news portals, eCommerce sites with thousands of product pages, or blogs with a huge amount of content, it is critical to devote attention to this parameter in order to prevent valuable resources from being spent unnecessarily.

Indeed, large sites have a complexity that makes them more vulnerable to scanning issues. For example, it can happen that crawlers spend a lot of time on irrelevant or poorly trafficked pages, reducing the ability to crawl the really strategic pages for that site. The crawl budget of a site like a large eCommerce should be devoted first and foremost to those pages that convert, new product sheets or offers, which need to be continuously updated. In fact, it is not at all uncommon for low-quality or duplicate pages to waste crawl budget inefficiently, taking resources away from the better performing sections.

The type of site structure can also greatly influence the distribution of the crawl budget. In a portal or magazine, for example, prioritizing crawling by articulating recent articles is essential to ensure that submissions to readers remain relevant and fresh. If, on the other hand, we let crawlers stably scan old or out-of-date pages, we risk compromising results.

By managing the server budget more carefully, we can incentivize Google to spend more time crawling the sections that really matter, improving the performance of our structure and, ultimately, SEO results in the long run. This is why those who manage complex sites, such as editorial portals or online stores, need to be concerned about SEO crawl budget optimization to prevent dedicated crawler resources from being wasted on the wrong sections of the site or on low-quality pages.

Crawl budget optimization, what it means

Crawl budget optimization means making sure that Googlebot devotes most of its resources to the most important pages of the site, helping to improve both the overall ranking and the indexing speed of key content. There are various techniques and strategies we can implement to ensure that crawl resources are not wasted on irrelevant or low-quality pages.

On a general level, one of the methods to increase crawl budget is definitely to increase site trust. As we know well, among Google’s ranking factors is precisely the authority of the site, which also takes into account the weight of links: if a site is linked to it means that it is “recommended” and “popular,” and consequently the search engine interprets these links precisely as a recommendation and gives consideration to that content.

Also affecting the crawl budget is how often the site is updated, that is, how much new content is created and how often. Basically, if Google comes to a website and discovers new pages every day what it will do is reduce the crawl time of the website itself. A concrete example: usually, a news site that publishes 30 articles a day will have a shorter crawl time than a blog that publishes one article a day.

More generally, one of the most immediate ways to optimize the crawl budget is to limit the amount of low-value URLs on a Web site, which can as mentioned take valuable time and resources away from crawling a site’s most important pages.

Low-value pages include those with duplicate content, soft 404 error pages , faceted navigation and session identifiers, and then again pages compromised by hacking, infinite spaces and proxies and of course low-quality content and spam. So a first job that can be done is to check for these problems on the site, including checking crawl error reports in Search Console and minimizing server errors.

Interventions to optimize the crawl budget

On the practical front, one of the first interventions to increase the crawl budget that Google itself provides us with concerns managing the speed of the website, and in particular that of the server’s response in serving the requested page to Googlebot.

This means working on the robots.txt file, which allows us to tell Googlebot which pages can be crawled and which cannot, thus giving us direct control over the distribution of the crawl budget: by blocking the crawl of irrelevant pages (such as duplicate URLs, login or test pages), we can immediately reduce the load of the crawlers and direct them to the sections that really need attention. Intelligent use of the robots.txt file is one of the fundamentals of crawl optimization.

Another technical element to consider is the exploitation of internal link building: strategically using internal links between the different sections of the site helps to distribute the crawl budget evenly and to give Google guidance on which paths to follow. Pages with greater importance should be easily reachable, and linking pages together correctly improves crawl effectiveness.

Reducing the number of duplicate or low-quality pages is another must-do move: duplicate content or pages with low value not only steal resources from crawlers, but can also hurt overall site performance-which is why it is also important to better manage the “noindex ” tag for effective indexing. Optimizing the site hierarchy is also essential: a well-organized structure that allows search engines to quickly locate key pages makes crawling easier and ensures that resources are allocated correctly.

A final caveat concerns the management of redirects: if well managed, redirects can improve crawling performance by directing crawlers to new versions of pages, without wasting resources on failed crawl attempts. However, too many poorly managed redirects can slow down the process and create bottlenecks. Using Google Search Console to monitor redirects and improve performance according to the guidelines is a good practice to follow continuously to manage site crawling effectively and functionally.

How to improve crawling efficiency, Google’s advice

Google’s official document also provides us with a quick guide to optimizing some aspects of the site that may affect the crawling budget-emphasizing the premise that this aspect should “worry” only some specific types of sites, although obviously all other sites can benefit and optimization insights from these interventions as well.

Specifically, the tips are:

- Manage your inventory of URLs, using the appropriate tools to tell Google which pages should or should not be crawled. If Google spends too much time crawling URLs that are not suitable for indexing, Googlebot may decide that it is not worth examining the rest of the site (let alone increasing the budget to do so). Specifically, we can:

- Merge duplicate content, to focus crawling on unique content instead of unique URLs.

- Block URLcrawling using robots.txt. file, so as to reduce the likelihood of indexing URLs that we do not wish to be displayed in Search results, but which refer to pages that are important to users, and therefore to be kept intact. An example are continuously scrolling pages that duplicate information on related pages or differently ordered versions of the same page.

In this regard, the guide urges not to use the noindex tag, as Google will still perform its request, although it will then drop the page as soon as it detects a meta tag or a noindex header in the HTTP response, wasting scanning time. Likewise, we should not use the robots.txt file to temporarily reallocate some crawl budget for other pages, but only to block pages or resources that we do not want crawled. Google will not transfer the newly available crawl budget to other pages unless it is already reaching the site’s publishing limit.

- Returning a 404 or 410 status code for removed pages Google will not remove a known URL, but a 404 status code clearly signals to avoid rescanning that given URL; blocked URLs will continue to remain in the crawl queue, although they will only be rescanned with the removal of the block.

- Eliminate soft 404 errors . Soft 404 pages will continue to be crawled and negatively impact the budget.

- Keep the sitemaps up to date, including all content that we want crawled. If the site includes updated content, we must include the

tag. - Avoid long redirect chains, which have a negative effect on crawling.

- Check that pages load efficiently: if Google can load and display pages faster, it may be able to read more content on the site.

- Monitor site crawling , checking to see if the site has experienced availability problems during crawling and looking for ways to make it more efficient.

Regarding the latter, Google also summarizes what the main steps to monitor site crawling are, namely:

- Check if Googlebot is experiencing availability problems on the site.

- Check if there are pages that should be crawled and are not.

- Check whether scanning of parts of the site should be faster than it currently is.

- Improve the efficiency of site scanning.

- Handle cases of excessive site scanning.

How to improve the efficiency of site scanning

The guide then goes into valuable detail about trying to manage the scanning budget efficiently.

In particular, there are a number of interventions that allow us to increase the loading speed of the page and the resources on it.

As mentioned, Google’s crawling is limited by factors such as bandwidth, time, and availability of Googlebot instances: the faster the site response, the more pages are likely to be crawled. Fundamentally, however, Google would only want to crawl (or at any rate prioritize) high-quality content, and so speeding up the loading of low-quality pages does not induce Googlebot to extend crawling of the site and increase the crawling budget, which might happen instead if we let Google know that it can detect high-quality content.

A crucial aspect is monitoring crawling through tools such as the Crawl Statistics report in Google Search Console or analyzing server logs to check whether Googlebot has crawled certain URLs. Constant monitoring of crawl history becomes essential, especially if Googlebot is not detecting some important pages on our site. In those cases, it might be useful to analyze the alerts in the logs or in the “Crawl Statistics” report to see if the problems are related to server availability (e.g., an overload) that limits Googlebot’s capabilities. If the server is overloaded, it is advisable to temporarily increase resources, analyzing over the course of a few weeks whether this produces an increase in scan requests from Google.

Translated into practical terms, we can optimize pages and resources for crawling in this way:

- Prevent Googlebot from loading large but unimportant resources using the robots.txt file, blocking only non-critical resources, i.e., resources that are not important for understanding the meaning of the page (such as images for decorative purposes).

- Ensure that pages load quickly.

- Avoid long redirect chains, which have a negative effect on crawling.

- Check the response time to server requests and the time it takes to render pages, including the loading and execution time of embedded resources, such as images and scripts, which are all important, paying attention to voluminous or slow resources needed for indexing.

Again from a technical perspective, a straightforward tip is to specify content changes with HTTP status codes, because Google generally supports If-Modified-Since and If-None-Match HTTP request headers for crawling . Google’s crawlers do not send headers with all crawl attempts, but it depends on the use case of the request: when crawlers send the If-Modified-Since header, the value of the header is equivalent to the date and time of the last content crawl, based on which the server might choose to return an HTTP 304 (Not Modified) status code without a response body, prompting Google to reuse the version of the content that was last crawled. If the content is after the date specified by the crawler in the If-Modified-Since header , the server may return an HTTP 200 (OK) status code with the response body. Regardless of the request headers, if the contents have not changed since the last time Googlebot visited the URL, however, we can send an HTTP 304 (Not Modified) status code and no response body for any Googlebot request: this will save server processing time and resources, which may indirectly improve crawling efficiency.

Another practical action is to hide URLs that we do not wish to be displayed in search results: wasting server resources on unnecessary pages can compromise the crawling activity of pages that are important to us, leading to a significant delay in detecting new or updated content on a site. While it is true that blocking or hiding pages that are already crawled will not transfer crawl budget to another part of the site, unless Google is already reaching the site’s publishing limits, we can still work on reducing resources, and in particular avoid showing Google a large number of site URLs that we do not want crawled by Search: typically, these URLs fall into the following categories:

- Facet browsing and session identifiers: facet browsing typically pulls duplicate content from the site, and session identifiers, as well as other URL parameters, sort or filter the page but do not provide new content.

- Duplicate content, generating unnecessary crawling.

- Soft 404 pages.

- Compromised pages, to be searched and corrected through the Security Issues report .

- Endless spaces and proxies.

- Poor quality and spam content.

- Shopping cart pages, continuous scrolling pages, and pages that perform an action (such as pages with invitation to register or purchase).

The robots.txt file is our useful tool when we do not want Google to crawl a resource or page, blocking only those elements that are not needed for a long period of time or should not appear in search results. Also, if the same resource (e.g., an image or a shared JavaScript file) is reused on multiple pages, it is advisable to reference the resource using the same URL on each page so that Google can store and reuse that resource without having to request it multiple times.

On the other hand, practices such as assiduously adding or removing pages or directories from the robots.txt file to reallocate crawl budget for the site, and alternating between sitemaps or using other temporary hiding mechanisms to reallocate budget are not recommended. It is also important to know that indexing of new pages does not happen immediately: it will typically take up to several days before Googlebot detects new content, except for sites where rapid crawling is essential such as news sites. By constantly updating sitemaps with the latest URLs and regularly monitoring server availability , you can ensure that there are no bottlenecks that slow down crawling or indexing of important content.

How to reduce Google crawl requests

If the problem is exactly the opposite-and thus we are facing a case of excessive site crawling-we have some actions to follow to avoid overloading the site, remembering however that Googlebot has algorithms that prevent it from overloading the site with crawl requests. Diagnosis of this situation should be done by monitoring the server to verify that indeed the site is in trouble due to excessive requests from Googlebot, while management involves:

- Temporarily return HTTP 503 or 429 response status codes for Googlebot requests when your server is overloaded. Googlebot will try again to crawl these URLs for about 2 days, but returning “no availability” type codes for more than a few days will result in slowing down or permanently stopping crawling URLs on the site (and removing them from the index), so we need to proceed with the other steps

- Reduce the Googlebot crawl frequency for the site. This can take up to two days to take effect and requires permission from the user who owns the property in Search Console, and should only be done if in the Host Availability > Host Usage graph of the Crawl Statistics report we notice that Google has been performing an excessive number of repeated crawls for some time.

- If scan frequency decreases, stop returning HTTP response status codes 503 or 429 for scan requests; if you return 503 or 429 for more than 2 days.

- Perform scan and host capacity monitoring over time and, if necessary, increase the scan frequency again or enable the default scan frequency.

- If one of AdsBot’s crawlers is causing the problem, it is possible that we have created dynamic search network ad targets for the site and Google is attempting to scan it. This scan is repeated every two weeks. If the server capacity is not sufficient to handle these scans, we may limit the ad targets or request an increase in posting capacity.

Doubts and false myths: Google clarifies questions about crawl budget

The ever-increasing relevance of the topic has led, almost inevitably, to the emergence and proliferation of a great many false myths about the crawl budget, some of which are also listed in Google’s official guide: for example, among the definitely false news are that

- We can control Googlebot with the “crawl-delay” rule (actually, Googlebot does not process the non-standard “crawl-delay” rule in the robots.txt file).

- Alternate URLs and embedded content do not counttoward the crawl budget (any URL that Googlebot crawls is counted toward the budget).

- Google prefers clean URLs with no search parameters (it can actually crawl parameters).

- Google prefers old content, which carries more weight, over new content (if the page is useful, it stays that way regardless of the age of the content).

- With its QDF algorithm, Google prefers and rewards the most up-to-date content possible, so it pays to keep making small changes to the page (content should be updated as needed, and it does no good to make revamped pages appear by only making trivial changes and updating the date).

- Small sites are not crawled as frequently as large sites (on the contrary: if a site has important content that undergoes frequent changes, we often crawl it, regardless of size).

- Compression of sitemaps can increase the crawl budget (even if placed in .zipper files, sitemaps still need to be retrieved on the server, so Google does not save much in terms of time or effort when we send compressed sitemaps).

- Pages that show HTTP 4xx status codes waste crawling budget (apart from error 429, other pages do not waste crawling budget-Google tried to crawl the page, but received a status code and no other content).

- Crawling is a ranking factor (not so).

How to estimate a site’s crawl budget

Estimating a website’s crawl budget is a key exercise in understanding how Googlebot interacts with our pages and which sections of the site are taking up the most crawl resources.

The most effective tool for obtaining a crawl budget estimate is undoubtedly the oft-mentioned Google Search Console, which provides a number of reports dedicated to crawler behavior. In Google Search Console, crawl reports allow us to determine the number of URLs crawled, frequency, and any issues encountered when crawling specific pages.

Using these reports gives us a clear view of the amount of pages actually crawled, thus offering insights into how to improve the management of our site. The “Coverage” section of Google Search Console, in particular, gives us insights into how many pages on our site have been crawled and how many URLs have been excluded from crawling or are even in the “detected but currently not indexed” status. This data is valuable for identifying any deficiencies or inefficiencies in the crawling process and for taking corrective action, such as manually requesting a rectification with the “recrawl google” command.

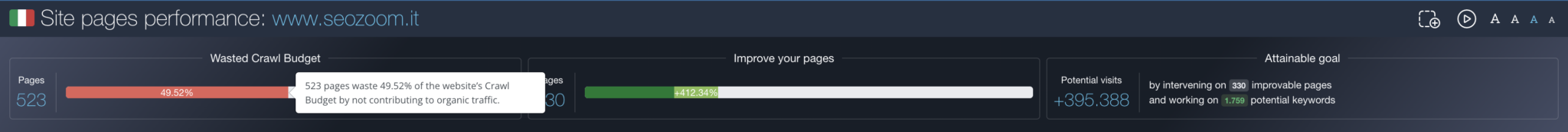

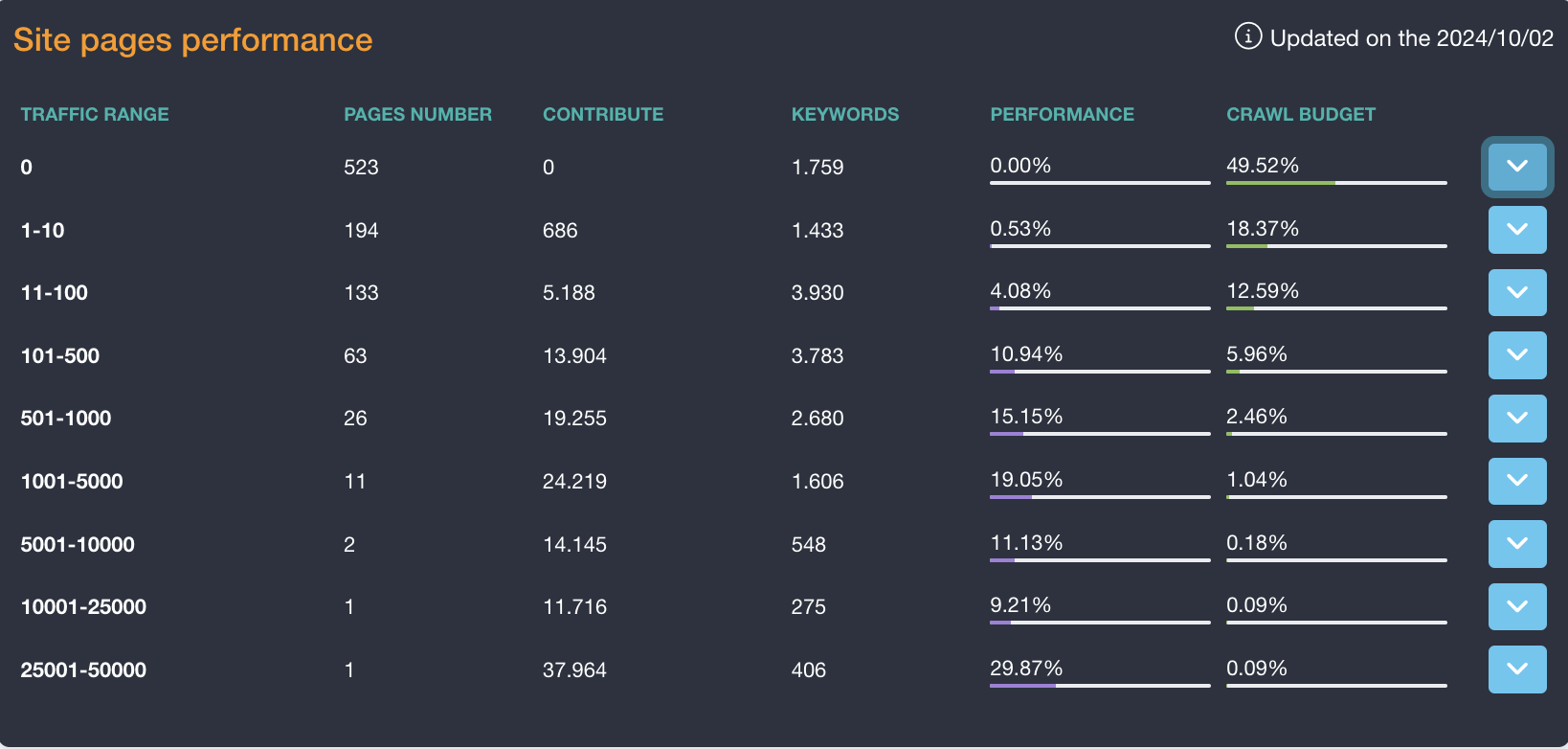

Another useful approach to estimate the crawl budget is through the use of advanced SEO tools such as SEOZoom. This tool provides insight into the performance of site pages and highlights which URLs consume the most crawl budget without contributing significantly to organic traffic. By analyzing groups of “high-consuming, low-performing” pages, it is possible to identify sections of the site that steal resources but do not bring value, allowing us to optimize the site’s search engine presence more consciously and strategically, thereby improving our SEO crawling ability.

SEOZoom’s tools for knowing and optimizing the crawl budget.

Delving specifically into the opportunities within our suite, we can take full advantage of SEOZoom ‘s Page Yield section to better manage the crawl budget and improve the efficiency of resources dedicated to crawling the site.

In this area, found within Projects, we can quickly monitor critical areas and get a clear overview of how Google is using time and resources to crawl site pages. SEOZoom provides us with a detailed estimate that highlights which URLs are being crawled most frequently but do not offer a contribution to organic traffic, allowing us to quickly identify pages that waste crawl budget because they do not bring added value in terms of ranking or search volume.

Through the available data, we can then understand which pages are really employing more crawling resources, comparing them to estimated monthly traffic and the number of keywords placed. This type of analysis allows us to intervene strategically, deciding whether to try to optimize pages that are consuming resources unnecessarily or, in some cases, remove them or reduce their importance to free up crawling resources. If a page does not perform well organically, we can easily check whether it is a resource that is in danger of not being crawled because it is not positioned with relevant keywords or if it is simply not visible to users.

Before taking drastic actions, however, it is important to have a complete and contextualized picture of the overall performance of resources: in this regard, it is useful to cross-reference the data with those of tools such as Google Search Console and Google Analytics. This way we can be sure that low traffic does not come from external channels such as social or referrals, and avoid eliminating pages that might be strategic from other points of view.

Another area of SEOZoom that proves extremely useful concerns the ability to identify pages that have high potential for improvement. SEOZoom gives us targeted guidance on which URLs can benefit fromSEO optimization, focusing especially on those pages that already rank well on Google but are not yet reaching the first page of results. Optimizing this content means maximizing both its traffic potential and its impact on the site’s overall crawl budget , because we could turn pages that are already present and crawling into real strengths for the site’s ranking in search results.

In addition, SEOZoom allows us to analyze page performance segmented by traffic groups, highlighting which parts of the site require more resources to crawl than the results they are generating. This gives us the ability to make informed decisions about which portions of the site to rationalize or boost, to ensure that Googlebot is using the time spent crawling productively, focusing on the pages that really attract traffic and interaction.

All of this is possible because of the clear visualization and organization of information in SEOZoom’s Crawl Budget section, which allows us to see how resources are distributed across different page categories and, most importantly, to see if we are wasting resources on unhelpful pages or on duplicate content, soft 404s, or compromised pages. By optimizing these critical areas, we can not only improve crawling, but also speed up search engine response with respect to site updates, promoting better indexing and an overall smoother and more profitable experience for visitors.

SEOZoom, therefore, gives us precise and focused insight into the site’s crawling resource utilization, and allows us to act proactively to optimize not only crawling, but the overall SEO efficiency of the site. With all this information at our disposal, we can easily find a powerful tool to support intelligent management of the crawl budget, which is essential to ensure that Googlebot always focuses on content that offers the most value, leaving aside those that instead consume resources without bringing concrete results.

These tips come in especially handy if we don’t have access to Search Console (e.g., if the site is not ours), because they still offer us a way to tell if the search engine is liking the site, thanks in part to the instant glance provided by Zoom Authority, our native metric that immediately pinpoints how influential and relevant a site is to Google.

The ZA takes into account many criteria, and thus not only pages placed in Google’s top 10 or the number of links obtained, and thus a high value equals an overall liking by the search engine, which rewards that site’s content with frequent visibility-and we can also analyze relevance by topic through the Topical Zoom Authority metric.

Optimizing crawl budget for SEO, best practices

An in-depth article on Search Engine Land by Aleh Barysevich presents a list of tips for optimizing the crawl budget and improving a site’s crawlability, with 8 simple rules to follow for each site:

- Don’t block important pages.

- Stick to HTML when possible, avoiding heavy JavaScript files or other formats.

- Fix redirect chains that are too long.

- Report URL parameters to Googlebot.

- Fix HTTP errors.

- Keep sitemaps up to date.

- Use rel canonical to avoid duplicate content.

- Use hreflang tags to indicate country and language.

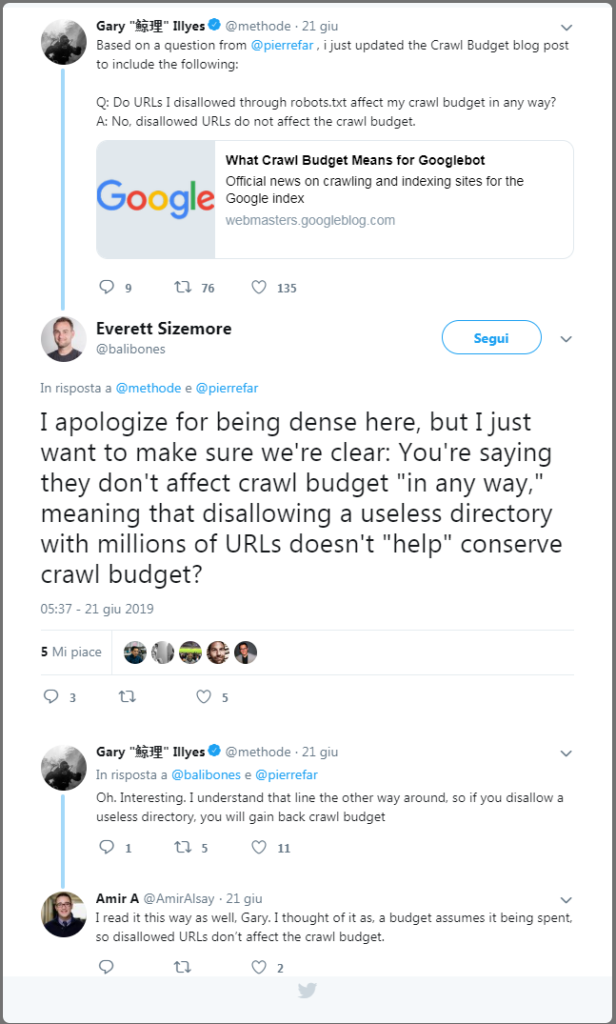

Another technical tip for optimizing a site’s crawl budget comes from Gary Illyes, who explains how setting the disallow on irrelevant URLs allows you to not weigh down the crawl budget, and therefore using the disallow command in the robots file can allow you to better manage Googlebot crawling. Specifically, in a Twitter conversation, the Googler explained that “if you use disallow on a useless directory with millions of URLs you gain crawl budget,” because the bot will spend its time analyzing and crawling more useful resources on the site.

The possible optimization interventions on the site

Delving deeper into the tips described above, we can then define some specific interventions that could help better manage the site’s crawl budget-nothing particularly “new,” because these are some well-known signs of website health.

The first tip is almost trivial, which is to allow crawling of the important pages of the site in the robots.txt file, a simple but decisive step to get the crawled and blocked resources under control. Equally, it is good to take care of the XML sitemap, so as to give the robots an easy and faster way to understand where internal links lead; remember to use only canonical URLs for the sitemap and to always update it to the most recent uploaded version of the robots.txt – while instead there is not much use in compressing the sitemaps, because they have to be retrieved on the server anyway and this does not amount to a noticeable saving in terms of time or effort for Google.

It would then be good to check – or avoid altogether – redirect chains, which force Googlebot to crawl multiple URLs: in the presence of an excessive share of redirects, the search engine crawler could suddenly terminate the crawl without reaching the page it needs to index. If 301s and 302s should be limited, other HTTP status codes are even more harmful: 404s and 410 pages technically consume crawl budget and, what’s more, also harm the site’s user experience. No less annoying are 5xx errors related to the server, which is why it is good to do a periodic analysis and health checkup of the site, perhaps using our SEO spider!

Another thought to be made is about URL parameters, because separate URLs are counted by crawlers as separate pages, and thus inestimably waste part of the budget and also risk raising doubts about duplicate content. In cases of multilingual sites, then, we need to make the best use of the hreflang tag, informing Google as clearly as possible of geolocated versions of pages, either with the header or with the

Finally, a basic choice to improve crawling and simplify Googlebot’s interpretation could be to always prefer HTML to other languages: although Google is learning to handle JavaScript more and more effectively (and there are many techniques for SEO optimization of JavaScript), old HTML still remains the code that gives the most guarantees.

The critical SEO issues of the crawl budget

One of the Googlers who has most often addressed this issue is John Mueller, who, in particular, has also reiterated on Reddit that there is no benchmark for Google’s crawl budget, and that therefore there is no optimal benchmark “number” to strive toward with site interventions.

What we can do, in practical terms, is to try to reduce waste on the “useless” pages of our site-that is, those that do not have keyword rankings or that do not generate visits-in order to optimize the attention Google gives to content that is important to us and that can yield more in terms of traffic.

The absence of a benchmark or an ideal value to which tender makes all the discussion about crawl budget is based on abstractions and theories: what we know for sure is that Google is usually slower to crawl all the pages of a small site that does not update often or does not have much traffic than that of a large site with many daily changes and a significant amount of organic traffic.

The problem lies in quantifying the values of “often” and “a lot,” but more importantly in identifying an unambiguous number for both huge, powerful sites and small blogs; for example, again theoretically, an X value of crawl budget achieved by a major website could be problematic, while for a blog with a few hundred pages and a few hundred visitors per day it could be the maximum level achieved, difficult to improve.

Prioritizing pages relevant to us

Therefore, a serious analysis of this “indexing search budget” should focus on overall site management, trying to improve the frequency of results on important pages (those that convert or attract traffic) using different strategies, rather than trying to optimize the overall frequency of the entire site.

Quick tactics to achieve this are redirects to take Googlebots away from less important pages (blocking them from crawling) and the use of internal links to funnel more relevance on the pages you want to promote (which, ça va sans dire, must provide quality content). If we operate well in this direction – also using SEOZoom’s tools to check which URLs are worth focusing on and concentrating resources on – we could increase the frequency of Googlebot passes on the site, because Google should theoretically see more value in sending traffic to the pages it indexes, updates, and ranks on the site.

The crawl budget considerations

It should be pretty clear that having the crawl budget under control is very important, because it can be a positive indication that Google likes our pages, especially if our site is being crawled every day, several times a day.

According to Illyes – the author back in 2017 of an in-depth discussion on Google’s official blog – however, the crawl budget should not be too worrisome if “new pages tend to be crawled the same day they are published” or “if a site has fewer than a few thousand URLs,” because this generally means that Googlebot crawling is working efficiently.

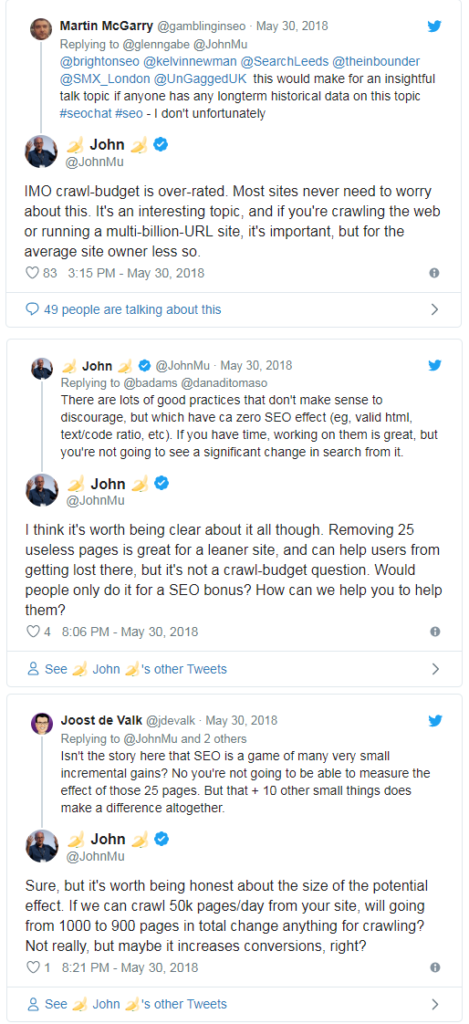

Other public voices at Google have also often urged site owners and webmasters not to be overly concerned about crawl budgets, or rather not to think exclusively about absolute technical aspects when performing onsite optimization efforts. For example, in a Twitter exchange John Mueller advises rather to focus first on the positive effects in terms of user experience and increased conversions that might result from this strategy.

To be precise, Search Advocat argues that there are many best practices for optimizing crawl budget, but they may have little practical effect: for example, removing 25 unnecessary pages is a great way to make sites leaner and prevent users from getting lost while browsing, but it is not something that should be done to improve crawl budget (crawl-budget question) or to hope for concrete ranking feedback.

How does crawl budget and rendering work? Google’s summary

And it is John Mueller himself who gives us some concise, but nonetheless interesting, pointers on crawl budget, techniques to try to optimize its management, and related aspects such as caching or reduction of embedded resources and their impact on site speed for users.

The starting point for this reflection, which is explored in depth in an episode of the #AskGooglebot series, stems from a user’s question via Twitter, asking if “heavy use of WRS can also reduce a site’s crawl budget.”

As usual, the Search Advocate clarifies the definitions of terms and activities, reminding that:

- WRS is the Web Rendering Service, which is the system Googlebot uses to render pages like a browser, so it can index everything the same way users would see it.

- Crawl Budget refers to the system that “we use to limit the number of requests we make to a server so that we don’t cause problems during our crawling.”

How Google manages a site’s crawl budget

Mueller again reiterates that crawl budget is not an issue that should concern all sites, because generally “Google has no problem crawling enough URLs for most sites.” And while there is no specific threshold or benchmark, in linra generally the crawl budget “is an issue that should concern mostly large websites, those with over a hundred thousand URLs.”

In general, Google’s systems can automatically determine the maximum number of requests a server can process in a given time period. This is “done automatically and adjusted over time,” Mueller explains, because “as soon as we see that the server starts to slow down or return server errors, we reduce the crawl budgetavailable to our crawlers.”

Google and rendering

The Googler also dwells on rendering, explaining to sites that search engine services must “be able to access embedded content, such as JavaScript files, CSS, files, images and videos, and server responses from APIs that are used on pages.”

Google makes “extensive use of caching to try to reduce the number of requests needed to render a page, but in most cases rendering results in more than just a request, so more than just an HTML file being sent to the server.”

Reducing embedded resources also helps users

Ultimately, according to Mueller, especially when operating on large sites it can help with crawling to “reduce the number of embedded resources required to render a page.”

This technique also allows us to deliver faster pages for users and thus achieve two important outcomes for our strategy.

Google’s insight on crawl budget.

The crawl budget was also the focus of an appointment with SEO Mythbusting season 2, the series in which Google Developer Advocate Martin Splitt tries to dispel myths and clarify frequent doubts about SEO topics.

Specifically, in the episode that had Alexis Sanders, senior account manager at the marketing agency Merkle, as guest, the focus went to definitions and from crawl budget management tips, a topic that creates quite a few difficulties of understanding for those working in search marketing.

Martin Splitt then began his insight by saying that “when we talk about Google Search, indexing and crawling, we have to make some sort of trade-off: Google wants to crawl the maximum amount of information in the shortest possible time, but without overloading the servers,” that is, finding the crawl limit or crawl rate.

To be precise, the crawl rate is defined as the maximum number of parallel requests that Googlebot can make simultaneously without overloading a server, and so it basically indicates the maximum amount of stress Google can exert on a server without bringing anything to a crash or generating inconvenience for this effort.

However, Google has to be careful only about other people’s resources, but also about its own, because “the Web is huge and we can’t scan everything all the time, but make some distinctions,” Splitt explains.

For example, he continues, “a news site probably changes quite often, and so we probably have to keep up with it with a high frequency: in contrast, a site about the history of kimchi probably won’t change as assiduously, because history doesn’t have the same rapid pace as the news industry.”

This explains what crawl demand is, which is the frequency with which Googlebot crawls a site (or, rather, a type of site) based on its likelihood of being updated. As Splitt says in the video, “we’re trying to figure out if we should crawl more often or if we can check in from time to time instead.”

The process of deciding this factor is based on Google’s identification of the site on the first crawl, when it basically “fingerprints” its content by seeing the topic of the page (which will also be used for deduplication, at a later stage) and also analyzing the date of the last change.

The site has the ability to communicate the dates to Googlebot-for example, through structured data or other time elements on the page-which “more or less tracks the frequency and type of changes: if we detect that the frequency of changes is very low, then we will not scan the site particularly frequently.”

Scanning frequency is not about content quality

Very interesting is the Googler’s next point: this crawl rate has nothing to do with quality, he says. “You can have great content that ranks great, that never changes,” because that the question here is ”whether Google needs to crawl the site frequently or whether it can leave it quiet for a period of time.”

To help Google answer this question correctly, webmasters have various tools at their disposal that give “hints”: in addition to the already mentioned structured data, one can use ETag or HTTP headers, which are useful for reporting the date of the last change in sitemaps. However, it is important that the updates are useful: “If you only update the date in the sitemap and we find that there is no real change on the site, or that they are minimal changes, you are of no help” in identifying the likely frequency of changes.

When is the crawl budget a problem?

According to Splitt, crawl budget is still an issue that should primarily concern or worry huge sites, “say with millions of urls and pages,” unless you “have a bad and unreliable server.” But in that case, “more than the crawl budget you should focus on server optimization,” he continues.

Usually, according to the Googler, people talk about crawl budgets out of hand, when there is no real related problem; for him, crawl budget issues happen when a site notices that Google discovers but does not crawl pages it cares about for an extensive period of time and those pages have no problems or errors whatsoever.

In most cases, however, Google decides to crawl but not index the pages because they are “not worth it due to the poor quality of the content present.” Typically, these urls are marked as “excluded” in Google Search Console’s Index Coverage Report, the video clarifies.

Crawl frequency is not an indicator of quality

Having a site crawled very frequently is not necessarily a help to Google because crawl frequency is not a signal of quality, Splitt further explains, because it is OK for the search engine even if “having something crawled, indexed, and that doesn’t change anymore” and doesn’t require any more bot passes.

Some more targeted advice comes for e-Commerce: if there are lots of small, very similar pages with related content, one should think about their usefulness by first asking whether their existence makes sense. Or, consider extending the content to make it better? For example, if they are just products that vary in one small feature, they could be grouped on one page with descriptive text that includes all possible variations (instead of having 10 small pages for each possibility).

Google’s burden on servers

The crawl budget relates to a number of issues, then: among those mentioned are precisely duplicate or search pages, but server speed is also a sensitive issue. If the site relies on a server that crashes from time to time , Google may have difficulty understanding whether this happens because of poor server characteristics or because of an overload of its requests.

On the subject of server resource management, then, Splitt also explains how bot activity (and thus what you see in the log files) works in the initial stages of Google discovery or when, for example, a server migration takes place : initially, an increase in crawling activity, followed by a slight reduction, which continues, thus creating a wave. Often, however, the server switch does not require a new rediscovery by Google (unless we switch from something broken to something that works!) and so the crawling bot activity remains as stable as before the switch.

Crawl budget and migration, Google’s advice

Rather tricky is also the management of Googlebot crawling activity during overall site migrations: the advice coming from the video to those in the midst of these situations is to progressively update the sitemap and report to Google what is changing, so as to inform the search engine that there have been useful changes to follow up and verify.

This strategy gives webmasters a little control over how Google discovers changes in the course of a migration, although in principle it is also possible to simply wait for the operation to complete.

What is important is to ensure (and make sure) that both servers are functioning and working smoothly, without momentary sudden collapses or error status queues; it is also important to set up redirects properly and verify that there are no relevant resources blocked on the new site through a robots.txt file that has not been properly updated to the new site post-migration.

How the crawl budget works

A question from Alexis Sanders brings us back to the central theme of the appointment and allows Martin Splitt to explicate how the crawl budget works and on which level of the site it intervenes: usually, Google operates on the site level, so it considers everything it finds on the same domain. Subdomains may be crawled sometimes, while in other cases they are excluded, while for CDNs “there is nothing to worry about.”

Another practical tip that comes from the Googler concerns the management of user-generated content, or more generally anxieties about the quantity of pages on a site: “You can tell us not to index or not to crawl content that is of low quality,” Splitt reminds us, and so for him crawl budget optimization is “something that is more about the content side than the technical infrastructure aspect,” (an approach that is in line with that of our tools!).

Do not waste Google’s time and resources

Ultimately, working on the crawl budget also means not wasting Google’s time and resources, including through a number of very technical solutions such as cache management.

In particular, Splitt explains that Google “tries to be as aggressive as possible when it’s caching sub-resources, like CSS, JavaScript, API calls, all those kinds of things.” If a site has “API calls that aren’t GET requests, then we can’t cache them, and so you have to be very careful about making POST requests or the like, which some APIs do by default,” because Google can’t “cache them and that will consume the site’s crawl budget more quickly.”