Technical SEO analysis: how the SEOZoom spider works

What is a spider

What is a spider for

Technical SEO analysis and on-page optimization are fundamental to any strategy that aims to be effective. While SEOZoom's main tools let you address the weaknesses of your content or identify the most useful keywords for your project, the new SEO Spider gives you an overview of the site's health: a more detailed and in-depth check than the one previously available through the SEO audit, which helps you identify the problems that need to be solved one by one.

SEOZoom Spider’s characteristics

In short, SpiderMax lets you perform a complete scan of a site (any site, even one not included among your projects) just as Google's crawler would, and shows all the parameters invisible to the naked eye that describe the domain's structural characteristics: errors and warnings, flaws to fix, problems of varying severity, and anomalies and obstacles that can compromise the site's performance. You do, however, need to be able to interpret the data in order to make the right corrections.
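To make the idea of scanning a site "just as Google's crawler would" more concrete, here is a minimal breadth-first crawl sketch in Python. It is not how SEOZoom's spider is implemented: it assumes a hypothetical `fetch(url)` callable that returns a status code and HTML, and uses an in-memory sample site (`SITE`, `fake_fetch`, also hypothetical) so it runs offline. It follows only links on the same host and records each URL's response code and click depth from the start page.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href attribute of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch):
    """Breadth-first crawl restricted to the start URL's host.

    `fetch(url)` is a hypothetical callable returning (status_code, html).
    Returns {url: {"status": ..., "depth": ...}}, where depth is the
    number of clicks needed to reach the URL from the start page.
    """
    host = urlparse(start_url).netloc
    results = {}
    seen = {start_url}
    queue = deque([(start_url, 0)])
    while queue:
        url, depth = queue.popleft()
        status, html = fetch(url)
        results[url] = {"status": status, "depth": depth}
        if status != 200:
            continue  # record error pages but do not follow their links
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and strip #fragments before queueing
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append((absolute, depth + 1))
    return results


# Hypothetical in-memory site so the sketch runs offline.
SITE = {
    "https://example.com/": (200, '<a href="/a">A</a> <a href="https://other.org/">Out</a>'),
    "https://example.com/a": (200, '<a href="/b#top">B</a> <a href="/missing">M</a>'),
    "https://example.com/b": (200, "<p>No links here</p>"),
}


def fake_fetch(url):
    return SITE.get(url, (404, ""))


report = crawl("https://example.com/", fake_fetch)
for url, info in report.items():
    print(info["depth"], info["status"], url)
```

Breadth-first order is a natural fit here because it visits pages in order of click depth, which is one simple way to look at how far important pages sit from the home page. A real crawler would also need politeness delays, robots.txt handling, and redirect tracking, none of which this sketch attempts.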

The tool thoroughly analyzes each website, looking for errors in the code and incorrect use of SEO-relevant tags. It identifies technical problems and evaluates each page by combining technical data with data on the page's authority in search engines.

Compared with similar tools, it also has a major advantage: the SEOZoom spider is integrated into the suite and runs entirely on the web, so there is no additional software to buy or install. Websites are scanned by hundreds of our dedicated spiders, which neither slow down your work nor consume your computer's resources.

Learning to read and interpret data

As Ivano Di Biasi wrote in a long Facebook post, an “SEO Spider is something extremely complex, not only for those who create it but also for those who have to interpret its data”, because it forces you to take that inevitable “step forward if you really want to be able to improve your own website”. Having summary tables and a “0 to 100 score that tells you whether things are good or bad” is useful and practical, but SEO “is not just about scores taken out of a software”.

As we said, you need to take that extra step: acquire new knowledge and detect errors in the most hidden places. SpiderMax guides users through this process, not leaving them alone but supporting them in understanding how to intervene on their websites.

Features of the spider

The SEOZoom spider is a complete tool for improving a site: it checks the pages indexed by Google and locates structural errors, so you can fix the problems that hinder Googlebot and waste crawl budget. Thanks to its integration with the suite's other tools, it also provides information on each scanned URL, immediately displaying its number of keywords, its traffic, and other strategic details.

Activities of the spider

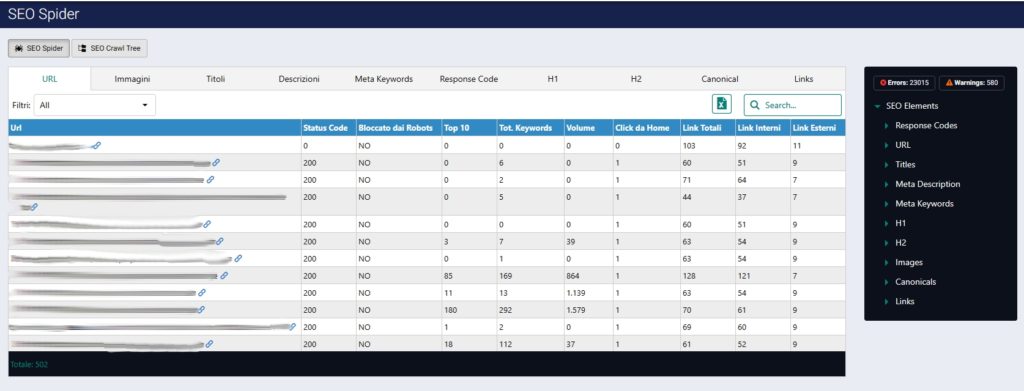

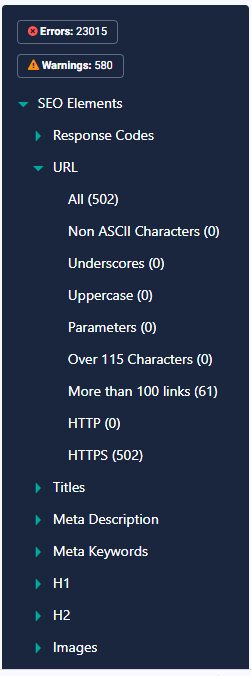

The crawl returns data that help you understand which critical issues may be present and which areas need work for SEO optimization. The main dashboard reports the 10 fields analyzed by the tool, namely URL, Images, Titles, Descriptions, Meta keywords, Response Code, H1, H2, Canonical, and Links, which are the areas the spider focuses on.
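As an illustration of how several of these fields can be read from a single page, the following Python sketch uses the standard-library `html.parser` to collect the title, meta description, meta keywords, canonical, H1 and H2 headings, images without alt text, and link count. The class `OnPageAudit` and the function `warnings` are hypothetical names, and the rules in `warnings` (for example, expecting exactly one H1) are illustrative assumptions, not SEOZoom's actual checks.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Collects some of the on-page fields listed in the dashboard."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.meta_keywords = None
        self.canonical = None
        self.h1 = []
        self.h2 = []
        self.images_without_alt = []
        self.links = 0
        self._capture = None  # tag whose text is currently being collected
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag in ("title", "h1", "h2"):
            self._capture, self._buffer = tag, []
        elif tag == "meta":
            name = (a.get("name") or "").lower()
            if name == "description":
                self.description = a.get("content")
            elif name == "keywords":
                self.meta_keywords = a.get("content")
        elif tag == "link" and (a.get("rel") or "").lower() == "canonical":
            self.canonical = a.get("href")
        elif tag == "img" and not (a.get("alt") or "").strip():
            self.images_without_alt.append(a.get("src"))
        elif tag == "a" and a.get("href"):
            self.links += 1

    def handle_data(self, data):
        if self._capture:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._capture:
            text = "".join(self._buffer).strip()
            if tag == "title":
                self.title = text
            else:
                getattr(self, tag).append(text)  # self.h1 or self.h2
            self._capture = None


def warnings(page):
    """Illustrative rules only; a real tool's thresholds may differ."""
    issues = []
    if not page.title:
        issues.append("missing title")
    if not page.description:
        issues.append("missing meta description")
    if len(page.h1) != 1:
        issues.append(f"{len(page.h1)} H1 tags (expected 1)")
    if len(set(page.h2)) < len(page.h2):
        issues.append("duplicate H2")
    if page.images_without_alt:
        issues.append(f"{len(page.images_without_alt)} image(s) without alt")
    if page.canonical is None:
        issues.append("missing canonical")
    return issues


html = """<html><head><title>Shoes</title>
<meta name="description" content="Buy shoes online">
<link rel="canonical" href="https://example.com/shoes"></head>
<body><h1>Shoes</h1><h2>Sizes</h2><h2>Sizes</h2>
<img src="a.jpg" alt="Red shoe"><img src="b.jpg"><a href="/cart">Cart</a></body></html>"""

page = OnPageAudit()
page.feed(html)
issues = warnings(page)
print(issues)
```

Keeping extraction (`OnPageAudit`) separate from evaluation (`warnings`) mirrors the point made later in this article: the raw data is objective, while deciding what counts as a problem is a judgment call.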

URLs analysis

Correcting and improving site titles and descriptions

A spider to analyze every site page

A focus on visual SEO to optimize the images

Studying the tree structure of the site

SEOZoom’s SEO Spider to check your site

Ultimately, the new SEOZoom tool stands out as an exceptional ally for making your online strategy more effective, one that spares you the need to purchase additional software.

As always, the suite offers users the right “tools” to compete and win on the Web: features to be used carefully to get a precise picture of the site's health and of the technical errors present on it.

Acting on critical issues, correcting problems, and limiting anything that conflicts with Google's guidelines does not guarantee a first-page placement, but it is the right foundation on which to build the success of your project.

One last aspect is worth dwelling on: a good site should certainly keep Spider alerts to a minimum, but there is no need to aim for absolute zero. The most correct (and also most practical) approach starts from the assumption that Alerts and Errors are technical findings produced by the tool's automatic settings, so human analysis of the individual sections is needed to unearth real errors and fix them, coupled with a broad and informed view of the technical aspects.

Put simply, not every item is a “problem”, and items do not all carry equal weight. For example, a crucial page that has been inadvertently blocked via robots directives is definitely an error, while a missing alt text or a duplicate H2 is a minor item that should not affect ranking (or at least not in such a direct and clear-cut way) and therefore does not require immediate, priority intervention.
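The difference in weight between these items can be sketched in Python with the standard-library `urllib.robotparser`, which checks whether a robots.txt file disallows a given URL for a user agent. Note that robots.txt controls crawling; a `noindex` directive on the page itself would need a separate check. The `SEVERITY` weights, the sample robots.txt, and the function names below are hypothetical and exist only for this sketch; they do not reflect how SEOZoom scores its alerts.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical severity weights for this sketch only; not SEOZoom's scoring.
SEVERITY = {
    "blocked_by_robots": 3,  # a key page that crawlers are told to skip
    "missing_alt": 1,
    "duplicate_h2": 1,
}


def robots_blocked(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that robots.txt disallows for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


def triage(findings):
    """Sort (issue, url) findings so the heaviest issues come first."""
    return sorted(findings, key=lambda f: SEVERITY.get(f[0], 0), reverse=True)


# Sample robots.txt that accidentally blocks the whole /products/ section.
robots_txt = """User-agent: *
Disallow: /checkout/
Disallow: /products/
"""
key_pages = ["https://example.com/", "https://example.com/products/shoes"]

findings = [
    ("missing_alt", "https://example.com/"),
    ("duplicate_h2", "https://example.com/blog/post"),
]
findings += [("blocked_by_robots", u) for u in robots_blocked(robots_txt, key_pages)]

for issue, url in triage(findings):
    print(SEVERITY[issue], issue, url)
```

Because Python's `sorted` is stable, findings with the same weight keep their original order, so the blocked product page rises to the top while the two minor items stay below it in the order they were found.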