Site structure and SEO: 10 ways in which organization influences visibility

We have described site structure as a key to a project's success, and in fact a clean, orderly organization lets Google, and especially users, understand what each page is about and how pages relate and connect to each other. So let's look at the structure of the site and at 10 ways in which it can influence SEO and help remove the invisible obstacles that stand in the way of search engines trying to view and crawl the domain.

The value of site structure

Today, being successful on search engines means going beyond simply “having a clean and polished design”, as Maddy Osman reminds us on Search Engine Journal. In her view, we need to “find a balance between an aesthetically attractive website for customers and a structure designed for the visibility of search engines”, because without a website structure that is organized and makes logical sense, “other SEO efforts, content and even design might be useless”.

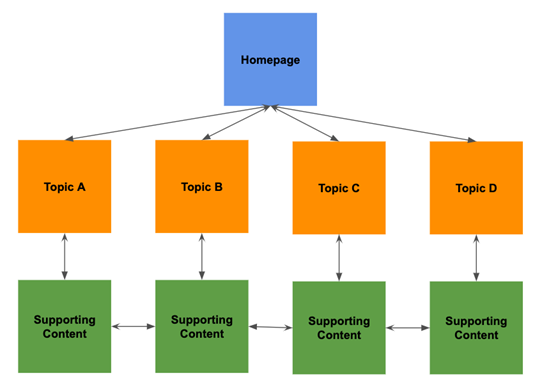

The conventional and most popular structure is usually flat, but many sites also rely on a hierarchical architecture, which is useful for telling Google right away which taxonomy is used to categorize the elements across the entire domain.

How the site structure influences the SEO

A good site structure can have a significant impact on organic traffic and conversions, and it can also help the entire organization interact better with users and create an experience in line with business goals, the products and the structure of the services.

Here are the 10 aspects to take into account when considering the relationship between the structure of our site and its SEO.

- Crawlability, the ease of crawling the site

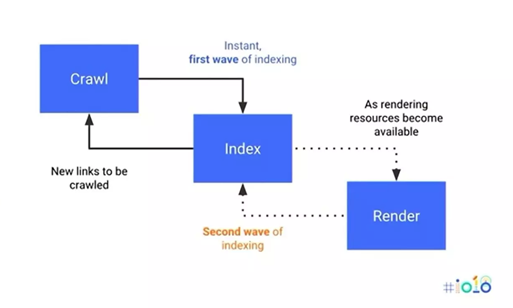

The first thing the structure affects is the crawlability of the site, namely how easily it can be crawled by search engine crawlers, which work through the entire text content of the domain to understand what the pages are about.

Part of this process is navigating through subpages and particular topics to understand the site as a whole. For a page to be considered crawlable, it “must take a visitor somewhere else within the site, from one page to another”, and the site should have no orphan pages.

An important principle for a good crawl is that there are no dead ends where search engine robots get stuck, so we must make an effort to include internal links on each page, creating a bridge from one side of the domain to the other.

In concrete terms, we can use breadcrumbs and structured data, always keeping an eye on Google's crawl budget to understand whether the time the search engine dedicates to us is being wasted on empty or non-performing pages.
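As a rough illustration of these two checks, the sketch below takes a hypothetical internal link map (each page mapped to the pages it links to) and reports orphan pages, which no other page links to, and dead ends, which link to no other page. The `site` data and the function name are assumptions made for the example; a real audit would build the map from a crawl.

```python
def find_orphans_and_dead_ends(links, home="/"):
    """links maps each page URL to the list of internal URLs it links to."""
    # Collect every page that appears as a source or as a link target.
    pages = set(links)
    for targets in links.values():
        pages.update(targets)

    # Pages that receive at least one link from a different page.
    linked_to = {t for src, targets in links.items() for t in targets if t != src}

    # Orphans: never linked from another page (the home page is the entry point).
    orphans = sorted(p for p in pages if p not in linked_to and p != home)
    # Dead ends: pages with no outgoing link to a different page.
    dead_ends = sorted(
        p for p in pages if not [t for t in links.get(p, []) if t != p]
    )
    return orphans, dead_ends


# Hypothetical link map, for illustration only.
site = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/", "/blog/site-structure/"],
    "/blog/site-structure/": [],
    "/products/": ["/"],
    "/old-landing/": ["/"],
}

orphans, dead_ends = find_orphans_and_dead_ends(site)
print("Orphan pages:", orphans)
print("Dead ends:", dead_ends)
```

In this example `/old-landing/` is an orphan and `/blog/site-structure/` is a dead end; adding one internal link in each direction would fix both.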
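To make the breadcrumb suggestion more concrete, here is a minimal sketch that builds `BreadcrumbList` structured data in JSON-LD, following the schema.org vocabulary. The page names and `example.com` URLs are placeholders, and `breadcrumb_jsonld` is a hypothetical helper, not part of any library.

```python
import json


def breadcrumb_jsonld(trail):
    """trail is an ordered list of (name, url) pairs, from the home page down."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            # Positions are 1-based and follow the order of the trail.
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }


# Placeholder trail, for illustration only.
trail = [
    ("Home", "https://example.com/"),
    ("Blog", "https://example.com/blog/"),
    ("Site structure", "https://example.com/blog/site-structure/"),
]

markup = breadcrumb_jsonld(trail)
print(json.dumps(markup, indent=2))
```

The printed JSON would typically be placed in a `<script type="application/ld+json">` tag in the page's HTML.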

- URL structure

The way we structure URLs also influences SEO, because URLs are building blocks of an effective site hierarchy: they pass equity through the domain and direct users to the desired destinations.

The best URL structures should be content-rich, meaning “easy to read by a user and search engine and contain target queries”. It is also important to keep URLs simple and not to complicate them with too many parameters.

One suggestion for an effective structure is to replicate the same logic throughout the website (without changing the URL structure unless it is unavoidable) and to submit an XML sitemap to search engines with the most important URLs we want to rank for.
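As a sketch of what such a sitemap looks like, the snippet below generates a minimal XML sitemap with Python's standard library, using the namespace defined by the sitemaps.org protocol. The URLs are placeholders and `build_sitemap` is a hypothetical helper; optional tags such as `<lastmod>` are left out for brevity.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap listing the given absolute URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    # Prepend the XML declaration by hand so the output is predictable.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs: only the pages we most want search engines to find.
important_urls = [
    "https://example.com/",
    "https://example.com/blog/site-structure/",
]

sitemap_xml = build_sitemap(important_urls)
print(sitemap_xml)
```

The resulting file is usually saved as `sitemap.xml` at the root of the site and then submitted through the search engine's webmaster tools.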

- Security protocols

Having a secure site can help improve rankings, especially from May, when the Page Experience Update becomes fully operational: the update also takes into account the adoption of the HTTPS protocol to ensure the security of the site and of user data.

The slight ranking boost (as John Mueller described it) is just one of the reasons why almost 70% of websites worldwide already use HTTPS, as confirmed by the latest data from W3Techs. The transition to the protocol also offers other benefits, such as:

- Better experience for the user.

- Protection of user information.

- AMP implementation, only feasible with HTTPS.

- Effectiveness of PPC campaigns.

- Improved data in Google Analytics.

Moreover, the article reminds us, Googlebot has been crawling sites over HTTP/2 since last November.

- Internal linking

The basics of proper navigation require that users can switch from one page to another without any difficulty: if we have a large site with many pages, the challenge is to make these pages accessible with a few clicks, using navigation and pagination management techniques.

Usability experts suggest that you should only need three clicks to find a certain page, but this advice is a guideline and not a rule.

Internal linking helps users and search engines discover a page and provides a flow between content and pages. The SEO benefits of using internal links deliberately are numerous:

- They allow search engines to find other pages via anchor text, which can include relevant keywords.

- They reduce the depth of the page.

- They offer users an easier way to access other content, ensuring a better experience.

- They can be used by search engines to give more value to pages in the SERPs.

One tip is not just to link to old pages from new content, but also to close the loop by adding internal links to new articles in old content.
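Page depth can be estimated with a breadth-first search over the internal link map, counting the minimum number of clicks needed to reach each page from the home page. The sketch below assumes a hypothetical `site` map and shows how a single extra internal link changes the depth of a buried article.

```python
from collections import deque


def click_depths(links, home="/"):
    """Return the minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical link map: the old article is only reachable through pagination.
site = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/page/2/"],
    "/blog/page/2/": ["/blog/old-article/"],
    "/blog/old-article/": [],
    "/products/": [],
}

before = click_depths(site)["/blog/old-article/"]

# Link to the old article from the home page and measure again.
site["/"].append("/blog/old-article/")
after = click_depths(site)["/blog/old-article/"]

print(f"Clicks from home: {before} before, {after} after")
```

Pages that never appear in the result are unreachable from the home page through internal links, which is another way to spot orphan pages.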

- Key content at the centre

Keyword research and content research are key parts of SEO and should be an essential part of how we build and structure the site from the start.

This ensures that an understanding of the target audience, search behaviour and competitive topics is built into the structure and layout of the domain. Ideally, the main and most crucial content of the site should sit at the centre of the structure, and we should also work to ensure that it is of the highest quality.

- Duplicate content

Duplicate content is harmful to SEO since Google can interpret it as spam.

The Google Search Console “is a useful tool to find and delete duplicate content on the website” and, as a general rule, you should avoid “posting duplicate content on your website or someone else’s”.
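Alongside Search Console, a quick local check can flag pages whose text is identical once whitespace and letter case are normalized. The sketch below is one simple way to do this, by hashing the normalized text; it is not how Google detects duplicates, it only catches exact copies, and the `pages` data is made up for the example.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text):
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """pages maps URL -> extracted body text. Returns groups of duplicate URLs."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Made-up page texts, for illustration only.
pages = {
    "/shoes/": "Red running shoes.",
    "/shoes/?sort=price": "Red  running\nshoes.",
    "/about/": "About us",
}

duplicates = find_duplicates(pages)
print(duplicates)
```

Here the parameterized URL duplicates the main category page, which is a common case where a canonical URL helps.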

- User navigation and experience

The usability standards that today's sites aim for are much stricter “compared to the first days of the Internet”: a visitor who comes across a badly built site will avoid interacting with it any further, because no one wants to waste time on a site where it is unclear what to do or how to proceed.

A user who fails to find the information they are looking for (and gets no help in the search) will very likely turn to a competitor's site. This also sends a bad-experience signal about our site that damages SEO, because it indicates to Google that the page might not be the most relevant or useful for that query. Conversely, statistics that indicate a positive experience validate and strengthen the results in the SERPs.

Osman reminds us, in fact, that the way users interact with a site, through signals such as click-through rate, time spent on the site and bounce rate, is interpreted by Google for future search results.

The basics to provide a good user experience include:

- Align click-through to expectations.

- Make the desired information easy to find.

- Ensure that navigation makes sense and is intuitive.

The practical suggestion is to run several tests with unbiased visitors to determine how usable the site really is and how real users interact with the pages.

- Core Web Vitals

Developers, designers and SEOs should work together to meet the Core Web Vitals thresholds. These metrics measure visual stability, interactivity and loading times to ensure a high-quality user experience.

To date, less than 15% of sites meet the benchmark standards, so this is a critical aspect to work on to improve performance before the algorithm update.
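As an illustration, the sketch below rates a set of measurements against the thresholds Google published for the three original metrics: Largest Contentful Paint (loading), First Input Delay (interactivity) and Cumulative Layout Shift (visual stability). The sample values are invented and assumed to be 75th-percentile field data; note that Google has since replaced First Input Delay with Interaction to Next Paint, so the interactivity entry would need updating for current audits.

```python
# (good up to, needs improvement up to); anything above the second value is poor.
THRESHOLDS = {
    "lcp_seconds": (2.5, 4.0),  # Largest Contentful Paint
    "fid_ms": (100, 300),       # First Input Delay
    "cls": (0.1, 0.25),         # Cumulative Layout Shift (unitless)
}


def rate(metric, value):
    """Classify a single measurement as good, needs improvement or poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def passes_core_web_vitals(measurements):
    """A page passes only if every metric is rated good."""
    return all(rate(metric, value) == "good" for metric, value in measurements.items())


# Invented sample values, for illustration only.
page = {"lcp_seconds": 3.1, "fid_ms": 80, "cls": 0.05}

for metric, value in page.items():
    print(metric, value, rate(metric, value))
print("Passes:", passes_core_web_vitals(page))
```

In this example the page fails because of loading time alone, which shows why all three areas need attention at once.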

- Taking care of mobile navigation

Ensuring that the site is adequate and structured for mobile users from different devices is not only a matter of design, but an essential part of the creation of the project.

The amount of work required may depend on developer resources, IT skills and business models, especially if the site is an e-commerce site running on an old, slow platform.

- Speed and performance

If the construction and structure of the site do not encourage a fast user experience, “SEO and budget results will be in a dive”: slow loading, unresponsive pages and anything that “takes time” for the user damage the work done by developers, content creators and SEO professionals.

A one-second delay in page load time can mean fewer page views, less traffic and a significant drop in conversions, as well as a dissatisfied user, the author summarizes. For this reason, it is important that everyone responsible for the project stays in contact “to choose the right mobile design and website structure, such as responsive web design, and identify all the pros and cons to avoid high costs and costly errors”.

The SEO success of a site also depends on structure

In conclusion, according to Maddy Osman, achieving good SEO results requires that “a website is structured in the most appropriate and hierarchical format for your users and your business”, without neglecting readability for search engines.

For this reason, it is important to work with the design team at the time of creation, not afterwards, and to plan future content from the earliest stages of the project's conceptualization. The goal is to align the architecture and design of the site with the SEO efforts, which is the way to achieve better results and success in the SERPs.