Dynamic rendering: what it is, how it works, limitations and alternatives

As useful as it is problematic: JavaScript is at the heart of web development today and is often considered an indispensable technology for building modern, dynamic and feature-rich sites. However, what simplifies and enriches the user experience can prove tricky for search engines: frameworks such as Angular, React or Vue.js generate client-side content that Googlebot and other crawlers struggle to crawl and index properly. To overcome this obstacle, dynamic rendering has been an essential intermediate solution for years, capable of translating complex content into search-engine-readable versions. As web technologies have evolved, however, dynamic rendering has also revealed its limitations, so much so that Google itself discourages the practice, suggesting alternatives such as server-side rendering, static rendering and hydration. In this guide, we will look at why dynamic rendering has been a milestone for technical SEO, what challenges it poses today, and which solutions are the most sustainable for ensuring proper indexing and excellent performance.

What is dynamic rendering

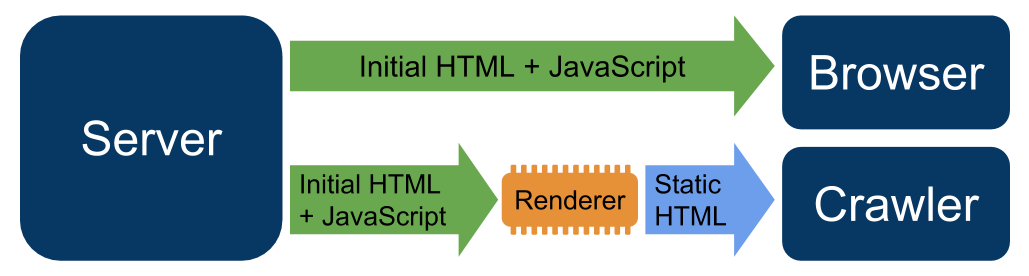

Dynamic rendering is a technique used to serve a different version of a web page depending on the type of visitor: while normal users are shown a page generated via client-side JavaScript, search engine bots are provided with a static HTML version, which is easier to crawl and index. This distinction is what gives this rendering technique the term “dynamic,” since the content of the page changes dynamically based on the identity of the requesting user agent.

In other words, the server recognizes whether the visitor is a browser or a crawler and, based on this identification, decides to serve the version best suited to the requester: an interactive, feature-rich site for end users, and an optimized, JavaScript-free page for search engines. This flexibility in the response allows sites to keep accessible the content that crawlers would otherwise fail to interpret correctly.

Dynamic rendering emerged as a solution to handle the technical difficulties that arose with the increased use of modern JavaScript frameworks, such as React, Angular and Vue.js, which generate client-side content that is not immediately visible to search engines. This approach was an immediate and effective answer to crawling and indexing limitations, but today it is considered a temporary workaround that even Google no longer recommends.

Dynamic rendering: definition and purposes

Dynamic rendering was created to solve one of the main obstacles posed by sites built with JavaScript: the difficulty crawlers have in viewing and interpreting content generated through client-side code. Unlike a human user, who views the page correctly because the scripts run in their browser, search engine bots often see an empty or incomplete version of a site, with drastic consequences for indexing and organic ranking.

In fact, it is generally argued that JavaScript rendering is user-friendly, but not bot-friendly, especially when crawlers are faced with large Web sites, because there are still limitations to processing JavaScript on a large scale while minimizing the number of HTTP requests.

Thus, the cornerstone of dynamic rendering is the ability to differentiate the content served based on the visitor. To do this, the server analyzes the user agent included in the received HTTP request and, based on its “identity,” decides which version to display:

- If the visitor is a real user on a browser, the page is generated normally by client-side rendering, taking advantage of JavaScript technologies to deliver interactive and dynamic content.

- If the visitor is a crawler (such as Googlebot or Bingbot), the page is pre-rendered server-side and then served as static HTML, devoid of JavaScript to ensure faster and more complete crawling and indexing.

In practice, dynamic rendering acts as an “interpreter” between sites developed with modern frameworks and search engines. This technique has been particularly popular because it overcomes the limitations of crawl budget (resources used by search engines to crawl a site) and render budget (resources to render a page), improving the indexing ability of pages with complex JavaScript.

One of the main areas of application of dynamic rendering is large sites or sites with frequently updated content, such as e-commerce platforms, news portals and digital catalogs. With this technique, it is possible to correctly transfer all content to Google and other search engines, ensuring that no essential data is lost along the crawling process.

The history of dynamic rendering: when it was introduced and why

The official introduction of dynamic rendering dates back to 2018, when Google presented this technique during the Google I/O conference. At that time, search engines were still showing significant difficulties in efficiently processing JavaScript-generated content, especially in situations with high technical complexity or on large sites.

The problem was twofold: on the one hand, not all crawlers were able to execute the JavaScript needed to display the content; on the other hand, the rendering process involved long times and high costs in terms of computational resources. In addition, search engines had to deal with the problem of deferred indexing: many pages were queued for slower rendering, with the risk that some would remain partially invisible for days or even weeks.

In response to these issues, Google promoted dynamic rendering as a temporary and pragmatic solution. Google’s John Mueller called the technique “the principle of sending normal client-side rendered content to users and sending fully server-side rendered content to search engines” and explained that, in this way, users would continue to receive client-side rendered pages, while search engines would get a static HTML version, ready for immediate crawling. This approach was considered a major advance in addressing the growing gap between the needs of modern sites and the capabilities of crawlers.

Despite the initial positive reception, dynamic rendering came with a specific caveat: it was a compromise solution and not a practice destined to last into the future. The evolution of search engines and the advent of technologies such as server-side rendering (SSR) and static rendering would soon make this technique obsolete, solving the root problems without the need to handle different versions of the same pages.

How dynamic rendering works

As described, dynamic rendering is based on a fundamental principle: serving different content based on the type of visitor, distinguishing between crawlers and human users. This distinction is made by analyzing the user agent, a key part of the HTTP request sent to the server, which serves as an “identifier.”

The goal of dynamic rendering is precisely to adapt the content to the specific needs of the recipient: end users will receive the JavaScript version of the page with full interactivity, while search engines will get a static HTML version, simplified for easier crawling and indexing. So let’s delve into the technical architecture and specific mechanisms that make this process possible.

The technical process of dynamic rendering

The operation of dynamic rendering consists of several technical steps, which enable the server to serve the appropriate version of the content. These are the main steps:

- User agent identification

Whenever a browser or crawler requests a Web page, the server examines the user agent present in the HTTP request. The user agent is a text string that identifies the software used by the visitor (browser, crawler, or other) and allows the server to discern whether it is a human user or a bot.

- If the request comes from a known crawler such as Googlebot, the server routes it to a pre-rendering service.

- If, on the other hand, the requestor is a browser, the page is served normally including the JavaScript intended for client-side rendering.

- HTML static version generation

When a crawler is detected, the server leverages a pre-rendering tool to generate a fully rendered HTML version of the page. This is done through software such as Rendertron, which executes the JavaScript of the page just as a browser would, but returns an optimized HTML file devoid of dynamic code. The result is a page ready to be easily read by crawlers.

- Serving the appropriate version

Once the static version is generated, the server returns it to the requesting search engine. In parallel, users continue to receive the client-side generated version with all the necessary interactive features. In this way, content is made available to both human visitors and search engines without significant compromise.

This process ensures that content is dynamically but precisely managed to fit the specific needs of those receiving it, improving indexing of pages with complex JavaScript while preserving the user experience.
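The three steps above can be sketched as a single request handler. This is a minimal illustration in JavaScript, not the API of Rendertron or any other tool: `BOT_PATTERN`, `isCrawler`, `handleRequest` and the `prerender` callback are hypothetical names, and the renderer is stubbed so that the example runs on its own.

```javascript
// Hypothetical crawler pattern; a real deployment would maintain a fuller list.
const BOT_PATTERN = /googlebot|bingbot/i;

// Step 1: identify the visitor from the User-Agent header.
function isCrawler(userAgent) {
  return BOT_PATTERN.test(userAgent || "");
}

// Steps 2 and 3: generate static HTML for crawlers, or serve the
// client-side app shell to browsers. `prerender` stands in for a
// headless-browser service and is an assumption of this sketch.
function handleRequest(request, prerender) {
  if (isCrawler(request.userAgent)) {
    return { version: "prerendered", body: prerender(request.url) };
  }
  return {
    version: "client",
    body: '<div id="app"></div><script src="/app.js"></script>',
  };
}

// Stub renderer used only for demonstration.
const fakePrerender = (url) => `<h1>Static HTML for ${url}</h1>`;

const botResponse = handleRequest(
  {
    url: "/products",
    userAgent: "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
  },
  fakePrerender
);
const userResponse = handleRequest(
  {
    url: "/products",
    userAgent: "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0",
  },
  fakePrerender
);

console.log(botResponse.version, userResponse.version); // prerendered client
```

Passing the renderer in as a function keeps the routing decision separate from the rendering tool, so the same handler could sit in front of whichever pre-rendering service is chosen.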

How to correctly implement dynamic rendering: the step-by-step guide

Implementing dynamic rendering can pose a significant technical challenge, especially for those who approach this solution with limited experience. Indeed, proper configuration is essential to ensure that content served to search engine bots is consistent, complete, and ready for effective indexing, while avoiding errors that could penalize the site.

In fact, to properly configure dynamic rendering, it is necessary to follow a series of operational steps that cover both the preparation of the server and the integration of specific tools for generating HTML versions to be served to crawlers.

The first fundamental step is the configuration of a middleware, that is, a component that acts as an intermediary between the requests received by the server and the generation of static HTML content. One of the most popular and widely used tools for this function is Rendertron, a dynamic renderer based on headless Chromium and designed to create pre-rendered static copies of pages. Rendertron can be integrated with common web servers, such as Apache or Nginx, through the appropriate middleware. Once installed, the middleware will perform “filtering” of incoming requests, distinguishing between crawlers and users based on the user agent of the HTTP request. This step is essential to ensure that the bots always get the static HTML version.

Another critical element of the implementation is the creation of an HTML cache to reduce loads on the server. When bots request large amounts of pages in a short time, processing each on-demand request could cause significant slowdowns or even lead to server timeout. To avoid this overload, it is advisable to integrate a central cache to store pre-rendered versions of pages. In this way, static content will be immediately available for subsequent requests, speeding up response times and significantly improving operational efficiency.
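As a rough illustration of the caching idea, the sketch below stores pre-rendered HTML in memory and re-renders a page only when its entry has expired. The class name `PrerenderCache`, the `ttlMs` parameter and the injectable `now` clock are assumptions made for this example; a production setup would more likely rely on a shared store or the cache built into the pre-rendering tool.

```javascript
// Minimal in-memory cache for pre-rendered HTML (illustrative only).
// `ttlMs` and the injectable `now` clock are assumptions of this sketch.
class PrerenderCache {
  constructor(ttlMs, now = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
    this.entries = new Map(); // url -> { html, storedAt }
  }

  // Return cached HTML if it is still fresh, otherwise render and store it.
  getOrRender(url, render) {
    const cached = this.entries.get(url);
    if (cached && this.now() - cached.storedAt < this.ttlMs) {
      return cached.html;
    }
    const html = render(url);
    this.entries.set(url, { html, storedAt: this.now() });
    return html;
  }
}

// Demonstration with a fake clock and a counter instead of a real renderer.
let currentTime = 0;
let renderCount = 0;
const cache = new PrerenderCache(60000, () => currentTime);
const render = (url) => {
  renderCount += 1;
  return `<html><body>Snapshot of ${url}</body></html>`;
};

cache.getOrRender("/catalog", render); // first request: rendered
cache.getOrRender("/catalog", render); // second request: served from cache
currentTime = 61000;                   // the entry is now older than the TTL
cache.getOrRender("/catalog", render); // rendered again

console.log(renderCount); // 2 (three requests, two renders)
```

The expiry time is the main trade-off: a longer value lowers server load, but crawlers may receive a snapshot that no longer matches the live page.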

Finally, precise mapping of user agents is essential, so as to properly distinguish legitimate crawlers from browsers used by human visitors. Each user agent must be identified and registered in a whitelist that includes the major search engine and social media bots, such as Googlebot, Bingbot and LinkedInBot. This mapping can be defined through middleware such as Rendertron’s or manually configured based on user agent strings.
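A manually configured mapping could look something like the sketch below. The list and the names `CRAWLER_WHITELIST` and `identifyCrawler` are illustrative assumptions, not a configuration taken from Rendertron; the patterns simply look for each bot's token in the user agent string.

```javascript
// Illustrative whitelist: crawler name -> pattern matched against the
// User-Agent header. The list is an assumption; extend it as needed.
const CRAWLER_WHITELIST = [
  { name: "Googlebot", pattern: /googlebot/i },
  { name: "Bingbot", pattern: /bingbot/i },
  { name: "LinkedInBot", pattern: /linkedinbot/i },
];

// Return the name of the matching crawler, or null for ordinary browsers.
function identifyCrawler(userAgent) {
  const match = CRAWLER_WHITELIST.find(({ pattern }) =>
    pattern.test(userAgent || "")
  );
  return match ? match.name : null;
}

console.log(
  identifyCrawler("Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)")
); // Bingbot
console.log(
  identifyCrawler("Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) Safari/605.1.15")
); // null
```

Returning the crawler's name rather than a simple true or false makes it easier to log which bots are actually receiving pre-rendered pages.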

A typical configuration might involve custom rule-based request control that establishes the difference between crawlers and real users. For instance:

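    // Illustrative pattern; extend it to match your own whitelist
    const botUserAgents = /googlebot|bingbot|linkedinbot/i;

    if (botUserAgents.test(userAgent)) {
      servePreRenderedContent();    // static HTML for crawlers
    } else {
      serveClientRenderedContent(); // JavaScript app for browsers
    }

With this logic, the server dynamically routes requests, ensuring that each type of visitor receives the correct version of the page, optimized for their needs.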

Verification of configurations

Once the technical implementation is complete, the work cannot be said to be finished without a rigorous verification phase, to make sure that dynamic rendering works properly and that search engine bots actually receive the optimized content. For this, Google provides a number of key tools.

The first tool to use is the Rich Results Test, accessible through Google Search Console. This test allows you to check whether the structured data on the page is displayed correctly in the HTML version served to the crawlers. Data such as JSON-LD markup, breadcrumbs and metadata are particularly sensitive and must be carefully analyzed to catch any errors or omissions in the pre-rendering.

Another essential tool is the URL Inspection tool, also available in Search Console. This tool allows you to explore in detail how Googlebot sees and interprets a specific page on your site. Using it, you can verify that the content provided in the static HTML version matches what the user would see in the client-side rendered version. In addition, the tool's view of the crawled page (the successor to the older “Fetch as Google” feature) makes it possible to compare the version of the page served to users with the one sent to the bots, identifying any discrepancies.

In addition to Google’s tools, third-party tools can be adopted to test dynamic rendering performance, such as webpagetest.org, which allows you to simulate requests from different user agents and analyze server response time. However, it is always essential to focus on the official tools provided by Google, as they reflect the views of the leading search engine used globally.

If, during verification, problems such as incomplete content, missing metadata or high loading times emerge, immediate action should be taken. Some of the most common problems can be solved by reducing the number of dynamic resources required by the server or by further optimizing the pre-rendering middleware.

A thorough verification process not only ensures that dynamic rendering works as intended, but also serves to prevent possible SEO penalties resulting from technical errors or differences between content served to users and content submitted to search engines.
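Alongside Google's tools, a simple automated check can catch the most obvious pre-rendering omissions before they reach production. The sketch below is only an illustration: the function name `auditSnapshot` and its three rules are assumptions chosen for this example, not criteria published by Google, and the regular expressions are deliberately simplistic.

```javascript
// Checks a pre-rendered HTML snapshot for elements crawlers rely on.
// The rules below are illustrative assumptions, not Google's criteria.
function auditSnapshot(html) {
  const problems = [];
  if (!/<title>\s*[^<\s][^<]*<\/title>/i.test(html)) {
    problems.push("missing or empty <title>");
  }
  if (!/<meta\s+name=["']description["']/i.test(html)) {
    problems.push("missing meta description");
  }
  if (!/<script\s+type=["']application\/ld\+json["']/i.test(html)) {
    problems.push("missing JSON-LD structured data");
  }
  return problems;
}

// A snapshot that contains everything the audit looks for.
const completeSnapshot = `
<html><head>
  <title>Red running shoes</title>
  <meta name="description" content="Lightweight running shoes.">
  <script type="application/ld+json">{"@type":"Product","name":"Red running shoes"}</script>
</head><body><h1>Red running shoes</h1></body></html>`;

// A snapshot where pre-rendering failed and only the app shell was captured.
const brokenSnapshot =
  '<html><head><title></title></head><body><div id="root"></div></body></html>';

console.log(auditSnapshot(completeSnapshot).length); // 0
console.log(auditSnapshot(brokenSnapshot).length);   // 3
```

A check like this complements, rather than replaces, the Search Console tools, since only those show what Googlebot actually received.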

The tools for implementing dynamic rendering

To ensure the proper functioning of dynamic rendering, it is then essential to use tools that allow generating and serving static HTML versions from JavaScript content. These tools, called dynamic renderers, are designed to improve the efficiency of the process and facilitate the integration of the technique into existing workflows.

Some of the most popular software include:

- Rendertron

Rendertron is an open source solution developed by Google, based on headless Chromium. It converts client-side rendered pages into static HTML versions ready for crawling. It is often used in conjunction with middleware that automatically identifies crawlers and forwards their requests to it.

- Strengths: great compatibility with different technology stacks and accessible configuration.

- When to use it: ideal for large sites or projects with limited budgets that need a free solution.

- Puppeteer

Puppeteer is a Node.js library for controlling headless Chromium, designed to automate complex tasks such as dynamic rendering and testing of JavaScript-generated content. Compared to Rendertron, it offers greater customization and flexibility, making it particularly suitable for sites with complex architectures that require special configurations.

- Strengths: customization capabilities and advanced automation.

- When to use it: perfect for development teams with high technical skills that need a highly adaptable solution.

- prerender.io

prerender.io is a cloud service that allows you to implement dynamic rendering without requiring complicated configurations or direct IT team involvement. It is ideal for small or medium-sized sites that want a plug-and-play solution.

- Strengths: ease of configuration and speed of implementation.

- When to use it: suitable for small-scale projects with limited technical resources available.

The limitations of dynamic rendering and Google’s position

Although dynamic rendering has been an innovative solution in the past, today Google explicitly calls it an outdated technology and no longer recommended in the long term. This position is the result of evolving rendering technologies and the increased ability of crawlers to process JavaScript, which make dynamic rendering less necessary and more problematic than modern alternatives.

Google clarified its position on dynamic rendering back in 2022, updating its official documentation to state unequivocally that dynamic rendering is a workaround and not a long-term solution. Although this technique has played an important role in facilitating the indexing of JavaScript-heavy sites, its structural limitations make it less competitive with current technological alternatives, such as server-side rendering (SSR) or static rendering.

Google’s main reasons for discouraging its use involve three basic issues.

- Technical complexity and duplication of resources

Implementing dynamic rendering requires managing two separate versions of the website, one for users (client-side rendered) and one for search engines (server-side pre-rendered). This duplication generates complexity in configuration, maintenance and development. Any changes applied to the site must be replicated across both versions, increasing the risk of inconsistencies in content and URLs served. The infrastructure required to manage such a complex workflow also requires highly skilled technical teams, high resources, longer implementation times and incremental costs, making it less sustainable for many organizations.

- Increased load on the server and network

Dynamic rendering introduces an additional level of processing that can put pressure on the server, especially on large sites or sites with particularly high traffic. When a crawler sends thousands of requests to the site, the server must quickly generate static HTML versions through tools such as Rendertron or Puppeteer, causing an overhead that can slow down the overall site response. This effect not only compromises the efficiency of the dynamic rendering itself, but can also harm the experience of human users, who may find themselves browsing a less responsive site.

- Superior and more efficient alternatives

The evolution of rendering technologies has made dynamic rendering an obsolete and, in the long run, inefficient solution. Today, tools such as server-side rendering or static rendering can provide a fully rendered page to both users and search engines, eliminating the need to distinguish between different versions of content and improving the overall efficiency of technical SEO.

For example:

- Server-side rendering (SSR) dynamically generates a complete server-side page, serving it to both types of visitors, without duplicating content.

- Static rendering creates pre-rendered versions of pages during the site build phase, reducing server-side load and improving loading speed.
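The difference from dynamic rendering can be made concrete with a small sketch in which a single rendering function produces the HTML for every visitor. The names `renderProductPage`, `handleSsrRequest` and `buildStaticSite`, as well as the product data, are hypothetical; HTML escaping is omitted for brevity.

```javascript
// One rendering function produces the full HTML for every visitor.
// `renderProductPage` and the product data are hypothetical examples.
// Note: no HTML escaping is applied here, to keep the sketch short.
function renderProductPage(product) {
  return (
    `<html><head><title>${product.name}</title></head>` +
    `<body><h1>${product.name}</h1><p>Price: ${product.price}</p></body></html>`
  );
}

// Server-side rendering: render on every request, ignoring the user agent.
function handleSsrRequest(request, loadProduct) {
  return renderProductPage(loadProduct(request.path));
}

// Static rendering: render every page once at build time and keep the
// output (here in a Map; in practice, files on a server or CDN).
function buildStaticSite(products) {
  const pages = new Map();
  for (const [path, product] of Object.entries(products)) {
    pages.set(path, renderProductPage(product));
  }
  return pages;
}

const products = { "/shoes": { name: "Running shoes", price: "59 EUR" } };
const loadProduct = (path) => products[path];

const forBrowser = handleSsrRequest({ path: "/shoes", userAgent: "Chrome" }, loadProduct);
const forCrawler = handleSsrRequest({ path: "/shoes", userAgent: "Googlebot" }, loadProduct);
const staticPages = buildStaticSite(products);

console.log(forBrowser === forCrawler);                // true
console.log(staticPages.get("/shoes") === forBrowser); // true
```

Because the user agent never influences the output, there is no second version of the page to keep in sync.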

Dynamic rendering: why it is obsolete and its most common issues

In addition to the structural reasons presented by Google, dynamic rendering is plagued by a number of technical issues that make it complex to implement and maintain correctly and that contribute to its obsolescence. These are the problems that most frequently affect this technique.

Configuration errors

One of the most common problems in implementing dynamic rendering is the possibility of misconfiguring pre-rendering processes or the distinction between user agents. Incorrect configuration can lead to:

- Incomplete or missing content: pages served to crawlers may be empty or partially rendered due to suboptimal compatibility between the pre-rendering tools and the site.

- Content misalignment: user and crawler versions may differ, causing SEO ambiguity and duplication risks.

Solving these problems requires significant time and resources, especially on complex or dynamic sites.

High response times

Processing the static HTML version requires significant computational resources, especially for sites with large volumes of traffic. Pre-rendering tools, such as Puppeteer or Rendertron, can slow down the process when they have to run heavy JavaScript or handle large numbers of simultaneous requests. These high response times can have several negative impacts:

- Reduced crawl rate: due to slow page generation, crawlers may reduce the frequency and number of pages crawled.

- Incomplete indexing: some pages may not be indexed in a timely manner, compromising the SEO visibility of the site.

In these cases, using a cache for pre-rendered pages can alleviate the problem, but introduces additional technical complexities.
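A back-of-the-envelope estimate shows why caching matters under crawl load. All figures below are hypothetical and chosen only to make the arithmetic easy to follow; `estimateRenderSeconds` is a name invented for this sketch, and real render times depend on the site and the hardware.

```javascript
// Rough estimate of total rendering time for a burst of crawler requests.
// All inputs are hypothetical; real figures depend on the site and hardware.
function estimateRenderSeconds({ requests, secondsPerRender, workers, cacheHitRatio }) {
  // Only requests that miss the cache need a fresh headless-browser render.
  const uncachedRequests = requests * (1 - cacheHitRatio);
  // Renders are assumed to be spread evenly across parallel workers.
  return (uncachedRequests * secondsPerRender) / workers;
}

const withoutCache = estimateRenderSeconds({
  requests: 10000,
  secondsPerRender: 2,
  workers: 4,
  cacheHitRatio: 0,
});
const withCache = estimateRenderSeconds({
  requests: 10000,
  secondsPerRender: 2,
  workers: 4,
  cacheHitRatio: 0.75,
});

console.log(withoutCache); // 5000 seconds, roughly 83 minutes
console.log(withCache);    // 1250 seconds, roughly 21 minutes
```

Under these assumptions, a cache that answers three out of four requests cuts rendering time to a quarter, which is why it is usually worth the extra complexity.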

Problems with structured data and advanced components

Sites that use advanced JavaScript components, such as Shadow DOM, may experience specific difficulties in the pre-rendering process. Tools such as Rendertron do not always fully support these elements, which can result in the generation of inconsistent or incomplete HTML versions.

Another limitation concerns structured data, such as JSON-LD tags, which may not be interpreted correctly by renderers. This negatively affects visibility in Google rich snippets and other advanced SERP features.

For all this, dynamic rendering can no longer be considered an optimal solution to address JavaScript-related indexing problems. Technical complexity, implementation and maintenance costs, and the availability of more advanced alternatives make it progressively less relevant.

When dynamic rendering is still useful

Although dynamic rendering has been recognized by Google as a transitional and outdated solution compared to more advanced alternatives, there are still specific situations in which this technique can be a viable option. In particular, dynamic rendering finds application as a temporary solution in contexts where time, budget, or technical complexity hinder the immediate implementation of more modern technologies, such as server-side or static rendering.

In short, there are cases where this technique can still be an emergency tool, when the main goal is to solve indexing problems in a timely manner without disrupting the entire site infrastructure.

A relevant example concerns large sites, such as e-commerce platforms, where content changes frequently with continuous updates related to products, prices, or availability. In these situations, any delay in indexing can lead to a loss of visibility and a direct impact on revenue. Dynamic rendering, in this case, addresses the immediate need to make content readily readable by crawlers, regardless of the complex architecture of the site or the time required to implement more modern and scalable solutions.

Another frequent application context is web pages that use JavaScript structures that are particularly complex or incompatible with the processing capabilities of crawlers. Modern frameworks such as Angular or React, which allow the creation of highly interactive interfaces, may present difficulties in crawling, with the risk that some of the content may not even be seen by search engines. Through dynamic rendering, problematic content can be transformed into ready-to-scan static HTML versions, ensuring that the full information value of the page is received.

Finally, dynamic rendering can be useful in contexts where an organization’s technical or operational resources are limited. Not all teams have the specific skills to implement solutions such as server-side rendering; likewise, budget constraints or tight timelines may prevent in-depth structural interventions. In these situations, dynamic rendering is a viable compromise, allowing critical issues to be circumvented in the short term and indexing to be improved until more advanced and sustainable solutions can be adopted.

In all these scenarios, however, it is important to keep in mind that dynamic rendering does not eliminate the underlying JavaScript-related problems, but merely buffers the immediate difficulties. For this reason, it should be considered an emergency intervention, to be applied with awareness of its limitations and as part of a clear plan to migrate to better performing technical approaches in the long term.

Difference between temporary solution and long-term strategy

Dynamic rendering, as we have seen, is primarily a temporary workaround, designed to handle emergencies related to JavaScript content indexing. However, it should be made clear that it cannot be considered a viable strategy for long-term projects, especially in an ever-changing technological landscape. Its nature as a “bridge” solution stems precisely from the inability of this technique to solve fundamental problems related to the very structure of client-side rendered content, limiting itself to offering a basic level of search engine compatibility. This inherent limitation makes it inadequate to address the growing needs in SEO and UX.

From an operational point of view, dynamic rendering introduces technical and managerial stress, as it requires maintaining two separate versions of each piece of content: one for human users and one for crawlers. Not only does this type of approach increase the risk of inconsistencies and synchronization errors between the two versions, such as differences in content or problems in URLs, but it also imposes dual management, which results in additional burden on the development team and rising costs on the business side. These issues are particularly critical for organizations aiming for overall process optimization, as dynamic rendering ends up becoming a drag on project scalability.

In contrast, more modern rendering strategies, such as server-side rendering or static rendering, provide significantly superior solutions from both technical and strategic perspectives. Indeed, these techniques eliminate the problem of duplicate versions, providing a single, fully rendered page that is identical for users and crawlers. This approach ensures greater content consistency, reduces operational costs associated with duplication, and greatly improves site response times, with clear benefits in terms of both user experience and SEO.

Another decisive aspect in the difference between dynamic rendering and long-term solutions lies in their ability to adapt to future standards. While dynamic rendering represents a legacy of the past, born to fill a technical gap, techniques such as server-side and static rendering are designed to be scalable, high-performance, and compatible with ongoing technological advances. For example, SSR not only ensures that all content is immediately visible to search engines, but also offers improved loading speed for users by fully processing the page on the server. Similarly, static rendering allows pre-rendered pages to be created during the build phase and then distributed over the network via CDN (Content Delivery Network), ensuring extremely fast loading times and reducing the load on the servers.

It is essential, therefore, that dynamic rendering be adopted with the understanding that it is not an end-all solution, but rather a temporary means of addressing contingent constraints while planning a migration to more robust technology approaches. The transition from dynamic rendering to modern strategies should be part of a comprehensive vision, taking into account not only SEO needs but also the overall improvement of site performance and user experience. This transition requires initial investment in technical resources, but brings tangible benefits in the long run, reducing operational complexity and providing greater resilience to technological evolutions.

What future for dynamic rendering? Alternative solutions and general assessments

The limitations of dynamic rendering, as well as the increasing effort required to implement and maintain it, have prompted both Google and SEO professionals to move toward more advanced and sustainable technical solutions. In recent years, methods such as server-side rendering, static rendering, and hydration have become increasingly relevant, offering a way to definitively solve JavaScript-related crawling and indexing problems. Unlike dynamic rendering, these technologies eliminate many of the difficulties associated with dual page management, simplifying both the technical architecture and operational aspects.

- Server-Side Rendering (SSR)

Server-Side Rendering is a technique that allows pages to be fully pre-rendered on a server before they are sent to the user’s browser or crawler. In this approach, the server processes JavaScript code, generates complete static HTML, and delivers a ready-made version of the page to both users and search engines.

Compared to dynamic rendering, SSR has distinct advantages. By eliminating the need to serve different content based on the user agent, this technique ensures uniformity between the version intended for users and that for crawlers. This approach minimizes the risk of content inconsistencies and improves the overall experience from both navigational and SEO perspectives. In addition, SSR improves site performance because it provides fully loaded content as early as the first load, with generally lower response times than dynamic rendering.

SSR is particularly useful for sites that require immediate indexing of dynamic content, such as news portals or continuously updated product catalogs. However, implementing this technique can be technically complex and requires specific skills to properly configure server-side rendering, especially in large contexts.

- Static rendering

Static rendering is another high-performance alternative to dynamic rendering. In this approach, site pages are pre-rendered during the build process and then stored as static files on a server or CDN. These versions are already fully optimized and require no further processing by the server or client at the time of the request.

One of the major advantages of static rendering is its structural simplicity. Because all content is generated in advance, there is no need to process server-side or client-side JavaScript to display content, significantly reducing loading time and load on the server. This approach is ideal for sites with less frequently updated content, such as static blogs or marketing pages.

However, static rendering may be less suitable for highly dynamic sites that require continuous updates, where the need to regenerate static pages with each change could become burdensome. To mitigate this limitation, modern tools include hybrid rendering capabilities, which combine static and dynamic generation to handle specific content more flexibly.

- Hydration

Hydration is a technique that combines the advantages of static and client-side rendering. In this approach, a page is initially pre-rendered (usually with static rendering) and then enriched with client-side JavaScript features to add interactivity. Hydration allows for improved initial page loading speed, as static content is delivered immediately, while interactive scripts are executed later in the user’s browser.

This type of approach is particularly useful for applications that require advanced functionality without sacrificing fast response times. For example, an e-commerce site might pre-render its product catalogs in a static format and use hydration to manage dynamic filters, interactive forms, or tailored customization features.

However, hydration requires a balance between the amount of JavaScript executed client-side and optimizing loading times, making it a more complex solution than pure static rendering.
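The idea behind hydration can be approximated in a few lines, even outside a browser. In the sketch below, the names `renderCatalog` and `hydrate` and the `__STATE__` id are hypothetical choices for this example. A real framework would attach event listeners to existing DOM nodes, whereas this version returns a plain object so that it can run anywhere.

```javascript
// Server (or build) step: render static HTML and embed the state the
// client will need. `__STATE__` is a hypothetical id chosen for this sketch.
// Real code must also escape the JSON so it cannot close the script tag.
function renderCatalog(state) {
  const items = state.products.map((p) => `<li>${p}</li>`).join("");
  return (
    `<ul id="catalog">${items}</ul>` +
    `<script id="__STATE__" type="application/json">${JSON.stringify(state)}</script>`
  );
}

// Client step: recover the embedded state instead of refetching it, then
// attach behavior. A real framework would bind DOM event listeners here;
// this sketch returns a plain object so it can run outside a browser.
function hydrate(html) {
  const match = html.match(
    /<script id="__STATE__" type="application\/json">(.*?)<\/script>/
  );
  const state = JSON.parse(match[1]);
  return {
    state,
    filter: (term) => state.products.filter((p) => p.includes(term)),
  };
}

const html = renderCatalog({ products: ["red shoes", "blue shoes", "red hat"] });
const app = hydrate(html);

// The static list is readable without JavaScript; filtering needs hydration.
console.log(html.includes("<li>blue shoes</li>")); // true
console.log(app.filter("red").join(", "));         // red shoes, red hat
```

The catalog is fully present in the initial HTML, so crawlers and users see the same content, while the interactive filter only becomes available once the client-side code has run.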

Which solution to adopt for your site?

The choice between SSR, static rendering and hydration depends on the specific needs of the site and the available technical resources. However, all these techniques share one thing in common: they completely abandon the idea of serving separate versions of content to users and crawlers, eliminating one of the main critical issues of dynamic rendering.

Adopting these alternatives ensures not only greater management simplicity, but also faster loading times, superior performance and better adaptability to current technological standards. Search engines such as Google clearly favor sites that reduce the technical complications associated with JavaScript, making these technologies the foundation of modern SEO strategies for years to come.

The features of client-side rendering and server-side rendering

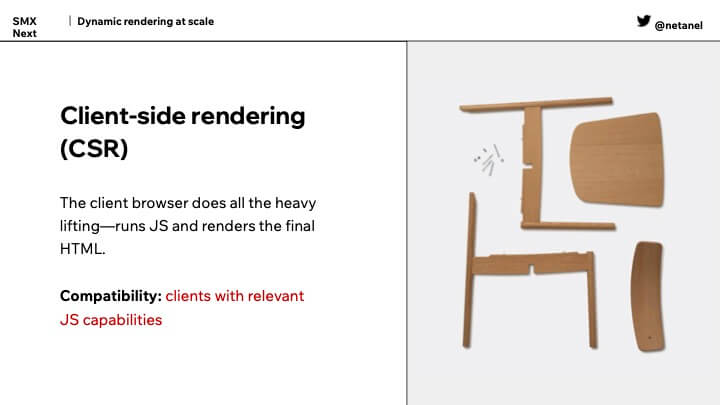

In a talk at SMX Next, Nati Elimelech (tech SEO lead at Wix) provided an overview of how JavaScript works for client-side, server-side, and dynamic rendering, highlighting some interesting aspects that were reported in an article on Search Engine Land.

The first step is to understand what happens with client-side rendering: when a user clicks on a link, the browser sends requests to the server on which the site is hosted. In the case of JavaScript frameworks, that server responds with something slightly different, because it provides an HTML skeleton, i.e., “just the basic HTML, but with lots of JavaScript, basically telling the browser to run JavaScript to get all the important HTML.” The browser then produces the displayed HTML, that is, the HTML used to build the page the way the end user actually views it.

According to Elimelech, this process is reminiscent of assembling Ikea furniture, because the server basically tells the browser, “These are all the pieces, these are the instructions, build the page,” thus shifting all the hard work to the browser instead of the server.

Client-side rendering can be great for users, but there are cases where a client does not execute JavaScript and therefore cannot get the entire content of the page. When this happens to Googlebot or another search engine crawler, the situation is obviously dangerous for SEO, because it can compromise the visibility of the page in SERPs.

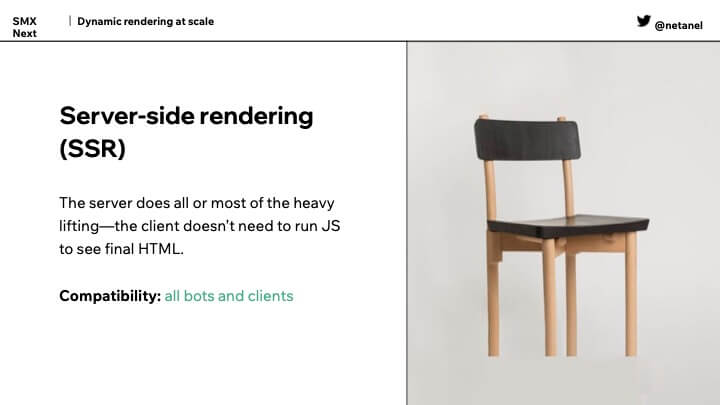

For clients that do not execute JavaScript, you can use server-side rendering, which moves the execution of precisely “all that JavaScript” to the server; all resources are requested on the server side, and the user’s browser and the search engine bot do not need to execute JavaScript to get the HTML fully rendered. Therefore, server-side rendering can be faster and less resource-intensive for browsers.

Using the same analogy as in the previous example, Elimelech explains that “server-side rendering is like providing guests with a real chair they can sit in instead of pieces to assemble,” and when we perform server-side rendering we essentially make the HTML visible to all types of bots and clients, who will be able to see the “important final rendered HTML” regardless of JavaScript capabilities.
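The difference between "pieces to assemble" and "a real chair" can be sketched by comparing the two responses a server might send. This is an illustrative Python sketch, not a real framework: the `PRODUCT` data, the `/bundle.js` path and both function names are assumptions made up for the example.

```python
from html import escape

PRODUCT = {"name": "Oak chair", "price": "89.00"}  # hypothetical data


def client_side_response() -> str:
    """CSR: an HTML skeleton plus a script; the browser must build the content."""
    return (
        "<!doctype html><html><body>"
        '<div id="app"></div>'
        '<script src="/bundle.js"></script>'  # hypothetical bundle path
        "</body></html>"
    )


def server_side_response(product: dict) -> str:
    """SSR: the server assembles the final HTML before sending it."""
    return (
        "<!doctype html><html><body>"
        f'<div id="app"><h1>{escape(product["name"])}</h1>'
        f'<p>Price: {escape(product["price"])}</p></div>'
        "</body></html>"
    )
```

A client that does not execute JavaScript finds nothing useful in the first response, since the product name never appears in it, while the second response already contains the content that matters.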

In this sense, dynamic rendering represents “the best of both worlds” described earlier, Elimelech says, because it allows the “transition between client-side rendered content and pre-rendered content for specific user agents,” as this diagram also explains.

In this case, the user’s browser runs JavaScript to get the displayed HTML and so still benefits from the advantages of client-side rendering, which often include a perceived increase in speed. On the other hand, when the client is a bot, server-side rendering is used to serve the fully rendered HTML and “see everything that needs to be seen.”

Thus, site owners are still able to offer their content regardless of the client’s JavaScript capabilities; since there are two streams, then, they can also optimize them individually to better serve users or bots without affecting the other.
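The routing decision behind these two streams can be sketched as a simple user-agent check. The sketch below is in Python and makes several assumptions: the list of crawler tokens is illustrative and incomplete, and the names `is_crawler` and `choose_stream` are hypothetical.

```python
import re

# Illustrative, incomplete list of crawler tokens (an assumption, not an official list).
BOT_PATTERN = re.compile(r"googlebot|bingbot|duckduckbot|yandex", re.IGNORECASE)


def is_crawler(user_agent: str) -> bool:
    """Return True when the User-Agent header contains a known crawler token."""
    return bool(BOT_PATTERN.search(user_agent or ""))


def choose_stream(user_agent: str) -> str:
    """Pick which version of the page to serve for this request."""
    return "prerendered" if is_crawler(user_agent) else "client-side"
```

For example, `choose_stream("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)")` selects the pre-rendered stream, while an ordinary browser string falls through to the client-side one. Keep in mind that the User-Agent header is supplied by the client and can be set to any value.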

Dynamic rendering, FAQ and doubts to be clarified

Dynamic rendering is a technique that has raised many questions among developers, SEO specialists and webmasters, especially regarding its practical use and future implications. Below we collect and answer the most frequently asked questions to clarify doubts and offer a more complete overview of its features, advantages, limitations and role in the current technological landscape.

- Is dynamic rendering considered cloaking?

No, dynamic rendering is not considered cloaking, as long as certain basic rules are followed. Google defines cloaking as a practice in which two completely different versions of a page are intentionally served: one for users and another for search engines, with the goal of manipulating SERP results, which is why it falls under the prohibited practices of black hat SEO. In dynamic rendering, on the other hand, the two versions (the one for crawlers and the one for real users) must be substantially similar in content, varying only in their technical presentation mode.

An acceptable example would be the removal of unnecessary JavaScript widgets or graphical effects from the version served to the bots, provided that the fundamental content and meaning of the page remain the same. Transparency is key: any technical differences are accepted only if they are designed to improve crawling and indexing, not to fool search engines or users.
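One way to keep an eye on this requirement is to compare the visible text of the two versions. The following Python sketch uses only the standard library; it assumes you already have the fully rendered HTML of the user version (for example, saved from a browser), and the function names and sample markup are hypothetical.

```python
from difflib import SequenceMatcher
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.parts.append(data.strip())


def visible_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def content_similarity(bot_html: str, user_html: str) -> float:
    """Return a ratio from 0.0 to 1.0; 1.0 means the visible text is identical."""
    return SequenceMatcher(None, visible_text(bot_html), visible_text(user_html)).ratio()
```

A ratio close to 1.0 suggests the two versions differ only in technical presentation, such as a removed widget script. A low ratio is a signal to investigate, although the threshold you choose is a judgment call and not something Google specifies.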

- Which sites can still benefit from dynamic rendering?

Although dynamic rendering has been downgraded to a temporary solution, there are specific contexts in which it can still be a useful resource. Sites that can benefit from it include:

- E-commerce with large inventories or frequently updated content. In these cases, dynamic and constantly updated content presents a challenge for indexing and crawling.

- Applications with particularly complex JavaScript interfaces. Here, core content is displayed dynamically via frameworks such as Angular or React and is difficult for bots to interpret.

- Projects where developers have limited technical resources or time. For smaller companies or those with small budgets, dynamic rendering can be an affordable temporary solution until resources are available to adopt superior technologies.

However, it should be emphasized that dynamic rendering is not a lasting solution, and every site should plan from the outset to migrate to more modern and scalable alternatives.

- How much does dynamic rendering impact indexing times?

Dynamic rendering can significantly reduce or worsen indexing times, depending on how it is implemented. On the one hand, it can speed up crawling by offering a static, optimized version to crawlers, solving JavaScript-related interpretation issues. On the other, if misconfigured, rendering could introduce higher response times, penalizing both crawl budget and crawl frequency.

For example, overloaded servers generating on-demand pre-rendered content could slow the response toward search engine bots, causing a delay in page indexing or, in the worst cases, even missed crawl attempts. Using a strategic cache for HTML versions reduces this problem.
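A cache of this kind can be sketched as a small time-to-live (TTL) store placed in front of the renderer. This is a minimal Python sketch under stated assumptions: the `render` callable stands in for whatever pre-rendering service you use, and the class name, default TTL and injectable `clock` are hypothetical choices made for the example.

```python
import time


class PrerenderCache:
    """Serve stored HTML until it expires, then render again."""

    def __init__(self, render, ttl_seconds=300.0, clock=time.monotonic):
        self._render = render      # hypothetical callable: url -> HTML string
        self._ttl = ttl_seconds
        self._clock = clock        # injectable so the expiry logic can be tested
        self._store = {}           # url -> (expires_at, html)

    def get(self, url: str) -> str:
        now = self._clock()
        entry = self._store.get(url)
        if entry is not None and entry[0] > now:
            return entry[1]        # cache hit: no rendering work
        html = self._render(url)   # cache miss or expired entry
        self._store[url] = (now + self._ttl, html)
        return html
```

With this in place, repeated crawler requests for the same URL within the TTL are answered from memory instead of triggering a new render. The right TTL depends on how often the content changes: too long and bots see stale pages, too short and the server load returns.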

- Will Google continue to support dynamic rendering?

At the moment, Google still supports dynamic rendering, but its official position is clear: this technique is considered a temporary workaround, not a permanent solution. As search engines improve their ability to process JavaScript on a large scale, dynamic rendering is likely to become less and less necessary and may eventually be superfluous.

Google’s 2022 documentation update emphasized that newer technologies, such as server-side rendering, static rendering and hydration, represent preferable and more sustainable alternatives for addressing the challenges of indexing complex JavaScript content.

- Does dynamic rendering impact users?

No, dynamic rendering has no direct impact on user experience. Because browsers always receive the standard client-side rendered version, the behavior and interactive features of the site remain unchanged for real visitors. This technique only affects how crawlers access the content and does not affect the design, navigation, or performance perceived by users.

However, indirect risk must be considered: if the dynamic rendering configuration causes server-side slowdowns (e.g., due to the high load generated by pre-rendering) or implementation errors, users may also experience delays in loading pages. Careful system optimization is always necessary to avoid these problems.

- Is dynamic rendering safe?

Yes, dynamic rendering is generally considered safe, as crawlers access only the public and indexable content of the site, without interacting with critical or confidential systems. In addition, the separation of crawler and user versions means no exposure of sensitive or private information.

However, to avoid security risks, it is important to properly configure access and verify that only legitimate bots, such as Googlebot or Bingbot, can request the static version. This can be done by configuring user agent whitelisting and monitoring any suspicious requests.
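Because a user-agent string can be set to anything, a whitelist on its own is a weak check. A common complement, which Google describes for verifying Googlebot, is a reverse DNS lookup followed by a forward lookup that must return the original IP. The Python sketch below illustrates that idea; the hostname suffixes are assumptions to verify against each search engine's current documentation, the lookups shown handle IPv4 only, and the function name is hypothetical.

```python
import socket

# Assumed hostname suffixes; check each search engine's documentation before relying on them.
TRUSTED_SUFFIXES = (".googlebot.com", ".google.com", ".search.msn.com")


def is_verified_crawler(ip: str, reverse_lookup=None, forward_lookup=None) -> bool:
    """Reverse-resolve the IP, check the domain, then forward-confirm the hostname."""
    # Lookups are injectable so the logic can be exercised without network access.
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        host = reverse_lookup(ip)
        if not host.endswith(TRUSTED_SUFFIXES):
            return False
        # The hostname must resolve back to the same IP, otherwise it may be spoofed.
        return ip in forward_lookup(host)
    except OSError:
        return False  # failed lookups are treated as "not verified"
```

In practice the result would be cached per IP, since DNS lookups on every request would add exactly the kind of latency that dynamic rendering is supposed to avoid.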

- Why hasn’t the SEO community adopted dynamic rendering in a big way?

Dynamic rendering was initially embraced enthusiastically by the SEO community, but its use has remained limited due to several factors. First, the technical complexity of configuration and management has discouraged many companies without adequate resources. Creating two versions of the same page increases costs and requires specialized skills, which are not always available in non-technical SEO teams.

In addition, the availability of more advanced technologies, such as server-side and static rendering, has further reduced the need to adopt dynamic rendering. Many SEO professionals prefer to invest directly in more scalable and sustainable options, leaving dynamic rendering as a last resort for exceptional situations.

- Is it difficult to switch from dynamic rendering to an alternative solution?

Switching from dynamic rendering to a modern technology, such as SSR, static rendering or hybrid rendering with hydration, can be complex but is certainly doable with good planning. The biggest hurdle lies in the need to redesign significant parts of the site infrastructure, moving from a model that separates content (crawlers/users) to one that unifies them.

The important thing is to approach this transition gradually, starting with the most strategically important pages (e.g., the product pages of an e-commerce site or blog posts) and testing each change with SEO tools to make sure there is no loss of visibility or indexing. A good practice is to work closely with the development team to identify the most suitable technology and plan a realistic roadmap for the migration.