Site traffic: what it is, how to monitor it and detect any drop

It is not just a set of isolated numbers, nor just a question of quantity: a site’s traffic is an essential indicator of what works and what doesn’t in our digital project, because it lets us gauge how well Google rates the site, analyze user interactions, and investigate where visitors come from, which pages they prefer and how they behave. But collecting the data is not enough: to stay on track and ensure steady growth we also need to understand the sources of traffic (organic, paid, direct or referral) and analyze how they affect the overall performance of the site. We also need to be proactive, because the (bad) day will surely come when we see traffic decrease, and at that point we need to know where to look to analyze the causes of the decline, knowing that every small change has the potential to destabilize user flows to our site. In short, it is time to focus on analyzing a site’s traffic, to better understand what it means and to explore which tools we can use to track it and diagnose any anomalies.

What is website traffic

A website’s traffic is the total number of visitors accessing the site, regardless of which channel they come from.

Each visit can originate from different sources such as search engines, advertisements, links from other sites or direct access. Monitoring traffic allows you to assess your site’s visibility and performance, providing useful information to improve its effectiveness and optimize user acquisition strategies.

And so, web traffic represents the flow of visitors that land on a site through different channels and sources; on a practical level, it translates into the total number of hits a page receives in a given time frame, but the term encompasses a much more complex dynamic, because it is not just about counting visits, but about understanding where they come from and how users arrive at our content.

On the one hand, traffic is one of the main indicators of the health of a site, useful for measuring the effectiveness of the strategies adopted, whether SEO, SEM, advertising campaigns or marketing actions. On the other, knowing how visitors reach the site allows us to determine whether we are correctly targeting our attention to the right traffic sources or whether an adjustment in strategies is needed.

What are the main sources of web traffic

In general, we can trace the sources of web traffic to four broad categories: organic traffic, paid traffic, direct traffic and referral traffic. Understanding these different types gives us a more defined view of how well a site is really performing and which areas to focus our interventions on.

Each channel has its own role and peculiarities, and incorporating them into complementary strategies helps boost the visibility and success of the site, in the short and long term.

Generally speaking, organic traffic is probably the most coveted source, especially for those working in SEO: in addition to being generated for free (at least from a direct economic point of view), it can guarantee sustainable and stable results over time, provided that effective and up-to-date SEO strategies are put in place. Paid traffic, on the other hand, guarantees instant results, but they last only as long as the budget does, while referral and direct traffic tell us about the link between our content, our network and the importance of the brand.

Regardless of the source, the detailed analysis of a site’s traffic gives us a picture of its overall health: if we observe a continuous influx of visits from the various channels, it means that the site has a solid base of visibility, while any declines in specific segments should make us think about which strategies are less effective and may need an overhaul. Measuring each type of traffic, therefore, is not only a quantitative metric, but a vital indicator to better target improvement and optimization efforts.

When we analyze web traffic, distinguishing and understanding the different sources from which visitors come is therefore one of the priority aspects, also to have a clear view on which type is best suited to our strategies. To monitor organic traffic, we can use analytical tools – Google Analytics or Google Search Console, among the first – or SEO tools based on statistical and predictive calculations, such as SEOZoom.

- Organic traffic

Organic traffic is the lifeblood of many digital strategies. It includes all visitors who land on a website from search engines – such as Google, Bing or Yahoo – without the aid of paid advertising campaigns and therefore without spending budget directly on advertising: it is a signal that our pages are well positioned in search results, attracting clicks naturally thanks to the quality of organic ranking, without additional advertising costs. The visitor does a specific search on Google, finds our page among the results, clicks and lands on the site: here is a visit coming from organic traffic.

Growing organic traffic implies that our site, over time, has been able to respond effectively to users’ search queries, gaining visibility and credibility in the eyes of Google.

The main advantage of this source is sustainability in the long term: SEO requires a long initial period to get results, but once the brand is established it generates continuous and consistent visits, without the need for further direct economic investment, unlike paid campaigns. While reaching these positions requires an investment during that initial period, the benefits can continue over time if the site can maintain and improve its presence in the SERP.

- Paid traffic

Paid traffic is generated through paid advertisements, such as campaigns created through Google Ads or other advertising platforms, including social media. These visitors click on sponsored links as a result of an economic investment aimed at promoting specific pages. So in this case, in order to get users to view content, we pay a certain amount for each click we receive. It is a very important source of traffic for those who want immediate results or need to launch a new site, because it creates instant visibility and quickly reaches a specific audience. However, paid traffic involves ongoing and often high costs: as long as we keep the campaigns active, we will continue to receive traffic, but once the funds run out, the flow of new visitors will stop almost immediately. Unlike organic, this channel cannot sustain itself in the long run without continuous investment. Relying only on paid traffic can therefore be costly in the long run, without offering the same level of sustainability and continuity that SEO strategies and organic traffic do. The visits obtained will be valuable, but tied hand-in-hand to the available budget.

- Direct traffic

Direct traffic collects all those visitors who access the site by typing the URL directly into their browser, without going through other external sources such as search engines or social media. This type of traffic correlates closely with brand awareness and with the recognizability of the site or individual page: if a user remembers the name of the site and types it in directly without going through a search engine, it means that he or she is familiar with and trusts our brand, product or services. Direct traffic often comes from already loyal users or from offline marketing actions, such as printing the URL on promotional materials or classic advertising. While this type of traffic is a reliable source of users with prior experience of the site, it does not offer the same opportunities for growth as organic traffic, because its potential for increase is limited by brand awareness. It can also include clicks from bookmarks or emails that are not properly tagged with tracking codes.

- Referral traffic

Referral traffic comes from visitors coming to our site by clicking on a link found on another domain; it is therefore generated from links on other sites that point to specific content in our domain. This type of visit is an indication of how much our site is cited and linked to around the web, often related to link building campaigns or valuable content that other sources find useful to share with their users. Referral links come from partner sites, blogs or other external platforms that cite or link back to our content: capturing traffic that can be traced back to such sources not only improves the visibility of the site, but often brings a boost in terms of credibility and trust in the industry, also facilitating search engine rankings.

Checking how this traffic develops can provide key information about the effectiveness of our online relationships and the site’s ranking in relevant networks.
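To make the four categories concrete, here is a minimal sketch of how a single visit could be assigned to a channel from its landing URL and referrer. It is only an illustration: the function name, the list of search engines and the set of `utm_medium` values treated as paid are our assumptions, and real analytics tools apply far more detailed rules.

```python
from urllib.parse import urlparse, parse_qs

# Assumption: a short, illustrative list of search engine names
SEARCH_ENGINE_LABELS = {"google", "bing", "yahoo"}
# Assumption: utm_medium values that we treat as paid campaigns
PAID_MEDIUMS = {"cpc", "ppc", "paid", "paidsearch", "display"}


def classify_source(landing_url: str, referrer: str, own_domain: str) -> str:
    """Return 'paid', 'organic', 'referral' or 'direct' for a single visit."""
    query = parse_qs(urlparse(landing_url).query)
    medium = query.get("utm_medium", [""])[0].lower()
    # Campaign tagging (or a Google Ads click ID) marks the visit as paid
    if medium in PAID_MEDIUMS or "gclid" in query:
        return "paid"
    # No referrer at all: typed URL, bookmark or untagged email
    if not referrer:
        return "direct"
    host = (urlparse(referrer).hostname or "").lower()
    # Navigation inside our own domain has no external source
    if host == own_domain or host.endswith("." + own_domain):
        return "direct"
    if any(label in SEARCH_ENGINE_LABELS for label in host.split(".")):
        return "organic"
    return "referral"


print(classify_source("https://example.com/page", "https://www.google.com/", "example.com"))
```

Checking for campaign tags before looking at the referrer matters, because a click on a search ad also arrives with a search engine as referrer and would otherwise be counted as organic.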

Why it is important to monitor web traffic

Web traffic tells us a lot about the performance of our site and allows us to understand whether we are intercepting and meeting the needs of our audience, and proper monitoring of user flow allows us to know how they access our pages, where they come from, and what they do once they land on the site.

This type of analysis is not only used to measure how many people visit the site, but more importantly to understand the impact of our strategies, from the SEO side as well as from the marketing side, to accurately and as objectively as possible assess KPIs such as engagement, interest and overall performance of the pages of our online project.

One of the most interesting aspects of traffic monitoring is that it allows us to find out which sources are contributing the most visitors and how each of them affects our key metrics. When we see growth in organic traffic, for example, we can infer that our SEO strategies are working and that content is well optimized to adequately respond to user queries on search engines; conversely, a drop in organic traffic might alert us to the need to revise our keywords or check changes in Google’s algorithm, which can impact overall visibility.

No less important is to take into account other sources of traffic, such as paid traffic, which, by its nature, requires ongoing management and careful monitoring: to assess the effectiveness of a marketing campaign, we need to know exactly how many visits it produces, but also how many of these visits translate into conversions, whether they are sales, leads or other desired actions. In this way, we can optimize the budget invested, avoiding waste and ensuring that resources are used efficiently.

Constant traffic monitoring also helps us to refine the user experience: monitoring metrics such as bounce rate or average time spent on the site allows us to understand whether we are delivering content that truly engages users or whether, on the contrary, there are pages that lead them to leave the site too quickly. When we notice that users are spending more time on specific content and interacting with it more, we can adjust our strategy and replicate this success on other sections of the site.
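As a rough illustration of the metrics mentioned above, the following sketch computes bounce rate and conversion rate per channel from a list of sessions. The `Session` structure is hypothetical, and the definition of a bounce as a single-page session is an assumption: analytics platforms define it in different ways.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Session:
    channel: str        # e.g. "organic", "paid", "direct", "referral"
    pages_viewed: int   # pages seen during the session
    converted: bool     # sale, lead or other desired action


def channel_report(sessions: list[Session]) -> dict[str, dict[str, float]]:
    """Aggregate sessions, bounce rate and conversion rate for each channel."""
    totals = defaultdict(lambda: {"sessions": 0, "bounces": 0, "conversions": 0})
    for s in sessions:
        row = totals[s.channel]
        row["sessions"] += 1
        # Assumption: a bounce is a session with at most one page viewed
        row["bounces"] += int(s.pages_viewed <= 1)
        row["conversions"] += int(s.converted)
    return {
        channel: {
            "sessions": row["sessions"],
            "bounce_rate": row["bounces"] / row["sessions"],
            "conversion_rate": row["conversions"] / row["sessions"],
        }
        for channel, row in totals.items()
    }


sample = [
    Session("organic", 1, False),
    Session("organic", 3, True),
    Session("paid", 1, False),
    Session("paid", 1, False),
]
print(channel_report(sample))
```

Reading the two rates side by side for each channel helps separate a channel that brings many visits from one that brings visits that actually convert.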

Site traffic drops: what to do when we lose visitors

Beyond the cautions put in place, in our experience of managing a website we may sooner or later find ourselves faced with an unexpected reduction in traffic.

We open Search Console or Analytics one morning and find that site traffic has dropped, and that traffic from Google Search in particular is dropping.

And no matter how hard we try, reason and intervene, our site continues to gradually lose organic traffic.

Identifying the causes of the problem is the first step in reversing this negative trend and bringing the flow of visitors back to normal: a decline in organic traffic can depend on several reasons, some of which are related to factors internal to the site (technical problems or manual actions), while others are related to broader changes, such as algorithmic changes by Google or changes in users’ search trends.

Declining sites: frequent causes and Google’s advice

But what is the reason for this descent, whether sudden or slow, and how can we understand what lies behind the drop in traffic?

Google comes to our aid with a series of tips for analyzing the reasons that can lead to the loss of organic traffic, covering the causes it considers most frequent, and with a guide (including graphics) that visually shows the effects of such declines and helps us analyze the negative fluctuations in organic traffic so that we can respond and lift our site.

The issue has also been addressed on a dedicated page in Google’s documentation, in which Daniel Waisberg explains analytically what negative traffic fluctuations look like and what they mean for a site’s volumes.

In a nutshell, according to the Search Advocate a decline in organic search traffic “can occur for a number of reasons and most of them can be reversed,” although “it may not be easy to figure out exactly what happened to your site.”

Just to help us understand what is affecting our site’s traffic, the Googler has sketched out some examples of drops and how they can potentially affect our traffic.

The shape of the graphs for a site losing traffic

For the first time, then, Google is showing what various types of organic traffic drops really look like through screenshots of Google Search Console performance reports, adding a number of tips to help us deal with such situations. It is interesting to note the visual difference that exists between the various graphs, a sign that each type of issue generates characteristic and distinctive fluctuations that we can recognize at a glance.

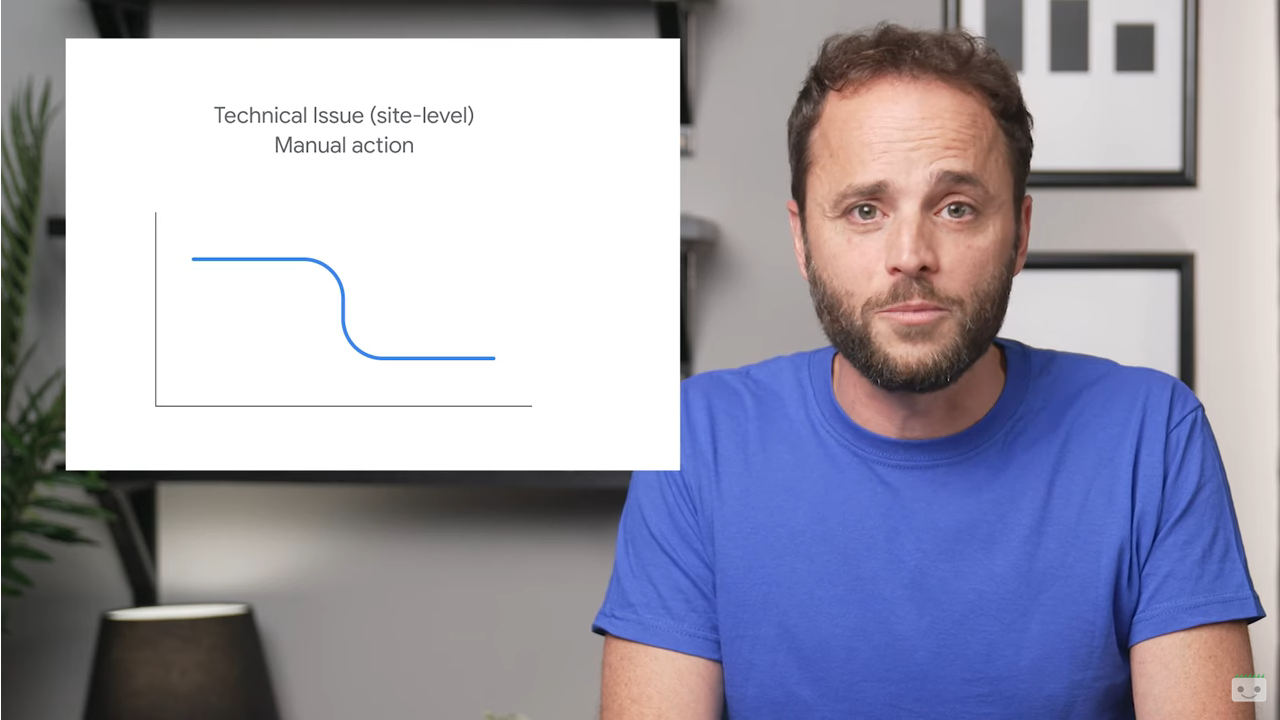

In particular, site-level technical problems and manual actions generally cause a huge and sudden drop in organic traffic (top left in the image).

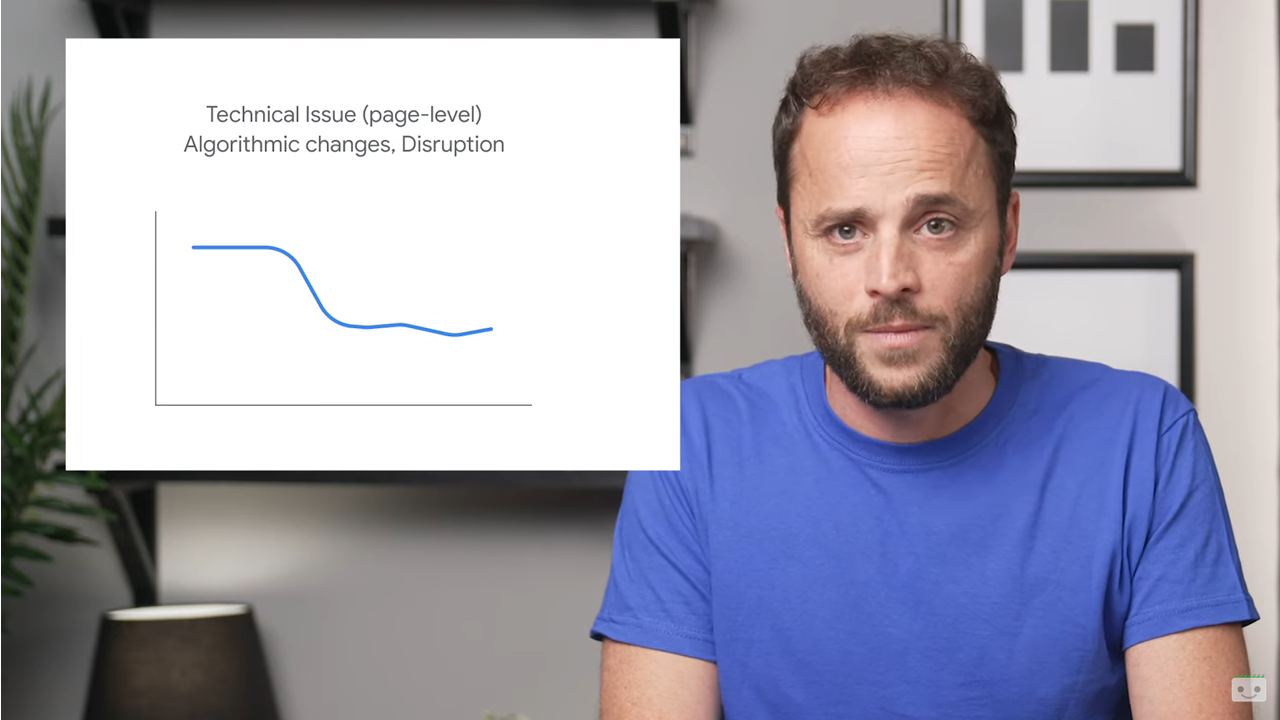

Technical problems at the page level, an algorithm change such as a core update or a change in search intent cause a slower drop in traffic, which tends to stabilize over time.

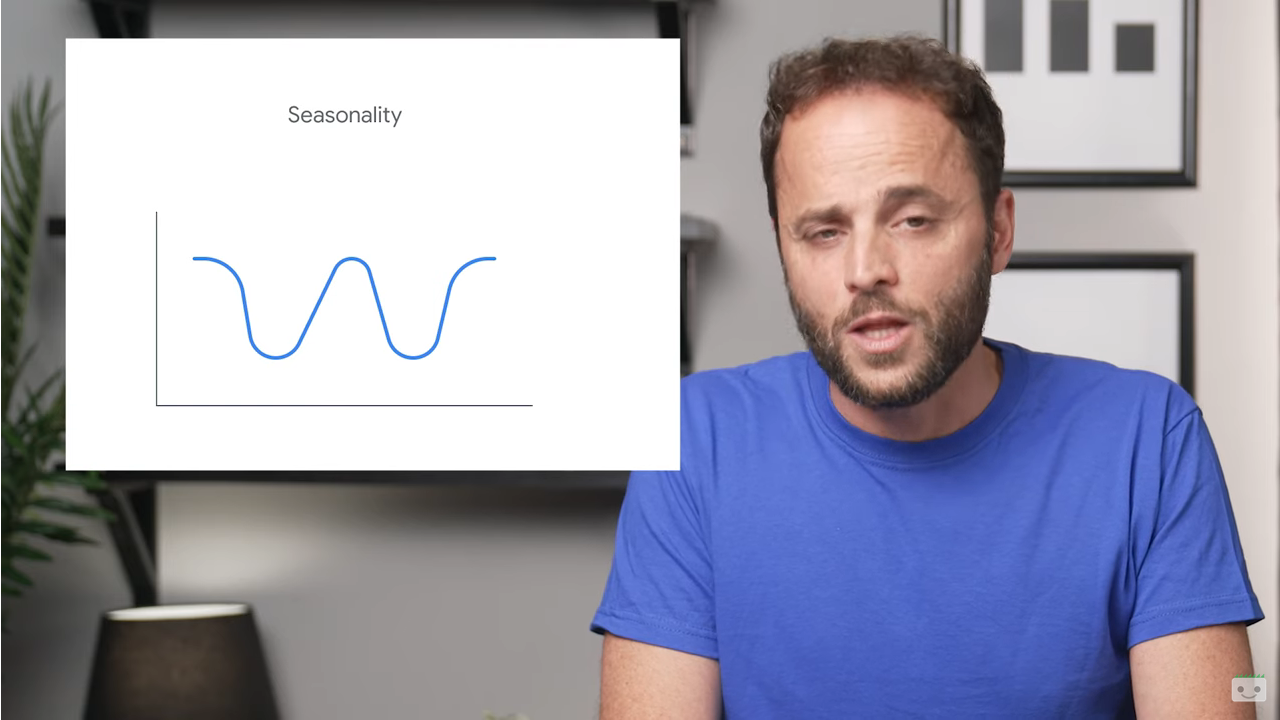

Then there are the changes related to seasonality, trending and cyclical fluctuations that cause graphs with curves characterized by ups and downs.

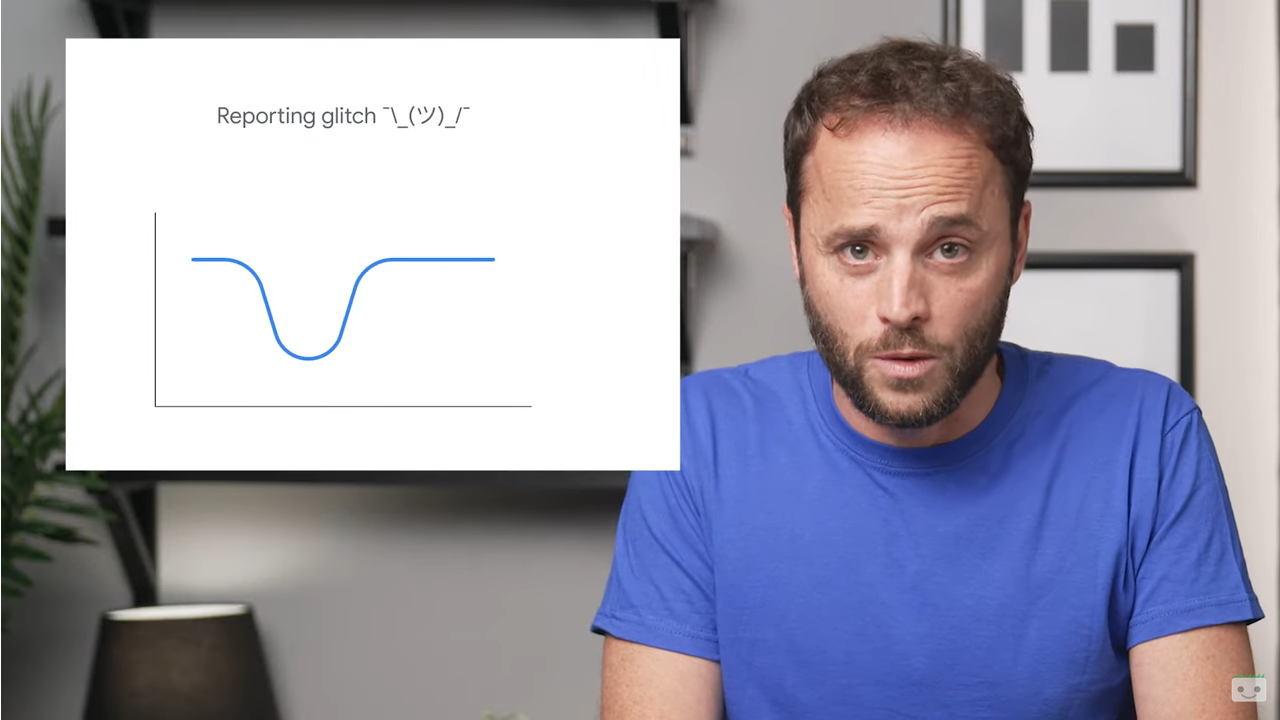

Finally, the last graph shows the oscillation of a site flagged for technical problems in Search Console and then returned to normal after correction.

Put simply, recognizing the exact cause of the drop in organic traffic is a first step toward solving the problem.

The six causes of SEO traffic loss

According to Waisberg, there are six main causes of declining search traffic:

- Technical problems. Errors that may prevent Google from crawling, indexing or serving our pages to users, such as server unavailability, errors in retrieving the robots.txt file, 404 pages and more. Such problems can affect the entire site (e.g., if the site is down) or a single page (as with a misplaced noindex tag, whose effect depends on Google recrawling the page and therefore generates a slower drop in traffic). To identify the problem, Google suggests using the crawl stats report and the page indexing report to find out if there is a corresponding spike in detected problems.

- Security problems. When a site is affected by a security threat, Google might alert users before they reach its pages with warnings or interstitial pages, which can reduce search traffic. To find out if Google has detected a security threat on the website, we can use the Security Issues report.

- Spam problems. A site’s failure to comply with Google’s guidelines, or Google’s detection of practices that violate its spam policies (via automated systems or human review), could result in a manual action, and then a penalty or even the removal of some pages or the entire domain from Google Search results. To see if this is why our site is losing organic traffic, we can check the Google Search spam policies and the Manual Actions report in Search Console, remembering that Google’s algorithms might act on violations of the rules even without a manual action.

- Algorithmic Updates. Google “constantly improves the way it evaluates content and updates its publishing and ranking algorithms in search results accordingly,” Waisberg says, and core updates and other minor updates can change the performance of some pages in Google Search results. Basically, if we suspect that a drop in traffic is due to an algorithmic update, it is important to understand that there may be nothing wrong with our content. SERP positions are not static or fixed, and indeed Google search results are dynamic in nature because the web itself is constantly changing with new and updated content. This constant variation can cause both improvements and declines in organic traffic in Search. In order to determine whether we should make a change to our site, the guide suggests analyzing the main pages of the site in Search Console and assessing the drop in ranking, and in particular:

- A small drop in ranking, e.g. decrease from 2 to 4: Do not take radical action. A small drop in ranking occurs when there is a slight change in position in the top results (e.g., a drop from position 2 to 4 for a search query). In Search Console, we may see a significant drop in traffic without a significant change in impressions. Small fluctuations can occur at any time (we might even move up in position, all without doing anything), and Google recommends avoiding making radical changes if the page retains good performance.

- Significant drop in position, e.g. decrease from 4 to 29: Evaluate interventions to reverse course. A significant drop in position occurs when we notice a major drop in the top results for a wide range of terms (e.g., if we go from the top 10 results to position 29). In cases like this, Google encourages us to self-assess the website as a whole (and not just individual pages) to make sure that the content is useful, reliable, and designed for people. If we have made changes to the site, it may take some time before they take effect (a few days or even several months). For example, it may take months for Google’s systems to determine that a site is now producing content that is useful in the long run. In general, the guide recommends waiting a few weeks before analyzing the site again in Search Console to find out if the actions have had a positive effect on the ranking position. In any case, we must remember that Google does not guarantee that changes made to the website will result in a noticeable impact on search results. If other content is more deserving, it will continue to rank well in the search engine’s systems.

- Site moves and migrations. Then there is another specific case where the site may lose traffic, that is, when a migration has just been completed: if we change the URLs of existing pages on the site, Waisberg clarifies, we may experience fluctuations in ranking while Google crawls and indexes the site again. As a rule of thumb, moving a medium-sized website may take up to several weeks before Google notices the change, while larger sites may take even longer.

- Seasonality and changes in search interest. Sometimes, changes in user behavior “alter the demand for certain queries, due to a new trend or seasonality over the year.” Therefore, a site’s traffic may decline simply because of external influences, due to changes in search intent identified (and rewarded) by Google. To identify the queries that have experienced a drop in clicks and impressions, we can use the performance report, applying a filter to include only one query at a time (choosing the queries that receive the most traffic, the guide explains), and then check Google Trends searches to see if the drop has affected only our site or the entire web.
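The distinction between a small and a significant ranking drop, described above under algorithmic updates, can be sketched as a simple rule. The function name and the numeric thresholds are our assumptions, chosen only so that the guide’s two examples (from 2 to 4, and from 4 to 29) fall on the expected side; Google does not publish such thresholds.

```python
def assess_position_drop(before: float, after: float, small_threshold: float = 3.0) -> str:
    """Classify the change in average position for a page or query.

    Positions are 1-based, so a larger number means a worse ranking.
    Assumption: a drop of at most `small_threshold` places that still
    leaves the page in the top 10 counts as small.
    """
    delta = after - before
    if delta <= 0:
        return "no drop"
    if delta <= small_threshold and after <= 10:
        return "small drop: avoid radical changes"
    return "significant drop: review the site as a whole"


print(assess_position_drop(2, 4))
print(assess_position_drop(4, 29))
```

A rule like this is only a first filter: a large drop on one query matters less than the same drop repeated across a wide range of terms.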

How to diagnose a drop in Google Search traffic

According to Waisberg, the most efficient way to figure out what has happened to a site’s traffic is to open its Search Console performance report and look at the main graph, which contains a lot of useful information.

A graph “is worth a thousand words” and summarizes a lot of information, the expert adds, and besides, the analysis of the shape of the line will already tell us a lot about the potential causes of the movement.

If both impressions and clicks have decreased, we start by comparing our graph with the previous ones to immediately identify which case we are in. If, on the other hand, impressions remain the same but clicks decrease, there may be a problem with “interest” on the part of users: our pages may not have the best possible title and snippet, so users do not understand the content of the page, or perhaps other sites have earned a more interesting and appealing rich result that wins the clicks.
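The reasoning on clicks and impressions can be expressed as a small check on the totals of two periods. This is a sketch under stated assumptions: the function name and the 10% tolerance are ours, and the totals would be read manually from the performance report.

```python
def diagnose_drop(prev_clicks: int, prev_impressions: int,
                  cur_clicks: int, cur_impressions: int,
                  tolerance: float = 0.10) -> str:
    """Tell a visibility problem apart from a click-through problem.

    Assumption: changes smaller than `tolerance` (10% by default)
    are treated as normal fluctuation.
    """
    def relative_change(old: int, new: int) -> float:
        return (new - old) / old if old else 0.0

    click_change = relative_change(prev_clicks, cur_clicks)
    impression_change = relative_change(prev_impressions, cur_impressions)

    if click_change >= -tolerance:
        return "no notable drop in clicks"
    if impression_change < -tolerance:
        return "visibility drop: compare the graph with the typical patterns"
    return "click-through drop: review titles, snippets and competing results"


print(diagnose_drop(1000, 20000, 600, 19800))
```

In the example call impressions are almost unchanged while clicks fall by 40%, so the check points to titles and snippets rather than to rankings.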

To perform this analysis, the article suggests 5 pointers:

- Change the date range to include the last 16 months, to analyze the decline in traffic in context and rule out a seasonal decline that occurs each year around the holidays, or a trend.

The following graph, taken like the others on the page from Google’s guide, shows performance with annual seasonality (16 months of data): it is immediately clear that the recent drop mirrors the one that occurred the previous year.

- Compare the drop period with a similar period, comparing the same number of days and preferably the same days of the week, to review exactly what has changed: for example, we can compare the last 3 months with the previous period or with the same 3 months of the previous year. By clicking through all the tabs we can find out if the change occurred only for specific queries, URLs, countries, devices, or search appearances.

In the image below we see a three-month comparison performance graph: the decline in traffic is evident when comparing the solid line (last three months) with the dotted line (previous three months).

- Analyze the different types of searches separately, to understand whether the decline occurred in Google Search, Google Images, the Video tab, or Google News.

- Monitor average position in search results. In general, Waisberg says, we should not focus too much on “absolute position”: impressions and clicks are ultimately the yardsticks for evaluating a site’s effectiveness. However, if we notice a dramatic and persistent drop in position, we might start to self-assess our content to see if it is useful and reliable.

- Look for patterns in the pages affected by the drop. The Pages table can help us identify patterns that could explain the origin of the drop. For example, it is important to find out whether the drop occurred across the entire site, in a group of pages, or even in just one very important page of the site. We can do this analysis by comparing the drop period with a similar period and selecting “Click Difference” to sort the pages by the traffic they lost. If we find that it is a site-wide problem, we can examine it further with the Page Indexing report; if the drop affects only a group of pages, we will instead use the URL Inspection tool to analyze these resources.
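The comparison by click difference described in the last pointer can also be reproduced outside the interface, for example on two exports of the Pages table. The sketch below assumes each export has already been loaded into a dictionary mapping page URL to clicks; the function name and the sample figures are purely illustrative.

```python
def click_difference(previous: dict[str, int], current: dict[str, int]) -> list[tuple[str, int]]:
    """Return (page, difference in clicks) pairs, biggest losses first.

    Pages missing from one period are counted as having zero clicks there.
    """
    pages = set(previous) | set(current)
    differences = [(page, current.get(page, 0) - previous.get(page, 0)) for page in pages]
    # Sort by difference, then by URL so the order is stable
    return sorted(differences, key=lambda item: (item[1], item[0]))


# Hypothetical clicks per page in the comparison period and in the drop period
previous_period = {"/guide": 100, "/blog": 50, "/old-page": 10}
drop_period = {"/guide": 40, "/blog": 55}

for page, diff in click_difference(previous_period, drop_period):
    print(f"{page}: {diff:+d}")
```

If a handful of URLs account for most of the loss, the problem is likely at page level; if the losses are spread evenly, a site-wide cause becomes the more plausible hypothesis.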

Study the context of the site

Digging deep into the reports, also using Google Trends or SEO tools such as ours in support, can help to understand in which category our traffic drop falls, and in particular to find out if this traffic loss is part of a larger industry trend or if it is happening only for our pages.

There are two particular aspects to analyze regarding the context of action of our site:

- Changes in search interest or new product. If there are big changes in what and how people search (we had a demonstration of this with the pandemic), people may start looking for different queries or use their devices for different purposes. Also, if we sell a specific brand or product online, there may be a new competing product that cannibalizes our search queries.

- Seasonality. As we know, there are some queries and searches that follow seasonal trends; for example, those related to food, because “people search for diets in January, turkey in November, and champagne in December” (at least in the U.S.) and different industries have different levels of seasonality or peak season.

Analyzing query trends

Waisberg suggests using Google Trends to analyze trends in different industries, because it provides access to a largely unfiltered sample of actual search queries made to Google; moreover, the data is “anonymized, categorized and aggregated,” allowing Google to show interest in topics from around the world or down to the city level.

With this tool, we can check the queries that are driving our site traffic to see if they have obvious declines at different times of the year.

The example in the image below shows three types of trends:

- Turkey has a strong seasonality, peaking each year in November (Thanksgiving season, for which turkey is the signature dish).

- Chicken shows some seasonality, but less pronounced.

- Coffee is significantly more stable and “people seem to need it throughout the year.”

Also within Google Trends we can discover two other interesting insights that could help us with search traffic:

- Monitor the top queries in our region and compare them to the queries from which we receive traffic, as shown in the Search Console performance report. If queries are missing in the traffic, check if the site has content on that topic and make sure it is crawled and indexed.

- Check for queries related to important topics, which might bring up related queries on the rise and help us prepare the site properly, such as adding related content.
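To quantify how seasonal a query is, we can summarize a monthly interest series by calendar month. The sketch below is only an illustration: the function names are ours, the input is assumed to start in January and cover whole years, and the sample numbers are invented to mimic a "turkey" and a "coffee" pattern, not taken from Google Trends.

```python
from statistics import mean


def seasonal_profile(monthly_interest: list[float]) -> list[float]:
    """Average interest for each calendar month (index 0 = January)."""
    if not monthly_interest or len(monthly_interest) % 12 != 0:
        raise ValueError("expected whole years of monthly values starting in January")
    return [mean(monthly_interest[month::12]) for month in range(12)]


def peak_month_and_ratio(monthly_interest: list[float]) -> tuple[int, float]:
    """Return the peak month (1-12) and the ratio between peak and average interest."""
    profile = seasonal_profile(monthly_interest)
    peak_index = max(range(12), key=lambda month: profile[month])
    return peak_index + 1, profile[peak_index] / mean(profile)


# Invented series: two years with a sharp November peak, and a flat one
turkey_like = ([10] * 10 + [100, 10]) * 2
coffee_like = [50] * 24

print(peak_month_and_ratio(turkey_like))
print(peak_month_and_ratio(coffee_like))
```

A ratio close to 1 suggests stable demand, while a high ratio tells us that a drop right after the peak month is probably seasonal rather than a problem with the site.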

How to analyze traffic declines with Search Console

Daniel Waisberg himself devoted a specific episode of the Search Console Training series on YouTube to explaining the main reasons behind traffic declines from Google Search, specifically explaining how to analyze the patterns of traffic declines to the site by using the Search Console performance report and Google Trends to examine general trends in one’s industry or business niche.

According to Google’s Search Advocate, becoming familiar with the main reasons behind organic traffic declines can help us move from monitoring to an exploratory approach toward finding the most likely hypothesis about what is happening, the crucial first step in trying to turn things around.

The 4 common patterns of traffic drops

Waisberg echoes the graphics and information from his article, adding some useful pointers.

- Site-level technical problems/Manual actions

Site-level technical problems are errors that can prevent Google from crawling, indexing, or presenting pages to users. An example of a site-level technical problem is a site that is not working: in this case, we would see a significant drop in traffic from all sources, not just from Google Search.

Manual actions, on the other hand, affect a site that does not follow Google’s guidelines, affecting some pages or the entire site, which may then be less visible in Search results.

- Page-level technical problems / Algorithmic updates / Changes in user interest

Page-level technical problems, such as an unintentional noindex tag, are a different case. Since this problem depends on Google crawling the page, it generates a slower drop in traffic than site-level problems (in fact, the curve is less steep).

Declines due to algorithmic updates, the result of Google’s continuous effort to improve the way it evaluates content, have a similar shape. As we know, core updates can impact the performance of some pages in Google Search over time, and usually this does not take the form of a sharp drop.

Another factor that could lead to a slow decline in traffic is a disruption or change in search interest: sometimes, changes in user behavior cause a change in demand for certain queries, for example, due to the emergence of a new trend or a major change in the country you are observing. This means that site traffic may decrease simply because of external influences and factors.

- Seasonality

The third graph identifies the effects of seasonality, which can be caused by many reasons, such as weather, holidays or vacations: usually, these events are cyclical and occur at specific regular intervals, that is, on a weekly, monthly or quarterly basis.

- Glitch

The last example shows reporting problems or glitches: if we see a major change followed immediately by a return to normal, it could be the result of a simple technical problem, and Google usually adds an annotation to the graph to inform us of the situation.
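The shapes described above can be roughly told apart with a few heuristics on a series of daily clicks. This is a deliberately simple sketch: the function name, the 7-day window and the 30% threshold are our assumptions, and seasonality is left out because recognizing it requires a comparison with the previous year.

```python
from statistics import mean


def classify_drop(daily_clicks: list[float], window: int = 7, threshold: float = 0.3) -> str:
    """Label a daily click series as stable, dip and recovery, sudden or gradual.

    Assumptions: the first `window` days represent normal traffic, and a
    loss of more than `threshold` (30%) of that baseline counts as a drop.
    """
    if len(daily_clicks) < 2 * window:
        raise ValueError("need at least two windows of data")
    baseline = mean(daily_clicks[:window])
    recent = mean(daily_clicks[-window:])
    floor = baseline * (1 - threshold)

    if recent >= floor:
        # Traffic is back to normal: was there a temporary hole in between?
        return "dip and recovery" if min(daily_clicks) < floor else "stable"

    total_drop = baseline - recent
    steepest_day = max(a - b for a, b in zip(daily_clicks, daily_clicks[1:]))
    # If one day accounts for at least half of the loss, the curve is a cliff
    return "sudden drop" if steepest_day >= 0.5 * total_drop else "gradual decline"


print(classify_drop([100] * 10 + [20] * 10))
print(classify_drop(list(range(100, 20, -4))))
print(classify_drop([100] * 8 + [10, 10] + [100] * 8))
```

A "sudden drop" label points toward site-level technical problems or manual actions, a "gradual decline" toward page-level problems, updates or changing interest, and a "dip and recovery" toward a glitch, in line with the patterns listed above.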

Tips for analyzing the decline pattern

After discovering the decline (and after the first, inevitable moments of panic…), we need to try to diagnose its causes and, according to Waisberg, the best way to understand what has happened to our traffic is to examine the Search Console performance report, which summarizes a lot of useful information and lets us see (and analyze) the shape of the variation curve, which in itself gives us some clues.

Specifically, the Googler advises us to expand the date range to include the last 16 months (to view beyond 16 months of data, we need to periodically export and archive the information), so that we can analyze the decline in traffic in context and ensure that it is not related to a regular seasonal event, such as a holiday or trend. Then, for further confirmation, we should compare the fall period with a similar period, such as the same month of the previous year or the same day of the previous week, to simplify the search for what exactly has changed (and preferably trying to compare the same number of days and the same days of the week).

In addition, we need to navigate through all the tabs to find out where the change occurred and, in particular, to understand whether it affected specific queries, pages, countries, devices, or search appearances. Analyzing the different types of search separately will help us understand whether the drop is limited to web search or whether it also affects Google Images, Video, or the News tab.
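Choosing comparison periods with the same number of days and the same days of the week is easy to get wrong by hand, so here is a small sketch that derives them from the dates of the drop. The function name is ours, and shifting by whole weeks (52 weeks for the yearly comparison) is our assumption for keeping weekdays aligned.

```python
import math
from datetime import date, timedelta


def comparison_periods(start: date, end: date) -> dict[str, tuple[date, date]]:
    """Return weekday-aligned periods to compare with the drop period.

    Both periods have the same length as the original one and start on
    the same day of the week.
    """
    length_in_days = (end - start).days + 1
    # Shift back by whole weeks so that weekdays line up
    back = timedelta(weeks=math.ceil(length_in_days / 7))
    year = timedelta(weeks=52)
    return {
        "previous_period": (start - back, end - back),
        "same_period_last_year": (start - year, end - year),
    }


periods = comparison_periods(date(2024, 3, 4), date(2024, 3, 31))
for name, (first_day, last_day) in periods.items():
    print(name, first_day.isoformat(), last_day.isoformat())
```

When the drop period does not span whole weeks, the previous period produced this way leaves a short gap before the drop period, which is the price of keeping the weekdays aligned.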

Investigate general trends in the business sector

The emergence of major changes in the industry or country of business may push people to change the way they use Google, that is, to search using different queries or use their devices for different purposes. Also, if we sell a specific brand or product online, there may be a new competing product that cannibalizes search queries.

In short, understanding traffic declines also comes strongly from analyzing general trends for the Web, and Google Trends provides access to “a largely unfiltered sample of actual search queries made to Google,” with insights that are also useful for image search, news, shopping, and YouTube.

In addition, we can also segment the data by country and category so that the data is more relevant to our website’s audience.

From a practical point of view, Waisberg suggests checking the queries that are driving traffic to the website to see if they exhibit noticeable declines at different times of the year: for example, food-related queries are very seasonal, because (in the United States, but not only there) people search for “diets” in January, “turkey” in November, and “champagne” in December, and in general different industries have different levels of seasonality.

Why is my site losing traffic?

The direct question about why a site may be losing organic traffic was also the focus of an interesting YouTube hangout in which John Mueller answered a user’s question about a problem plaguing his site: “Since 2017 we are gradually losing rankings in general and also on relevant keywords,” he says, adding that he has checked the backlinks received, which in half of the cases come from a single subdomain, and the content (auto-generated through a tool, which has not been used for a few years).

The user’s question is therefore twofold: first, he asks Mueller’s advice on how to halt the gradual decline in traffic; second, he asks for a practical suggestion on whether to use the link disavow tool to remove all the subdomain backlinks, fearing that such an action could further harm ranking.

Identifying the causes of the decline

Mueller starts with the second part of the question, and answers sharply, “If you remove with disavow a significant part of your natural links there can definitely be negative effects on ranking,” once again confirming the weight of links for ranking on Google.

Coming then to the more practical part, and thus to the possible causes of the drop in traffic and positions, the Googler thinks that the proposed content can be good (“if it is extra informative documents regarding your site, products or services you offer”) and therefore there is no need to block or remove it from indexing. Therefore, at first glance, it can be said that “neither links nor content are directly the causes of a site’s decline.”

SEO traffic, the reasons why a site loses ranking

Mueller articulates his answer more extensively, saying that in such cases, “when you’re seeing a kind of gradual decline over a fairly long period of time,” there may be some kind of “natural variation in ranking” that can be driven by five possible reasons.

- Changes in the Web ecosystem.

- Algorithm changes.

- Changes in user searches.

- Changes in users’ expectations of content.

- Gradual changes that do not result from big, dramatic Web site problems.

Google’s ranking system is constantly moving, and there are always those who go up and those who go down (one site’s ranking improvement is another’s ranking loss, inevitably). That being said, we can delve into the causes mentioned in the hangout.

When a site loses traffic and positions

Ecosystem changes, as interpreted by Roger Montti on Search Engine Land, include situations such as increased competition or the “natural disappearance of backlinks” because linking sites have gone offline or removed their links; more generally, they cover all the factors external to the site that can cause a loss of ranking and traffic.

The reasoning about algorithm changes is clearer: we know how frequent broad core updates are becoming, and each one redefines how Google evaluates a Web page’s relevance to a search query and to people’s search intent.

It is precisely the users who are the “key players” in the other two reasons mentioned: we are repeating this almost obsessively, but understanding people’s intentions is the key to making quality content and having a high-performing site, and so it is important to follow query evolutions but also to know the types of content that Google is rewarding (because users like them).

How to revive a site after traffic loss

Mueller also offered some practical pointers for getting out of declining traffic situations: the first suggestion is to roll up our sleeves and look more carefully at the overall issues of the site, looking for areas where significant improvements can be made and trying to make content more relevant to the types of users that represent our target audience.

More generally, Google’s Senior Webmaster Trends Analyst tells us that it is often necessary to take a “second look” at the site and its context, not to stop at what might appear to be the first cause of the decline (in the specific case of the old hangout, backlinks) but to study in depth and evaluate all the possible factors influencing the decline in ranking and traffic.

Algorithms, penalties and quality for Google, how to understand SEO traffic declines

Far more comprehensive and in-depth is the advice that came from Pedro Dias, who worked in the Google Search Quality team between 2006 and 2011 and who in an article published in Search Engine Land walks us through applying the right approach to situations of SEO traffic drops. The starting point of his reasoning is that it is crucial to really understand whether traffic drops due to an update or whether other factors such as manual actions, penalties and so on intervene, because only by knowing their differences and the consequences they generate – and thus identifying the trigger of the problem – can we react with the right strategy.

Understanding how algorithms and manual actions work

The former Googler cautions that his perception may by now be outdated, as things at Google change at a very rapid pace; nonetheless, on the strength of his experience working at “the most searched input box on the entire Web,” Dias offers useful and interesting insights into “what makes Google work and how search works, with all its nuts and bolts.”

Specifically, he analyzes “this tiny input field and the power it wields over the Web and, ultimately, over the lives of those who run Web sites,” and clarifies some concepts, such as penalties, to teach us how to distinguish the various forms and causes by which Google can make a site “crash.”

Google’s algorithms are like recipes

“Unsurprisingly, anyone with a Web presence usually holds their breath whenever Google decides to make changes to its organic search results,” writes the former Googler, who then reminds us how, “being primarily a software engineering company, Google aims to solve all its problems on a large scale,” not least because it would be “virtually impossible to solve the problems Google faces solely with human intervention.”

For Dias, “algorithms are like recipes: a set of detailed instructions in a particular order that aim to complete a specific task or solve a problem.”

Better small algorithms to solve one problem at a time

The likelihood of an algorithm “producing the expected result is indirectly proportional to the complexity of the task it has to complete”: this means that “most of the time, it is better to have several (small) algorithms that solve a (large) complex problem by breaking it down into simple subtasks, rather than a giant single algorithm that tries to cover all possibilities.”

As long as there is an input, the expert continues, “an algorithm will run tirelessly, returning what it was programmed to do; the scale at which it operates depends only on the available resources, such as storage, processing power, memory and so on.”

These are “quality algorithms, which are often not part of the infrastructure,” but there are also “infrastructure algorithms that make decisions about how content is scanned and stored, for example.” Most search engines “apply quality algorithms only at the time of publication of search results: that is, the results are evaluated only qualitatively, at the time of service.”

Algorithmic updates for Google

At Google, quality algorithms “are seen as filters that target good content and look for quality signals throughout Google’s index,” which are often provided at the page level for all websites and can then be combined, producing “scores at the directory or hostname level, for example.”
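To make the idea of combining page-level signals more concrete, here is a purely illustrative sketch. Google does not publish how such scores are computed or combined, so the scores, the use of a simple mean, and the function name `aggregate_scores` are all assumptions made for the example:

```python
from collections import defaultdict
from statistics import mean
from typing import Dict
from urllib.parse import urlparse


def aggregate_scores(page_scores: Dict[str, float], level: str = "directory") -> Dict[str, float]:
    """Roll hypothetical page-level scores up to directory or hostname level."""
    groups = defaultdict(list)
    for url, score in page_scores.items():
        parts = urlparse(url)
        if level == "hostname":
            key = parts.netloc
        else:
            # Use the first path segment as the directory; pages sitting
            # directly under the root are grouped under "hostname/".
            segments = [s for s in parts.path.split("/") if s]
            key = parts.netloc + "/" + (segments[0] if len(segments) > 1 else "")
        groups[key].append(score)
    # Assumption: a plain average; any real system may weight pages differently.
    return {key: round(mean(scores), 2) for key, scores in groups.items()}


# Hypothetical page-level scores between 0 and 1
page_scores = {
    "https://example.com/blog/a": 0.9,
    "https://example.com/blog/b": 0.7,
    "https://example.com/shop/x": 0.4,
    "https://example.com/about": 0.6,
}

by_directory = aggregate_scores(page_scores)
by_hostname = aggregate_scores(page_scores, level="hostname")
print(by_directory)
print(by_hostname)
```

The point of the sketch is only that weak pages in one section can pull down the aggregate for that section, or for the whole host, even when other pages score well.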

For Web site owners, SEOs and digital marketers, in many cases, “the influence of algorithms can be perceived as a penalty, especially when a Web site does not fully meet all quality criteria and Google’s algorithms decide instead to reward other, higher quality Web sites.”

In most of these cases, what ordinary users see is “a drop in organic performance,” which does not necessarily result from the site being “pushed down,” but more likely “because it has stopped being rated incorrectly, which can be good or bad.”

What is quality

To understand how these quality algorithms work, we must first understand what quality is, Dias rightly explains: as we often say when talking about quality articles, this value is subjective, it lies “in the eye of the beholder.”

Quality “is a relative measure within the universe in which we live, depending on our knowledge, experience and environment,” and “what is quality for one person, probably may not be quality for another.” We cannot “tie quality to a simple context-free binary process: for example, if I am dying of thirst in the desert, do I care if a water bottle has sand at the bottom?” the author adds.

For websites, it is no different: quality is, fundamentally, providing “performance beyond expectations (Performance over Expectation) or, in marketing terms, Value Proposition.”

Google’s ratings of quality

If quality is relative, we must try to understand how Google determines what is responsive to its values and what is not.

Actually, says Dias, “Google does not dictate what is and what is not quality: all the algorithms and documentation Google uses for its Webmaster Guidelines (which have now merged into Google Search Essentials, ed.) are based on real user feedback and data.” It is also for this reason, we might add, that Google employs a team of more than 14 thousand Quality Raters, who are called upon to assess precisely the quality of the search results provided by the algorithms, using as a compass the special Google quality rater guidelines, which also change and are updated frequently (the last time in July 2022).

We also said this when talking about the search journey: when “users perform searches and interact with websites on the index, Google analyzes user behavior and often runs multiple recurring tests in order to make sure it is aligned with their intent and needs.” This process “ensures that the guidelines for websites issued by Google align with what search engine users want; they are not necessarily what Google unilaterally wants.”

Algorithm updates chase users

This is why Google often states that “algorithms are made to chase users,” recalls the article, which then similarly urges sites to “chase users instead of algorithms so that they are on par with the direction Google is moving in.”

In any case, “to understand and maximize a website’s potential for prominence, we should look at our websites from two different perspectives, Service and Product.”

The website as a service

When we look at a website from a service point of view, we should first analyze all the technical aspects involved, from code to infrastructure: for example, among many other things “how it is designed to work, how technically robust and consistent it is, how it handles the communication process with other servers and services, and then again the integrations and front-end rendering.”

This analysis alone is not enough because “all the technical frills do not create value where and if the value does not exist,” Pedro Dias points out, but at best “they add value and make any hidden value shine through.” For this reason, advises the former Googler, “you should work on the technical details, but also consider looking at your Web site from a product perspective.”

The site as a product

When we look at a Web site from a product perspective, “we should aim to understand the experience users have on it and, ultimately, what value we are providing to distinguish ourselves from the competition.”

To make these elements “less ethereal and more tangible,” Dias resorts to a question he asks his clients, “If your site disappeared from the Web today, what would your users miss that they would not find on any of your competitors’ Web sites?” It is from the answer that we can tell “whether you can aim to build a sustainable and lasting business strategy on the Web,” and to help us better understand these concepts he provides an image: Peter Morville’s User Experience Honeycomb, modified to include a reference to the specific concept of EEAT that is part of Google’s Quality Rater Guidelines.

Most SEO professionals look deeply into the technical aspects of UX such as accessibility, usability, and findability (which are, in effect, about SEO), but tend to leave out the qualitative (more strategic) aspects, such as Utility, Desirability, and Credibility.

At the center of this honeycomb is Value, which can only be “fully achieved when all other surrounding factors are satisfied”: therefore, “applying it to your web presence means that unless you look at the whole holistic experience, you will miss the main goal of your website, which is to create value for you and your users.”

Quality is not static, but evolving

Complicating matters further is the fact that “quality is a moving target,” it is not static, and therefore a site that wants “to be perceived as quality must provide value, solve a problem or need” consistently over time. Similarly, Google must also evolve to ensure that it is always providing the highest level of effectiveness in its responses, which is why it is “constantly running tests, pushing quality updates and algorithm improvements.”

As Dias says, “If you start your site and never improve it, over time your competitors will eventually catch up with you, either by improving their site’s technology or by working on the experience and value proposition.” Just as old technology becomes obsolete and deprecated, “over time, innovative experiences also tend to become ordinary and most likely fail to exceed expectations.”

To clarify the concept, the former Googler gives an immediate example: “In 2007, Apple conquered the smartphone market with a touch screen device, but nowadays, most people will not even consider a phone that does not have a touchscreen, because it has become a given and can no longer be used as a competitive advantage.”

And SEO also cannot be a one-off action: it is not that “after optimizing the site once, it remains optimized permanently,” but “any area that supports a company must improve and innovate over time in order to remain competitive.”

Otherwise, “when all of this is left to chance or we don’t give the attention needed to make sure all of these features are understood by users, that’s when sites start to run into organic performance problems.”

Manual actions can complement algorithmic updates

It would be naïve “to assume that algorithms are perfect and do everything they are supposed to do flawlessly,” admits Dias, according to whom the great advantage “of humans in the battle against machines is that we can deal with the unexpected.”

That is, we have “the ability to adapt and understand abnormal situations, and understand why something may be good even though it might seem bad, or vice versa.” In other words, “humans can infer context and intention, while machines are not so good.”

In the field of software engineering, “when an algorithm detects or misses something it shouldn’t have, it generates what is referred to as a false positive or false negative, respectively”; to apply the right corrections, one has to identify “the output of false positives or false negatives, a task that is often best done by humans,” and then usually “engineers set a confidence level (threshold) that the machine should consider before requesting human intervention.”
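The mechanism described here can be sketched in a few lines. This is a generic illustration of confidence thresholds and error counting, not a description of Google’s systems; the threshold values, labels, and function names are assumptions:

```python
from typing import List, Tuple


def route(score: float, low: float = 0.2, high: float = 0.8) -> str:
    """Route a classifier score in [0, 1]; uncertain cases go to a person."""
    if score >= high:
        return "flag"          # the machine is confident the item is a problem
    if score <= low:
        return "pass"          # the machine is confident the item is fine
    return "human_review"      # below the confidence level: ask a human


def count_errors(predicted: List[bool], actual: List[bool]) -> Tuple[int, int]:
    """Return (false positives, false negatives) for paired predictions."""
    false_positives = sum(1 for p, a in zip(predicted, actual) if p and not a)
    false_negatives = sum(1 for p, a in zip(predicted, actual) if a and not p)
    return false_positives, false_negatives


decisions = [route(score) for score in (0.95, 0.5, 0.05)]
errors = count_errors(
    predicted=[True, True, False, False],
    actual=[True, False, True, False],
)
print(decisions, errors)
```

Widening the gap between `low` and `high` sends more cases to human review and reduces automated mistakes, at the cost of more manual work.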

When and why manual action is triggered

Inside Search Quality there are “teams of people who evaluate results and look at websites to make sure the algorithms are working properly, but also to intervene when the machine is wrong or cannot make a decision,” reveals Pedro Dias, who then introduces the figure of the Search Quality Analyst.

The job of this Search Quality Analyst is “to understand what is in front of him, examining the data provided, and making judgments: these judgment calls may be simple, but they are often supervised, approved or rejected by other Analysts globally, in order to minimize human bias.”

This activity frequently results in static actions aimed at (but not limited to):

- Create a data set that can later be used to train algorithms;

- Address specific and impactful situations, where algorithms have failed;

- Report to website owners that specific behaviors fall outside the quality guidelines.

These static actions are often referred to as manual actions, and they can be triggered for a wide variety of reasons, although “the most common goal remains countering manipulative intent, which for some reason has successfully exploited a flaw in the quality algorithms.”

The disadvantage of manual actions “is that they are static and not dynamic like algorithms: that is, the latter run continuously and react to changes on Web sites, based only on repeated scanning or algorithm refinement.” In contrast, “with manual actions the effect will remain for as long as expected (days/months/years) or until a reconsideration request is received and successfully processed.”

The differences between the impact of algorithms and manual actions

Pedro Dias also provides a useful summary table comparing algorithms and manual actions:

| Algorithms | Manual actions |
| --- | --- |
| Aim to resurface value | Aim to penalize behavior |
| Work at large scale | Address specific scenarios |
| Dynamic | Static |
| Fully automated | Manual + semi-automatic |
| Indefinite duration | Defined duration (expiration date) |

Before applying any manual actions, a search quality analyst “must consider what he or she is addressing, assess the impact and the desired outcome,” answering questions such as:

- Does the behavior have manipulative intent?

- Is the behavior egregious enough?

- Will the manual action have an impact?

- What changes will the impact produce?

- What am I penalizing (widespread or single behavior)?

As the former Googler explains, these are issues that “must be properly weighed and considered before even considering any manual action.”

What to do in case of manual action

As Google moves “more and more toward algorithmic solutions, leveraging artificial intelligence and machine learning to both improve results and combat spam, manual actions will tend to fade and eventually disappear completely in the long run,” Dias argues.

In any case, if our website has been affected by manual actions, “the first thing you need to do is figure out what behavior triggered it,” referring to “Google’s technical and quality guidelines to evaluate your website against them.”

This is a job to be done calmly and thoroughly, because “this is not the time to let a sense of haste, stress and anxiety take over”; it is important to “gather all the information, clean up errors and problems on the site, fix everything and only then send a request for reconsideration.”

How to recover from manual actions

A widespread but mistaken belief is “that when a site is affected by a manual action and loses traffic and ranking, it will return to the same level once the manual actions are revoked.” That ranking, in fact, was (also) the result of the impermissible tactics that caused the penalty, so it makes no sense to expect that, after the cleanup and the revocation of the manual action, the site will return exactly to where it was before.

However, the expert is keen to point out, “any Web site can be restored from almost any scenario,” and cases where a property is considered “unrecoverable are extremely rare.” It is important, however, that recovery begin with “a full understanding of what you are dealing with,” with the understanding that “manual actions and algorithmic problems can coexist,” and that “sometimes, you won’t start to see anything before you prioritize and solve all the problems in the right order.”

In fact, “there is no easy way to explain what to look for and the symptoms of each and every algorithmic problem,” and so “the advice is to think about your value proposition, the problem you are solving or the need you are responding to, not forgetting to ask for feedback from your users, inviting them to express opinions about your business and the experience you provide on your site, or how they expect you to improve.”

Takeaways on algorithmic declines or resulting from manual actions

In conclusion, Pedro Dias leaves us with four takeaways that summarize his (extensive) contribution to the cause of sites penalized by algorithmic updates or manual actions.

- Rethink your value proposition and competitive advantage, because “having a Web site is not in itself a competitive advantage.”

- Treat your Web site like a product and constantly innovate: “if you don’t move forward, you will be outdone, whereas successful sites continually improve and evolve.”

- Research your users’ needs through User Experience: “the priority is your users, and then Google,” so it is helpful to “talk and interact with your users and ask their opinions to improve critical areas.”

- Technical SEO is important, but by itself it won’t solve anything: “If your product/content has no appeal or value, it doesn’t matter how technical and optimized it is, and so it’s crucial not to lose the value proposition.”

Site traffic: what to do when other sources drop

After carefully analyzing the possible causes of organic traffic loss, it is useful to also try to provide some insight into the declines in traffic affecting the other sources that contribute to a site’s success, such as paid, direct and referral traffic. Each can be affected by a number of variables, both internal and external, and constant monitoring allows us not only to identify any problems but also to take timely corrective action.

- Paid traffic: declines and remedies

The first sign of a decline in paid traffic is often related to a reduction in the effectiveness of advertising campaigns. The cause could be a misconfiguration, a failure to optimize keywords, or inadequate investment; pausing or ending a campaign without proper planning, or simply not adjusting it in real time based on the results collected, can quickly lead to a decrease in clicks and conversions.

Indeed, the balance between budget and performance is crucial: if campaign funds are insufficient or the cost per click (CPC) is too high compared to competitors, ads may quickly run out of exposure, causing a decline in planned visits.
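The arithmetic behind this balance is simple: the number of paid clicks a budget can buy is roughly the budget divided by the average cost per click. The sketch below uses made-up figures and a hypothetical helper name, and it ignores factors such as auction dynamics and ad scheduling:

```python
def expected_clicks(daily_budget: float, avg_cpc: float) -> int:
    """Approximate how many clicks a daily budget buys at a given average CPC."""
    if avg_cpc <= 0:
        raise ValueError("avg_cpc must be positive")
    return int(daily_budget // avg_cpc)


# Hypothetical campaign: the budget stays the same while the CPC rises
clicks_before = expected_clicks(daily_budget=50.0, avg_cpc=0.5)
clicks_after = expected_clicks(daily_budget=50.0, avg_cpc=1.25)

print(clicks_before, clicks_after)
```

With an unchanged budget, a higher average CPC directly reduces the number of visits the campaign can deliver before the ads stop showing for the day.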

In addition, other factors can reduce performance, such as campaign targeting that is too generic or landing pages that do not convert, which indirectly increases the bounce rate. If the landing page does not provide an adequate experience, even the highest number of clicks will not turn into concrete results. At the same time, constantly monitoring the ad quality score allows you to identify relevance issues between keywords, ad text, and landing page: a mismatch among them can lead to a decline in traffic and clicks.

A clear remedy is to update the optimization strategy. Refining the keywords on which the ad auction focuses, increasing or reallocating budget to better performing campaigns, and optimizing the landing page are crucial steps. One must regularly test different combinations of ads and creative copy to identify the best configurations with the help of periodic reports. This continuous optimization process will help bring the ratio of visits to conversions back into balance.

- Direct traffic: causes of decline and corrective action

In the case of a drop in direct traffic, we are often faced with a decrease in user interest or familiarity with the site. Slumps in this channel can be a symptom of a weakening of the brand, the exhaustion of any promotional campaigns aimed at building audience loyalty, or problems related to the user experience of the site.

It therefore becomes important again to analyze and improve the branding and the experience of repeat users. When users stop returning directly to our domain, it could mean that the loyalty activities are no longer sufficiently engaging or that the brand’s presence in their daily lives has faded. Technical problems on the site, difficult navigation or unsatisfactory loading speed could also discourage repeat visitors, leading them to look for alternatives with greater usability.

To reverse this trend, it is useful to strengthen actions that increase brand awareness and improve interaction with already loyal audiences. Effective strategies include incentivizing direct visits through email marketing campaigns, publishing regular, quality content, revitalizing engagement through other channels as well (e.g., social media or PR), and updating the site to ensure that usability is always optimal. If the site loses interest, it may also be worth exploring new engagement formulas to keep the audience’s attention alive.

- Referral traffic: identifying issues and correcting them

The causes of a decline in referral traffic can also be many, but a frequent one is the loss of relevance or functionality of backlinks. If the links that generate traffic to our site become less accessible (e.g., due to an SEO error in the source site or because that page has been taken down), the flow of users coming through those referrals decreases.

Another potential cause concerns the importance of partnerships and the level of link quality and authenticity: partnerships that were previously effective may no longer generate the same return if they are not kept robust or updated. Changes in link earning policy or algorithm updates (especially on outbound links) may negatively affect them.

Finding and fixing broken links and reviewing existing partnerships can help restore this flow. An audit with SEOZoom will help uncover the source of weak or underperforming links. From there, fixing the errors or entering into new partnerships not only restores traffic, but can boost it further.
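Independently of any specific tool, a basic check for broken referring pages can be sketched with Python’s standard library. The URLs below are placeholders and the helper names are hypothetical; the status lookup is passed in as a parameter so that the example can be run without network access:

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status of a HEAD request, or 0 if the URL is unreachable."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # e.g. 404 or 410 when the page was removed
    except OSError:
        return 0  # DNS failure, refused connection, timeout


def broken_links(
    urls: Iterable[str], fetch_status: Callable[[str], int] = http_status
) -> Dict[str, int]:
    """Map each unreachable or error-returning URL to its status code."""
    result = {}
    for url in urls:
        status = fetch_status(url)
        if status == 0 or status >= 400:
            result[url] = status
    return result


# Offline example: placeholder referring pages with made-up statuses
fake_statuses = {
    "https://partner.example/review": 200,
    "https://blog.example/old-post": 404,
    "https://gone.example/": 0,
}
report = broken_links(fake_statuses, fetch_status=fake_statuses.get)
print(report)
```

Calling `broken_links(list_of_urls)` without the second argument performs real HEAD requests; some servers reject HEAD, so a production check would likely need a GET fallback.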