Sitemap: what it is, what it is for, how to make it and manage it

We can think of it as a real map that helps search engines find their way around our website, easily and efficiently discovering (and analyzing) the URLs it lists. The sitemap is a crucial element in building a positive dialogue between our site and Googlebot, especially in surfacing the pages that matter to us. While less visible than other site components, its function is therefore vital. In this guide we look at what a sitemap is, how it is made, why it can be useful to communicate it to Google and other search engines, and how it can affect the long-term success of a website by supporting proper indexing of content.

What is a sitemap?

A sitemap is a file that lists the URLs of a site, organized according to a hierarchy set during creation. It can also contain precise details about the pages, such as the date of last update, the frequency of changes, and any alternate language versions.

The definition of sitemap is therefore easy to understand, because this document is literally a map of the site, useful for search engines and, in some cases, for users as well.

Initially, in fact, its purpose was to facilitate users’ navigation, like a real map, but its usefulness also extends to crawling and indexing: search engine crawlers can understand the structure of the site sooner and better thanks to the information they find about the relationships between pages, so they can more easily explore a site’s content and identify it for indexing.

More specifically, this file is a signal of which URLs on our site we want Google to scan and can provide information about newly created or modified URLs. This facilitates the inclusion of all pages in the Index and SERPs, especially in the case of complex or large sites.

The meaning of the sitemap

So if we ask ourselves “what is a sitemap”, the answer is that it is a document, usually in XML format, that tells crawlers which pages exist on the site, which need priority during crawling, and how often those pages are updated. Providing a sitemap to search engines helps ensure that all relevant content on our site is visited regularly, without some pages remaining hidden.

There are various types of sitemaps, but the best known and most widely used is undoubtedly the XML sitemap, intended exclusively for search engines. We can usually view it by visiting the site’s root address followed by “/sitemap.xml” or, when the site uses a sitemap index, a variant such as “/sitemap_index.xml”, as in https://www.seozoom.it/sitemap_index.xml.

In parallel, there are also HTML sitemaps, which are designed more for the end user. These display the site’s pages as a list, which is useful in the case of very large sites where navigation can be confusing. However, they are less used today, since the XML sitemap has become far more important for SEO.

Thus, beyond its technical function, the strategic function of the sitemap is to be a central tool for improving the digital visibility of our site, ensuring that all pages, or at least the most relevant ones, are “read” by search engines.

What is the sitemap used for and why is it important for SEO?

The sitemap represents a key point of contact between our website and the search engines. We should not think of it simplistically as a “list of pages,” but rather as an active tool for optimizing the flow of information between our site and search engines, useful in consolidating our online presence.

With access to this document, a search engine crawler such as Googlebot can crawl the site more efficiently because it has at its disposal an overview of the available content, with indications of the resources present and the path to reach them.

In principle, web crawlers can find most of the content if the pages of a site are linked properly. However, using a map is a sure way for search engines to understand the entire structure of a site more quickly and accurately.

The main reason lies in its ability to make it easier to index the pages of our site, making it much easier for Google and other search engines to scan every piece of content available. When we create a sitemap, we are providing an organized guide that allows crawlers to go through the site architecture, clearly perceiving which pages exist, which should be prioritized, and how often they are updated.

The most obvious benefit of an SEO sitemap is precisely to ensure that no page remains “invisible” to search engines. This is especially useful for large sites or those with a complex URL structure, where some pages may be difficult for Google bots to discover.

Another crucial aspect of the sitemap is that it allows us to signal to search engines the pages that we consider most meaningful to the user and the business. This is possible thanks to parameters such as priority and frequency of updates, which are communicated to the crawlers through the XML file. These aspects affect indexing: by regularly updating the sitemap, we can let Google know that new pages or changes on existing pages are available and should be crawled again.

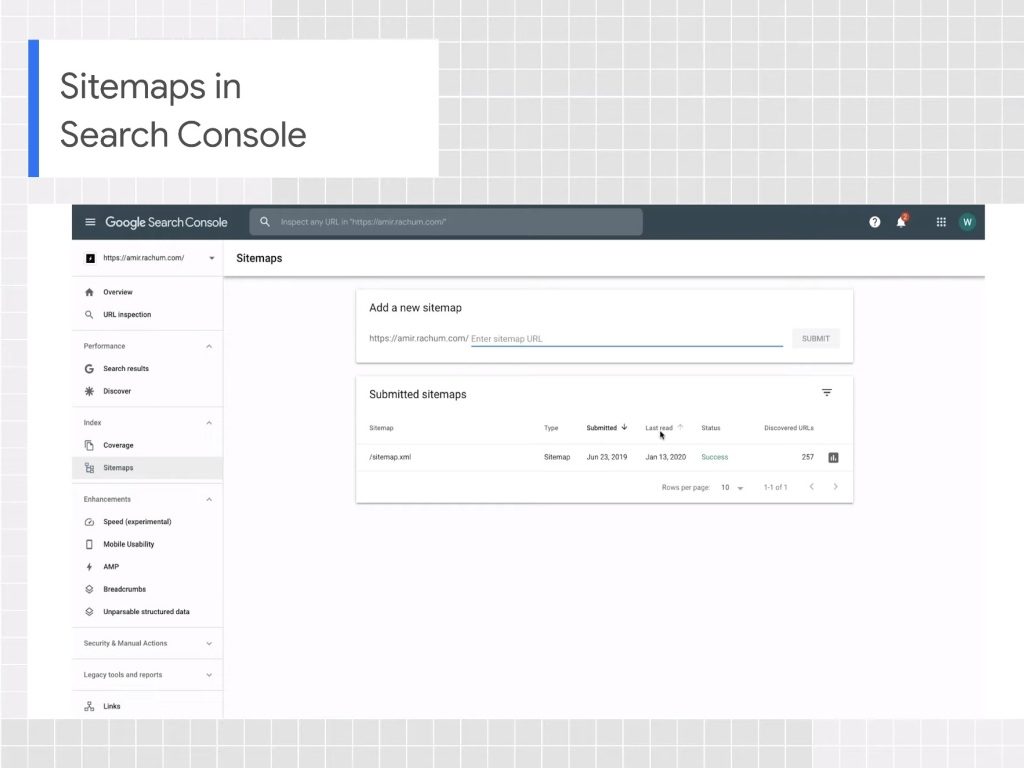

Integrating the sitemap with Google Search Console makes the whole process even more powerful. Once the sitemap is generated, we can submit it to Google through Search Console, providing the search engine with official access to all of our site’s resources. This step is not only a way to optimize indexing, but also allows us to monitor any errors or changes not picked up by the crawlers. If a page is not indexed correctly or has been rejected for some problem, Google Search Console reports it, allowing us to resolve the critical issues promptly.

The history of the sitemap: how it was born and how it has evolved

Sitemaps were born almost simultaneously with the development of the web. In the early years, when sites were still relatively simple and less populated with content, webmasters relied on HTML sitemaps as the only way to organize and facilitate navigation. These pages were essentially lists of links to all sections of the site, designed more for users than for search engines. HTML sitemaps often acted as a “table of contents,” allowing site visitors to quickly access relevant sections or more hidden pages. However, as content grew and became more complex, this type of approach began to demonstrate its limitations.

In fact, as the Web evolved, the need to improve communication with search engine crawlers became increasingly pressing. As the number of web pages increased, search engines such as Google were faced with the challenge of rapidly mapping and indexing a growing volume of sites, increasingly rich in content. It was in this context that XML sitemaps were introduced in the mid-2000s.

Unlike previous HTML maps, XML sitemaps were not intended for users, but were designed exclusively for search engines. These sitemaps are files structured according to a standard protocol (the sitemap protocol), which allows crawlers to understand not only the hierarchy and structure of the site, but also more detailed information about each page. Parameters such as the frequency of updates, the priority of a particular page over others within the site, and the date of last modification are clearly communicated through the XML file.

This transition from HTML sitemaps to XML sitemaps has led to a major step forward in the way search engines handle content indexing and crawling. Whereas in the past we relied primarily on internal links to allow crawlers to “discover” new pages, with the advent of XML sitemaps, site owners could directly send search engines specific information about which pages to crawl and how to prioritize them. In fact, modern sitemaps began to play a crucial role in ensuring complete and efficient indexing of all pages on a site, large and small.

Over the years, sitemaps have further evolved to support new types of content. In addition to traditional pages, it is now possible to create specific sitemaps for images, videos and news, opening up new possibilities for ensuring that each type of content is properly indexed. This is critical not only from a technical standpoint, but also to provide search engine visitors with content that is always up-to-date and relevant, thus improving the overall search experience.

The different types of sitemaps: HTML, XML and more

As mentioned, the world of sitemaps is not limited to a one-size-fits-all solution. In fact, over time, different types of sitemaps have been developed, each with a specific purpose related to specific indexing needs.

The most popular and widely used format for interfacing with search engines is the XML sitemap, but it is not the only option available. There are also HTML sitemaps, designed more to help users navigate intuitively, and newer, more specialized versions such as sitemaps for images, videos or even news.

XML sitemaps are the gold standard when it comes to search engine interaction. Invented by Google in 2005 and initially called Google Sitemaps, this format has since been adopted by other search engines. It is based on a specific protocol, the Sitemap protocol, whose terms are offered under the Attribution-ShareAlike Creative Commons License.

These maps are not viewed by users, but work behind the scenes and were designed precisely with the goal of providing a clear outline of site structure to Google and other search engines. With the XML file, several key pieces of information can be communicated, including the frequency of updates (for example, whether a page is routinely changed), the relative importance of pages, and the exact structure of content. This is crucial to facilitate indexing of pages in search engines and to ensure that the latest information is quickly captured and updated. For many websites, having a properly configured XML sitemap is one of the key steps to improving their SEO.
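To make the structure concrete, here is a minimal sketch in Python that builds an XML sitemap with the standard library. The function name `build_sitemap` and the shape of the `pages` records are assumptions chosen for illustration; the element names (`urlset`, `url`, `loc`, `lastmod`, `changefreq`, `priority`) and the namespace come from the Sitemap protocol. The `xml_declaration` argument requires Python 3.8 or later.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemap protocol (sitemaps.org).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
ET.register_namespace("", SITEMAP_NS)  # serialize it as the default namespace


def build_sitemap(pages):
    """Build a sitemap from dicts with a 'loc' key and optional
    'lastmod', 'changefreq' and 'priority' keys (hypothetical record shape)."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page in pages:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page["loc"]
        # Optional tags are written only when the record provides them.
        for tag in ("lastmod", "changefreq", "priority"):
            if tag in page:
                ET.SubElement(url, f"{{{SITEMAP_NS}}}{tag}").text = str(page[tag])
    # Returns UTF-8 encoded bytes, including the XML declaration.
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    example = [
        {"loc": "https://www.example.com/", "lastmod": "2024-01-15"},
        {"loc": "https://www.example.com/blog/", "changefreq": "weekly"},
    ]
    print(build_sitemap(example).decode("utf-8"))
```

The bytes returned can be written as-is to a file such as sitemap.xml; only `loc` is required for each entry, and the other tags are optional hints.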

In contrast, the HTML sitemap is designed for the end user, and more precisely to improve the user experience. Although it is less common today due to the proliferation of increasingly advanced navigation tools, it has played (and in some cases still plays) an important role in large websites. It takes the form of a static web page that contains a clickable list of all sections of the site. While for Google an XML sitemap is meant to facilitate content crawling, the HTML sitemap helps users discover content hidden in deeper sections of the site. However, with the increasing complexity of digital projects and the growing importance of search engines as intermediaries between user and site, the role of these maps has become increasingly marginal. In fact, many HTML sitemaps have been completely replaced by well-structured navigation menus or powerful internal search engines.

However, we cannot overlook the importance of content-specific sitemaps. For example, the sitemap for images helps Google to scan and index all the images on the site, which is critical for those aiming to get meaningful traffic from Google Images as well, especially in visually oriented fields such as design, fashion, or photography. An image entry in a Sitemap can include the location of images on a page.

Another example of a specialized XML file is the sitemap for video, which indicates to search engines the presence of multimedia content within the site. A video entry in a sitemap could indicate the video’s duration, rating, and age-appropriateness rating. This is crucial for improving ranking in Google Video results, especially for platforms or blogs that rely on audiovisual content.

Finally, there are news sitemaps, which are useful for those who manage sites that frequently publish news articles. This sitemap variant tells bots which content needs to be indexed more quickly, for example, in order to appear within the very high visibility provided by Google’s news sections. A news item in a sitemap can include the title of the article and the date of publication. It is an indispensable tool for newspapers, news blogs and particularly dynamic digital media, where fresh content is a key competitive factor.
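As an illustration of a content-specific sitemap, the following sketch adds image entries to a regular sitemap using Google’s image extension namespace. The function name `build_image_sitemap` and the input mapping are assumptions made for this example; video and news sitemaps follow the same pattern with their own namespaces and tags, which are not shown here.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
# Namespace of Google's image sitemap extension.
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("image", IMAGE_NS)


def build_image_sitemap(pages):
    """pages maps each page URL to the list of image URLs shown on it
    (hypothetical input shape chosen for this sketch)."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url, image_urls in pages.items():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
        for image_url in image_urls:
            # Each image gets its own <image:image><image:loc> pair.
            image = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
            ET.SubElement(image, f"{{{IMAGE_NS}}}loc").text = image_url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    print(build_image_sitemap({
        "https://www.example.com/gallery": [
            "https://www.example.com/img/a.jpg",
            "https://www.example.com/img/b.jpg",
        ],
    }).decode("utf-8"))
```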

What are the sitemap formats for Google

Sitemaps can take different formats depending on the specific needs of the site and the web configuration being used: each format has advantages and limitations, so it is essential to choose the one that best suits your needs.

Google supports sitemap formats defined by the Sitemap protocol, with varying degrees of extensibility and additional functionality.

The most versatile and popular format is the XML sitemap. This type of sitemap is extremely flexible and lends itself well to most sites because it not only lists the pages of the site, but can also include additional information. For example, XML sitemaps can be used to provide details about images, videos, or news content. This format is particularly useful for multilingual sites because it is capable of reporting localized versions of pages. Most CMSs, such as WordPress or Joomla, can generate an XML sitemap automatically, thanks to dedicated plugins, which makes the process within the reach of many users. However, managing XML sitemaps on large sites or sites with URLs subject to frequent changes can become complicated. It requires more attention, especially when it comes to frequent updates or continuous structural changes.

An easier option to use, but limited in terms of extensibility, is the RSS sitemap, mRSS or Atom 1.0. These formats are quite similar to XML sitemaps, but are often easier to generate automatically, since most CMSs create RSS and Atom feeds without the need for additional user intervention. RSS or Atom sitemaps are particularly useful for reporting information to Google about videos posted on the site, thus supporting the need for video sitemaps. The main disadvantage of this format lies in its limited ability to provide additional information: while XML sitemaps can include image and news data, these more simplified formats do not allow it. Thus, if the site has diverse multimedia content with images and news, it may not be the best choice.

Finally, for those looking for a simple and straightforward solution, there is the text sitemap. This is the most basic format, which simply lists the URLs of HTML or other pages that can be indexed. This solution is ideal for large sites, as it is very easy to implement and does not require complex structures. However, the text format has significant limitations: it can only handle text or HTML content, with no extensibility for images, videos or news. As a result, while it is easy to maintain, it is not very versatile compared to the other options.
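A text sitemap is simple enough to produce with a few lines of code. The sketch below assumes we already have a list of absolute URLs; the helper name `build_text_sitemap` is hypothetical. It writes one URL per line, drops blanks and duplicates, and encodes the result in UTF-8.

```python
def build_text_sitemap(urls):
    """Return UTF-8 bytes with one URL per line, skipping blanks and duplicates."""
    seen = set()
    lines = []
    for url in urls:
        url = url.strip()
        if url and url not in seen:
            seen.add(url)
            lines.append(url)
    return ("\n".join(lines) + "\n").encode("utf-8")


if __name__ == "__main__":
    data = build_text_sitemap([
        "https://www.example.com/",
        "https://www.example.com/about.html",
        "https://www.example.com/",  # duplicate, dropped
    ])
    print(data.decode("utf-8"), end="")
```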

The limitations of sitemaps and other useful information

Best practices related to sitemaps, established by the Sitemap protocol, are often ignored or loosely applied, which can compromise the effectiveness of indexing by search engines. Several of these caveats relate to topics such as size limits, proper encoding and location of sitemaps, and the format of URLs contained within these files.

One of the most critical aspects is related to the size limits of sitemaps. Each sitemap should not exceed 50 MB (uncompressed) or include more than 50,000 URLs. Exceeding these limits means that we have to split the file into several sitemaps, each dedicated to a specific section or type of content. In this case, which is particularly common with large and complex sites, it can be useful to create a sitemap index file: this is a document that allows multiple sitemaps to be grouped under a single structure, thus facilitating submission to Google via a single file. Google allows you to submit multiple sitemaps or multiple sitemap index files, which is strategic when you want to separately monitor the performance of specific content within Search Console.
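The splitting logic can be sketched as follows, assuming we hold the full URL list in memory. The helper names `chunk_urls` and `build_sitemap_index` and the file naming in the usage example are assumptions; the `sitemapindex`, `sitemap` and `loc` elements are those defined by the Sitemap protocol. The sketch only enforces the 50,000-URL limit, so the 50 MB size limit would still need a separate check.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file URL limit set by the Sitemap protocol
ET.register_namespace("", SITEMAP_NS)


def chunk_urls(urls, size=MAX_URLS):
    """Split a list of URLs into consecutive chunks of at most `size` items."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_urls):
    """Build a sitemap index that points to each child sitemap file."""
    index = ET.Element(f"{{{SITEMAP_NS}}}sitemapindex")
    for sitemap_url in sitemap_urls:
        sitemap = ET.SubElement(index, f"{{{SITEMAP_NS}}}sitemap")
        ET.SubElement(sitemap, f"{{{SITEMAP_NS}}}loc").text = sitemap_url
    return ET.tostring(index, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    all_urls = [f"https://www.example.com/page-{i}" for i in range(120_001)]
    chunks = chunk_urls(all_urls)
    # Hypothetical naming scheme for the child sitemap files.
    children = [f"https://www.example.com/sitemap-{n}.xml" for n in range(1, len(chunks) + 1)]
    print(len(chunks), "sitemaps needed")
    print(build_sitemap_index(children).decode("utf-8"))
```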

The coding and location of the sitemap file are also key aspects. Sitemaps must be encoded in UTF-8, a commonly accepted technology standard, to ensure that all special characters and accents in URLs are correctly interpreted by search engine crawlers.

Regarding location, we can technically host the sitemap file in any directory on the site, but it is preferable to publish it at the root of the domain (i.e., in the root folder of the site). This allows the sitemap to affect all files on the site. If the sitemap is instead published in a subdirectory, it will only affect the files in that specific section. If we submit the file via Google Search Console, we can still host the sitemap wherever we prefer, but publishing it at the root level remains the recommended choice for complete coverage, because it lets the sitemap cover all content on the site without directory limits.

Another factor of great importance concerns the management of URLs within the sitemap. It is always necessary to use absolute URLs, thus complete and well-structured, not relative versions. If, for example, our site is located at “https://www.example.com/”, the URL included in the sitemap should not be listed as “/mypage.html” but as “https://www.example.com/mypage.html”. This way, we ensure that Google scans and indexes each page correctly, without ambiguity about how to access it. In addition, it is critical that the sitemap contain only the URLs of the pages we intend to display in search results, i.e., those that we want to be indexed correctly and displayed as canonical.

If our site has separate desktop and mobile versions, it is generally recommended to report only the canonical version in the sitemap. However, if the needs of the site require us to index both versions (desktop and mobile), we can do so, but remember to clearly note within the sitemap which URL corresponds to each version so that Google can correctly understand the relationship between the two.
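The conversion from relative to absolute URLs can be sketched with Python’s standard `urllib.parse` module. The helper name `to_absolute` is an assumption for this example; it resolves a path against the site’s base address and rejects anything that does not end up as a full http or https URL.

```python
from urllib.parse import urljoin, urlsplit


def to_absolute(base_url, path_or_url):
    """Resolve a relative path against the site's base URL and make sure
    the result is a complete http(s) URL suitable for a sitemap."""
    absolute = urljoin(base_url, path_or_url)
    parts = urlsplit(absolute)
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"not an absolute http(s) URL: {absolute!r}")
    return absolute


if __name__ == "__main__":
    print(to_absolute("https://www.example.com/", "/mypage.html"))
```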

Speaking of information, Google’s guide also clarifies another aspect: “using the Sitemap Protocol does not guarantee the inclusion of web pages in search engines.” That is, there is no certainty that the pages in the sitemap file are all then actually indexed, because Googlebot acts according to its own criteria and respecting its own complex algorithms, which are not affected by the Sitemap.

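Furthermore, the sitemap does not affect the ranking of a site’s pages in search results. The priority value only expresses a page’s importance relative to other pages of the same site; it is not a ranking factor, and Google’s documentation states that it ignores the priority and changefreq values.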

When and why to use sitemaps

Given these premises, what is the practical benefit of using a sitemap?

Generally speaking, Google reassures us: if the pages of the site are linked properly (that is, if the pages we think are important are actually reachable through some form of navigation, be it the site’s menu or links embedded in the pages), it can usually find most of the content.

In any case, all sites can benefit from better crawling, but there are special cases where even Google strongly recommends using these sitemaps, such as larger or more complex sites or more specialized files.

What sites need sitemaps for

In particular, very large sites should use the sitemap to communicate new or recently updated pages to crawlers, who might otherwise neglect crawling these resources; even more useful is the map if the site has many orphan or poorly linked pages, which are therefore at risk of not being considered by Google.

The sitemap is then recommended for new sites with few incoming backlinks: in this case, Google and its crawler may have difficulty finding the site because no paths between Web links are highlighted.

Finally, Google encourages using the map if the site “uses multimedia content, is displayed in Google News or uses other sitemap-compatible annotations,” adding that if “appropriate, Google may consider other information contained in the sitemaps to use for search purposes.”

So, to recap, the sitemap is useful if:

- The site is large, because generally on large sites it is harder to make sure that every page is linked from at least one other page on the site, and thus Googlebot is more likely to miss some of the new pages.

- The site is new and receives few backlinks. Googlebot and other web crawlers crawl the Web by following links from one page to another, so in the absence of such links Googlebot may not detect the pages.

- The site has a lot of multimedia content (video, images) or is displayed in Google News, because we can provide additional information to the crawlers through specific sitemaps.

Which sites may not need a sitemap

While these are the cases where Google strongly recommends submitting a sitemap, there are situations where it is not so necessary to provide the file. In particular, we may not need a sitemap if:

- The site is “small” and hosts about 500 pages or fewer (counting only the pages we think should be included in search results).

- The site is fully internally linked and Google can therefore find all the important pages by following the links starting from the home page.

- We do not publish many media files (videos, images) or news pages that we wish to display in search results. Sitemaps can help Google find and understand video and image files or news articles on the site, but if we do not need these results to be displayed in Search, we may not need to submit the file.

How to create a sitemap

Creating a sitemap is a critical step that makes it easier for search engines to index the site and can thus potentially improve SEO. As mentioned, it is used to communicate all the relevant pages of the site to the crawlers in an organized and complete manner and, most importantly, to make sure that they are analyzed effectively.

There are several methods for creating a sitemap, depending on the structure of the site and the tools we use, so let’s look at the most popular options together. The first step is to decide which pages of the site we want to point to and have crawled by Google, establishing the canonical version of each page. In this way, we clearly indicate to the search engines which URLs we prefer to show in the search results (the canonical URLs), especially when the same content is accessible from different URLs: rather than listing every URL that leads to the same content, we insert only the canonical URL in the sitemap.

The second step is to choose the sitemap format to use, and then get to work with text editors or special software, or rely on an easier and faster alternative that is affordable for everyone.

Creating a Sitemap with Sitemap Generator

While the more experienced can create the file manually, there are many resources on the Web that generate sitemaps automatically: there is even an (old) page on Google that lists web sitemap generators, broken down by “server-side programs,” “CMS and other plugins,” “Downloadable Tools,” “Online Generators/Services,” and “CMS with integrated Sitemap generators.” In addition, tools to generate sitemaps for Google News and “Code Snippets/Libraries” are also reported. However, as the official guide specifies, this is an outdated and unmaintained collection.

Whatever the choice, it is essential to make the created sitemap available to Google right away, either by adding it to the robots.txt file or by submitting it directly to Search Console.

Online sitemap generators are useful for those who manage small or medium-sized sites and want to generate a sitemap quickly without having to install software or add-ons. Among the best-known tools is XML-sitemaps.com, which is very popular because of its simple and intuitive interface. Simply enter the URL of your site and the tool will proceed to scan all pages, generating an XML sitemap file that can then be downloaded and uploaded to your site’s server. This method is particularly good for those who do not use CMSs or prefer not to alter the structure of their site with third-party plugins or tools.

If our site is based on a CMS such as WordPress, creating a sitemap is further simplified by using specific plugins that handle the entire process for you. One of the most popular and widely used plugins is Yoast SEO, which generates and updates the file automatically every time we add or edit content on the site.

On the other hand, for those who want more control or are in special situations (such as managing a static site or a custom built site), you can proceed to manually create a sitemap. This process requires writing an XML file that lists the various URLs of the site, along with optional information such as update frequency and crawl priority. Technically, it is only necessary to use a text editor such as Windows Notepad or Nano (Linux, macOS) and follow the basic syntax; we can assign any name to the file as long as the characters are allowed in a URL.

Creating a sitemap manually can be useful for those who want to include only certain pages or for those who have specific customization needs. However, it is important to respect the guidelines of the sitemap protocol, the standard originally introduced by Google that governs how information should be organized and transmitted to search engines. A poorly structured XML file could cause indexing problems, so it is advisable to check that the file is correct before submitting it.

Google’s summary applies in this regard: depending on the architecture and size of the site, we can

- Allow the CMS system to generate a sitemap.

- Manually create the sitemap, if we enter fewer than a few dozen URLs.

- Automatically generate the sitemap, if we enter more than a few dozen URLs. For example, we can extract the website URLs from the respective database and export them to the actual screen or file on the web server.

In any case, regardless of the method of creation chosen, it is essential to submit the sitemap to Google, a step that can be performed through the Google Search Console, under the special section dedicated to the management of sitemaps. Sending the sitemap on a regular basis allows you to monitor any indexing issues and keeps Google updated on any changes or new content additions.

How to submit the sitemap to Google

Remembering that submitting a sitemap is only a suggestion and does not guarantee that Google will download or use it to crawl URLs on the site, we refer to the steps outlined in the official guide to discover the different ways to make the sitemap available to Google. That is, we can:

- Submit a sitemap in Search Console using the Sitemap report, which will allow us to see when Googlebot accessed the sitemap and also potential processing errors.

- Use the Search Console API to programmatically submit a sitemap.

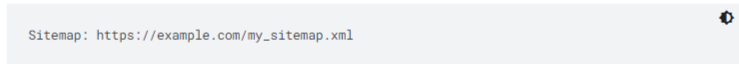

- Add a line such as “Sitemap: https://www.example.com/sitemap.xml” anywhere in the robots.txt file, specifying the location of the sitemap; Google will find it the next time it crawls the robots.txt file.

- Use WebSub to transmit changes to search engines, including Google (but only if we use Atom or RSS).
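For the robots.txt option, the following sketch appends a Sitemap line to an existing robots.txt and then checks that a parser can discover it. The helper names `add_sitemap_line` and `declared_sitemaps` and the example URLs are assumptions; `RobotFileParser.site_maps()` is part of Python’s standard library from version 3.8.

```python
from urllib.robotparser import RobotFileParser


def add_sitemap_line(robots_txt, sitemap_url):
    """Append a 'Sitemap:' line to robots.txt content unless it is already there."""
    line = f"Sitemap: {sitemap_url}"
    if line in (existing.strip() for existing in robots_txt.splitlines()):
        return robots_txt
    separator = "" if not robots_txt or robots_txt.endswith("\n") else "\n"
    return f"{robots_txt}{separator}{line}\n"


def declared_sitemaps(robots_txt):
    """Return the sitemap URLs a standard robots.txt parser finds in the content."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when none are declared


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /cart/\n"
    updated = add_sitemap_line(robots, "https://www.example.com/sitemap.xml")
    print(updated, end="")
    print(declared_sitemaps(updated))
```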

Methods for cross-submitting sitemaps for multiple sites

If we manage multiple websites, we can simplify the submission process by creating one or more sitemaps that include the URLs of all verified sites and saving the sitemaps in a single location. Methods for this multiple, cross-site submission are:

- A single sitemap that includes URLs for multiple websites, including sites from different domains. For example, the sitemap located at https://host1.example.com/sitemap.xml may include the following URLs:

  - https://host1.example.com
  - https://host2.example.com
  - https://host3.example.com
  - https://host1.example1.com
  - https://host1.example.ch

- Individual sitemaps (one for each site) that all reside in one location:

  - https://host1.example.com/host1-example-sitemap.xml
  - https://host1.example.com/host2-example-sitemap.xml
  - https://host1.example.com/host3-example-sitemap.xml
  - https://host1.example.com/host1-example1-sitemap.xml
  - https://host1.example.com/host1-example-ch-sitemap.xml

To send sitemaps between sites hosted in a single location, we can use Search Console or robots.txt.

Specifically, with Search Console we must first have verified the ownership of all the sites we will add in the sitemap, and then create one or more sitemaps by including the URLs of all the sites we wish to cover. If we prefer, the guide says, we can include the sitemaps in a sitemap index file and work with that index. Using Google Search Console, we will submit either the sitemaps or the index file.

If we prefer to cross-submit sitemaps with robots.txt, we need to create one or more sitemaps for each individual site and, for each individual file, include only the URLs for that particular site. Next, we will upload all sitemaps to a single site over which we have control, e.g., https://sitemaps.example.com.

For each individual site, we need to check that the robots.txt file references the sitemap for that individual site. For example, if we have created a sitemap for https://example.com/ and are hosting the sitemap in https://sitemaps.example.com/sitemap-example-com.xml, we will reference the sitemap in the robots.txt file in https://example.com/robots.txt.

Best practices with sitemaps

Google’s documentation also gives some practical advice on the topic, echoing what is indicated by the sitemaps protocol mentioned above, and in particular focuses on size limits, file location, and URLs included in sitemaps.

- Regarding limits, what was written earlier applies: all formats limit a single sitemap to 50 MB (uncompressed) or 50,000 URLs. If we have a larger file or more URLs, we need to split the sitemap into several smaller sitemaps and optionally create a sitemap index file and send only this index to Google. Alternatively, we can send multiple sitemaps as well as the sitemap index file, especially if we want to monitor the search performance of each individual sitemap in Search Console.

- Google does not care about the order of URLs in the sitemap and the only limitation is size.

- Encoding and location of the sitemap file: the file must be encoded in UTF-8. We can host sitemaps anywhere on our site, but be aware that a sitemap only affects the descendants of its parent directory: therefore, a sitemap published in the root of the site can affect all the files on the site, which is why this is the recommended location.

- Properties of referring URLs: we must use complete and absolute URLs in sitemaps. Google will crawl the URLs exactly as listed. For example, if the site is located at https://www.example.com/, we should not specify a URL such as /mypage.html (a relative URL), but the full and absolute URL: https://www.example.com/mypage.html.

- In the sitemap we will include the URLs we wish to display in Google search results. Usually, the search engine shows canonical URLs in its search results, and we can influence this decision with sitemaps. If we have different URLs for the mobile and desktop versions of a page, the guide recommends pointing to only one version in a sitemap or, alternatively, pointing to both URLs but noting the URLs to indicate the desktop and mobile versions.

- When we create a sitemap of the site, we tell the search engines which URLs we prefer to show in the search results and then the canonical URLs: therefore, if we have the same content accessible with different URLs, we must choose the preferred URL by including it in the sitemap and excluding all URLs that lead to the same content.

Which pages to exclude from sitemaps

Still on the subject of recommended practices, we should not think that the file serves to contain all the URLs of the site, and indeed there are some types of pages that should be excluded by default, because they are not very useful.

As a general rule, only relevant URLs, those that offer added value to users and that we want to be visible in the Search should be included in the sitemap; all others should be excluded from the file, although in any case this does not ensure that they are “invisible,” unless a noindex tag is added. This is particularly true for:

- Non-canonical pages

- Duplicate pages

- Pagination pages

- URLs with parameters

- Pages of internal site search results

- URLs created by filter options

- Archive pages

- Any redirects (3xx), missing pages (4xx) or server error pages (5xx)

- Pages blocked by robots.txt

- Pages in noindex

- Pages accessible from a lead generation form (PDF, etc.)

- Utility pages (login page, wishlist/cart pages, etc.).

How to generate an XML sitemap: step by step

Generating an XML sitemap may seem like a complex task, but it is actually within everyone’s reach thanks to the many existing tools. So let’s proceed with a practical, step-by-step guide on how to create and manage an XML sitemap.

- Choosing a sitemap generator

The first step is to identify and choose an XML sitemap generator. Today there are numerous tools, many of them free, designed to make the task quick and painless, allowing you to generate a sitemap simply from the site URL.

- Enter the site URL

Once the generator has been selected, the next step is to enter the URL of the website we wish to index. In most cases, it is enough to enter the main address of the site. The generator will start a scan of the pages and internal links, automatically identifying the content to be included in the XML sitemap.

- Configure scan options

Many sitemap generators allow you to configure certain scan options. For example, you can specify:

- Which part of the site should be included or excluded (using filters on URLs);

- The priority of pages (i.e., which pages we consider most important relative to the others on the site);

- The page refresh rate, which tells search engines how often a particular page is changed.

These options describe to crawlers how the site should be prioritized and help ensure that the most important pages on the site are valued over time.

- Generate the sitemap and download it

Once the options have been configured, you can proceed by generating the XML sitemap. The generator will create an XML file containing all (or selected) pages of the site, ready to be submitted to search engines.

Remember to pay attention to some key features to be respected in the syntax: in particular, Google emphasizes that all tag values must be entity-escaped, as is the norm in XML files.

Once the generation is finished, we download the sitemap.xml file and save it to the device.
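To make the generator's output less abstract, here is a minimal sketch in Python of what such a tool produces. It is an illustration under stated assumptions, not how any particular generator works: the `build_sitemap` function, the page list and the dates are hypothetical, while the tag names and the namespace come from the Sitemap protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemap protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Return sitemap XML (bytes) for a list of page dicts.

    Each dict needs "loc"; "lastmod", "changefreq" and "priority" are optional.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        for tag in ("loc", "lastmod", "changefreq", "priority"):
            if tag in page:
                ET.SubElement(url, tag).text = str(page[tag])
    # ElementTree escapes special characters and encodes the output as UTF-8.
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)

# Hypothetical pages, used only for illustration.
pages = [
    {"loc": "https://www.example.com/", "lastmod": "2024-01-15",
     "changefreq": "weekly", "priority": 1.0},
    {"loc": "https://www.example.com/mypage.html?a=1&b=2"},
]
sitemap_xml = build_sitemap(pages)
```

Writing `sitemap_xml` to a file named sitemap.xml gives the document that is then uploaded to the server.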

- Upload the file to the server

After creating and downloading the sitemap, we will need to upload it to the site’s server. Usually, the root folder of the site is the most common and recommended place to put the file (e.g.: www.yoursite.com/sitemap.xml). This allows any crawlers to easily access the file in automatic mode as well.

- Notify Google through Search Console.

Once the XML sitemap has been uploaded to the server, the final step is to notify Google to start the crawling process. To do this, we access Google Search Console, select the site we are managing and go to the “Sitemap” section. Here we can enter the URL of the sitemap.xml file and submit it. Google will scan the site based on the information provided in the file and log any access problems or indexing errors.

Regularly checking the sitemap report in Google Search Console is an essential practice for monitoring the indexing of the site and correcting any crawling problems. The report, in fact, also lets us know when Googlebot has accessed the uploaded sitemap and helps us uncover potential processing errors.

In fact, we also have other ways to make the Sitemap available to Google: for example, we can use the Search Console API to submit a Sitemap programmatically, or insert the text line “Sitemap: https://example.com/my_sitemap.xml” anywhere in the robots.txt file, specifying the Sitemap path – Google will detect it the next time the robots.txt file is crawled. Also, if we use the Atom or RSS format, we can use WebSub to transmit the changes to search engines, including Google.
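As a quick check of the robots.txt method, the following sketch uses Python's standard `urllib.robotparser` module to read the sitemap declarations out of a robots.txt file. The file content is a hypothetical example; `site_maps()` is available from Python 3.8.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
robots_txt = """\
User-agent: *
Disallow: /cart/
Sitemap: https://example.com/my_sitemap.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# site_maps() returns the declared sitemap URLs, or None if there are none.
declared = parser.site_maps()
print(declared)
```

If the list is empty or the URL is wrong, crawlers that rely on robots.txt for discovery will not find the sitemap this way.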

Creating an error-free sitemap: what to pay attention to

Creating an effective sitemap is not only about listing the pages of the site, but also requires careful management that follows best practices to avoid errors that can negatively affect SEO.

Search Console’s guide comes to our aid by listing possible problems we may run into while configuring the file and errors that, if not identified and resolved in a timely manner, risk compromising site indexing due to improper communication with search engines.

Google offers a number of tools and reports to facilitate the identification and resolution of errors, but it is critical to know how to interpret them correctly. Let’s look at the most common errors that can be encountered and how we can handle them effectively.

- Sitemap retrieval errors

One of the most common errors is related to Google’s inability to retrieve the sitemap. This can be due to various reasons. In some cases, the sitemap is blocked by rules in the robots.txt file: if the sitemap is blocked by robots.txt, Google obviously does not have access to crawl the URLs listed within, and we will need to change that configuration to allow crawlers to access the content.
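A rough way to test for this situation locally is sketched below with Python's standard `urllib.robotparser`. The helper name, the rules and the URLs are hypothetical, and the standard-library parser does not reproduce every detail of Google's own robots.txt matching, so the result is an indication rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

def sitemap_is_blocked(robots_txt, sitemap_url, user_agent="Googlebot"):
    """Return True if the given robots.txt rules disallow fetching the sitemap."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, sitemap_url)

# Hypothetical rules that accidentally block a sitemap folder.
blocking_rules = "User-agent: *\nDisallow: /sitemaps/\n"

blocked = sitemap_is_blocked(
    blocking_rules, "https://www.example.com/sitemaps/sitemap.xml"
)
allowed = sitemap_is_blocked(
    blocking_rules, "https://www.example.com/sitemap.xml"
)
```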

Another frequent reason concerns the wrong sitemap URL, which can lead to a 404 error. In such a case, it is essential to verify that the URL provided is correct and that the sitemap.xml file is actually present on the server, accessible at the given address. This problem can be quickly diagnosed by trying to retrieve the sitemap directly through the browser: if it does not load, there is an error in the address or the file was not uploaded correctly.

If, on the other hand, the site is subject to a manual action by Google, all sitemaps will be blocked until the problem is resolved. These instances of manual action signal serious problems with the site, such as practices that violate Google’s guidelines, and it is necessary to consult the detailed report in Google Search Console to resolve such problems before the sitemap can be resubmitted.

Sometimes, there may be retrieval errors due to a temporary problem on the server hosting our site. Momentary server unavailability or an excessive load of requests may prevent Google from accessing the sitemap. In these cases, retrieval errors can often be resolved by simply resubmitting the sitemap after a few minutes.

Finally, Google may encounter an error due to low crawl demand for our site. If a site is underperforming and contains low quality content, Google may reduce its willingness to crawl it frequently. This again emphasizes how important it is to produce high-quality content, which engages users and is consistent and relevant to the site’s target audience.

- Sitemap analysis errors

When Google succeeds in retrieving a sitemap, it can still run into errors while processing the content. These errors often indicate problems with the access or correctness of the URLs contained in the sitemap. It is possible for Google to flag inaccessible URLs, which have been listed in the sitemap but are not actually available for crawling. To resolve this issue, it is helpful to use the URL Inspection tool within Google Search Console, which allows us to check why a URL is not reachable.

A common error that results in unfollowed URLs occurs when Google is faced with too many redirects. If a URL in the sitemap passes through too many redirects, the crawler may not be able to follow them all, compromising the indexing of the final page. In such cases, it is always best to eliminate multiple redirects and update the sitemap with the final, correct URLs.

Another frequent error occurs when the sitemap contains relative instead of absolute URLs. Google prefers to work with complete URLs containing the full domain address, such as “https://www.example.com/mypage.html”, rather than partial URLs such as “/mypage.html.” This makes each URL clear and unique, with no possibility of ambiguity.
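The difference between absolute and relative URLs can be checked mechanically before a sitemap is published. The following Python sketch uses the standard `urllib.parse` module; the `is_absolute_url` helper is a hypothetical name and the rule it applies (an http or https scheme plus a host) is a simplifying assumption.

```python
from urllib.parse import urlsplit

def is_absolute_url(url):
    """True when the URL carries both a web scheme and a host name."""
    parts = urlsplit(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)

candidates = [
    "https://www.example.com/mypage.html",  # absolute: fine for a sitemap
    "/mypage.html",                         # relative: no scheme, no host
    "www.example.com/mypage.html",          # missing the protocol
]
results = {url: is_absolute_url(url) for url in candidates}
```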

- Structure and formatting errors

Among the more technical errors that can occur is the presence of invalid URLs within the sitemap. Each URL must be coded correctly, free of spaces, unsupported characters or incorrect formatting. Characters such as “&”, “<” and “>” must be replaced with their XML escape codes, and XML validation tools help catch any that were missed. Incorrect formatting of the URL can prevent Google from correctly interpreting the file, compromising the entire crawl.
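When a sitemap is assembled by hand or with string templates, the escaping can be delegated to a library. This is a minimal sketch using `xml.sax.saxutils.escape` from the Python standard library; the `escape_loc` helper and the sample URL are hypothetical. It should only be applied to raw URLs, since running it on an already escaped value would escape it twice.

```python
from xml.sax.saxutils import escape

def escape_loc(url):
    """Escape the five characters that XML treats specially."""
    # escape() handles &, < and > by default; quotes are added explicitly.
    return escape(url, {"'": "&apos;", '"': "&quot;"})

raw = "https://www.example.com/search?q=shoes&color=red"
escaped = escape_loc(raw)
print(escaped)
```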

Linked to invalid URL errors is the case of improper URL levels. Each sitemap must adhere to a hierarchy, so if the sitemap.xml is located in a subfolder of the site, all the URLs indicated must be in the same folder or in the lower folders hierarchically. URLs belonging to parallel folders or higher levels cannot be included.
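The folder rule can be expressed as a simple prefix check. The sketch below is an assumption-laden illustration in Python: `in_sitemap_scope` is a hypothetical helper, and it only compares scheme, host and path prefix, without covering any special cases a search engine may allow.

```python
from urllib.parse import urlsplit

def in_sitemap_scope(sitemap_url, page_url):
    """True if page_url sits in the sitemap's folder or in a folder below it."""
    sm, page = urlsplit(sitemap_url), urlsplit(page_url)
    # Folder that contains the sitemap file, always ending with "/".
    base_dir = sm.path.rsplit("/", 1)[0] + "/"
    same_site = (sm.scheme, sm.netloc) == (page.scheme, page.netloc)
    return same_site and page.path.startswith(base_dir)

sitemap = "https://www.example.com/catalog/sitemap.xml"
inside = in_sitemap_scope(sitemap, "https://www.example.com/catalog/shoes/item.html")
parallel = in_sitemap_scope(sitemap, "https://www.example.com/images/logo.png")
above = in_sitemap_scope(sitemap, "https://www.example.com/index.html")
```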

Another frequent structural error concerns compressed sitemaps: Google supports file compression, but if an error occurs when the file is decompressed, the sitemap will not be readable. In such a case, the solution is to compress the file again using the correct tool (usually gzip) and send it again.
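One way to reduce the risk of a corrupt archive is to decompress the file immediately after compressing it and compare the result with the original. This is a small sketch with Python's standard `gzip` module; the helper name and the sample content are hypothetical.

```python
import gzip

def compress_sitemap(xml_bytes):
    """Gzip the sitemap and verify that it decompresses to the same bytes."""
    packed = gzip.compress(xml_bytes)
    if gzip.decompress(packed) != xml_bytes:
        raise ValueError("round-trip check failed: archive is corrupt")
    return packed

sample = b'<?xml version="1.0" encoding="UTF-8"?><urlset></urlset>'
packed = compress_sitemap(sample)
```

The returned bytes can be saved as sitemap.xml.gz; the size limit discussed in this guide still applies to the uncompressed file.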

Among the most frequent errors is also the excessive size of the sitemap: if the sitemap.xml file exceeds the 50 MB limit or contains more than 50,000 URLs, Google will not be able to process it. In such situations, it is necessary to split the sitemap into several smaller files and link them via a sitemap index, which allows larger files to be handled through a split system.
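The split can be sketched in a few lines of Python. Everything named here is illustrative: the `chunk_urls` and `build_index` helpers, the generated page URLs and the sitemap file names are assumptions, and the sketch only enforces the 50,000-URL limit, not the 50 MB one.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file URL limit mentioned above

def chunk_urls(urls, size=MAX_URLS):
    """Split a list of URLs into groups of at most `size` entries."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]

def build_index(sitemap_urls):
    """Return a sitemap index (bytes) that lists the child sitemap files."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_urls:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = loc
    return ET.tostring(index, encoding="utf-8", xml_declaration=True)

# Hypothetical site with 120,000 pages.
urls = [f"https://www.example.com/page-{n}.html" for n in range(120_000)]
chunks = chunk_urls(urls)
index_xml = build_index(
    [f"https://www.example.com/sitemap-{i + 1}.xml" for i in range(len(chunks))]
)
```

Each chunk would be written to its own sitemap file, and only the index needs to be submitted.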

- Errors related to XML tags

Errors related to XML tags in the sitemap are often the result of typing errors or incorrect formatting. Each tag must follow strict formatting rules and use only the values supported by the sitemap protocol. Sometimes, for example, duplicate tags are used within the same URL entry, generating confusion for the search engine. Each error is reported specifically with the line of code where it occurred, making it easier to locate and correct.

One of the most common errors related to XML tags involves the attribution of invalid dates or dates in incorrect formats. Dates in the sitemap should follow W3C’s Datetime format, which allows both date and time to be specified. Time specification is optional, but when it is used, it must be accompanied by the correct time zone to ensure complete readability.

- Errors related to sitemaps for videos and news

Sitemaps dedicated to video content may have specific errors, such as the absence of a video thumbnail or failure to load a descriptive title for the video. Each video included in the sitemap should be associated with a title but also with an appropriately sized thumbnail image. If the thumbnail is too small or too large, Google’s request will be rejected. URL management in video entries is also important: the URL of the video cannot coincide with that of the “player” page. If both are indicated, they must be distinct, otherwise Google will not be able to correctly interpret the relationship between the items.

Dedicated sitemaps for Google News content, on the other hand, have limitations on the maximum number of acceptable URLs (up to 1,000), and each URL must have an attached

- Resolution of common sitemaps errors

To resolve all these errors, Google offers the URL Inspection tool in Google Search Console, which allows a detailed analysis of each URL in the sitemap, checking its accessibility and providing suggestions for resolving the problems found. In addition, by expanding the Page Availability section, we can understand whether Google is able to access URLs correctly or whether it is encountering practical issues.

Using sitemap validation tools, correcting XML formatting errors and making sure each URL is properly configured are key steps to keep a site accessible and scannable, thus improving the effectiveness of the entire indexing process.

Common mistakes to avoid when creating the sitemap

Widening the picture further on the most common mistakes made when compiling a sitemap, we cannot fail to mention duplicate URLs: in fact, it may happen that the same page is included several times in the sitemap with different variants of its URL (such as with or without “www,” or with different query strings). This duplication not only complicates the indexing process, but can also cause problems with duplicate content, which penalizes search engine rankings.

Another very common error is the inclusion in the sitemap of pages that should not be indexed, such as test pages or temporary pages. These pages should be blocked from indexing using robots.txt files or noindex tags. Including irrelevant pages in the sitemap disperses the attention of crawlers to less important content rather than priority content, slowing down the indexing process.

A critical element to consider is also the excessive size of the sitemap. An XML sitemap should respect certain limits, as mentioned: the file should not exceed 50,000 URLs or 50 MB in size. Otherwise, you run the risk that it will not be scanned correctly by Google. For particularly large sites, you can split the pages into several sitemaps, using a sitemap index that summarizes them.

Creating a sitemap does not mean doing it once and then forgetting about it: the most common and most penalizing mistake is not updating the sitemap regularly. If a sitemap is not updated periodically to reflect new pages on the site, or if we forget to remove nonexistent URLs, search engines may fail to find the most recent content or, even worse, attempt to crawl URLs that lead to 404 error pages, with the resulting negative impacts on indexing performance and overall SEO.

More subtle errors involve the page priority and refresh rate parameters. If misconfigured, these attributes communicate incorrect information to the search engines that read them. Google, for its part, states that it ignores the priority and changefreq values, so they should not be relied on to steer Googlebot toward the most important pages.

Sitemap, the 10 mistakes not to make on a site

These are the general, priority checks to verify that the sitemap we have created and submitted to Google is effective and valid. It can also be useful to have a summary of the 10 sitemap errors that can impair a site’s performance and negatively affect results in organic search, so that we can more easily understand whether we have run into a similar situation while creating the file.

- Getting the sitemap format wrong

The most common mistake with the sitemap concerns the format: Google supports different types of files for inclusion in Search Console, expecting the use of the standard Sitemap protocol in all formats and not providing (at the moment) the

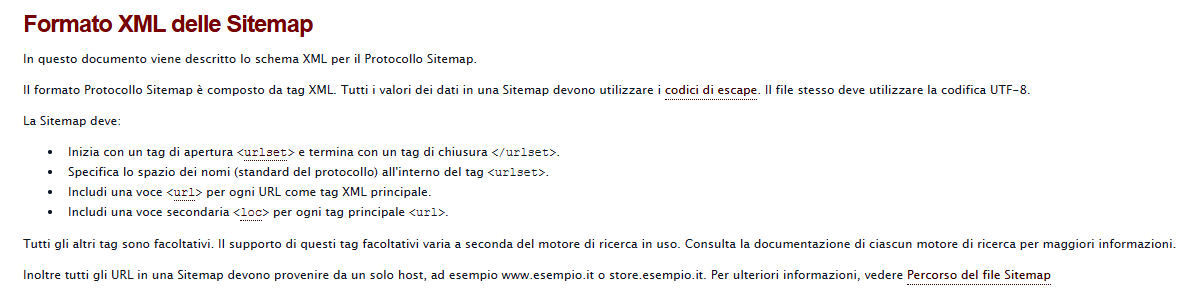

- Using unsupported encodings and characters

A similar, but more specific, inaccuracy concerns the method of encoding characters used to generate Sitemap files: the required standard is UTF-8 encoding, which can generally be applied when saving the file, and Google’s Help explains that “all data values (including URLs) must use escape codes” for some critical characters (as seen in the image on the page).

In detail, a sitemap can contain only ASCII characters: upper (extended) ASCII characters, certain control codes and special characters (e.g., asterisk * and curly brackets {}) are not supported. Using these characters in a sitemap URL will generate an error when adding the sitemap.

- Exceeding the maximum file size

The third problem is common with very large sites with hundreds of thousands of pages and tons of content: the maximum size allowed for a sitemap file is 50,000 URLs or 50 MB in uncompressed format. When our file exceeds either limit, we need to split the sitemap into smaller sitemaps, then create a sitemap index file in which the other resources are listed and send only this file to Google. This step is to avoid “overloading the server if Google requests the Sitemap often.”

- Including multiple versions of URLs

This is especially an inconvenience with sites that have not perfectly completed the migration from http to https protocol, and therefore have pages that have both versions: if you include both URLs in the sitemap, the crawler may scan the site incompletely and imperfectly.

- Including incomplete, relative, or inconsistent URLs

It is then critical to properly communicate to search engine spiders what is the precise link path to follow, because Googlebot and others crawl URLs exactly as they are given. Therefore, including relative, non-uniform or incomplete URLs in the sitemap file is a serious mistake, because it generates invalid links. In practical terms, this means that you have to include the protocol, but also (if required by the web server) the final slash: the address https://www.esempio.it/ thus represents a valid URL for a Sitemap, while www.esempio.it, https://example.com/ (without using the www) or ./mypage.html are not.

- Including session IDs in the Sitemap

The sixth point is quite specific: it is the sitemaps project FAQ page that makes it clear that “including session IDs in URLs may result in incomplete and redundant crawling of the site.” So, including session IDs of URLs in your sitemap can result in increased duplicate crawling of those links.
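Session IDs can be removed from URLs before they are written to the sitemap. The sketch below uses Python's standard `urllib.parse`; the parameter names in `SESSION_PARAMS` are assumptions chosen for illustration, and a real site would list the names its own platform uses.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed session parameter names; adjust to the site's own platform.
SESSION_PARAMS = {"sid", "sessionid", "phpsessid", "jsessionid"}

def strip_session_id(url):
    """Return the URL without query parameters that carry a session ID."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SESSION_PARAMS
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))

clean = strip_session_id("https://www.example.com/shop?cat=3&PHPSESSID=abc123")
print(clean)
```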

- Misplacing dates

We know that search engines, and in particular Google, are placing increasing emphasis on date insertion in web pages, but handling the “time factor” can sometimes be problematic for Sitemap insertion.

The valid process for inserting dates and times in the Sitemaps protocol is to use the W3C Datetime encoding, which allows all time variables to be handled, including approximate periodic updates if relevant. Accepted formats are, for example, year-month-day (2019-04-28) or, specifying the time, 2019-04-28T18:00:15+00:00, also indicating the reference time zone.
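Both accepted forms can be produced with Python's standard `datetime` module, as in this minimal sketch. The two helper names are hypothetical; the values reproduce the examples given above.

```python
from datetime import date, datetime, timezone

def lastmod_date(d):
    """Date-only form: YYYY-MM-DD."""
    return d.isoformat()

def lastmod_datetime(dt):
    """Date and time form, which requires an explicit time zone."""
    if dt.tzinfo is None:
        raise ValueError("a time without a time zone is not valid W3C Datetime")
    return dt.isoformat(timespec="seconds")

day_only = lastmod_date(date(2019, 4, 28))
with_time = lastmod_datetime(
    datetime(2019, 4, 28, 18, 0, 15, tzinfo=timezone.utc)
)
```

Rejecting naive datetimes up front avoids emitting a time without its offset, which is the formatting error described here.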

These parameters can avoid scanning URLs that have not changed, and thus reduce bandwidth and CPU requirements for web servers.

- Omitting mandatory XML attributes or tags

This is one of the errors often flagged by the Search Console Sitemap report: mandatory XML attributes or mandatory XML tags may be missing in one or more entries in some files. Conversely, it is similarly wrong to include duplicate tags in Sitemap: in all cases, action must be taken to correct the problem and attribute values, and then resubmit the sitemap.

- Creating an empty sitemap

Let’s close with two types of errors that are as serious as they are egregious, and that basically undermine everything positive and useful we have said about sitemaps. The first one is really a beginner’s mistake: saving an empty file that contains no URLs and sending it to Search Console. There is no need to add more.

- Making a Sitemap not accessible to Google

The other and final problem concerns a fundamental concept for those working online and trying to compete on search engines: to fulfill its task, the Sitemap “must be accessible to and not be blocked by any access requirements,” Google writes, so all the blocks and limitations that are encountered are a hindrance to the process.

An example is including URLs in the file that are blocked by the robots.txt file, which prevents Googlebot from accessing content that it could otherwise crawl normally. The crawler may also have difficulty following certain URLs, especially if they contain too many redirects: the suggestion in this case is to “replace the redirect in your Sitemaps with the URLs that should actually be crawled” or, if the redirect is meant to be permanent, to make sure it is implemented as a permanent redirect, as explained in Google’s guidelines.

A guide to managing sitemaps with Google Search Console

Google Search Console’s tools, and in particular the Sitemap Report, allow us to facilitate communication with the search engine’s crawlers, as also explained by an episode of the webseries Google Search Console Training, in which Daniel Waisberg walks us through this topic.

In particular, the Developer Advocate reminds us that Google supports four modes of expanded syntax with which we can provide additional information, useful for describing files and content that are difficult to parse in order to improve their indexing: we can thus describe a URL with images included or with a video, indicate the presence of alternative languages or geolocated versions with hreflang annotations, or (for news sites) use a particular variant that allows us to indicate the most recent updates.

Google and Sitemap

“If I don’t have a sitemap, can Google still find all the pages on my site?”. The Search Advocate also answers this frequently asked question, explaining that a sitemap may not be necessary if we have a relatively small site with appropriate internal linking between pages, because Googlebot should be able to discover the content without problems. Also, we may not need this file if we have few media files (videos and images) or news pages that we intend to show in the appropriate search results.

On the contrary, in certain cases a sitemap is useful and necessary to help Google decide what and when to crawl your site:

- If we have a very large site, with the file we can indicate a priority of URLs to scan.

- If the pages are isolated or not well connected.

- If we have a new site (and therefore little linked from external sites) or one with rapidly changing content.

- If the site includes a lot of rich media content (video, images) or is displayed in Google News.

In any case, the Googler reminds us, using a sitemap does not guarantee that all pages will be crawled and indexed, although in most cases providing this file to search engine bots can give us benefits (and certainly does not give disadvantages). In addition, sitemaps do not replace normal crawling, and URLs not included in the file are not excluded from crawling.

How to make a sitemap according to Google

Ideally, the CMS running the site can make sitemap files automatically, using plugins or extensions (and we recall the project to integrate sitemaps into WordPress by default), and Google itself suggests finding a way to create sitemaps automatically rather than manually.

There are two limits to sitemaps, which cannot exceed a maximum number of URLs (50 thousand per file) and a maximum size (50 MB uncompressed), but if we need more space we can create multiple sitemaps. We can also submit all these sitemaps together in the form of an Index Sitemap.

The Sitemap Report in Search Console

To keep track of these resources we can use the Sitemap Report in Search Console, one of the most useful webmaster tools, which is used to submit a new sitemap to Google for a property, view the submission history, view any errors found during the analysis, and remove files that are no longer relevant. Removing a file in this way removes the sitemap only from Search Console and not from Google’s memory: to delete a sitemap we have to remove it from our site so that its URL returns a 404; after several attempts, Googlebot will stop requesting that file and will no longer update the sitemap.

The tool allows us to manage all sitemaps on the site, as long as they have been submitted through Search Console, and therefore does not show files discovered through robots.txt or other methods (which can still be submitted in GSC even if already detected).

The sitemap report contains information about all submitted files, and specifically the URL of the file relative to the root of the property, the type or format (such as XML, text, RSS, or atom), the date of submission, the date of the last Google read, the crawl status (of the submission or crawl), and the number of URLs detected.

How to read sitemap states

The report indicates three possible states of sitemap submission or crawling.

- Success is the ideal situation, because it means that the file has been loaded and processed correctly and without errors, and that all URLs will be queued for crawling.

- Sitemap had X errors means that the sitemap could be parsed but has one or more errors; URLs that could be parsed will still be queued for crawling. By clicking on the report table, we can find out more details about the problems and get pointers to corrective actions.

- Couldn’t fetch, if some reason prevented the file from being retrieved. To find out the cause we need to do a live test on the sitemap with the URL Inspection tool.

XML sitemap, 3 steps to improve SEO

Despite all the precautions we can take, there are still situations in which the sitemap presents critical issues that can hinder organic performance. To avoid annoyances and problems, there are three basic steps to evaluate, which can also improve SEO. They come from an article published by Search Engine Land, which offers a quick checklist for the sitemaps we provide to search engine crawlers, useful for avoiding errors such as the absence of important URLs (which might then not be indexed) or the inclusion of wrong URLs.

Verify the presence of priority and relevant URLs

The first step is to verify that we have included in the sitemap all the key URLs of the site, that is, those that represent the cornerstone of our online strategy.

An XML sitemap can be static, thus representing a snapshot of the Web site at the time of creation (and therefore not updated later) or, more effectively, dynamic. The dynamic sitemap is preferable because it updates automatically, but the settings must be checked to ensure that we are not excluding sections or URLs central to the site.

To check that relevant pages are all included in the sitemap we can also do a simple search with Google’s site: command, so we can immediately find out if our key URLs have been properly indexed. A more direct method is to use some crawling tools with which to compare the pages actually indexed and those included in the sitemap submitted to the search engine.
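The comparison itself is a set difference once the sitemap URLs have been extracted. Here is a minimal Python sketch with the standard `xml.etree.ElementTree` module; the sample sitemap and the `key_urls` set are hypothetical stand-ins for a real file and for the list of pages that matter most to the site.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_locs(xml_text):
    """Return the set of URLs listed in the <loc> tags of a sitemap."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)}

# Hypothetical sitemap content, for illustration only.
sitemap_xml = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/pricing.html</loc></url>
</urlset>"""

# Hypothetical list of the pages we consider essential.
key_urls = {
    "https://www.example.com/",
    "https://www.example.com/pricing.html",
    "https://www.example.com/contact.html",
}
missing_from_sitemap = key_urls - sitemap_locs(sitemap_xml)
print(missing_from_sitemap)
```

The same difference, taken against a list of indexed pages exported from a crawling tool, shows which sitemap URLs have not made it into the index.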

Check whether URLs to be removed are included

The second check goes in the opposite direction: not all URLs should be included in the XML sitemap, and it is better to avoid including addresses that have certain characteristics, such as:

- URLs with HTTP status codes 4xx / 3xx / 5xx.

- Canonicalized URLs.

- URLs blocked by robots.txt.

- URLs with noindex.

- Paginated URLs.

- Orphan URLs.

An XML sitemap should normally contain only indexable URLs, which respond with a 200 status code and are linked within the Web site. Including other page types, such as those indicated, could waste crawl budget and potentially cause problems, such as the indexing of orphan URLs.

By crawling the sitemap with crawling tools, it is possible to highlight if there are any incorrectly inserted resources and, therefore, take action to remove them.
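The screening that such tools perform can be approximated with a short rule set. The Python sketch below is purely illustrative: the `CrawledUrl` record and its fields are a hypothetical stand-in for a crawler's export, and pagination is left out because it cannot be detected from these fields alone.

```python
from dataclasses import dataclass

@dataclass
class CrawledUrl:
    """Hypothetical record for one sitemap URL, as a crawling tool might export it."""
    url: str
    status: int
    canonical: str
    noindex: bool = False
    blocked_by_robots: bool = False
    inlinks: int = 1

def removal_reason(page):
    """Return why the URL should leave the sitemap, or None if it can stay."""
    if page.status != 200:
        return f"status {page.status}"
    if page.canonical != page.url:
        return "canonicalized"
    if page.blocked_by_robots:
        return "blocked by robots.txt"
    if page.noindex:
        return "noindex"
    if page.inlinks == 0:
        return "orphan"
    return None

crawl = [
    CrawledUrl("https://www.example.com/", 200, "https://www.example.com/"),
    CrawledUrl("https://www.example.com/old", 301, "https://www.example.com/old"),
    CrawledUrl("https://www.example.com/a?ref=x", 200, "https://www.example.com/a"),
]
to_remove = {p.url: removal_reason(p) for p in crawl if removal_reason(p)}
```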

Making sure that Google has indexed all the URLs in the XML sitemap

The last step is about how Google has processed our sitemap: to get a better idea of which URLs have actually been indexed, we need to submit the sitemap in Search Console and use the aforementioned Sitemap report and index coverage status report, which give us insights into the search engine’s coverage.

In particular, the index coverage report allows us to check the Errors section (which highlights problems with the sitemaps, such as URLs that generate a 404 error) and the Excluded URLs section (pages that have not been indexed and do not appear in Google), also indicating the reasons for this absence.

If these are useful pages (not duplicated or blocked), there may be a quality problem, such as the well-known thin content, or an incorrect status code. This applies particularly to pages that have been crawled but are not currently indexed (Google has chosen not to include the page in the Index for now) and to pages that have been discovered but not indexed (Google tried to crawl, but the site was overloaded). At that point it is time to intervene with appropriate on-site optimizations.