Guide to on-page SEO and major onsite optimization efforts

Work first on the page and on all the elements that make it up and distinguish it (the ones over which we have direct control), and only then move on to off-site activities: this is one of the first pieces of advice we give to anyone approaching the world of SEO, and one of the cornerstones of our consulting strategy for website optimization. SEO is a complex discipline that brings together different but related elements which, directly or indirectly, can affect site performance. Despite Google’s constant evolution, on-page SEO remains crucial to success in terms of ranking and traffic, because it concerns the factors that determine how efficient and well-performing a web page is.

What is on-page SEO

From a literal point of view, the expression on page SEO or on site SEO refers to the optimization of all elements within a web page for search engine ranking and for users.

When we talk about SEO, or Search Engine Optimization, we are referring to a set of techniques and strategies designed to improve a website’s visibility on search engines. On-page SEO, in particular, concerns all those activities that we can carry out directly on our web pages to make them more attractive in the eyes of Google and other search engines.

It is therefore an extensive and complex activity that involves both the content and the HTML source code of a page. It covers a long list of components: titles, meta tags and URLs, but also the architecture, readability and usability of the page, the text, keywords and internal links, and finally the structured data that are gaining more and more weight in the construction of the semantic Web.

In practice, everything we can directly optimize within a web page falls under on-page SEO. This makes it the counterpart of off-page SEO, which, as the name suggests, focuses on optimizing signals that occur outside the website we are working on. In the background there is technical SEO, which aims to improve the management and organization of the site as a whole by evaluating factors such as page and site speed, duplicate content, site structure, and the regularity of crawling and indexing.

On page SEO, main factors

We know that Google takes into account about 200 ranking factors for its SERPs, and in our guide we have also indicated which elements to work on to optimize the on-page aspects of both a site and a single page. SEO specialists and analysts, though, think that there are 5 fundamental areas of intervention for on-page SEO, which should always be assessed and verified when planning and monitoring a site.

A site is an organic ecosystem

The premise is that a site, like SEO itself, is an organic ecosystem made up of many different entities; focusing on a single aspect may therefore not deliver the desired results. Not even links and editorial content, pivotal as they are for ranking, are the only factors evaluated by search engines, which are becoming more and more complex and able to read many other parameters.

For this reason, plenty of backlinks may still do nothing for a slow site that provides a negative user experience, just as decent-quality content can be penalized by an intrusive interstitial on mobile devices, as we explained in the specific articles we wrote on these topics.

On page SEO optimization, 5 factors to consider

Recently, Kristopher Jones tried to compile on Search Engine Land a list of the 5 main on-page SEO elements that we should always focus on and never overlook when optimizing a site. These are subjects we have already covered in our insights too; in order, the author cites:

- Content

- User Engagement

- Technical Structure

- Interlinking

- Mobile Responsiveness.

The work to optimize on page content

It always starts with content, then, which remains “king”, to use a (by now old) expression credited to Bill Gates, who in 1996 said “content is king” when speaking about his predictions on the future of the Internet. In this area, the first step to improve on-page elements is to intercept search intent, since the relevance of the content to the user’s intent is surely one of the most important parameters for Google and, consequently, for the reader landing on the page.

Suggestions to optimize content

The optimization work therefore starts from a deep understanding of the kind of intent tied to the page’s keywords (informational, transactional or navigational). We then analyze the SERP to understand how the search engine evaluates that intent, and make the corresponding adjustments, also with the help of SEOZoom tools such as the Intent Gap.

Equally fundamental is keyword research aimed both at matching the intent and at finding semantically related keywords, so that the content can be enriched with secondary topics and answer the user’s possible questions as fully as possible.

Interventions on tags

A core role is played (yet again) by SEO tags such as the title and the headings, which are needed to specify the intent and structure of the document, to organize the information provided to users and search engines, to make pages easier to scan and to capture the reader’s attention. Optimizing these aspects means placing the main keywords in title tags, URL slugs and page titles, and using related keywords in section headings (H2, H3, H4).
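
As a minimal sketch of this kind of check, the Python snippet below reads the title and the H1-H4 headings of a page and reports whether a given keyword appears in each of them. The sample HTML, the keyword and the function names are our own illustrative assumptions, and the check is a plain substring match, not a representation of how any search engine evaluates tags.

```python
from html.parser import HTMLParser


class TagTextCollector(HTMLParser):
    """Collects the text found inside <title> and <h1>-<h4> elements."""

    TRACKED = {"title", "h1", "h2", "h3", "h4"}

    def __init__(self):
        super().__init__()
        self.current = None   # tracked tag currently being read, if any
        self.found = {}       # tag name -> list of text contents

    def handle_starttag(self, tag, attrs):
        if tag in self.TRACKED:
            self.current = tag
            self.found.setdefault(tag, []).append("")

    def handle_endtag(self, tag):
        if tag == self.current:
            self.current = None

    def handle_data(self, data):
        if self.current is not None:
            self.found[self.current][-1] += data


def keyword_placement(html, keyword):
    """Return, for each tracked tag found, whether any instance contains the keyword."""
    collector = TagTextCollector()
    collector.feed(html)
    needle = keyword.lower()
    return {
        tag: any(needle in text.lower() for text in texts)
        for tag, texts in collector.found.items()
    }


# Hypothetical page used only for illustration.
SAMPLE_HTML = """
<html><head><title>Homemade ice cream: a beginner's guide</title></head>
<body>
  <h1>How to make ice cream at home</h1>
  <h2>Ingredients and tools</h2>
  <h2>Storing your ice cream</h2>
</body></html>
"""

print(keyword_placement(SAMPLE_HTML, "ice cream"))
```

A report like this only tells us where a term is present or missing; deciding whether it belongs there remains an editorial choice tied to the search intent.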

How to optimize the pages’ user engagement

If the first point concerned the activities and skills of SEO copywriting in detail, on-page optimization of user engagement calls for a wider range of expertise. The metrics to assess are pages per session, bounce rate and Click-Through Rate, which depend both on search engines and on the behaviour of users, who should not only be attracted to the page but also convinced to stay and interact with the content on offer.

Enhancing the pages per session

As is well known, Pages per Session is the metric that measures the number of pages viewed before the user leaves the site; it is displayed in Google Analytics alongside the average session duration (the average time a user spends on the site). These values help us understand how interactive and engaging a page is from a navigation point of view, and which mistakes may be affecting the sales funnel or blocking conversions, or (for a blog or news site) which articles fail to spark interest and are abandoned mid-read without the user trying anything else.

To improve these parameters we need to analyze the pages with high bounce rates and look for specific opportunities to encourage readers to extend their session or view other pages; for instance, we can add calls to action to promote conversions, or offer more navigation options, such as suggested related articles or simple internal links.
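
To make the two metrics concrete, here is a small Python sketch that computes pages per session and bounce rate from a list of sessions, and then groups the bounce rate by landing page to spot the pages worth analyzing. It assumes the classic definition of a bounce (a session with a single page view) and uses invented session data; analytics platforms may define and sample these values differently.

```python
from collections import defaultdict


def engagement_metrics(sessions):
    """Compute pages per session and bounce rate.

    Each session is the ordered list of page paths a visitor viewed.
    Assumption: a bounce is a session with exactly one page view.
    """
    total = len(sessions)
    if total == 0:
        return {"pages_per_session": 0.0, "bounce_rate": 0.0}
    pageviews = sum(len(pages) for pages in sessions)
    bounces = sum(1 for pages in sessions if len(pages) == 1)
    return {
        "pages_per_session": pageviews / total,
        "bounce_rate": bounces / total,
    }


def bounce_rate_by_landing_page(sessions):
    """Group sessions by their first page and compute each group's bounce rate."""
    entries = defaultdict(int)
    bounces = defaultdict(int)
    for pages in sessions:
        if not pages:
            continue  # ignore empty sessions
        landing = pages[0]
        entries[landing] += 1
        if len(pages) == 1:
            bounces[landing] += 1
    return {page: bounces[page] / entries[page] for page in entries}


# Hypothetical sessions used only for illustration.
SESSIONS = [
    ["/guide", "/recipes", "/contact"],
    ["/guide"],
    ["/blog/post-a"],
    ["/blog/post-a", "/guide"],
    ["/blog/post-a"],
]

print(engagement_metrics(SESSIONS))
print(bounce_rate_by_landing_page(SESSIONS))
```

Landing pages with the highest values in the second report are the natural candidates for new calls to action or related-article suggestions.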

Working on bounce rates and CTR

The bounce rate indicates how much users appreciate a landing page or website, and it is an ambiguous value: a high bounce rate on an eCommerce site could mean that the pages are not engaging and do not match user intent, while on an informational site people may simply have been satisfied immediately, finding the answer they were looking for in no time. To optimize this aspect there are still small technical interventions we can perform, such as removing invasive interstitials and pop-up ads, improving loading time, or writing landing page copy that is relevant to the search queries.

Improvements for CTR

On the CTR side, in the past few weeks we talked about the doubts the entire SEO community has about this value, which represents the very first interaction a user has with the site: a low click-through rate could mean that the message is not relevant to the user’s search, or that the meta description or title tag is not convincing enough. Work on this metric can focus on including an exact-match keyword in both the title tag and the meta description, on adding to the description some information that is useful to users (promotional offers, discounts and so on) and, simply, on keeping tags to a suitable length so that they are not truncated in Google Search.
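
The checks described here can be sketched in a few lines of Python: CTR as clicks divided by impressions, plus a quick report on snippet length and keyword presence. The two character limits are assumptions based on common rules of thumb (Google truncates snippets by displayed width, not by a fixed character count), and the title, description and figures are invented.

```python
# Assumptions: rough rules of thumb, not official Google limits.
TITLE_MAX_CHARS = 60
DESCRIPTION_MAX_CHARS = 155


def click_through_rate(clicks, impressions):
    """CTR = clicks / impressions, returned as a fraction between 0 and 1."""
    if impressions <= 0:
        return 0.0
    return clicks / impressions


def snippet_report(title, description, keyword):
    """Flag over-long tags and check for the exact-match keyword (case-insensitive)."""
    needle = keyword.lower()
    return {
        "title_too_long": len(title) > TITLE_MAX_CHARS,
        "description_too_long": len(description) > DESCRIPTION_MAX_CHARS,
        "keyword_in_title": needle in title.lower(),
        "keyword_in_description": needle in description.lower(),
    }


# Hypothetical snippet used only for illustration.
title = "On-page SEO guide: 5 factors to optimize"
description = (
    "Learn how to work on content, tags, internal links and performance. "
    "Free checklist included."
)

print(f"CTR: {click_through_rate(30, 1000):.1%}")
print(snippet_report(title, description, "on-page SEO"))
```

In this example the report would show the keyword missing from the description, which is exactly the kind of gap the paragraph above suggests closing.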

How to make the technical structure of the page perform better

The technical structure of the site can also have an impact on user engagement and the ranking of the keywords, because without a strong architecture the web of contents might not hold up and, consequently, collapse. The elements to focus on in this area are the so-called crawlability, and the safety and cleanliness of URLs.

Simplifying Googlebot’s scans

Many sites make life harder for search engine crawlers, which every day try to do their job by navigating sites through the links supplied in the Sitemap and available on the home page. The first step of technical on-page optimization is therefore to make sure that the site can actually be crawled and that the crawl budget is well invested, identifying the pages that must have priority for crawling and indexing in case the crawlers cannot analyze every path.

It is equally useful to block every page we do not want spiders to visit by listing it under a Disallow directive in the robots.txt file; it is also recommended to clean up every redirect chain and to set parameters for dynamic URLs.
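
A quick way to verify that the Disallow rules behave as intended is to test a few URLs against them. The sketch below uses Python’s standard `urllib.robotparser` module on a robots.txt written inline; the rules, paths and domain are hypothetical examples, and the parser follows the basic robots exclusion rules rather than every nuance of Googlebot’s own matching.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /internal-search
Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(robots_txt):
    """Parse robots.txt content from a string, without any network request."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def crawlable(parser, url, user_agent="Googlebot"):
    """Return True if the given user agent is allowed to fetch the URL."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(ROBOTS_TXT)
for url in (
    "https://www.example.com/blog/on-page-seo",
    "https://www.example.com/cart/checkout",
    "https://www.example.com/internal-search?q=ice+cream",
):
    print(url, "->", "crawlable" if crawlable(parser, url) else "blocked")

# site_maps() is available from Python 3.8 onwards.
print(parser.site_maps())
```

Running a check like this before publishing a new robots.txt helps avoid blocking, by mistake, the pages that should have crawling priority.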

Optimization and safety

As we know, the HTTPS protocol secures the transactions made through a site and is a lightweight ranking factor for Google. Sometimes, as we also discussed when talking about site migration errors, a site can contain mixed content or HTTP pages that do not always redirect to the right resource. To solve these issues we need to analyze the site in depth, identify the specific errors, reference the sitemap in the robots.txt file independently of any user-agent directive and, lastly, rewrite the .htaccess file to redirect all of the site’s traffic to a single domain using the correct HTTPS URL.
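
As an illustration of the analysis step, the following Python sketch scans the HTML of a page for resources still loaded over plain HTTP. It is a deliberately simplified assumption of what counts as a resource: any `src` attribute and any `href` on a `<link>` element (so a `<link rel="canonical">` would also be flagged), while ordinary `<a>` links are ignored. The sample markup is invented.

```python
from html.parser import HTMLParser


class MixedContentFinder(HTMLParser):
    """Collects resources referenced with an http:// URL."""

    def __init__(self):
        super().__init__()
        self.insecure = []  # list of (tag, url) pairs

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if value is None:
                continue
            # Simplification: treat every src, and href on <link>, as a resource.
            is_resource = name == "src" or (tag == "link" and name == "href")
            if is_resource and value.lower().startswith("http://"):
                self.insecure.append((tag, value))


def find_mixed_content(html):
    """Return the insecure resources found in the given HTML string."""
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure


# Hypothetical page fragment used only for illustration.
PAGE = """
<link rel="stylesheet" href="http://cdn.example.com/style.css">
<img src="https://www.example.com/logo.png">
<script src="http://www.example.com/app.js"></script>
<a href="http://partner.example.org/">Partner</a>
"""

for tag, url in find_mixed_content(PAGE):
    print(f"<{tag}> loads {url}")
```

Each flagged URL should then be switched to HTTPS or removed, before relying on server-level redirects to cover what is left.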

Fixing broken links

Another on-page optimization action concerns the correction of redirected, non-working or outright broken links, which can affect load speed, indexing and crawl budget. A site scan reveals the existing problems and their status codes: in general, we should always aim for clean URL structures that return status code 200, while 4xx and 5xx errors obviously need to be fixed.

One possible improvement is a 301 redirect from damaged pages to move users to a more appropriate page; it can also be useful to create a custom 404 page with a valid link to an alternative resource. For URLs affected by 5xx status codes, we instead need to contact the hosting provider immediately to report the problem and request clarification and a fix.
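
The logic behind this clean-up can be sketched in Python: classify the status codes returned by a scan, then follow a map of redirects to find where each URL finally lands, so that chains can be collapsed into a single hop. The redirect map and the function names are hypothetical, and the sketch works on data already collected by a crawler rather than making requests itself.

```python
def classify_status(code):
    """Map an HTTP status code to the broad class used in a site scan."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client_error"
    if 500 <= code < 600:
        return "server_error"
    return "other"


def resolve_redirect(url, redirects, max_hops=10):
    """Follow the redirect map and return (final_url, hops).

    Raises ValueError on a loop or on a chain longer than max_hops.
    """
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or overly long chain at {url}")
        seen.add(url)
    return url, hops


def flatten_redirects(redirects):
    """Point every source URL straight at its final destination."""
    return {source: resolve_redirect(source, redirects)[0] for source in redirects}


# Hypothetical redirect map used only for illustration.
REDIRECTS = {
    "/old-guide": "/guide-2019",
    "/guide-2019": "/guide",
    "/promo": "/offers",
}

print(resolve_redirect("/old-guide", REDIRECTS))
print(flatten_redirects(REDIRECTS))
```

The flattened map is what we would then translate into single 301 rules, so that neither users nor crawlers pass through intermediate URLs.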

The importance of internal links to on page SEO

Interlinking serves a series of SEO goals, such as improving crawlability, caring for UX, managing content more effectively and building links in-house. Internal linking basically helps us create doors that allow both spiders and users to follow all the paths planned with technical SEO.

A classic use of internal links is deep linking, that is, channeling links from higher-level category pages towards orphan pages, which can benefit from this authority and then manage to get indexed; this strategy can also be useful to direct readers towards other content of interest, inviting them to explore other aspects of a topic or to discover different sections of the site.
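
To find the pages that would benefit from deep links, we can model the site as a graph of internal links. The Python sketch below, built on an invented link map, lists orphan pages (pages that no other page links to) and computes the click depth of each page reachable from the home page with a breadth-first search. It assumes the link map has already been extracted by a crawl.

```python
from collections import deque


def find_orphans(links, home="/"):
    """Return the pages that no other page links to (home page excluded)."""
    linked = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a self-link does not count as an inbound link
    }
    return sorted(page for page in links if page not in linked and page != home)


def click_depth(links, home="/"):
    """Breadth-first search: number of clicks needed to reach each page from home."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical internal link map: page -> pages it links to.
LINKS = {
    "/": ["/category/seo", "/about"],
    "/category/seo": ["/blog/on-page-seo", "/"],
    "/blog/on-page-seo": ["/category/seo"],
    "/blog/forgotten-post": ["/category/seo"],
    "/about": [],
}

print("Orphan pages:", find_orphans(LINKS))
print("Click depth:", click_depth(LINKS))
```

Pages that appear among the orphans, or that are missing from the click depth report, are the first candidates for a link from a relevant category page.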

Pay attention to mobile navigation and responsiveness

The last area of on-page SEO optimization concerns the care we must (by now, necessarily) devote to users browsing from mobile devices: in the era of the mobile-first index it is absolutely crucial to be competitive on this front, which is now a priority for Google too.

Work on responsiveness moves on three fronts: first and foremost, implementing a responsive web design for our site that meets all the criteria and guidelines on the subject; then, improving page speed by reducing on-site resources; and finally, using CDNs to reduce the latency in delivering resources to users (as well as to lighten the load on the server hosting the site).

Google unveils the 3 main factors of onsite SEO

The centrality of onsite SEO is easy to understand, then, not least because (as mentioned) this discipline groups together all the interventions that we can carry out directly within our site, over which we therefore have full control, and which are also the easiest to carry out even without special technical expertise.

It is therefore not surprising that Google has several times, more or less explicitly, urged webmasters and SEOs to focus first or mainly on certain elements of on-page SEO, also offering precise advice on what is most useful to do to improve the visibility of our project. One of the most interesting moments in this regard came with the SEO Mythbusting series on YouTube, in which Martin Splitt, one of the most recognizable public faces of the American company, addressed SEO topics precisely to “dispel myths” and offer official, source-verified information, so to speak; in the very first episode, Splitt revealed the 3 main on-page SEO factors on which he invites us to focus our attention and work.

On-site SEO, the basic tips for optimization

The cue comes during an interview/chat between Splitt and developer Juan Herrera, who initially asks the Googler introductory questions such as “what is a search engine,” “what does indexing mean,” “why is it important for a site to appear high in SERPs,” and “how does Google determine which results are relevant to users” (the answer is “through the more than 200 ranking factors,” among which Splitt explicitly mentions title, meta description, actual on-page content, images, and links).

The 3 main SEO factors for ranking

It is in the second part that the topics we were talking about are addressed, when the Googler answers the precise question “what are the top 3 things I need to do to make my site easier to discover?” and, concretely, offers useful guidance for everyone to understand what are the 3 most important SEO factors on which Google focuses attention.

Page content is the priority element

Rather than pointing to “this or that framework” (an answer that might have been expected from a developer), Google’s Martin Splitt cites content as the main factor for ranking in SERPs: for him (speaking officially as the voice of Google) a site must offer really good, quality content that has a purpose for the user.

Continuing his description of the main ranking factor, Splitt explains that a page’s content should be something users need and/or want, adding with a joke “the ultimate is to offer something they absolutely need and want, like ice cream.”

SEO purpose, the importance of the purpose of the page

So, the optimal situation for Google is when “the content says where you are, what you do, how you help me with what I’m trying to achieve,” while a self-referential page, where it just says “we’re a great company full of great products” serves no purpose for the user.

More specifically, a site must try to “serve the purpose of the people it wants to attract and reach the target audience you want them to interact with your content,” says Splitt, who then adds an important reference about language. For him, you have to make sure you are using “the words I will use” as a user. The Googler says that “if you use a very specific term for your ice cream, say like Smooth Cream 5000, I, as a user, won’t search for it because I don’t know it”: I just need ice cream.

The reference to purpose in SEO will not come as a surprise to experts and discerning readers, since already in Google’s guidelines to quality raters there is an entire chapter explaining how the beneficial purpose of a page should be evaluated, particularly for those referring to YMYL topics.

Use the best language and keywords to deliver content relevant to the search intent

Thus, in optimal content it is good to use “somewhere” a keyword as specific as the name of the ice cream invented by Splitt, “because if I search for that trademark I can find it.” But if the person is “exploring” the Internet looking for ice cream without a specific brand in mind, we must be able to intercept this intent and speak the language the user speaks.

This advice describes both the importance of relevance in content and the ability to understand which intentions drive users’ searches, so that we can offer them pages that meet their needs and purposes.

Martin Splitt’s words also help define another basic concept: in SEO work, one should never focus too much on keywords at the expense of purpose. Keywords are important, but there are so many other factors that influence a site’s ranking, and being able to understand what users are looking for and what they need is certainly a sound strategy for getting results.

Main SEO factors, what are they according to Google

The second and third factors outlined by Martin Splitt for achieving good rankings on Google are much more technical and in some ways unsettling, and concern the use of metadata and site performance, respectively.

Nurturing meta tags that describe content

Regarding meta data, the developer advocate of the Google Search Relations team says it is critical for a site to have and display “meta tags that describe your content,” such as a meta description that “gives you the ability to have a little snippet in the search results that allows people to figure out which of the many results might be the ones that best help them” get answers to their need.

Optimize titles and meta descriptions

No less useful are “page titles that are specific to the page you are offering.” So, it’s not advisable to “have a title for everything,” indeed “the same title is bad,” jokes Splitt, who then adds further that “if you have titles that change with the content you’re showing, that’s great. And frameworks have ways to do that.”

Performance as an SEO factor

Lastly, the third SEO factor for ranking unveiled by Google is site performance. For Splitt, performance optimizations “are great! We’re talking about them constantly, but we’re probably missing the fact that they’re also important for getting discovered online.”

The interviewer at this point interjects to ask if interventions to improve performance “not only make my site faster, but also make my site more visible to others?” and Splitt’s answer is quite surprising. “Correct,” he says, “because we want to make sure that people who click on search results choosing your page can get that content quickly,” which is why performance is “one of many factors” Google looks at carefully when ranking sites.

How to improve site performance

In practical terms, to act on performance Martin Splitt repeatedly and strongly recommends looking into “hybrid rendering or server-side rendering,” because that is generally what gets content to users fastest. In addition, some bots (though not Googlebot) may not interpret and execute JavaScript, so it may be better to offer dynamic rendering, which adapts the code served to those bots without intervening more heavily on the whole structure, and still ensures correct interpretation.

For Google, the first steps to improve performance are to increase the speed at which content is displayed and to improve the first contentful paint of the page, and then to take care of server optimization and caching strategies, making sure that “your scripts don’t have to run for like 60 seconds to grab everything they need.” These general tips predate the introduction of the Google Page Experience, which formally introduced technical page performance as one of the ranking factors (although, on closer inspection, the actual impact of these elements on visibility turned out to be small, and smaller than initially expected).

Meta description and speed as ranking factors?

We called these two points “unsettling” because generally (and for a long time) meta description has not been considered a pure ranking factor, but rather a useful element for user experience and attracting user clicks at the expense of competitors in SERPs. Could mentioning meta tags in this context (and not mentioning, for example, links) mean that something is changing in Google’s interpretation, or is this just Martin Splitt’s personal consideration?

Similarly, in various speeches Splitt’s colleague John Mueller had talked about performance and, more specifically, just the speed of a site in reference to search engine rankings, saying that the advantage of having so many ranking factors was that “everything doesn’t have to be perfect.”

According to what Mueller says, the speed of a site is certainly important, but it may not be prioritized over other elements that the search engine values as more useful for user experience. In practice, if a user finds a slow site useful and profitable at the expense of other fast ones, Google’s algorithm should take these elements into consideration.

In Martin Splitt’s words, however, performance is presented as a top SEO factor; the Googler, though, is probably not talking specifically about algorithmic ranking factors, but rather about elements to be studied and optimized to improve the site as a whole.

In the end, and in truth, Martin Splitt’s words have perhaps raised some doubts about ranking, rather than resolving and clarifying them: in any case, the guidance provided by the Googler is certainly useful to know what to focus on in the on-page optimization work of the site and what aspects to improve in order to try to get better results.

On-page SEO optimization, SEOZoom’s tools

If this is the theoretical information (with some hints of practical techniques) needed to approach on-page SEO optimization, in this concluding part we roll up our sleeves to find out how the section of SEOZoom dedicated precisely to this aspect works, and how it can improve the management of the various interventions on pages.

As our own Ivano Di Biasi recalled in presenting (some of) the aspects that have characterized our platform since its inception, SEOZoom was the first SEO tool in the world to offer a view of a website’s performance no longer based on keyword rankings, like trivial rank trackers, but on the performance of every single web page on the site. International competitors then appreciated and imitated on-page tracking, but in the meantime our development work did not stop, and it raised the bar again for on-page SEO tools.

How to use SEOZoom as an on page SEO tool

In the new SEOZoom 2.0 suite, there is now a new section that is explicitly called on-page SEO, which allows you to perform a comprehensive analysis of onsite optimization elements in real time, scanning the tags of the source web page and, at the same time, also all the web pages of competitor sites, identifying users’ Search Intent, evaluating Intent Match scores, and providing a series of targeted suggestions on SEO optimization best practices.

In addition to this very useful functionality (available within projects), the platform now has a revamped area dedicated to auditing, monitoring and managing all aspects of on-page improvement work, which is activated by selecting Pages in the projects menu.

Analyze content and pages in real time to improve ranking

As Google’s algorithm continues to evolve, today it is no longer just the performance of the URL as an entity that matters, but rather the analysis of the specific performance of the content on that page. This is not just a philosophical issue: the new section allows for a more in-depth study of each web page’s content and its performance, quickly offering suggestions for improving ranking.

Focus on crawl budget and keyword cannibalization

The first element presented is the summary assessment of site performance, analyzed in three tabs:

- Wasted Crawl Budget immediately shows the number of pages that generate no traffic or visits because they do not rank for even one keyword (and thus unnecessarily take up part of the time Google devotes to our site).

- Improve Your Pages tells us the improvement in traffic we can achieve by optimizing only a limited number of pages (those that need just a small boost to take off).

- Achievable Goal sets the figure of potential visits we could aspire to by working on the improvable pages and potential keywords.

Driving this overview is the realization that every website presents search engines with an outsized number of web pages, many of which could safely not exist at all. Google spends time and resources crawling these pages even when they ultimately yield no results: so why waste valuable Crawl Budget on pages that do not perform or are cannibalized, instead of focusing it on the best-performing resources?

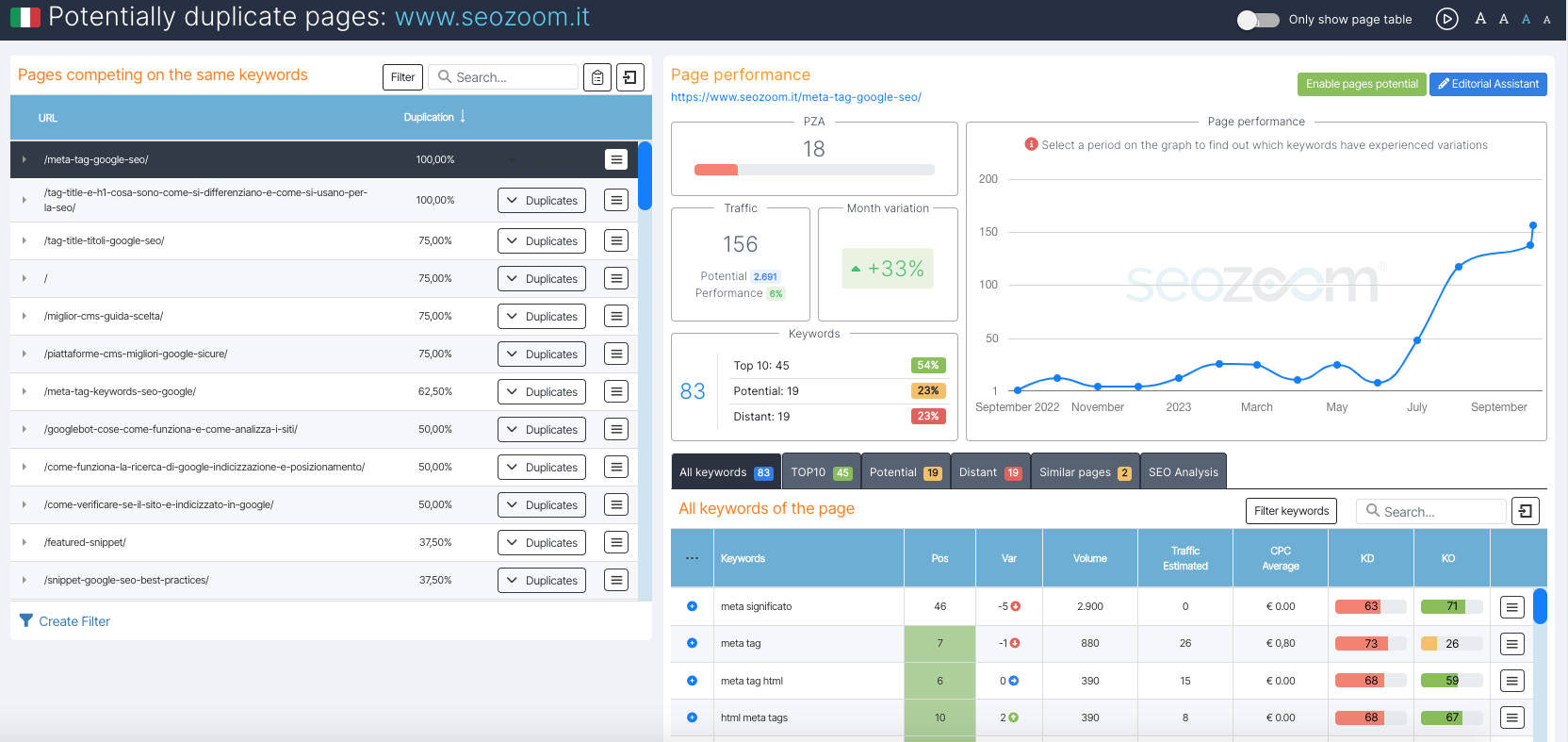

In addition, the section’s navigable tabs also include a specific focus on web pages at risk of cannibalization, another problem that can lead to loss of visibility and is a symptom of overall site management that is not particularly onpage SEO friendly, so to speak.

How SEOZoom’s onpage SEO tools work

The SEOZoom tools in the Pages section are available to users who have placed a domain in a monitored project.

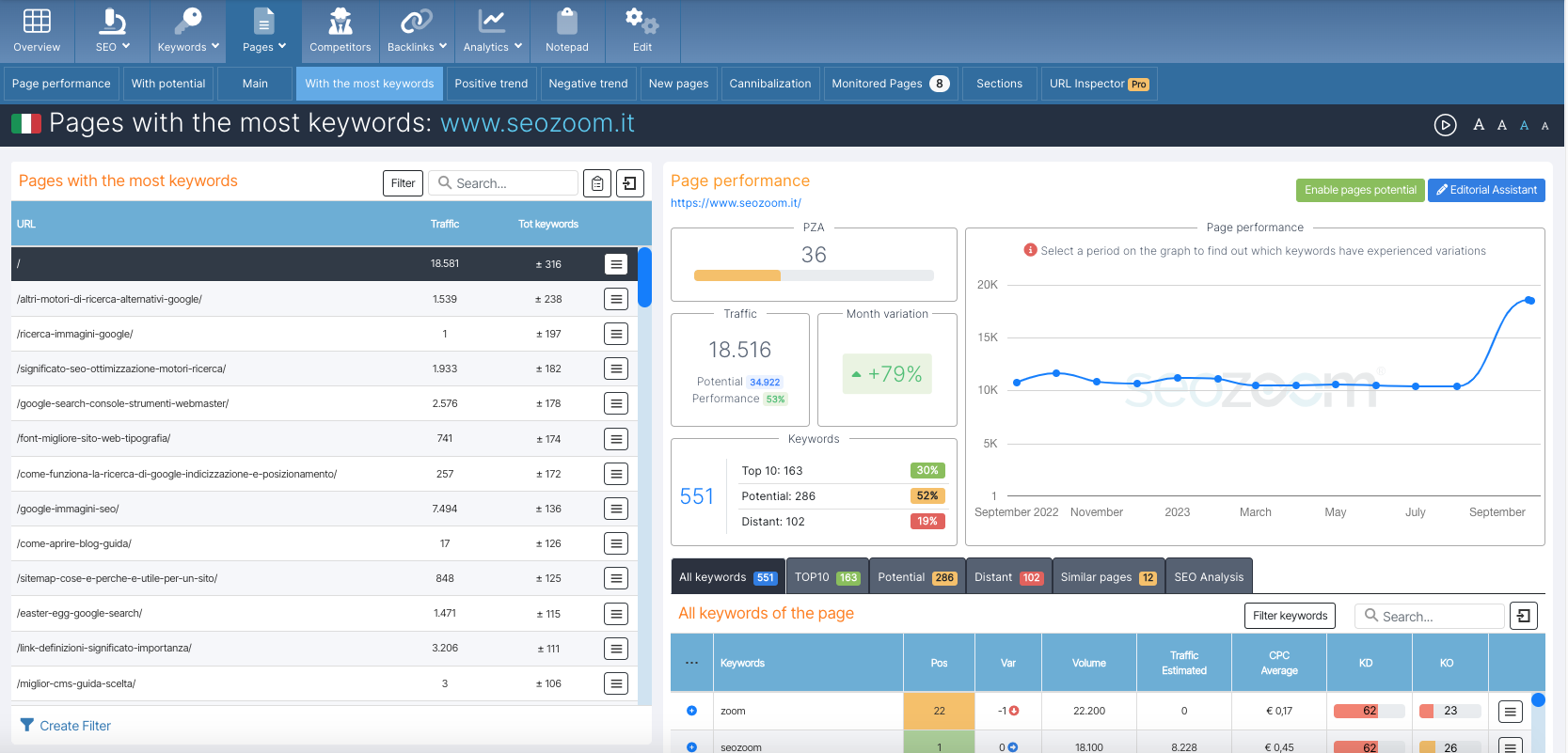

The area has 9 tabs, namely Page Performance (the opening and summary dashboard), With Potential, Main, With the most Keywords, Positive Trend, Negative Trend, New Pages, Cannibalization and Monitored Pages, which we will now look at in detail.

Optimizing content and pages with SEOZoom

The first screen that appears in Page Performance groups the site’s URLs based on a predetermined traffic range, indicating in each row the number of pages that fall within the range, the contribution brought to the site (aggregate volume of visits), the total number of keywords, the yield, and the crawl budget commitment of the entire group.

More specifically, the yield column indicates the percentage traffic volume that comes from each of these groupings, while the crawl budget column reveals how many resources are being devoted to crawling these pages, thanks to an estimate made by SEOZoom to help understand which sections of the website are employing a lot of resources and which are bringing in an estimated higher volume of traffic.

By combining the data from these two columns, we can easily and quickly understand whether, compared to the overall situation, the ratio of performance to resources is even or whether there are underperforming web pages that are nevertheless taking up a large number of resources.
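
The reasoning behind this comparison can be sketched with a few lines of Python. This is not SEOZoom’s calculation: it is a rough illustration that assumes crawl effort is proportional to the number of pages in each group, uses invented figures, and simply flags the groups whose share of pages exceeds their share of traffic.

```python
def performance_vs_crawl(groups):
    """Compare each group's share of traffic with its share of pages.

    Assumption: the number of pages is used as a crude proxy for crawl effort.
    """
    total_visits = sum(g["visits"] for g in groups)
    total_pages = sum(g["pages"] for g in groups)
    report = []
    for g in groups:
        traffic_share = g["visits"] / total_visits if total_visits else 0.0
        crawl_share = g["pages"] / total_pages if total_pages else 0.0
        report.append({
            "label": g["label"],
            "traffic_share": traffic_share,
            "crawl_share": crawl_share,
            "underperforming": crawl_share > traffic_share,
        })
    return report


# Hypothetical traffic ranges and figures used only for illustration.
GROUPS = [
    {"label": "1000+ visits", "pages": 10, "visits": 8000},
    {"label": "100-999 visits", "pages": 30, "visits": 1900},
    {"label": "1-99 visits", "pages": 160, "visits": 100},
]

for row in performance_vs_crawl(GROUPS):
    flag = "check" if row["underperforming"] else "ok"
    print(f'{row["label"]}: traffic {row["traffic_share"]:.0%}, '
          f'crawl {row["crawl_share"]:.0%} -> {flag}')
```

In this invented scenario the last group holds most of the pages while bringing in a tiny share of visits, which is the imbalance the two columns are meant to reveal.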

On the right, on the other hand, we can move on to the more detailed analysis of each individual web page of the site, discovering its performance in SERPs, the keywords placed, those that could be easier to bring to the first page and those that are far away, up to a specific SEO Analysis that identifies problems, errors and possible corrective actions to be taken onpage.

For example, to name just some of the information that can emerge, this analysis makes it possible to check for Intent Gap problems, that is, possible gaps in the focus of a web page compared with the pages that perform best on Google. More simply, it reveals the topic-relevant terms used by most competitors, along with secondary terms that are nonetheless relevant to the type of page (always indicating whether such words are present or absent in the page examined). It also highlights possible SEO errors that hinder the Google spider (which ends up lost among countless useless web pages rather than crawling the good pages of the site), such as excessive 301 redirects, pagination problems, a missing canonical tag and so on.

Thus, this section allows you to work comprehensively and thoroughly on the on-page SEO of the site, carry out further Crawl Budget optimization analysis, and make the Google spider happy by directing more attention to the important pages of the site.

Study site content to improve on-page SEO

The area dedicated to page management and on-page SEO is really very large and versatile, but most importantly, it is useful to be able to improve your SEO strategy with key information.

Pages with potential are those with keywords ranking on the second and third pages of Google that have a high number of monthly searches, and which can therefore generate more traffic through keyword optimization work. For example, by going deeper in the SEO Analysis tab, SEOZoom can point out which keywords are relevant to the search intent recognized by Google but missing from our text, thus giving us immediate advice for improving the content: we can consider inserting these keywords in the body of the page or in the heading tags (also checking competitors’ strategies in this regard).

Intuitively, then, the pages With the most Keywords are the ones with the largest number of keywords ranking on Google, while the Main pages are the ones that contribute the most in terms of traffic volume; in this case, the table allows for an even deeper analysis of these URLs, possibly also uncovering similar pages that might cause cannibalization or otherwise create ambiguity.
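
The selection criterion for pages with potential can be illustrated with a short Python sketch. It is not SEOZoom’s algorithm: it assumes ten results per page (so positions 11 to 30 correspond to the second and third pages), uses an arbitrary search volume threshold and works on invented ranking data.

```python
def keywords_with_potential(rankings, min_volume=1000):
    """Keep keywords ranking in positions 11-30 with enough monthly searches.

    Assumptions: ten results per SERP page, and an arbitrary volume threshold.
    Results are sorted by search volume, highest first.
    """
    hits = [
        row for row in rankings
        if 11 <= row["position"] <= 30 and row["volume"] >= min_volume
    ]
    return sorted(hits, key=lambda row: row["volume"], reverse=True)


# Hypothetical ranking data used only for illustration.
RANKINGS = [
    {"keyword": "ice cream recipe", "url": "/recipes", "position": 14, "volume": 5400},
    {"keyword": "homemade ice cream", "url": "/guide", "position": 3, "volume": 8100},
    {"keyword": "ice cream maker", "url": "/tools", "position": 22, "volume": 2900},
    {"keyword": "vegan ice cream base", "url": "/vegan", "position": 18, "volume": 90},
    {"keyword": "ice cream storage", "url": "/storage", "position": 45, "volume": 1600},
]

for row in keywords_with_potential(RANKINGS):
    print(row["url"], row["keyword"], row["position"], row["volume"])
```

The pages behind the surviving keywords are the ones where a small content or heading improvement is most likely to translate into new traffic.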

Detailed information about each page (and competitors)

The other available focuses are on Positive Trend and Negative Trend pages (which, as is easy to guess, are respectively the pages that are performing well and those that have shown a decline in performance) and on New Pages, i.e., URLs that have achieved new rankings in SERPs. This last feature can be very important for monitoring competitors: by setting up a competitor site as a monitored project, we can immediately identify the content with which that site is entering SERPs.

The Cannibalization tab shows in detail which URLs are competing for the same keywords, comparing web pages and reporting cases of internal competition within the site, which, as we know, can have a negative effect. It should be noted that the SEOZoom tool does not detect whether text is duplicated or copied, but simply that, according to the scan performed, the indicated pages rank for the same keywords on the same dates.
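
The underlying idea can be shown with a minimal Python sketch that groups ranking rows by keyword and reports the keywords for which more than one URL of the same site is ranked. This is only an illustration of the concept on invented data, not a description of how SEOZoom performs its scan.

```python
from collections import defaultdict


def find_cannibalization(rankings):
    """Return {keyword: [urls]} for keywords with more than one ranking URL."""
    urls_by_keyword = defaultdict(set)
    for row in rankings:
        urls_by_keyword[row["keyword"]].add(row["url"])
    return {
        keyword: sorted(urls)
        for keyword, urls in urls_by_keyword.items()
        if len(urls) > 1
    }


# Hypothetical ranking rows for a single site and a single date.
SITE_RANKINGS = [
    {"keyword": "on-page seo", "url": "/blog/on-page-seo-guide", "position": 8},
    {"keyword": "on-page seo", "url": "/blog/on-page-seo-checklist", "position": 12},
    {"keyword": "crawl budget", "url": "/blog/crawl-budget", "position": 5},
    {"keyword": "meta description", "url": "/blog/meta-description", "position": 9},
    {"keyword": "meta description", "url": "/blog/on-page-seo-guide", "position": 27},
]

for keyword, urls in find_cannibalization(SITE_RANKINGS).items():
    print(keyword, "->", ", ".join(urls))
```

As in the tool, a match here says nothing about duplicated text: it only signals that two pages are being ranked for the same query and deserve a closer look.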

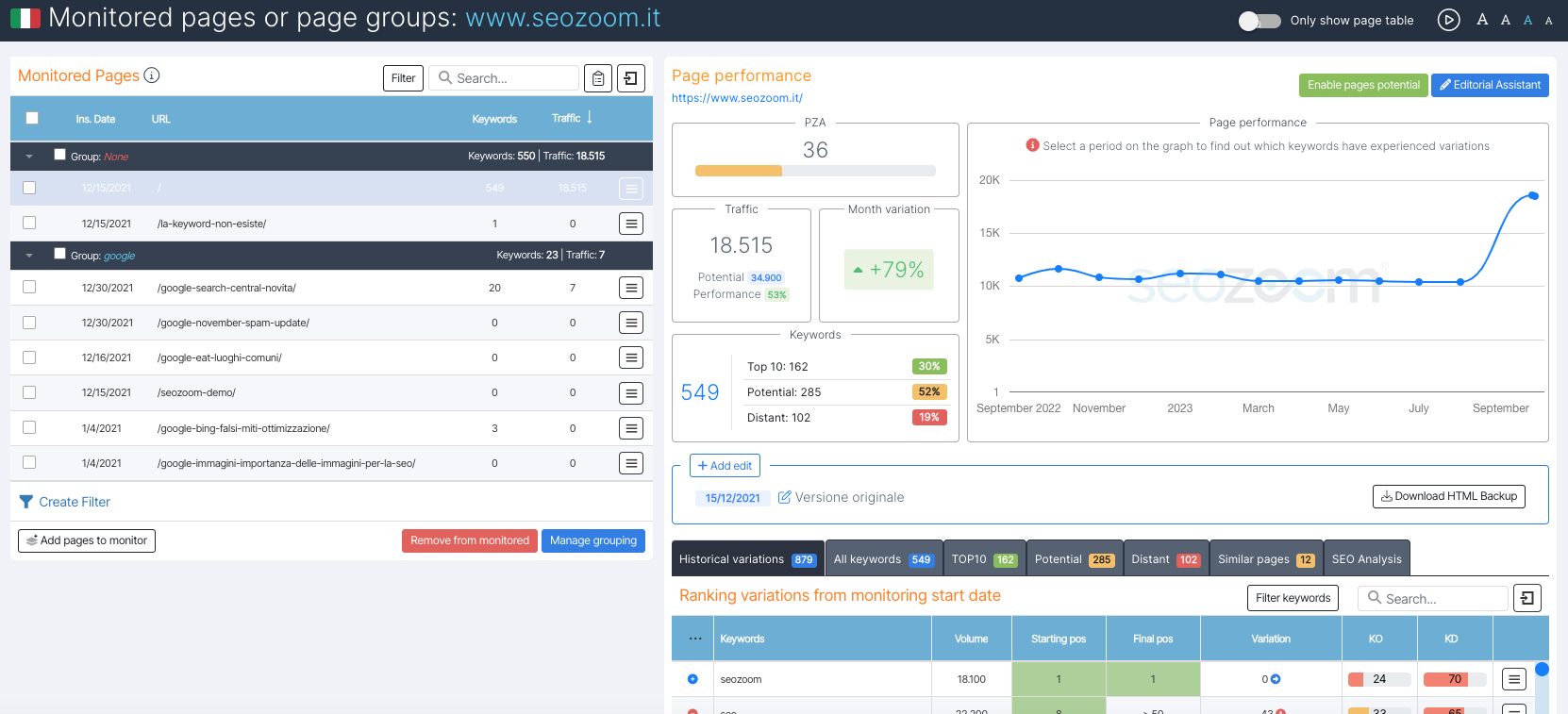

Finally, this section closes with “Monitored Pages,” a kind of notepad we use to tell SEOZoom directly which pages of the site we want to keep under specific control, in addition to the automatic monitoring: strategically, you can enter both the most relevant pages for your online business and those of competitors you need to watch closely, so that you always have them at your fingertips for a quick check.

In this area it is also possible to manage interventions on the site by asking SEOZoom to keep a backup of the previous version of the page we are about to modify, so that we can compare performance (with the table showing Position Changes from the date we started monitoring) and, in the worst cases, return to the state before the changes.