Page and site speed, the SEO best practices that are valid today

Perhaps it is true, as the German writer Gotthold Ephraim Lessing said (in a line echoed by a very famous commercial), that “the waiting for pleasure is itself pleasure,” but in everyday life we do not enjoy waiting all that much. Online, as users, we are especially intolerant of delays when we want to navigate a page or a site. A great deal of research over the past few years has shown that even a few milliseconds can make a difference and lead to site abandonment, a lower conversion rate, or even a drop in search engine rankings. After all, if Google puts so much emphasis on technical performance and page experience, even for ranking purposes, it must mean something, right? So let’s try to understand what site speed and page speed mean today from an SEO perspective and, more precisely, why on the modern web it is crucial to measure, optimize and monitor performance. That is how we can make sure resources load quickly and, consequently, offer users a quality experience, which can be decisive for the success of our online project.

What is page speed

Page speed can be described as a generic performance parameter that indicates how long a specific web page takes to fully load all of its visible content and complete its background processes. This includes loading elements such as text, images, videos, scripts and CSS files.

In terms of how fast a page’s content loads, page speed seems easy to understand: the faster the content loads, the better the page speed. However, as mentioned, page speed is a somewhat generic concept, because no single metric can define how fast a web page loads. Consequently, we cannot refer to one unambiguous value (as we do with the speed of a car); instead, we have to think in terms of how long it takes before users can interact with the site and the quality of the experience provided, which, in a nutshell, is also the approach Google took when it introduced Core Web Vitals.

What is site speed

The expression website speed, or website performance, on the other hand, refers to an overall measure of how fast the pages of an entire website load on average. This metric takes into account the performance of all the pages on the site (or at least a representative sample of page views), providing an overview of site speed.

Site speed vs. page speed: a crucial distinction

Site speed and page speed are thus two closely related concepts, but with important nuances. Site speed refers to the average loading speed of all the pages on a website: it is an aggregate indicator of the site’s overall performance.

On the other hand, page speed refers to the time it takes to load a specific web page. This parameter can vary greatly from one page to another within the same site, depending on various factors such as the amount and type of content, code structure, and server efficiency.
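To make the distinction concrete, here is a minimal Python sketch, assuming we already have a measured load time and a page-view count for each sampled page (the `PageSample` type and the figures are hypothetical). Site speed is computed as a page-view-weighted average, which is one reasonable reading of "average over a sample of page views"; the per-page ranking shows why page speed still has to be checked page by page.

```python
from dataclasses import dataclass


@dataclass
class PageSample:
    url: str
    load_time_s: float  # measured load time of this page, in seconds
    page_views: int     # views recorded for this page in the sample period


def site_speed(samples: list[PageSample]) -> float:
    """Page-view-weighted average load time across all sampled pages."""
    total_views = sum(s.page_views for s in samples)
    if total_views == 0:
        raise ValueError("the sample contains no page views")
    return sum(s.load_time_s * s.page_views for s in samples) / total_views


def slowest_pages(samples: list[PageSample], limit: int = 3) -> list[PageSample]:
    """Individual pages ordered from slowest to fastest (the page speed view)."""
    return sorted(samples, key=lambda s: s.load_time_s, reverse=True)[:limit]


# Hypothetical sample: a fast, busy home page hides a slow product page.
samples = [
    PageSample("/", 1.2, 800),
    PageSample("/product/123", 3.4, 150),
    PageSample("/blog/post", 2.0, 50),
]
print(f"site speed: {site_speed(samples):.2f} s")        # site speed: 1.57 s
print([p.url for p in slowest_pages(samples, limit=1)])  # ['/product/123']
```

A good site-wide average can therefore coexist with individual pages that are well above it.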

Why page speed is important: an overview

Before going into technical details, we can anticipate some considerations that make us understand why page speed is a relevant factor in the success of a website even today (or perhaps especially today, in an era dominated by mobile browsing).

Put simply, a fast site provides a smoother user experience and can help improve search engine rankings, conversion rates and user loyalty. By contrast, poorly performing sites that display slowly in the browser can drive users away: clicking a result in the SERPs and then waiting several seconds for the page content to appear is a frustrating experience.

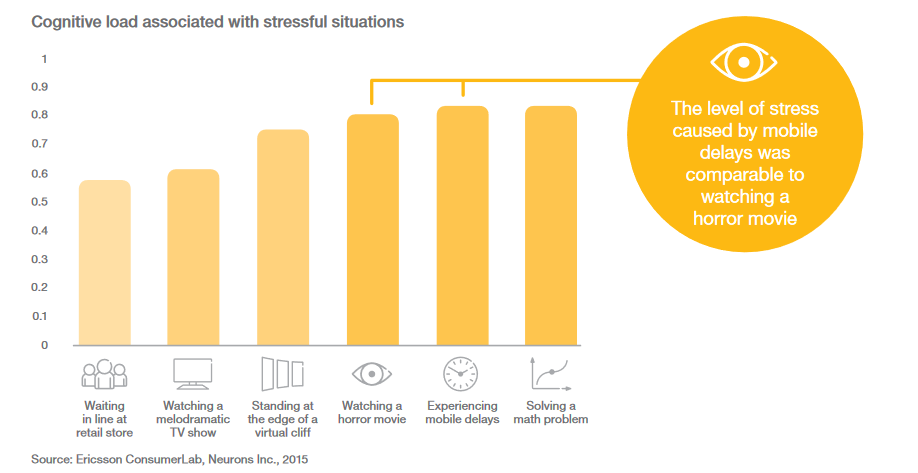

As the web.dev team explains, speed matters above all to people: according to one survey, the stress caused by speed delays on mobile devices is comparable to that of watching a horror movie or solving a math problem, and greater than that of waiting in a checkout line at a store.

When a site begins to load, there is a period during which users wait for content to appear, and until that happens there is no user experience to speak of. This phase is fleeting on fast connections, but on slower ones it can last several seconds, and users may run into more problems as page resources arrive slowly. When sites send a lot of code, browsers have to download several megabytes (possibly eating into the user’s data plan, which may be billed by consumption). Mobile devices have limited CPU power and memory, and they are often overwhelmed by what we might consider a small amount of unoptimized code. The result is poor performance and unresponsiveness, which inevitably leads users to abandon the site.
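A back-of-the-envelope calculation shows why those megabytes matter on slow connections. The Python sketch below is a deliberately rough lower bound (payload size divided by bandwidth, plus one round trip); it ignores TCP slow start, TLS handshakes, parsing and CPU time, all of which make real loads slower. The connection figures are illustrative assumptions, not measurements: with them, the same 3 MB page needs roughly 15 seconds on the slow link and about half a second on the fast one.

```python
def transfer_time_s(payload_mb: float, bandwidth_mbps: float, rtt_ms: float = 0.0) -> float:
    """Rough lower bound on download time, in seconds.

    payload_mb: size in megabytes (MB); bandwidth_mbps: link speed in megabits per second.
    Ignores TCP slow start, TLS, parsing and script execution.
    """
    if bandwidth_mbps <= 0:
        raise ValueError("bandwidth must be positive")
    megabits = payload_mb * 8  # 1 byte = 8 bits, so MB -> megabits
    return megabits / bandwidth_mbps + rtt_ms / 1000


# Illustrative connections: the same 3 MB page on a slow and on a fast link.
for label, mbps, rtt in [("slow mobile", 1.6, 150), ("fast broadband", 50, 20)]:
    print(f"{label}: {transfer_time_s(3, mbps, rtt):.1f} s")
```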

The effects of time on the site

Optimizing website speed is not optional but a must, especially if we want to beat slower competitors and, more importantly, if we do not want to become the turtles of our industry.

In recent years, many interesting statistics have been published about the relationship between website loading speed and business results, showing that technical performance plays an important role in the success of any online venture and that high-performing sites engage and retain users better than low-performing ones.

- The average web page load time is 2.5 seconds on desktop and 8.6 seconds on mobile devices, according to tooltester’s latest analysis of the top 100 web pages worldwide.

- Web pages on mobile devices take an average of 70.9 percent longer to load than on desktop.

- A loading speed of 10 seconds increases the likelihood of a mobile site visitor bouncing by 123% compared to a loading speed of one second.

- According to Google, the probability of a visitor leaving a page without interacting increases by 32% if the page load time goes from 1 second to 3 seconds.

- According to Google, the probability of a visitor leaving a page without interacting increases by 90% when the page load time goes from 1 second to 5 seconds.

- A study by Akamai showed that “47 percent of customers expect websites to load in two seconds or less” and that a delay of one hundred milliseconds can result in a 7 percent loss in conversions.

- A one-second delay on Amazon could cost $1.6 billion in sales each year.

- According to Pingdom, 78 percent of the top 100 retail Web sites take less than three seconds to load.

- Pinterest reduced perceived wait times by 40 percent and this increased search engine traffic and signups by 15 percent.

- COOK reduced average page load time by 850 milliseconds, increasing conversions by 7 percent, reducing bounce rate by 7 percent, and increasing pages per session by 10 percent.

- The BBC found that it lost an additional 10% of users for each additional second its site took to load.

- For Mobify, each 100 ms reduction in home page loading speed resulted in a 1.11% increase in session-based conversion, with an average annual revenue increase of nearly $380,000. In addition, a 100 ms decrease in the loading speed of the checkout page generated a 1.55% increase in session-based conversion, which in turn produced an average annual revenue increase of nearly $530,000.

- When AutoAnything cut the page load time in half, it experienced a 12% to 13% increase in sales.

- The furniture retailer Furniture Village audited the speed of its site and developed a plan to address the problems found, leading to a 20 percent reduction in page load time and a 10 percent increase in conversion rate.

Basically, our goal should be to become the fastest site in our niche, outperforming our direct competitors: a site or e-commerce platform that takes forever to load is bad for business. People don’t like to wait and will hit the back button in a split second, never to return; for every visitor who bounces we lose money and brand loyalty (and hand an assist to our competitors). Speed and efficiency are crucial nowadays, because people will not sit and wait for our page when there are alternatives (and they know the alternatives are there).

Conversely, by offering a fast site we encourage visitors to get comfortable and stay longer, and a smooth checkout process can improve conversion rates and build trust and brand loyalty.

How to define and measure page speed

In short, performance and page speed are key to providing a good user experience, and they affect many aspects of the relationship with users: retention, conversions, overall UX and, ultimately, the people themselves.

All of the statistics listed earlier, especially the economic losses and site abandonment, stem from the fact that users do not like being frustrated: after a negative experience, users go elsewhere, visit other websites and convert on competing sites. Google can track these behaviors (through bounce rate, average visit time, pogo-sticking and other signals) and analyze them to judge whether a page really deserves to rank where it does. Performance therefore also affects SEO, and we will soon see how and how much.

It is then time to start getting down into some more technical analysis, looking for techniques to monitor the performance of pages on our site and best practices for optimizing performance.

The premise, as mentioned, is that page speed is a multidimensional concept that can be defined and measured in a variety of ways, and among the most common metrics for understanding page speed are, according to Michael Wiegand:

- Page Load Time: The total time required to fully load a web page, including all visible content and background processes.

- Time to First Byte (TTFB): the time between requesting a web page and receiving the first byte of data from the server. This parameter reflects the efficiency of the server and the speed of the Internet connection.

- Time to Interactive (TTI): the time it takes for a web page to become fully interactive, i.e., when the user can begin to interact with the page content.

- Visual Speed Index (VSI): a measure of the speed at which the contents of a web page are displayed. This parameter reflects the speed at which the user can begin to read or interact with the page content.
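The last metric in the list is usually reported by tools such as Lighthouse and WebPageTest simply as Speed Index. A common way to define it is the area above the visual-progress curve: for every millisecond, add how much of the final viewport is still missing. The Python sketch below computes that from (timestamp, visual completeness) samples, assuming they come from a filmstrip of the load; real tools derive completeness from screenshots in more sophisticated ways.

```python
def speed_index(frames: list[tuple[float, float]]) -> float:
    """Area above the visual-progress curve, in milliseconds.

    frames: (timestamp_ms, completeness) pairs sorted by time, where completeness
    goes from 0.0 (blank) to 1.0 (final render). The page is assumed blank at t=0,
    and each completeness value holds until the next frame.
    """
    si = 0.0
    prev_t, prev_vc = 0.0, 0.0
    for t, vc in frames:
        if t < prev_t or not 0.0 <= vc <= 1.0:
            raise ValueError("frames must be time-ordered with completeness in [0, 1]")
        si += (t - prev_t) * (1.0 - prev_vc)  # time spent at the previous completeness
        prev_t, prev_vc = t, vc
    if prev_vc < 1.0:
        raise ValueError("the last frame must be visually complete (1.0)")
    return si


# Both pages finish rendering at 1000 ms, but the progressive one shows half
# of its content at 500 ms, so it gets a lower (better) score.
progressive = [(500, 0.5), (1000, 1.0)]
all_at_once = [(1000, 1.0)]
print(speed_index(progressive))  # 750.0
print(speed_index(all_at_once))  # 1000.0
```

This is why two pages with the same total load time can feel very different to users.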

Each of these parameters offers a different perspective on page speed and can be used to identify and solve specific performance problems. Today we can also rely on the aforementioned Core Web Vitals, which were introduced precisely to make the measurement of key aspects of performance, namely visual stability, interactivity and loading (the latter measured by Largest Contentful Paint), more objective.
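Core Web Vitals come with published thresholds, and Google assesses them at the 75th percentile of page loads. The Python sketch below rates a list of field samples against the "good" and "poor" boundaries for LCP, INP and CLS (INP replaced FID as the interactivity metric in March 2024). The nearest-rank percentile is a simplification chosen for clarity; CrUX and other tools may compute percentiles differently.

```python
import math

# (good upper bound, poor lower bound): values <= good are "good",
# values > poor are "poor", anything in between "needs improvement".
THRESHOLDS = {
    "LCP": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "INP": (200, 500),    # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),   # Cumulative Layout Shift, unitless score
}


def p75(values: list[float]) -> float:
    """75th percentile using the nearest-rank method."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric: str, values: list[float]) -> str:
    """Classify the 75th percentile of the samples for one metric."""
    good, poor = THRESHOLDS[metric]
    value = p75(values)
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


print(rate("LCP", [1800, 2100, 2400, 5200]))  # good
print(rate("INP", [150, 250, 300, 600]))      # needs improvement
print(rate("CLS", [0.05, 0.3, 0.3, 0.3]))     # poor
```

Note that one very slow load (5200 ms in the LCP example) does not change the rating: the assessment looks at the typical experience of most users, not at the worst case.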

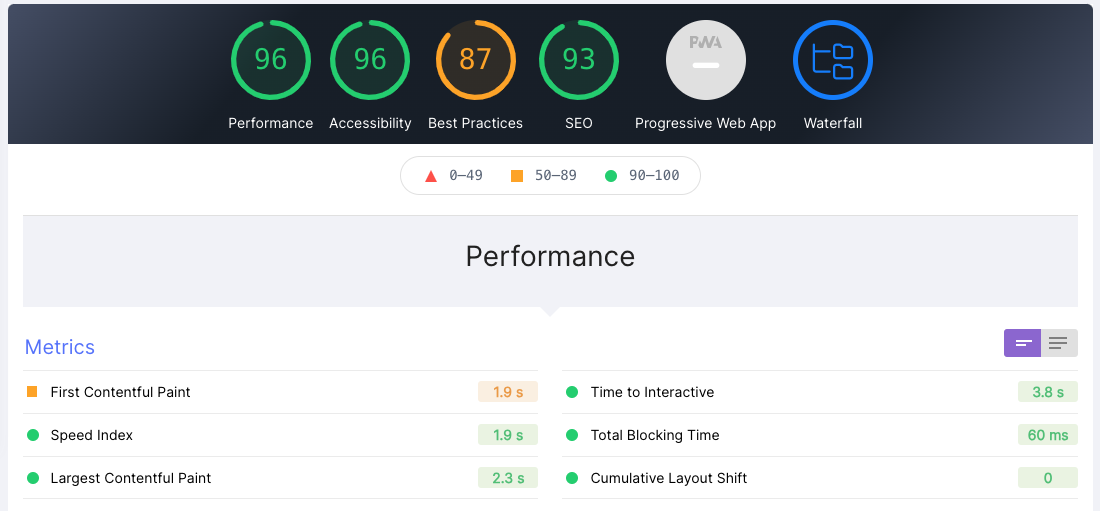

We can evaluate the speed of a page with Google’s PageSpeed Insights, which incorporates data from CrUX (Chrome User Experience Report) and also reports two important speed metrics: First Contentful Paint (FCP) and DOMContentLoaded (DCL). In this sense, page speed can be described as “page load time” (the time it takes to fully display the content of a specific page) or as “time to first byte” (how long it takes the browser to receive the first byte of information from the web server).

SEOZoom’s tools for analyzing page speed

To make it easier to monitor technical page performance, SEOZoom offers a number of tools and functions for checking the status of a website’s optimization, both in terms of detecting problems (first of all, the SEO Spider) and in terms of actual performance.

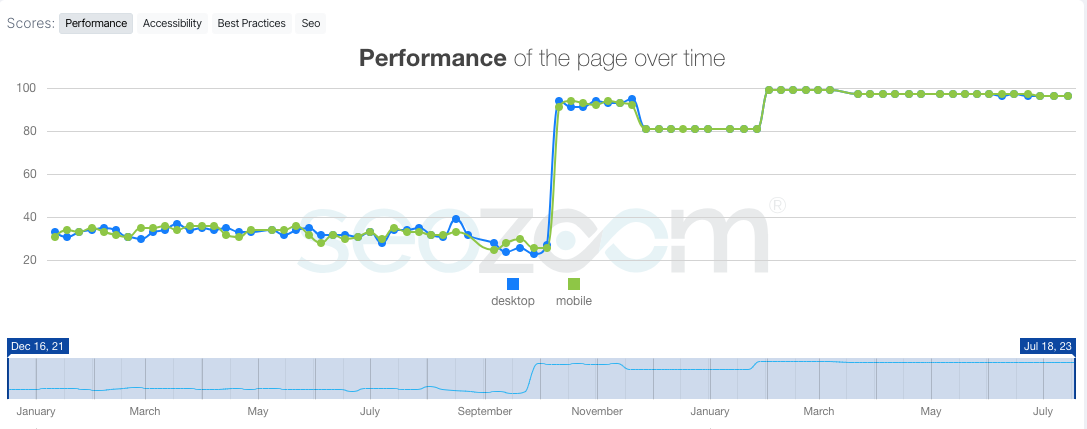

In particular, the projects area includes the Page Speed test, based on data from Google’s tools, which shows how the site behaves on some of the main technical performance parameters, based on weekly monitoring of the values detected by Google Lighthouse.

To be precise, SEOZoom scans the project site from its servers located in Italy, applying the rules Google uses to evaluate performance and to compose the Lighthouse report, which can be found in the developer tools of Google Chrome.

The first dashboard shows the results of the Performance section, which analyzes the speed of the website’s home page and provides all the information needed to improve the project’s responsiveness, with a score out of 100; the other tabs let you explore the analysis in more depth.

Immediately below the graph, SEOZoom provides a more in-depth PageSpeed analysis on some crucial performance aspects, available for both desktop and mobile versions of the site, with particular reference to the Core Web Vitals values. By browsing the submenu we get additional information, statistics, and suggestions for optimizing website speed and performance, useful for guiding our work and prioritizing interventions.

In addition, we can ask SEOZoom to monitor up to five page types (such as a category page, tag page, single product page or article page): just click on “Add Template Page” and specify the page type and the URL on which to run speed and usability tests. In this way we can analyze the speed of each type of template page on the website and also monitor its performance trend over time.

What are the factors that slow down page speed and performance

But what, specifically, are the factors that negatively affect page loading speed? We have already mentioned some of them, but no list can (inevitably) take into account one crucial variable, the quality of the end user’s connection, whereas we have more or less complete control over the factors below.

- The hosting service

Hosting services play a crucial role in website performance because they provide the server where the domain resides, so their performance directly affects that of the website. Factors affecting host performance range from server configuration to facilities, from uptime to the specifications of the machines running the servers. By choosing good, reliable hosting we can ensure that the web server processes requests fast enough to handle all the activity on the site, and we eliminate one of the elements that can undermine speed optimization.

- The website theme

Themes control the design of the website, from layouts and fonts to color schemes, but they are not all created equal: some are much cleaner and more optimized than others, with smaller file sizes that make them faster to load. This usually also means fewer frills (think animations or specialized design templates) than themes that push harder on those aspects, which makes them a good compromise if we are aiming for better loading performance.

- Large files

Several files can become large, such as HTML, CSS and, above all, JavaScript files: every byte and kilobyte we remove from them has a positive effect on page performance. As we know, one of the main suspects behind slow sites is JavaScript, the code many modern sites rely on to be dynamic, since it enables a wide range of animations and transitions. But JavaScript is also heavy, and excessive use makes a page heavy and slow to load. For example, it can cause a poor FID score in Core Web Vitals (a metric since replaced by Interaction to Next Paint, INP), because processing these JavaScript files takes longer, especially on mobile devices, whose processing power is more limited than that of desktop computers.

- Poorly written code

Incorrect and poorly optimized code can lead to many problems, such as JavaScript errors or invalid HTML markup, also causing significant performance drops; therefore, it is wise to check and clean up the code, remove any errors, and delete any extra lines that do not add significant value.

- Images and videos

As we know, heavy images are often the real culprit behind a slow-loading page, because they make up a considerable portion of the page weight (in kilobytes or megabytes) and also affect, for example, the LCP score in Core Web Vitals (especially when we present a large, unoptimized header image). This is why SEO optimization of images plays a huge role in increasing site speed.

- Too many plugins and widgets

If we publish the site on WordPress, we probably use plugins and widgets to add functionality: plugins are one of the secrets of WordPress, one of the components that makes it one of the best CMSs. But using too many plugins is counterproductive, because they slow down the site: each of these elements we install adds a bit of code to the page, making it heavier than it should be. Therefore, unoptimized plugins can also play a significant role in slowing down a Web site.

- Absence of a CDN

CDN stands for Content Delivery Network and identifies an interconnected network of servers and related data centers distributed in various geographical locations, which can help page loading performance, especially if we have an international audience. In summary, a CDN saves static Web site content, such as CSS and image files, to various servers in the network so that these files are quickly served to people who live near one of the servers in the network, making this process faster and more efficient.

- Excess redirects

Redirects are a natural part of any website, and there is nothing wrong with using them. However, we must always remember that redirects can affect page loading performance if they are not configured correctly. In most cases they will not slow the page down significantly, but redirect chains cause problems and add time: the advice is therefore to reduce the number of steps needed to reach the final destination, avoiding unnecessary redirects and thus reducing the load on the site.
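Reducing the steps means pointing every old URL straight at its final destination. The Python sketch below assumes we have the site’s redirects as a simple source-to-target map (for example exported from a crawl or from the server configuration; the map format is hypothetical). It follows each chain, counts the hops, stops on loops, and produces a flattened map in which every redirect is a single hop.

```python
def resolve(redirects: dict[str, str], url: str, max_hops: int = 10) -> tuple[str, int]:
    """Follow redirects from url and return (final_url, number_of_hops)."""
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or overly long chain reaching {url}")
        seen.add(url)
    return url, hops


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Rewrite every rule so it points directly at the final destination."""
    return {source: resolve(redirects, source)[0] for source in redirects}


# Hypothetical chain left behind by successive migrations: /a -> /b -> /c -> /d
rules = {"/a": "/b", "/b": "/c", "/c": "/d"}
print(resolve(rules, "/a"))  # ('/d', 3)
print(flatten(rules))        # {'/a': '/d', '/b': '/d', '/c': '/d'}
```

After flattening, a visitor landing on `/a` pays for one redirect instead of three.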

Best practices for optimizing page speed

Moving on to practical interventions, several techniques can reduce page loading time, following the best practices identified by Moz:

- Enable compression.

- Minimize JavaScript, CSS and HTML.

- Reduce redirects.

- Remove JavaScript that blocks rendering.

- Take advantage of browser caching.

- Improve server response times.

- Use a content delivery network (CDN).

- Optimize images and video.

We can rearrange these tips by how difficult they are for a typical professional to implement and by their impact on site speed:

- Low-difficulty, low-impact interventions

This “category” includes operations that are within everyone’s reach but have a relative impact on improving performance:

- Optimizing images and videos

As we said before, multimedia assets are a frequent problem for sites, but there are various optimization techniques and, on WordPress, specific plugins that can automatically reduce the size of every image uploaded to new or existing content. Making sure every image on a page is properly sized and compressed saves a surprising amount of loading time. We can also switch to more modern and efficient file formats, such as AVIF.

- Minimize JavaScript, CSS and HTML

Code minification is another quick win, and again there are many tools that perform this task, essentially removing all unnecessary characters (such as whitespace, line breaks and comments) to save a few kilobytes. Applying these changes may require a developer, but anyone can paste the code into these tools and send the minified version to the team doing the work.
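To show what minification actually removes, here is a deliberately naive Python sketch for CSS: it strips comments, collapses whitespace and trims spaces around braces, semicolons, commas and `>`. It is an illustration only, not a substitute for real minifiers: it does not understand strings, so a `/*`, `;` or `{` inside quoted content (for example in a `content: "..."` declaration) would be mangled.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier for illustration; not safe for quoted strings."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove /* comments */
    css = re.sub(r"\s+", " ", css)                         # collapse runs of whitespace
    css = re.sub(r"\s*([{};,>])\s*", r"\1", css)           # trim spaces around punctuation
    css = css.replace(";}", "}")                           # last semicolon in a block is optional
    return css.strip()


source = """
/* page header */
h1 {
  color: red;
  margin: 0 auto;
}
"""
small = minify_css(source)
print(small)  # h1{color: red;margin: 0 auto}
print(len(source), "->", len(small), "characters")
```

Spaces after colons are left alone on purpose, because in selectors such as `div :first-child` the space is significant.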

- Remove JavaScript that blocks rendering

Browsers must build a DOM tree by parsing HTML before they can render a page: if the browser encounters a script during this process, it has to stop and execute it before continuing. Google recommends avoiding or minimizing render-blocking JavaScript, and we might consider migrating to a tag management platform such as Google Tag Manager, which can take the JavaScript burden off the pages and place scripts in a container where they load at whatever pace they need without compromising the rest of the page’s content or functionality.
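A first step is simply finding the culprits. The Python sketch below, using only the standard library’s `html.parser`, lists external `<script src>` tags that have neither `async` nor `defer` and are not `type="module"` (module scripts are deferred by default): these are the scripts that pause HTML parsing. It is a simplification: inline scripts are ignored, and whether a given script can safely be deferred still has to be checked by hand.

```python
from html.parser import HTMLParser
from typing import Optional


class BlockingScriptFinder(HTMLParser):
    """Collects the src of classic external scripts that block HTML parsing."""

    def __init__(self) -> None:
        super().__init__()
        self.blocking: list[str] = []

    def handle_starttag(self, tag: str, attrs: list[tuple[str, Optional[str]]]) -> None:
        if tag != "script":
            return
        attributes = dict(attrs)  # boolean attributes such as async map to None
        src = attributes.get("src")
        if not src:
            return  # inline scripts are out of scope for this sketch
        if "async" in attributes or "defer" in attributes:
            return
        if attributes.get("type") == "module":
            return  # module scripts are deferred by default
        self.blocking.append(src)


def find_blocking_scripts(html: str) -> list[str]:
    finder = BlockingScriptFinder()
    finder.feed(html)
    finder.close()
    return finder.blocking


page = """<head>
<script src="/js/app.js"></script>
<script async src="/js/analytics.js"></script>
<script defer src="/js/menu.js"></script>
<script type="module" src="/js/widgets.js"></script>
</head>"""
print(find_blocking_scripts(page))  # ['/js/app.js']
```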

- Interventions of medium difficulty and medium impact

The three tips below may be a bit more difficult to execute, depend on who manages the existing CMS or web server, and will probably require consulting IT staff and/or a web developer to complete them effectively.

- Reduce redirects

Each time a page redirects to another page, the visitor has to wait longer for the HTTP request-response cycle to complete, especially when chains form, each adding a further slowdown to the page load. Problematic server-side redirects are fairly easy to spot, even with our SEO Spider, but some sites use more complicated client-side redirect schemes based on JavaScript, which require more specialized intervention, especially if those JS files also affect the site’s functionality in other ways.

- Enabling compression

It is wise to use file compression software (such as Gzip) to reduce the size of CSS, HTML and JavaScript files larger than 150 bytes. Enabling compression in Apache or IIS is a fairly simple process, but it requires access to the servers and .htaccess files, control over which IT departments are often reluctant to hand over to marketers.
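In practice compression is switched on in the web server (for example with Apache’s mod_deflate) rather than written by hand, but the logic is simple enough to sketch. The Python example below gzips text responses larger than 150 bytes and keeps the original when compression would not help; the set of compressible content types is an assumption for illustration.

```python
import gzip

MIN_SIZE_BYTES = 150  # smaller bodies are sent as-is
COMPRESSIBLE_TYPES = {"text/html", "text/css", "text/javascript", "application/javascript"}


def maybe_compress(body: bytes, content_type: str) -> tuple[bytes, bool]:
    """Return (payload, compressed?) for a response body."""
    mime = content_type.split(";")[0].strip().lower()  # drop "; charset=..." parameters
    if mime not in COMPRESSIBLE_TYPES or len(body) <= MIN_SIZE_BYTES:
        return body, False
    compressed = gzip.compress(body)
    if len(compressed) >= len(body):
        return body, False  # incompressible data: send it unchanged
    return compressed, True


css = b"body{margin:0;padding:0}\n" * 200  # repetitive text compresses very well
payload, used_gzip = maybe_compress(css, "text/css; charset=utf-8")
print(used_gzip, len(css), "->", len(payload), "bytes")
```

A server doing this for real would also check the browser’s `Accept-Encoding` request header and send `Content-Encoding: gzip` with the compressed body.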

- Taking advantage of browser caching

Managing browser caching is a good way to avoid forcing the browser to reload the entire page when a visitor returns to our site, because it lets the browser store various resources (style sheets, images, JavaScript files and more). This is easy to set up, especially for website resources that do not change very often and if we have access to the .htaccess file; otherwise we can use plugins or dedicated extensions for the various CMS platforms that manage these settings.
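Caching rules ultimately become `Cache-Control` response headers. The Python sketch below assumes a hypothetical build setup in which static files get a content hash in their name whenever they change (for example `app.3f9a1c2b.css`): those can be cached for a year and marked `immutable`, other static assets get a shorter lifetime, and HTML is always revalidated with `no-cache`. The exact lifetimes are illustrative choices, not recommendations from the source.

```python
import re

# Hypothetical naming scheme: a hex content hash of 8+ chars before the extension.
FINGERPRINTED = re.compile(r"\.[0-9a-f]{8,}\.(?:css|js|png|jpe?g|webp|avif|svg|woff2)$")
STATIC_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".avif", ".svg", ".woff2")

ONE_YEAR = 365 * 24 * 3600  # seconds
ONE_DAY = 24 * 3600         # seconds


def cache_control(path: str) -> str:
    """Pick a Cache-Control header value for a request path."""
    if FINGERPRINTED.search(path):
        # The file name changes whenever the content does, so it can be cached long-term.
        return f"public, max-age={ONE_YEAR}, immutable"
    if path.endswith(STATIC_EXTENSIONS):
        return f"public, max-age={ONE_DAY}"
    # HTML and everything else: the browser may store it but must revalidate first.
    return "no-cache"


for p in ["/static/app.3f9a1c2b.css", "/img/logo.png", "/", "/about.html"]:
    print(p, "->", cache_control(p))
```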

- High-difficulty, high-impact intervention

Finally, the highest level of technical complexity, but also the most significant results, concerns:

- Improving server response time

The optimal server response time is generally considered to be under 200 ms. However, server response time is affected by the amount of traffic the site receives, the resources each page uses, the server software and the hosting solution. To improve it, we need to find and fix the bottlenecks that can overload the server, such as slow database queries, slow routing or insufficient memory, for example by optimizing the databases that power the site, monitoring PHP usage or even moving to a more reliable web hosting service.
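To check a page against the 200 ms target, we can time a single request. The Python sketch below uses the standard library’s `http.client` and measures the time until the status line and headers arrive, which approximates time to first byte. It also includes DNS lookup and TCP/TLS setup, because the connection is opened inside `request()`; moreover, a single sample from one location is noisy, so in practice we would repeat the measurement and look at a percentile.

```python
import http.client
import time
from urllib.parse import urlsplit

TARGET_MS = 200  # threshold cited in the text


def measure_ttfb_ms(url: str, timeout: float = 10.0) -> float:
    """Approximate time to first byte for one GET request, in milliseconds."""
    parts = urlsplit(url)
    conn_cls = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = conn_cls(parts.hostname, parts.port, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        start = time.perf_counter()
        conn.request("GET", path)      # connects (DNS + TCP + TLS) and sends the request
        response = conn.getresponse()  # returns once the status line and headers are read
        elapsed_ms = (time.perf_counter() - start) * 1000
        response.read()                # drain the body before closing
        return elapsed_ms
    finally:
        conn.close()


def meets_target(ms: float, target_ms: float = TARGET_MS) -> bool:
    return ms < target_ms


# Example (requires network access):
# ms = measure_ttfb_ms("https://www.example.com/")
# print(f"{ms:.0f} ms", "OK" if meets_target(ms) else "too slow")
```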

- Using a CDN

Adopting a CDN can require a lot of time, money and skills that the average marketer or consultant does not necessarily have. However, Google itself often recommends using a content delivery network, which gives users faster and more reliable access to the site.

Page speed and SEO

From all that has been written, we should already have an idea of why page speed is still important today and how much it impacts the management of a site, even beyond the ranking aspect (which we will elaborate on shortly).

Of course, success in organic search (still) depends on content and, nowadays, on producing useful content that is more likely to meet users’ needs, please Google and therefore rank well. However, technical SEO, if well taken care of, can also earn good rankings and grow the existing organic audience, by ensuring that people enjoy a genuinely fast, error-free and smooth browsing experience.

In addition, page load speed and site speed in general can directly influence various aspects of site management, which, in turn, indirectly impact SEO, starting with the crawl budget. A low page speed means that search engine crawlers will be able to crawl fewer pages using the allocated crawl budget, and this may negatively affect indexing and thus ranking (some pages may not be indexed and, as a result, may not appear in search results).

Another aspect concerns the bounce rate, i.e., the percentage of visitors who leave the site after viewing only one page, which is often linked precisely to the difficulties of accessing the desired resource in an acceptable time. A high bounce rate can be interpreted by search engines as a signal that the site is not meeting users’ expectations, which could have a negative impact on SEO.

Why Google demands more speed and faster loading times

There is another aspect to consider: speed is good not only for users but also for Google. Websites are often slow because they are inefficient: they may load too many large files, fail to optimize their multimedia content or not use modern technologies to serve their pages, which forces Google to consume more bandwidth, allocate more resources and spend more money on its operations.

Across the web, every millisecond and every byte quickly add up, and even simple configuration, process or code changes can make websites much faster without drawbacks. This may be one of the reasons Google is so direct in wanting to “educate” site owners about performance: a faster web is better for users and significantly reduces Google’s operating costs, so Google is likely to keep rewarding faster sites.

Page speed is a ranking factor: Google Page Speed and beyond

Speaking of promoting a culture of performance, as early as 2010 Google included site speed, understood as a measure of how quickly a website responds to web requests, among the signals used in its search ranking algorithms.

Then, in July 2018, came a turning point in the way Google evaluates and ranks websites, one that emphasized the importance of page speed for user experience: the Page Speed system, one of the ranking systems used by Google Search (and later merged into the broader Page Experience), was extended to mobile browsing as well.

Initially called Page Speed Update, this algorithm brought a significant change and officially made page speed a ranking factor for mobile searches too, extending it beyond desktop searches. The reason was the growing use of mobile devices to access the Internet, which led Google to recognize the need to ensure a fast and smooth browsing experience on these devices as well.

More broadly, this algorithm marked a step forward in Google’s mission to make the web faster and more accessible, sending a clear message to website owners that page speed is important and should be taken into account when designing and developing a website.

Then, in 2021, Google rolled out the oft-cited Page Experience update, confirming that page speed and user experience are intertwined; likewise, the focus on Core Web Vitals clearly states that speed is an essential ranking factor, and site owners can now rely on shared metrics and standards when working on performance optimization.

Content relevance and pertinence still remain the priority

Going back to the 2018 information, the “Speed Update” only affected pages that delivered the “slowest experience” to users, which Google estimated concerned only a small percentage of queries. The system applied the same standard to all pages, regardless of the technology used to build them, and the announcement stressed that the intent of the search query remained a very strong signal. For this reason, a slow page could still rank highly if it had great, relevant content: “outstanding” but slow content would still appear higher in the SERPs than mediocre but fast content, while, all other factors being equal, a fast page would rank better than a slow one.

The speed test tools

Google’s final recommendation was addressed to developers, who were asked to think carefully about how their site’s performance affected the user experience and to consider a variety of user experience metrics. Although, Mountain View said, no tool directly indicates whether a page is affected by the new ranking factor introduced with the speed update, there are still resources that can be used to analyze the performance of a site and its pages.

In detail, Google cited the Chrome User Experience Report, a public dataset of key user experience metrics for major web destinations, based on Chrome users in real-world conditions; Lighthouse, an automated tool and part of the “Chrome Developer Tools” project for analyzing the quality of web pages (in terms of performance, accessibility and beyond); and Google PageSpeed Insights, a tool that measures the speed of a page according to the Chrome UX Report and suggests possible fixes to optimize performance.

What speed means for a website: the value of performance

The web.dev website offers many useful resources for the complex work of optimizing site performance and, specifically, page load times, aimed first and foremost at reducing user abandonment. Its guide starts from a general consideration: performance plays a significant role in the success of any online business, because high-performing sites engage and retain users better than low-performing ones, as we also noted about the importance of having a mobile-friendly site.

This may sound rather trite, but it is yet another reminder not to underestimate the value of performance as an on-site SEO factor and to focus on optimizing metrics to improve the user experience, using tools such as Google Lighthouse. In addition, to “stay fast” we need to define and apply a “performance budget” that can be referenced at any time to understand the health of the project.

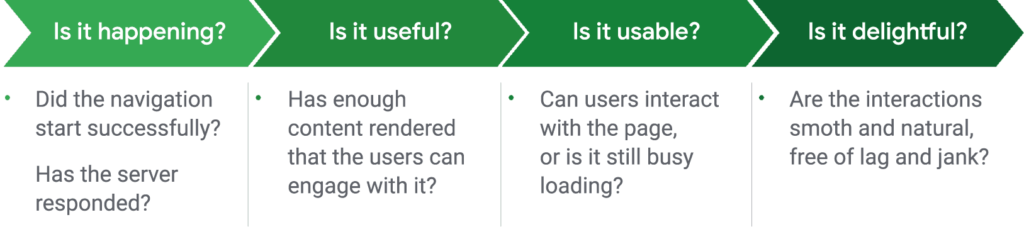

But what does speed really mean for a website? For developers, it is not just a matter of “the website loads in x.xx seconds or the like”: loading is not a single moment in time, because “speed is an experience that no single metric can fully capture.” In fact, there are multiple moments during the loading experience that “can affect whether a user perceives it as fast, and if you focus on just one of them, you may lose sight of and overlook bad experiences that occur during the rest of the time.”

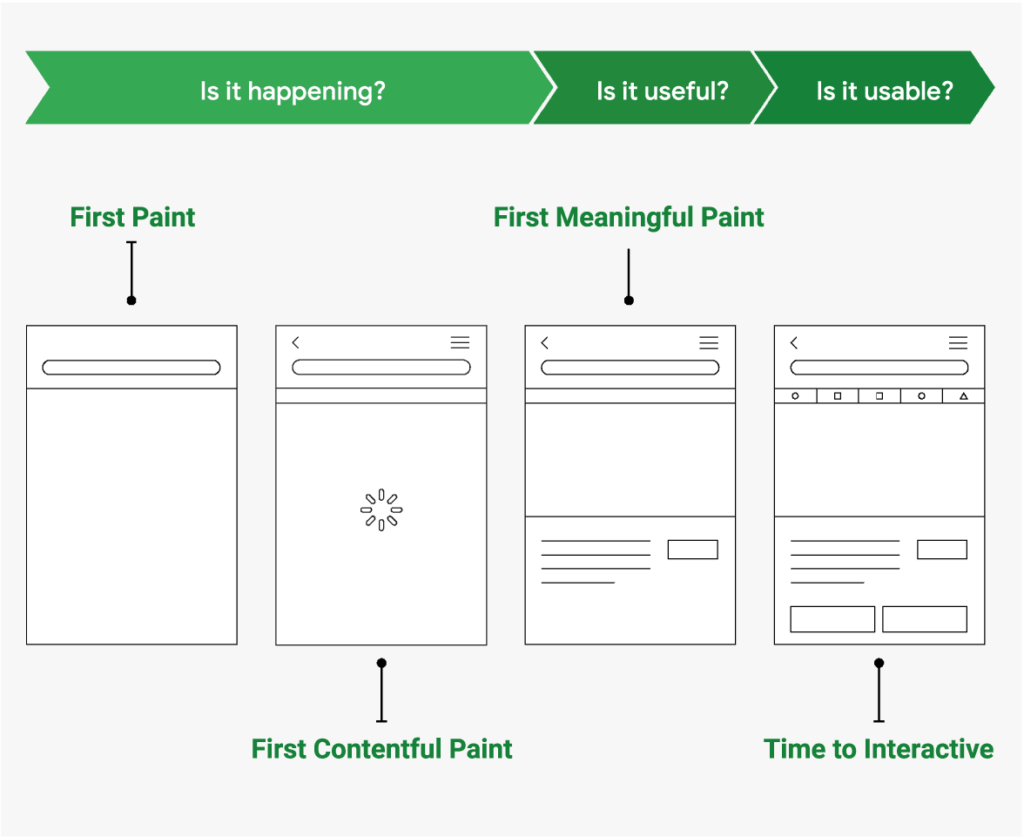

Therefore, rather than measuring loading with a single metric, it is more appropriate to time each moment of the experience that affects the user’s perception of loading speed, trying to understand what feedback the user receives at specific points, such as those shown in the image (taken from web.dev).

Using the right metrics to evaluate the time factor

Traditional performance metrics such as load time or DOMContentLoaded time are unreliable, because the moment they fire may or may not correspond to that feedback. Over time, therefore, additional metrics have emerged to understand when a page provides this feedback to its users, such as first paint, first contentful paint, first meaningful paint and time to interactive, as summarized in this image (again from web.dev), which obviously predates the introduction of Core Web Vitals.

It is important to understand the different insights offered by these metrics and to determine which ones are important for the UX offered by your site: some brands even define additional custom metrics specific to the expectations people have of their service, and the blog cites the case of Pinterest (where users want to see images), which has created a custom metric, Pinner Wait Time, that combines Time to Interactive and Above the Fold Image load times.

Why speed matters

That technical background having been made, let’s come to the hard data to explain (once again!) the value of speed. As we know, today’s consumers increasingly rely on mobile devices to access digital content and services, and an analysis of their site traffic can make this concept even more evident.

In Italy, to cite some fresh data, Audiweb’s latest analysis revealed that as of April 2023, “more than 39 million users, or 91.6 percent of the population over 18 years of age, have surfed from smartphones,” and that Italians spent 88 percent of their total time online on these devices, or 2 hours 37 minutes per person.

Users evaluate usability, experience and speed

It’s not just the numbers that are evolving, though, because consumers have become progressively more demanding, and “when they evaluate the experience on your site, they’re not just comparing you to your competitors, they’re evaluating you against the best-in-class services they use every day.”

Delays in mobile loading are not only frustrating, but can also hurt a site’s results, because speed and conversions are directly related: according to some studies, a delay of as little as one second in mobile load times can affect conversion rates by up to 20 percent.

With the theory covered, the guide moves on to practical, technical tips for improving site performance and conversions, starting with how to measure speed by collecting “lab or field data” to evaluate page performance. The tools it mentions for testing site speed again include Lighthouse (which runs a series of audits on a page and generates a report on the URL’s performance), the Chrome User Experience Report (CrUX), PageSpeed Insights, and the developer tools built into the Chrome browser.
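Field data is usually summarized as a percentile rather than an average: Google's Core Web Vitals assessment uses the 75th percentile of page loads, with Largest Contentful Paint considered good up to 2.5 seconds and poor above 4 seconds. The Python sketch below applies those thresholds to a list of LCP samples. The nearest-rank percentile is a simplification (CrUX aggregates histograms, so its figures may differ slightly), and the sample values are invented.

```python
import math

# Core Web Vitals thresholds for Largest Contentful Paint, in milliseconds.
LCP_GOOD_MS = 2500
LCP_POOR_MS = 4000


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of
    samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(math.ceil(pct / 100 * len(ordered)), 1)  # 1-based rank
    return ordered[rank - 1]


def classify_lcp(samples_ms: list[float]) -> str:
    """Label a page from its field LCP samples, judged at the 75th percentile."""
    p75 = percentile(samples_ms, 75)
    if p75 <= LCP_GOOD_MS:
        return "good"
    if p75 <= LCP_POOR_MS:
        return "needs improvement"
    return "poor"


field_lcp = [1200, 1800, 2100, 2400, 2600, 3900, 5200, 1500]  # invented samples
print(percentile(field_lcp, 75), classify_lcp(field_lcp))  # 2600 needs improvement
```

Judging at the 75th percentile means a page passes only if most visits, not just the fastest ones, are fast enough, which is exactly the gap between a good lab run and real users on slower devices.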

Set a performance budget to maintain the standard

Optimizing a site’s performance is a relatively simple process; maintaining the level achieved in the medium to long term is harder: an internal Google study reveals that 40 percent of sites see their web performance worsen after 6 months. According to the web.dev authors, one way to solve this problem is to set a “performance budget”, that is, a maximum limit that each metric affecting site performance must not exceed, as in the attached example (again from web.dev).
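A budget only helps if it is checked regularly, for example on every release. The following Python sketch compares measured values with a budget and lists the metrics that exceed it; the metric names and limits are hypothetical placeholders, to be replaced with whatever a team actually measures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BudgetResult:
    metric: str
    measured: float
    limit: float

    @property
    def over_budget(self) -> bool:
        return self.measured > self.limit


def check_budget(measured: dict[str, float], budget: dict[str, float]) -> list[BudgetResult]:
    """Compare every budgeted metric with its measured value.
    A metric missing from `measured` counts as over budget (infinite value),
    so a broken measurement cannot silently pass."""
    return [
        BudgetResult(metric, measured.get(metric, float("inf")), limit)
        for metric, limit in budget.items()
    ]


# Hypothetical budget and measurements for one page.
budget = {"lcp_ms": 2500, "tti_ms": 5000, "page_weight_kb": 500}
measured = {"lcp_ms": 2300, "tti_ms": 6100, "page_weight_kb": 740}

failures = [r for r in check_budget(measured, budget) if r.over_budget]
for r in failures:
    print(f"{r.metric}: {r.measured} exceeds budget of {r.limit}")
```

Treating a missing measurement as a failure is a deliberate choice: a check that passes when data is absent would hide exactly the gradual regressions a budget is meant to catch.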

Practical interventions on the site

Going into detail, the lengthy article then dwells on several central points of the work of improving site performance: from image optimization tips, through guidance on handling JavaScript and CSS, to very practical advice on aspects such as optimizing third-party resources, working on web fonts, and network quality.

Ultimately, this comprehensive guide aims to encourage everyone who works on the Web (developers, SEO specialists and others) to develop a performance culture, pursuing speed not as an end in itself but as a way to create a better ecosystem for all users.

How fast does a site need to be? What is a benchmark for loading?

Having established that there is no absolute figure that always applies, webmasters, developers and site owners are (or should be, given the relevance of the topic) beleaguered by the question: “How fast should a page load in 2023?”

At a general level, the short and true answer is “as fast as possible”, but for most websites a precise benchmark helps to better understand performance and the work needed to optimize it: according to Adam Heitzman, and as Google has also confirmed to some extent, the number to keep in mind is 3 seconds, beyond which loading starts to be considered slow.

With this figure as a reference, we know what level to aim for, so we can tell when the many efforts needed to improve page speed have reached their goal and can move on to other areas to optimize.

The optimal loading time is under 3 seconds

Web pages load “in pieces”, says Heitzman, so it can happen that “sometimes a photo is loaded last, or a form first, or an ad will load at one point before finally jumping to where it belongs on the page”.

This happens because “all these different elements that make up a web page must be loaded, and this contributes to the overall loading time”. Understanding these aspects is crucial for improving loading times and for meeting two requirements that can determine the success of an online project:

- on one hand there are consumers, who are impatient and do not appreciate long waits;

- on the other there is Google, which is pressing on the accelerator (in every sense) and gives ever more weight to speed and user experience through Core Web Vitals and Page Experience.

It is no coincidence that, as a Deloitte study pointed out, even small improvements in a site’s speed can have a strong impact on conversions.

How to assess loading time

We know that there are several tools we can use to evaluate a site’s overall page loading time, but “in our experience Google PageSpeed Insights is the most comprehensive free tool to get started,” the article says.

Just open PageSpeed Insights and enter the URL to examine to get a full analysis of the content and an evaluation of all the elements that weigh on loading, with advice on improving performance. In a few seconds we find out what could be slowing down the site and what to do to increase speed, with separate analyses for mobile and desktop.
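The same analysis can also be automated through the PageSpeed Insights API (v5), for example to check key pages for both mobile and desktop. This Python sketch only builds the request URL and reads the performance score from the JSON response; the helper names are our own, and an API key (the `key` parameter) is recommended for automated or frequent queries.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_url(page_url: str, strategy: str = "mobile", api_key: Optional[str] = None) -> str:
    """Build a PageSpeed Insights API request for one URL and one device type."""
    params = {"url": page_url, "strategy": strategy, "category": "performance"}
    if api_key:
        params["key"] = api_key
    return f"{PSI_ENDPOINT}?{urllib.parse.urlencode(params)}"


def fetch_report(page_url: str, strategy: str = "mobile") -> dict:
    """Call the API and return the parsed JSON (needs network access)."""
    with urllib.request.urlopen(psi_url(page_url, strategy), timeout=120) as resp:
        return json.load(resp)


def performance_score(report: dict) -> int:
    """Lighthouse reports the score from 0 to 1; the PSI page shows it as 0-100."""
    return round(report["lighthouseResult"]["categories"]["performance"]["score"] * 100)


for strategy in ("mobile", "desktop"):
    print(psi_url("https://example.com/", strategy))
```

With network access, `performance_score(fetch_report("https://example.com/", "mobile"))` would return the mobile lab score; field data, when available for the URL, appears under the response's `loadingExperience` key.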

Devices do behave differently: according to some research, the average web page takes 87 percent longer to load on mobile devices than on desktop. The reasons lie in mobile processors (on average slower and less powerful than desktop ones) and in device-specific CSS rules.

Optimizing and speeding up high-performance pages first

A practical tip from Heitzman is to focus the initial optimization effort on the set of high-performing pages that are central to the site’s results: it is easier to improve the speed of a single page than to act on the whole site at once, and these interventions can immediately have a positive impact on our key pages.
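One simple way to pick those key pages is to weigh traffic against slowness. The scoring below is our own assumption, not Heitzman's method: it multiplies monthly sessions by the seconds each page exceeds the 3-second target, so busy, slow pages rise to the top. The page data is invented.

```python
from typing import NamedTuple


class PageStats(NamedTuple):
    url: str
    monthly_sessions: int
    load_time_s: float


def prioritize(pages: list[PageStats], target_s: float = 3.0) -> list[PageStats]:
    """Order pages by an illustrative 'excess waiting' score: monthly sessions
    times the seconds each load exceeds the target. Pages already under the
    target score zero and end up last."""
    def score(page: PageStats) -> float:
        return page.monthly_sessions * max(page.load_time_s - target_s, 0.0)

    return sorted(pages, key=score, reverse=True)


pages = [
    PageStats("/contact", 800, 2.1),
    PageStats("/blog/post", 3_000, 9.0),
    PageStats("/", 50_000, 4.2),
    PageStats("/product-a", 12_000, 7.5),
]
for page in prioritize(pages):
    print(page.url)
```

A very slow but rarely visited page ends up behind a moderately slow page with heavy traffic, which matches the idea of starting where the improvement reaches the most users.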

Once we have run the analysis on PageSpeed Insights and obtained the roadmap of interventions to improve loading time, it is time to roll up our sleeves; in most cases, the expert warns, we will “need the help of a developer to remove certain code that could slow down the site, and there are so many things to consider”.

Often, however, there is also “low-hanging fruit”: easy interventions that can be carried out in a short time with good impact. The list includes best practices for improving loading time that we have already discussed, starting with images, where there are at least three aspects to evaluate.

A simple way to avoid sacrificing quality for speed is lazy loading, which initially loads only the images actually visible in the part of the screen the user is viewing, instead of loading every image on the page at once.
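Modern browsers support lazy loading natively through the `loading="lazy"` attribute on `<img>` elements. As a minimal sketch, assuming images are rendered by a server-side template written in Python, the function below marks the first few images as eager and the rest as lazy; `eager_count` is a guess about what sits above the fold and would need tuning per template, since images in the first viewport should not be lazy-loaded.

```python
from html import escape


def img_tags(images: list[tuple[str, str]], eager_count: int = 2) -> list[str]:
    """Render <img> tags in page order, deferring those below the fold.

    images: (src, alt) pairs in the order they appear on the page.
    eager_count: how many images are assumed to be above the fold (a guess).
    """
    tags = []
    for index, (src, alt) in enumerate(images):
        loading = "eager" if index < eager_count else "lazy"
        tags.append(f'<img src="{escape(src)}" alt="{escape(alt)}" loading="{loading}">')
    return tags


gallery = [("hero.jpg", "Shop front"), ("team.jpg", "Our team"), ("map.png", "How to reach us")]
print("\n".join(img_tags(gallery, eager_count=1)))
```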

Equally simple and relevant is reducing the “physical” weight of images, one of the basic best practices of image optimization. Beyond the standard procedures, Heitzman has direct advice for those who manage a responsive site: it is always better to serve images only at the size that is really needed. Even though images adapt to the dimensions set by the site, a lower-resolution file loads faster. In other words, the author says, don’t just leave it to your website to do the work, because that wouldn’t be great for speed.
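In HTML, the usual way to serve only the size that is needed is the `srcset` attribute (together with `sizes`), which lets the browser choose among several renditions of the same image. The Python sketch below builds a `srcset` value and shows the basic selection logic, the smallest rendition that still covers the displayed width times the device pixel ratio; the `name-width.ext` file naming is a hypothetical convention, and real browsers apply their own heuristics.

```python
def srcset(base_name: str, widths: list[int], ext: str = "jpg") -> str:
    """Build a srcset value such as 'photo-480.jpg 480w, photo-960.jpg 960w'.
    The '<name>-<width>.<ext>' naming is a hypothetical convention."""
    return ", ".join(f"{base_name}-{w}.{ext} {w}w" for w in sorted(widths))


def pick_width(widths: list[int], css_px: int, pixel_ratio: float = 1.0) -> int:
    """Smallest rendition that still covers the displayed size on this screen;
    falls back to the largest one if none is big enough."""
    needed = css_px * pixel_ratio
    large_enough = [w for w in sorted(widths) if w >= needed]
    return large_enough[0] if large_enough else max(widths)


widths = [960, 480, 1920]
print(srcset("photo", widths))
print(pick_width(widths, 400), pick_width(widths, 400, 2.0))
```

A 400-pixel slot gets the 480-pixel file on a standard screen and the 960-pixel file on a double-density one, instead of always downloading the 1920-pixel original.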

Finally, the image file format can also affect loading time: in principle, next-gen formats such as JPEG 2000 or WebP may be preferable to the more classic and heavier .PNG or .JPG.

For projects already under way with many images, a practical solution could be to convert at least the largest images first, assess the actual improvement, and then proceed progressively with the remaining resources.
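To decide where to start, it helps to rank the existing JPEG and PNG files by weight. This Python sketch assumes we already have a mapping from file path to size in bytes (the optional helper reads it from a local folder); the actual conversion to WebP or another format would be done with an image tool of choice and is not shown.

```python
from pathlib import Path

LEGACY_FORMATS = {".jpg", ".jpeg", ".png"}


def conversion_queue(files: dict[str, int], top_n: int = 10) -> list[str]:
    """Return up to top_n JPEG/PNG paths, heaviest first."""
    legacy = [
        (size, path)
        for path, size in files.items()
        if Path(path).suffix.lower() in LEGACY_FORMATS
    ]
    legacy.sort(reverse=True)
    return [path for _, path in legacy[:top_n]]


def sizes_on_disk(root: str) -> dict[str, int]:
    """Map every file under root to its size in bytes."""
    return {str(p): p.stat().st_size for p in Path(root).rglob("*") if p.is_file()}


# Invented sizes; with real files, use conversion_queue(sizes_on_disk("wp-content/uploads")).
files = {
    "img/hero.jpg": 850_000,
    "img/logo.png": 40_000,
    "img/team.PNG": 1_200_000,
    "img/icon.svg": 3_000,
    "img/banner.webp": 300_000,
}
print(conversion_queue(files, top_n=2))
```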

Other tips to optimize performance

Making the site faster also involves, to a lesser extent, small interventions on on-page content: for example, internal links and breadcrumbs can improve the user experience and, at the same time, make it easier for search engine crawlers to understand the site, helping both users and crawlers reach content more quickly.

Each redirect from one page to another adds an extra HTTP request and therefore extra waiting time: for optimal management, it is better to reduce the number of redirects and send users straight to the final resource, because “every second counts!”.
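Redirect chains often accumulate unnoticed, for example when an old URL points to another URL that was itself moved later. Assuming the redirects can be exported as a simple source-to-target map (the format here is hypothetical), the Python sketch below follows each chain to its final destination so that every rule can point there in a single hop, and raises an error on loops.

```python
def final_destination(start: str, redirects: dict[str, str], max_hops: int = 10) -> tuple[str, int]:
    """Follow the redirect map from `start`; return (final URL, number of hops)."""
    url, hops, seen = start, 0, {start}
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or overly long chain from {start}")
        seen.add(url)
    return url, hops


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Rewrite every rule to point straight at its final destination."""
    return {source: final_destination(source, redirects)[0] for source in redirects}


rules = {"/promo": "/old", "/old": "/older-new", "/older-new": "/new"}
print(final_destination("/promo", rules))  # ('/new', 3)
print(flatten(rules))
```

Internal links are worth updating to the final URL as well, so that users do not hit even the single remaining redirect.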

If we are using a mediocre or low-quality web hosting provider, we should consider switching to a more robust hosting solution: this change alone can have a huge impact on page loading times.

The value of the work on speed

According to various studies, most websites have a page load time of around 6 seconds, twice the 3 seconds suggested as a “milestone” for good performance.

In fact, unless we start from scratch, bringing load time down to 3 seconds or less is a very difficult goal to achieve, requiring a lot of work and a lot of time.

The final advice is to focus on these elements and check the speed of the pages at least once a month to monitor any changes that might occur, and that will almost inevitably occur when we add new web pages and plug-ins to the site, remembering that all these efforts can be repaid in terms of conversions and user satisfaction.

Managing website speed to avoid wasting time

Few things are more frustrating than a page that loads slowly after we click on it: a slow website kills conversions and hurts its rankings in search, because time is the most precious resource, and we need to watch out for the “silent killers” that might hit our site and cause dissatisfaction and disappointment for our users.

And so, Irina Weber on Search Engine Watch published an interesting analysis of the 10 silent killers that are hardest to spot on a site: seemingly small or hidden elements that do great damage.

- Caching issues

Browser caching is very important for returning visitors. When a user accesses our website for the first time, their browser stores files such as images, CSS and JavaScript for a specified period; on the next visit, browser caching lets these stored files be served quickly without downloading them again.

Fewer round trips mean faster page loads and a better user experience: according to Weber, “caching can definitely help you accelerate your website, but it’s not without problems” and, if not set up correctly, it “may compromise user interaction”.

If the site runs on WordPress, we can use a caching plugin to improve performance; otherwise, we can add Cache-Control and entity tag (ETag) headers to the HTTP responses, which reduce the need for visitors to download the same files from the server twice and cut down the number of HTTP requests.
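In practice these headers are set by the web server, the CDN or a plugin, but the logic is easy to see in a framework-agnostic Python sketch: the server derives an ETag from the file content and, when the browser sends it back in `If-None-Match`, replies `304 Not Modified` with an empty body. The one-year `max-age` is an assumption suited to versioned static assets (for example, file names that change with each release), not to HTML pages; the `respond` function is hypothetical.

```python
import hashlib
from typing import Optional


def make_etag(body: bytes) -> str:
    """Strong ETag derived from the content itself."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, if_none_match: Optional[str] = None,
            max_age: int = 31_536_000) -> tuple[int, dict[str, str], bytes]:
    """Return (status, headers, body) for a static file request.

    Cache-Control lets the browser reuse the file for max_age seconds without
    asking; after that, the ETag lets it revalidate and get a cheap 304.
    """
    etag = make_etag(body)
    headers = {"Cache-Control": f"public, max-age={max_age}", "ETag": etag}
    if if_none_match is not None:
        # The header may list several tags, and weak ones carry a W/ prefix.
        sent = [tag.strip().removeprefix("W/") for tag in if_none_match.split(",")]
        if "*" in sent or etag in sent:
            return 304, headers, b""
    return 200, headers, body


css = b"body { color: #222; }"
status, headers, _ = respond(css)
print(status, headers["ETag"])
status, _, payload = respond(css, if_none_match=headers["ETag"])
print(status, payload)  # 304 b''
```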

- Database overload

A database overload can be a silent killer when it comes to website performance. WordPress sites often risk a database overloaded with multiple post revisions, disabled plugins, saved drafts and more: “trackbacks and pingbacks have no practical use in WordPress”, says the author, so it is better “to disable them both because they clog up your database and increase the number of requests”. Another good practice is “deleting other junk files like spam folders and recycle bin, transients and database tables that can slow down your website”; again, some plugins make all these operations easy to perform.
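For those who want to understand what such plugins do, the Python sketch below runs a cleanup query on an in-memory SQLite table that imitates a few columns of WordPress's `wp_posts` table, where revisions have `post_type = 'revision'` and auto-drafts and trashed items are marked by `post_status`. It is an illustration only: a real WordPress database runs on MySQL or MariaDB, may use a different table prefix, and also stores related rows (such as post metadata) that a plugin or WP-CLI would clean up too, so always back up before touching it.

```python
import sqlite3

# 'revision' is a post_type; 'auto-draft' and 'trash' are post_status values.
CLEANUP_SQL = """
DELETE FROM wp_posts
WHERE post_type = 'revision'
   OR post_status IN ('auto-draft', 'trash')
"""


def cleanup(conn: sqlite3.Connection) -> int:
    """Delete revisions, auto-drafts and trashed posts; return rows removed."""
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(CLEANUP_SQL)
    return cur.rowcount


# Demo on an in-memory table that mimics a few columns of wp_posts.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE wp_posts (ID INTEGER PRIMARY KEY, post_type TEXT, post_status TEXT)")
conn.executemany(
    "INSERT INTO wp_posts (post_type, post_status) VALUES (?, ?)",
    [("post", "publish"), ("revision", "inherit"), ("revision", "inherit"),
     ("post", "auto-draft"), ("page", "trash"), ("page", "publish")],
)
print(cleanup(conn))  # 4
```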

- Obsolete CMS

Running an outdated version of the CMS can not only slow down the site but also expose it to security vulnerabilities; likewise, keeping plugins and any other software up to date contributes to faster loading times.

- Excessive use of social media scripts

Social media have become a crucial part of any online project: “regardless of the size of your site, you still have to connect social media and make it easier for users to share your posts”.

But excessive use of scripts and plugins from various platforms can compromise performance, so the advice is to limit them to the social networks we actually use and to find alternative ways to plan and automate social activity.

- Use of chatbots

Chatbots are “great for handling customer requests”, and according to one study “69% of customers want to use chatbots to accelerate communication with a brand”. There is a pitfall, however: these systems can hurt website speed “in case the script is not implemented properly and take longer to load”.

First, we must make sure “that the chatbot loads itself asynchronously”, that is, “any action performed on the website – such as starting a conversation with a customer or sending pings – must be addressed by external servers”. So we need code that enables asynchronous loading, and we should check the chatbot scripts for problems with Google’s PageSpeed tools.

Here too, there are software tools and services that make it simple to configure chatbots.

- Broken links

We know the problems that broken links cause, in terms of annoyance for site visitors and crawling difficulties for Google (we have also included them among the most frequent errors on sites), but we must not overlook the bandwidth they waste.

Irina Weber says she has carried out “a detailed analysis for the site of a customer, detecting many 404 errors; after resolving them, the average loading time per user fell from seven to two seconds and there was a sharp reduction in the bounce rate”.
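A basic check for broken links can be scripted with the Python standard library: extract the links from a page, resolve relative URLs, and flag those that return 404 or 410. In this sketch the status checker is passed in as a function, so the demo runs offline; `http_status` sends a HEAD request, which some servers handle poorly, in which case a GET fallback would be needed.

```python
import urllib.request
from html.parser import HTMLParser
from typing import Callable
from urllib.error import HTTPError
from urllib.parse import urljoin

BROKEN = {404, 410}


class LinkCollector(HTMLParser):
    """Collect absolute URLs from <a href> attributes."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href and not href.startswith(("#", "mailto:", "tel:", "javascript:")):
            self.links.append(urljoin(self.base_url, href))


def http_status(url: str) -> int:
    """HEAD request (redirects are followed); network errors other than
    HTTP status codes are left to the caller."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except HTTPError as err:
        return err.code


def broken_links(html: str, base_url: str,
                 status: Callable[[str], int] = http_status) -> list[str]:
    """Return the unique links on the page that answer 404 or 410."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    unique = dict.fromkeys(collector.links)  # de-duplicate, keep order
    return [url for url in unique if status(url) in BROKEN]


# Offline demo: a fake status lookup instead of real HTTP requests.
page = '<a href="/ok">ok</a> <a href="/gone">gone</a> <a href="#top">top</a> <a href="/gone">again</a>'
fake = {"https://example.com/ok": 200, "https://example.com/gone": 404}
print(broken_links(page, "https://example.com/blog/", fake.__getitem__))
```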

- Render-blocking JavaScript

Each time “the website is loaded into the browser, it sends calls to each script in a queue: the queue of these scripts must be empty before the site appears in the browser; if this is very long it can slow down the pages without allowing visitors to view the site completely”. These scripts and stylesheets are called render-blocking JavaScript and CSS resources, precisely because they block rendering.

To speed up the loading of web pages, Google recommends eliminating render-blocking resources; “before removing them, identify which scripts cause problems using PageSpeed Insights”, the author suggests, recalling that many “traffic and conversion analysis platforms are installed using JavaScript code that can slow down your site”, although lighter tools exist that can be embedded asynchronously in the page.
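To see which resources might be blocking rendering before running a full audit, a rough first pass can scan the page's `<head>`. This Python sketch flags external scripts without `async` or `defer` (module scripts are deferred by default) and stylesheets unless they apply only to print; it is a heuristic, not a replacement for PageSpeed Insights, and it ignores inline scripts and anything outside `<head>`.

```python
from html.parser import HTMLParser


class RenderBlockingFinder(HTMLParser):
    """Heuristic scan of <head> for resources that typically block first render."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking: list[str] = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "head":
            self.in_head = True
        elif not self.in_head:
            return
        elif tag == "script" and "src" in a:
            # Classic external scripts block parsing unless async or defer is set;
            # type="module" scripts are deferred by default.
            if "async" not in a and "defer" not in a and a.get("type") != "module":
                self.blocking.append(a["src"])
        elif tag == "link" and "stylesheet" in (a.get("rel") or "").lower().split():
            # Here only print-only stylesheets are treated as non-blocking.
            if (a.get("media") or "all").lower() != "print":
                self.blocking.append(a.get("href") or "")

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_render_blocking(html: str) -> list[str]:
    finder = RenderBlockingFinder()
    finder.feed(html)
    return finder.blocking


page = """<html><head>
<link rel="stylesheet" href="/main.css">
<link rel="stylesheet" href="/print.css" media="print">
<script src="/analytics.js"></script>
<script src="/chat-widget.js" async></script>
<script type="module" src="/app.js"></script>
</head><body><script src="/footer.js"></script></body></html>"""
print(find_render_blocking(page))  # ['/main.css', '/analytics.js']
```

In this example, adding `async` or `defer` to the analytics script would remove it from the list, while the main stylesheet usually stays render-blocking on purpose, since the page cannot be painted correctly without it.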

- Use of Gravatar

The Gravatar service “offers convenience and easy customization to your user base, but there is a drawback, speed”. This aspect is not really perceptible on smaller websites, “but if you have a large website with many comments on the blog, you will notice a slowdown”.

There are some options to fix this problem:

- Disable the gravatars in WordPress.

- Remove comments that have no value.

- Use caching systems for Gravatar.

- Reduce the size of Gravatar images.

- Lazy load comments with WP Disable.
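Reducing the size of Gravatar images is mostly a matter of requesting the right dimension: the service accepts an `s` (size) parameter in pixels, with 80 px as the default, and a `d` parameter for the fallback image. The Python sketch below builds such a URL from a commenter's email, using the long-documented MD5 hash of the trimmed, lowercased address; the 48 px size is only an example, and a theme showing avatars on high-density screens might request twice the displayed size.

```python
import hashlib
from urllib.parse import urlencode


def gravatar_url(email: str, size: int = 48, default: str = "identicon") -> str:
    """Avatar URL at the size actually displayed (in px), instead of a larger
    default image that the browser then has to scale down."""
    normalized = email.strip().lower()
    digest = hashlib.md5(normalized.encode("utf-8")).hexdigest()
    return f"https://www.gravatar.com/avatar/{digest}?{urlencode({'s': size, 'd': default})}"


print(gravatar_url(" Reader@Example.com "))
```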

- Invalid HTML and CSS

“If you stop using invalid HTML and CSS codes, you will improve the web page rendering time and the overall performance of the site,” says Irina Weber. So we must make sure to “create HTML and CSS in line with W3C standards if you want browsers to interpret your site more regularly”, using validation tools or third-party validation packages.

- Use of AMP

“Everyone knows that AMP – Accelerated Mobile Pages – is a Google project created to speed up Web pages on mobile devices by adding a badge next to snippets in mobile devices”, but according to Weber there are “some challenges” still open.

On the one hand, creating AMP pages “improves the performance of the website” because it “removes all the dynamic features that slow down websites”; on the other, it means you “change the design of your website and offer less functionality to your visitors, and this can lead to a reduction in conversions,” as a case study confirms. The well-known hosting service Kinsta “saw its mobile leads drop by 59% after adding AMP, so they disabled accelerated pages”.

This is actually outdated advice in 2023, because AMP has essentially been overtaken by new technologies, and so many sites have completely abandoned this framework.

Google and speed: quick pointers to improve performance

As we have seen, over the years Google has devoted a lot of attention to web page speed, and there are plenty of resources online with hints and tips for improving our site’s performance.

One of the most interesting videos, although it dates back to 2019, is the special episode of Ask Google Webmasters posted on the official Google Search Central YouTube channel, in which John Mueller and Martin Splitt answer practitioners’ questions about site speed optimization and its impact on ranking, focusing in particular on four main issues:

- What is the ideal page speed for better SERP rankings?

- How important to SEO are the data and scores of tools such as Google PageSpeed?

- How should the various tools be used correctly to evaluate performance?

- What is the best metric to rely on to understand whether a page’s speed is good enough?

Let’s see their answers in detail.

Ideal page speed is not really a thing

In short, Google evaluates pages using two big categories, very good or really bad, without real levels or nuances in between. The categorization of speed (or, rather, of the users’ speed experience) is therefore fairly approximate, admits Martin Splitt, directly answering the question about the “ideal page speed for ranking” that came from a tweet.

More than on this aspect, though, the answer focuses on the tools Google uses to evaluate a site’s page speed, and it is John Mueller who explains that the data come from two sources: on one hand, “we try to calculate a theoretical speed using lab data”, while on the other we use “real on-field data, gathered from users that actually tried to use those pages”. The latter are “similar” to the data in the Chrome User Experience Report, he adds.

Basically, then, Google gathers “hypothetical and practical” data and has no specific thresholds or numeric values that SEOs could refer to: there is no such thing as an ideal page speed (meant as a minimum or maximum score to reach), because the only advice one could give is “make your websites fast for users”.

Tools to measure speed

The second question concerns the various tools available to measure site speed; specifically, the user asks: “if the mobile site’s speed is fine according to the analysis done with specific tools, how important is it for SEO to have a high score in Google PageSpeed Insights?”. This gives the Googlers a cue to discuss which tool matters most for SEO and for SEOs, because “there are lots of tools that measure lots of things”, and this can create chaos.

The main aspect to consider is that the various tools (Test My Site and GTmetrix are specifically mentioned) measure parameters in slightly different ways, which is why Mueller suggests trying every available tool, gathering their data and using it to find the site’s weak spots that are easiest to fix, the so-called “low-hanging fruit”, that could increase page speed.

Understanding speed metrics

The third question, also from Twitter, is far more technical: the user asks for clarification on how the DevTools Audits panel evaluates specific metrics such as TTI, FCI and FID. Before answering, Splitt explains what these acronyms mean:

- FID stands for First Input Delay, that is, how long the site takes to respond to the user’s first input (more precisely, the delay before the browser can start processing it);

- TTI is Time to Interactive, which measures when the user can actually interact with the page;

- FCI stands for First CPU Idle, which reports when the work of JavaScript (or of other elements that keep the CPU busy) is over.
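In the browser, FID is reported by the Event Timing API (a `PerformanceObserver` observing `first-input` entries) as the gap between the moment of the first interaction and the moment its event handlers can start running. The Python sketch below reproduces that calculation on simulated events; the `InputEvent` type is hypothetical, and excluding scroll and wheel events reflects the fact that FID ignores continuous interactions such as scrolling.

```python
from dataclasses import dataclass
from typing import Optional

# Continuous interactions that FID does not count (assumed event names).
IGNORED = {"scroll", "wheel"}


@dataclass
class InputEvent:
    name: str                # e.g. "click", "keydown"
    start_time: float        # ms: when the user interacted
    processing_start: float  # ms: when the browser began running the handlers


def first_input_delay(events: list[InputEvent]) -> Optional[float]:
    """Delay of the earliest discrete input, or None if the user never interacted."""
    discrete = [e for e in events if e.name not in IGNORED]
    if not discrete:
        return None
    first = min(discrete, key=lambda e: e.start_time)
    return first.processing_start - first.start_time


events = [
    InputEvent("scroll", 800.0, 800.0),
    InputEvent("click", 1200.0, 1290.0),  # main thread busy: handler waits 90 ms
    InputEvent("keydown", 1500.0, 1505.0),
]
print(first_input_delay(events))  # 90.0
```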

The Webmaster Trends Analyst then clarifies some aspects of reading metric data: measurements are not perfect (the ranges in the example shown are quite wide), and we should not fixate on the numbers alone, but rather understand which aspects are most problematic and work to correct them. Still using the example, the difference between 0.8 milliseconds and 20 milliseconds is negligible and both values are still good, whereas keeping the CPU busy for a whole minute is an entirely different matter.

Metrics and speed, the best to evaluate SEO performances

The fourth question also focuses on metrics, specifically on the ones to consider in order to improve the site’s SEO performance; Splitt first defines two more parameters:

- FCP, First Contentful Paint, that is, the time the site needs to show the user its first visual content;

- FMP, First Meaningful Paint, that is, the time it needs to load its first useful content.

He then offers his view.

Basically, there is no absolute best metric, because the evaluation depends on the nature of the site:

- If people mainly read the content on your site and don’t interact much, you need to keep an eye on FCP or FMP rather than FID or TTI;

- If the site is an “interactive web application” where you want people to jump straight into the content and “do something”, you will probably need to study and optimize FID and TTI above everything else.

Splitt also gives practical examples: according to him, it makes no sense for an interaction-oriented site (a messaging app) to load images and message history extra quickly if users cannot reply fast enough because it takes up to “20 seconds before being able to view the text box and actually begin to write”. Similarly, is it really key for a blog to immediately show the contact form at the end of its posts?

The pivotal point, then, is not to look only at the scores, which oversimplify things by trying to estimate what a user might perceive when accessing a random page of our site. “Absolute” scores are an approximate assessment (a ballpark, says Splitt), to be used only to get a rough idea of the site’s performance and, above all, to identify the critical aspects to work on in light of the site’s purpose and the experience we want to provide.

All in all, Google cannot assign a single score or value to a site’s speed, and that is why there is no such thing as an ideal page speed to reach, John Mueller and Martin Splitt tell us, especially since speed includes so many factors that cannot be narrowed down to a number.

False myths about speed: not everything we know is true

Martin Splitt himself later devoted an episode of his SEO Mythbusting series on YouTube to page speed and its impact on SEO, hosting for the occasion Eric Enge, one of the authors of the massive volume The Art of SEO as well as general manager of Perficient Digital, to discuss which aspects are still the most challenging for webmasters and site owners.

In the long chat, Splitt and Enge first discuss the importance of page speed in today’s Web, then define what counts as a “good” page speed and describe some of the interventions and techniques that can improve it.

But first, in keeping with the title of the show, Enge explains what in his view is the most common misconception on this topic, namely that page speed is a fundamental ranking factor: it can certainly have an impact on metrics and user engagement, says the expert, but improving speed alone is not how “you will climb up three positions in the SERP”.

How to consider page speed

Page speed is a broad and deep topic, not least because many people do not fully understand it and make mistakes. A useful approach is to keep in mind the goal you are trying to achieve with your work, namely to “build a good site for your users”, as Martin Splitt says.

The Googler describes the importance of speed with some real-life situations: “It’s painful when you’re on the subway, in the car or somewhere in the countryside and your smartphone does not have a good signal, but you need to look something up on the fly and it takes a long time to load and read the content. For some sites, the same thing can happen even in the city with perfect signal, with extreme frustration”.

Creating frustration for users is never good, Splitt sums up, and Google as a search engine does not want “frustrated users when they see contents”, so it made sense “to think that fast websites are a bit more useful for users than very slow sites”.

Page speed and ranking for Google

The explanation convinces Eric Enge, who adds that he imagined that “Google probably somehow uses page speed as a ranking factor, but it can’t be a stronger signal than content”. Splitt confirms, saying that if a site “is the fastest ever, but has poor and low quality contents, it does not offer help to users” and Google obviously takes these aspects into account.

Speed is important and should also be taken into account in terms of ranking, and in this regard Enge cites some “chilling” statistics: something like 53 percent of sessions are abandoned if the page takes more than 3 seconds to load and (perhaps a somewhat dated figure, as the GM of Perficient Digital admits) the average page takes 15.3 seconds to load.

A complex element to assess

One of the problematic aspects of page speed is that it depends on many elements: “Sometimes they are slow servers, other times the servers respond very quickly but there is a lot of Javascript to process first, which is a wasteful resource because it must first be fully downloaded, analyzed and then executed,” recalls Splitt.

And there are other aspects to consider: “Imagine having a paid connection – for example, if you are flying or something like that – and then paying for example 10 euros for 20 megabytes of navigation: think about how much data you are extracting in those 15 seconds of loading!”.

Enge adds that the average page size is much larger than what Google recommends, which is about 500 KB or less: in every market sector it exceeds one megabyte, often by a wide margin. The Googler notes that “less is better”, but the Web ecosystem does not always follow this principle, and this has prompted Google to include page speed among the ranking factors, even if the maxim “content is king” still holds.

How to optimize the site’s page speed

But what interventions can be applied to a site to improve page speed? According to Eric Enge, even those who have not fully understood what this parameter means (or who, as he said before, consider it a crucial, primary value) know that there are some aspects to focus on.

For instance, “I think almost everyone recognizes that images are a potential problem, so they use responsive images instead of waiting for the browser to resize them”, or they limit image size so it does not weigh down loading, along with other image optimizations.

And this is the first level of page speed optimization.

But there are other, more difficult or technical things that are not yet on the SEO priority list, such as lazy loading images (which ensures that below-the-fold resources do not load until the user reaches that part of the page), or awareness of how important a good hosting service and a correctly configured CDN are.

The difficulties in understanding the tools

Another problem reported by Enge, another “false myth”, concerns the use of tools to evaluate page speed and the understanding of the interventions they recommend to reduce load time. The SEO expert gives an example to clarify this concept: “I run Google Lighthouse and a report indicates that a particular intervention could reduce the loading time by six seconds; I implement it, but there is no immediate improvement in speed”.

The point is that these elements are often connected to each other, so working on just one aspect does not lead to the hoped-for results: in general, almost always, we need a combination of interventions and changes to really improve page speed, not a single miracle fix.

Martin Splitt agrees on this point and admits that Lighthouse is a complicated tool, often affected by an underlying misunderstanding: it uses “lab data” and therefore does not show “what users see”. In fact, “you are literally testing the speed of your pages from your machine, your browser, your Internet connection, and not necessarily what is the user experience when they are from their smartphone with a spotty connection”.

Lighthouse offers predictions about what can be improved, but that does not mean that a single fix is enough to achieve the result.

Too much attention to scores

Another underlying problem, the Googler adds, is that people pay too much attention to scores as such, and this leads to another unfounded myth: “Google uses the Lighthouse score for ranking”, which is not true and is not how things work.

“They rely too much on those scores,” confirms Enge, according to whom this trust can be misleading, because it leads people to think that a good score means the site is in good shape, when in fact there are still problems.

Poor consideration of differences between devices

A further critical point is the understanding of how the features of a device can influence the speed of page loading: it is a trap to think that a site is fast just because it loads quickly on your high-end smartphone.

Sites are accessed from many types of devices, which load pages at different speeds. With Google Analytics, Splitt points out, we can monitor which devices access our site and work accordingly, perhaps even buying the most frequently used device to better understand how users really experience the site.

In this regard, Enge cites his recent study of the CNN.com website, where pages load in about 3 seconds on the fastest phones, while for users with lower-quality smartphones loading takes up to 15 seconds.

This confirms the differences, sometimes huge, that exist in this area and the need to do an optimal job for all users, using the various tools Google provides and, above all, real-world data from real user metrics. Splitt cites in particular PageSpeed Insights (which lets you test the site from different locations and network connections, for a better understanding of the loading experience) and the Chrome User Experience Report, which, he says, are not that well known or widely used.

AMP is not a ranking factor

Speaking of false myths related to page speed, the conversation almost inevitably turns to AMP pages, which have often been described as a potential ranking factor despite Google’s denials: Splitt reiterates that the algorithm does not use the presence of AMP as a ranking signal, and focuses more generally on the importance of speed for rankings.

AMP is a “fantastic toolkit” that helps a site serve fast pages to users, but it is the speed of the page that matters for users and Google, because it affects conversions, for instance.

And speed improves by configuring the CDN well, making sure caching is done properly, optimizing the website architecture and making progressive web apps fast by default (convenient because they preload content into the phone’s cache and can also be used with poor or no network coverage, as Enge recalls), among other things: AMP can help and simplify some processes, but it does not count in itself.

What speed means for ranking on Google

Before concluding the episode, Splitt and Enge dwell once more on the meaning of “speed as a ranking factor”, and the Googler, while still emphasizing the priority given to content, explains that “If there are two results that are basically good compared to the content, then the ranking will probably reward the one that is faster, which then will have a better position”.

It is important to understand that Google does not rate speed “based on scores, Lighthouse or something like that: more than anything it is as if we grouped the pages by categories – these are programmatically slow, these are OK, these are fast – as you can also see in the Speed report in Google Search Console”. So the people who work on the site have to “figure out if they have really slow pages and how to make them faster, and improve those that are in the middle group, but it doesn’t matter if you have a score of 90 or 95, because that’s not what really makes the difference,” concludes Splitt.