3 ways to hide a site from Google but not from users

Our articles usually focus on methods and advice for maximizing the visibility of a site's pages on Google, which is the basic goal of SEO. Sometimes, however, circumstances point in the opposite direction and make it necessary to remove a web page from Google, or at least hide it from search results. Google itself explains that there are three methods for doing this, to be chosen according to our needs and the situation we are in.

When to hide content from Search

But what circumstances can push us to make this move? They range from routine housekeeping (for example, an e-commerce page for a product that is no longer available, content with inaccurate information that is not worth rewriting, or pages carrying spam or potentially offensive messages) to more serious cases that also raise legal issues.

This is the case when we receive requests to remove certain resources from people who feel harmed by that content, for example because it was copied or stolen or, worse, because it reports misleading and legally actionable information. Such requests need to be answered promptly.

As we know, there are specific procedures and techniques for removing content from Google, such as the Removals tool in Search Console, which however only hides the page for about six months or clears the cached copy. A more drastic intervention is to physically remove the offending pages and return a 404 or 410 status code, but in other situations this is not the right approach.
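To make the status-code option concrete, here is a minimal sketch using only Python's standard library. The list of removed paths, the handler name and the `serve` helper are hypothetical, chosen for illustration; a real site would normally configure this in its web server or CMS.

```python
from http import HTTPStatus
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical paths that have been permanently removed from the site.
REMOVED_PATHS = {"/products/discontinued-widget", "/blog/outdated-post"}


def status_for(path: str) -> HTTPStatus:
    """Return 410 Gone for removed pages and 200 OK for everything else."""
    # Ignore the query string and any trailing slash before comparing.
    clean = path.split("?", 1)[0].rstrip("/") or "/"
    return HTTPStatus.GONE if clean in REMOVED_PATHS else HTTPStatus.OK


class RemovedPageHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        status = status_for(self.path)
        body = b"Gone" if status is HTTPStatus.GONE else b"OK"
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Start the demo server; call manually, it blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), RemovedPageHandler).serve_forever()
```

The handler answers users and crawlers identically, so a removed page is gone for everyone rather than hidden only from Google.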

How to hide a site from Google but leave it open to users

The topic is at the center of an episode of #AskGooglebot on YouTube starring John Mueller, who answers a question from a user who wants to know whether it is possible to hide a website from Search while still allowing users to reach it through a permalink.

The short answer is that we can effectively prevent the content published on the site from being indexed in Search (and Google considers this a legitimate practice) using one of three systems: a password to limit access, a crawl block or an indexing block.

In all cases, blocking content from Googlebot is not contrary to Google's webmaster guidelines, provided that the same block applies to users at the same time. In other words, if the site is password protected when Googlebot crawls it, it must also be password protected for users; otherwise it must use explicit directives to prevent Googlebot from crawling or indexing the site. If instead the site presents Googlebot with content different from what it serves to users, we risk falling into what is called "cloaking", a black hat tactic that violates Google's guidelines.

The 3 correct ways to hide content from search engines

According to Google, the best approach to keeping a site private is to block access to its content and pages with a password: in this way, neither search engines nor casual web users will be able to view its contents, which makes it a safe and effective method.

Setting a password is common practice for websites under development: the site is published online to share work in progress with clients and receive their feedback, while the access restriction prevents Google from crawling and indexing pages that are not yet ready.

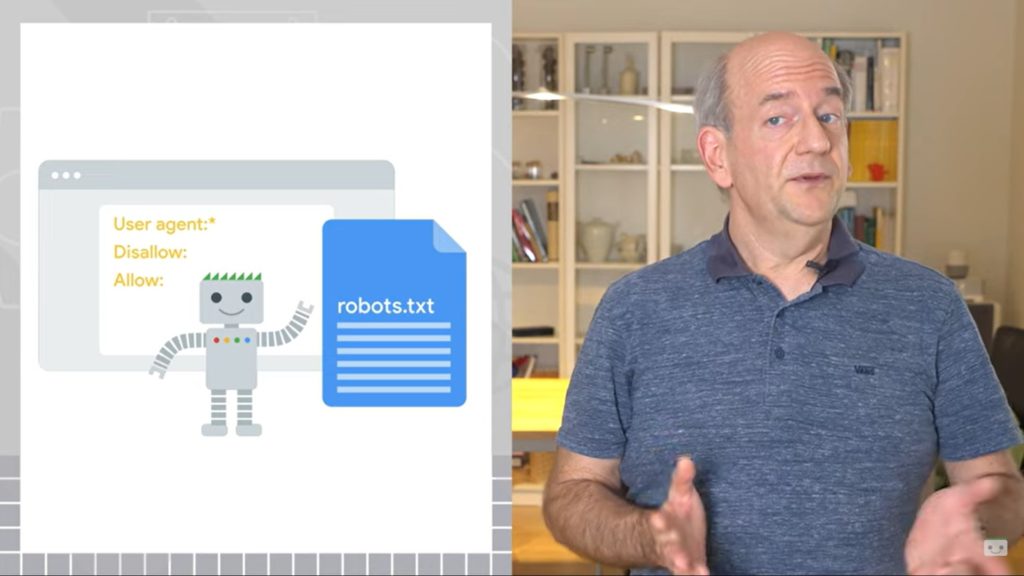
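As an illustration of the password approach, the sketch below checks an HTTP Basic `Authorization` header in Python. The credentials and function names are hypothetical, and in practice this protection is usually switched on in the web server or hosting panel rather than written by hand.

```python
import base64
import hmac
from typing import Optional

# Hypothetical staging credentials; load real ones from configuration.
USERNAME = "client"
PASSWORD = "preview-pass"


def is_authorized(authorization: Optional[str]) -> bool:
    """Validate an HTTP Basic Authorization header value."""
    if not authorization or not authorization.startswith("Basic "):
        return False
    try:
        decoded = base64.b64decode(authorization[6:], validate=True).decode("utf-8")
    except ValueError:  # covers malformed base64 and invalid UTF-8
        return False
    user, sep, password = decoded.partition(":")
    if not sep:
        return False
    # Constant-time comparisons avoid leaking information through timing.
    user_ok = hmac.compare_digest(user.encode(), USERNAME.encode())
    pass_ok = hmac.compare_digest(password.encode(), PASSWORD.encode())
    return user_ok and pass_ok


def response_status(authorization: Optional[str]) -> int:
    """Same rule for every client, Googlebot included: 200 or 401."""
    return 200 if is_authorized(authorization) else 401
```

Because the check never looks at the user agent, crawlers and visitors without the password both receive a 401, which is what keeps this method clear of cloaking.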

The second method is more technical, because it consists of preventing Googlebot from accessing and crawling the site's pages through specific rules in the robots.txt file: in this way, users can reach the site with a direct link, but the pages and their content will not be fetched by "well-behaved" search engines. However, this is not the best option, says Mueller, because search engines could still index the website's address without accessing the content, especially if these URLs are linked from other open pages. It is a rare possibility, but one to take into account.

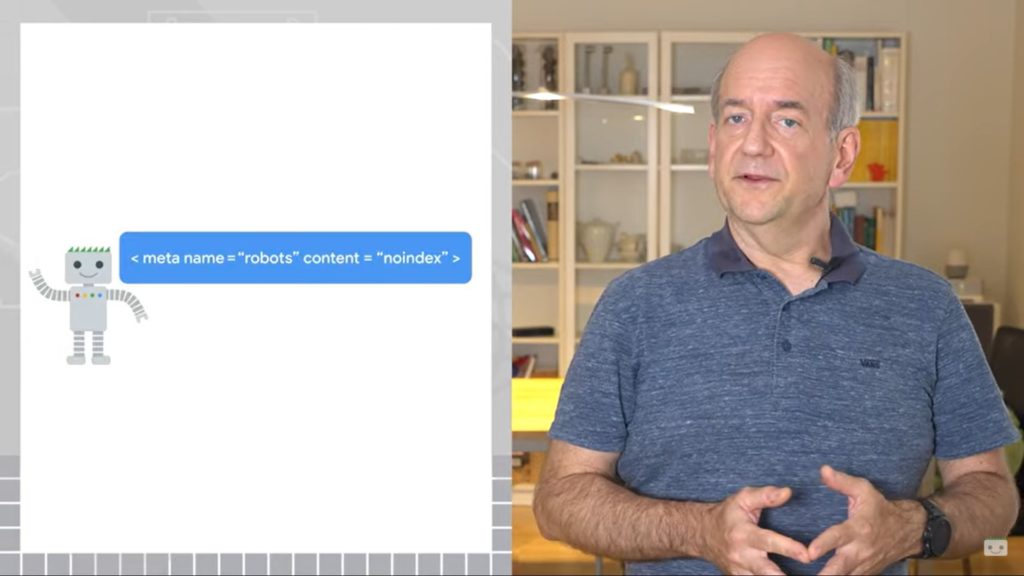
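A quick way to check what a crawl block does is to parse the rules with Python's standard `urllib.robotparser`. The rules and the `example.com` URLs below are assumptions made for illustration: the sketch blocks only a `/private/` section for every crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep all crawlers out of /private/ only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A compliant crawler must skip the blocked section...
blocked = parser.can_fetch("Googlebot", "https://example.com/private/report.html")
# ...but may still crawl the rest of the site.
allowed = parser.can_fetch("Googlebot", "https://example.com/index.html")

print(blocked, allowed)
```

Using `Disallow: /` instead would block the whole site. Either way the rule only asks crawlers not to fetch the pages; it does not stop users, or crawlers that ignore robots.txt, from opening them.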

The last option is to block indexing by adding a noindex robots meta tag to the pages, which tells search engines not to index a page after crawling it. Ordinary users do not see the meta tag and can therefore access the page as usual.
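The tag itself is a single line in the page's `<head>`. The sketch below embeds a sample page and uses Python's standard `html.parser` to verify that the directive is present; the page content and the helper names are hypothetical.

```python
from html.parser import HTMLParser

# Hypothetical page carrying the noindex directive in its <head>.
PAGE = """<!DOCTYPE html>
<html>
  <head>
    <meta name="robots" content="noindex">
    <title>Internal price list</title>
  </head>
  <body><p>Visible to anyone with the link.</p></body>
</html>"""


class RobotsMetaParser(HTMLParser):
    """Collect directives from robots/googlebot meta tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in ("robots", "googlebot"):
            content = attr.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def has_noindex(html: str) -> bool:
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives
```

Note that a crawler has to be able to fetch the page in order to read this tag, so it should not be combined with a robots.txt block on the same URL.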

The best solution to hide content according to Google

In his conclusions, Mueller sets out Google’s general recommendations, and in particular stresses that the suggestion “for private content is to use password protection” because “it is easy to verify that it works and prevents anyone from accessing the content”.

The other two methods, blocking crawling or blocking indexing, "are good options when the content is not private or if there are only parts of a website whose display you wish to prevent in search results," explains the Googler.